Cross-Modal Integration

1. Multiple Sensory Representations In The Brain

Large brains are generally found in large animals. This is primarily because the body evolves in concert with the neural architecture needed to handle the information it provides and to control its movement. Although there are certainly exceptions to the rule, and the human brain is disproportionately large, the acumen of the host is less relevant to the overall evolution of brain size than one might think: even big, dim-witted animals can have big brains.

Because animals have multiple senses, and each requires a good deal of neural circuitry to make it work, much of even the largest brain ends up being devoted to representing the sensory complex. The cerebral cortex, for example, is generally considered the highest stage of information processing and the seat of perception. It appears to have a large portion of its area organized into a succession of higher-order sensory (and motor) processing stations. Thus far, at least 32 visual, 15 auditory, and 8 somatosensory areas have been identified in the primate cortex. Somehow, though no one yet knows how, the coordinated operation of these many cortical areas produces a unified perceptual experience, even though each area handles only a limited set of stimulus qualities.

So much of the brain is devoted to sensory processing and its associated motor commands because survival depends on how quickly and accurately an organism can evaluate external events and react to them appropriately. Consequently, having many senses is generally better than having few. Despite the increased metabolic cost of building and maintaining the necessary brain circuits, there are significant overall gains. For one thing, sensory modalities can substitute for one another in different circumstances (e.g., hearing and touch substitute for vision in the dark). For another, multiple senses allow the organism to sample very different forms of environmental energy simultaneously, and a change in any one of them may provide important information about an event. Multiple inputs from different senses also increase the likelihood of correctly identifying a given event, which may be difficult to distinguish on the basis of a single sensory dimension (two events may sound alike but look different).

2. Multi-Sensory Integration And Perception

Yet the brain makes more of its multiple senses than might at first be apparent. By synthesizing information from two or more modality-specific inputs, it creates something entirely new. At the level of sensation and perception, the product of this 'multi-sensory integration' is a unique experience. For example, what we think of as 'taste' is not due solely to the signals the brain receives about the food-induced chemical changes on the tongue. Rather, taste represents a synthesis of signals derived from these chemical changes (gustatory) and from the aroma (olfactory) and texture (somatosensory) of the food. Thus, multi-sensory integration provides a mechanism for producing much richer and more diverse sensory experiences than would otherwise be possible.

At the neural level, multi-sensory integration appears to create a new, amplified signal that is greater than the signal produced by either of its constituent modality-specific cues. In fact, this signal is often greater than the sum of the constituent responses, an excellent biological example of the whole being greater than the sum of its parts. Such an enhanced sensory signal is strongly correlated with an increased probability of a behavioral response.

Furthermore, as shown below, the multi-sensory amplification process is selective. It increases the brain's ability to detect and identify events by amplifying only those cross-modal signals whose spatial and temporal properties indicate that they are likely to have a common origin. Indeed, cross-modal signals that appear to derive from different events can be minimized by these same synthetic mechanisms. When stimulus conditions do favor an enhanced multi-sensory signal, event detection and the probability of an appropriate response both increase, and the response is also faster. These multi-sensory consequences have been demonstrated in both animals (Frens et al. 1998, Stein et al. 1989) and humans (Frens et al. 1995, Goldring et al. 1996, Hughes et al. 1994). Multi-sensory integration has also been associated with enhanced object recognition (Giard and Peronnet 1999, O'Hare 1991) and an increase in the apparent intensity of a signal (Stein et al. 1996). It has likewise been linked to improved speech perception (see Massaro and Stork 1998).

It has long been known that multiple sensory inputs must be bound together to produce what we experience as a 'unitary' perceptual experience, and that this 'binding' process can have potent perceptual consequences. Yet we are largely unaware of its operation, perhaps because it is the normal mode of brain function. For example, seeing a speaker's face enhances our ability to perceive what is being said (see Sumby and Pollack 1954). We are rarely conscious of this benefit except, perhaps, in a noisy room. There, combined visual-auditory input is particularly important, and we try very hard to focus on the speaker's face. The congruent visual (lip movement) and auditory (spoken words) stimuli we receive and integrate enhance the brain activity that underlies speech perception (e.g., see Calvert et al. 1999, Sams et al. 1991). Indeed, visual-auditory integration in speech perception is so seamless that we are quite surprised when we experience the so-called 'McGurk effect.' Here, the coupling of the sound of one word with the lip movements of another produces a cross-modal percept that differs from either. For example, hearing the word 'bows' while seeing lip movements that form the word 'goes' can lead to perceiving 'doze' or 'those.' This cross-modal illusion underscores the potent multi-sensory interactions that normally occur between vision and audition.

The McGurk effect alters the perceived meaning of a signal. Another compelling visual-auditory illusion, the 'ventriloquism effect' (Howard and Templeton 1966), alters its perceived location instead. In this illusion a visual cue (e.g., movement of the lips) shifts the apparent location of the source of a sound. We commonly experience this illusion when watching a movie. Voices always seem to come from the appropriate characters, regardless of their location or movement on the screen. Yet all the sounds actually originate from the same place: the loudspeakers at the sides of the screen.

There are many other compelling illusions involving different senses. In the 'parchment-skin' illusion, the apparent texture of the skin changes when the sensation of rubbing the fingers is coupled with a grating sound played through earphones the subject is wearing (Jousmaki and Hari 1998). In the oculogravic illusion (Graybiel 1952), vestibular cues alter visual perception. The neural basis of these individual illusions is poorly understood at present. We have, however, begun to understand some of the general principles by which individual neurons combine simple stimuli from different senses to produce a multi-sensory signal. Understanding these principles is expected to help clarify the behavioral and perceptual consequences of multi-sensory integration.

3. Multi-Sensory Neurons And The Superior Colliculus (SC)

To effect multi-sensory integration at the level of the single neuron, there must be sites in the brain where neurons receive converging inputs from different senses. Such regions have been found at nearly every level of the central nervous system (see Stein and Meredith 1993), alongside the areas devoted to processing information for each individual sensory modality. As a consequence of these seemingly parallel systems, higher animals have developed a curious duality. Some areas of the brain are specialized for processing information on a sense-by-sense basis. These areas probably give rise to the peculiar subjective impressions that are so closely bound to one modality or another (e.g., the perceptions of color, pitch, tickle, or itch). Other areas, by contrast, are specialized for processing information regardless of the sense or senses from which it is derived. These latter areas depend on a convergence of inputs from different senses onto a common set of (multi-sensory) neurons.

One of the best-known structures in which multi-sensory convergence occurs is the superior colliculus (SC) in the midbrain. Researchers have relied heavily on the responses of multi-sensory SC neurons to develop a model of the general neural properties of multi-sensory integration. This has proved to be an excellent strategy for two main reasons. First, the SC is richly endowed with converging visual, auditory, and somatosensory inputs. It therefore contains many multi-sensory neurons that can be studied electrophysiologically. Second, the SC plays an important role in producing motor actions guided by sensory stimuli. Specifically, it is involved in generating orienting behaviors to stimuli in contralateral space. It thus mediates, for example, rapid shifts of gaze (i.e., eye and head movements) to objects of interest. Together, these factors make it possible to use the same structure to explore the principles governing multi-sensory integration at both the neuronal and the behavioral levels.

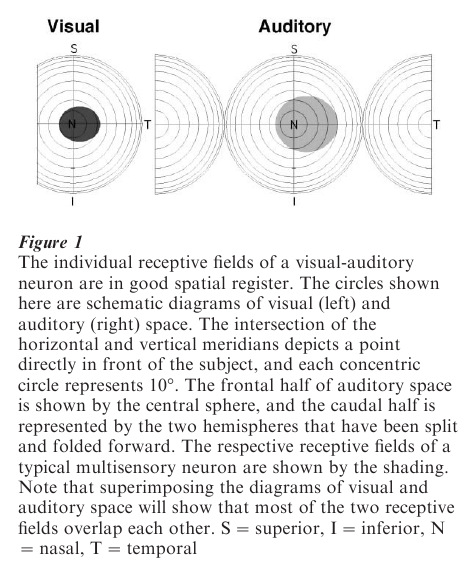

A multi-sensory SC neuron has multiple receptive fields, one for each sensory modality to which it responds. A receptive field defines the region of sensory space (e.g., visual, auditory, or somatosensory space) in which a given stimulus will activate the neuron. In multi-sensory neurons, the different receptive fields are in spatial register with one another. For example, a visual-auditory neuron with a visual receptive field in central space will respond to auditory cues in an overlapping region of auditory space, as shown in Fig. 1. This spatial register among the modality-specific receptive fields plays a critical role in the neuron's synthesis of cross-modal cues. Such register is characteristic of multi-sensory neurons wherever they are found in the nervous system. It is maintained even when one set of peripheral sense organs moves. Any eye movement, for example, shifts visual space relative to auditory (head-centered) and somatosensory (body-centered) space, because the retina and its receptor cells have moved. Such movement-induced misalignment of sensory reference frames does not permanently disrupt receptive-field alignment. Instead, it engages compensatory neural mechanisms that tend to restore receptive-field register. Thus, a shift in eye position to the right produces a rightward shift in the auditory receptive field of an SC neuron (Harrington and Peck 1998, Hartline et al. 1995, Jay and Sparks 1984). There is even evidence that the somatosensory receptive fields of visual-somatosensory neurons show compensatory changes as a consequence of eye movements (Groh and Sparks 1996). The mechanisms underlying these dynamic receptive-field shifts are not well understood. Still, the SC's use of the retina as the reference for shifting its nonvisual receptive fields reflects the structure's principal role: generating gaze shifts to targets of any modality, including multi-sensory ones.
The retina does not serve as the reference everywhere multi-sensory neurons are found. Some regions of premotor cortex contain visual-somatosensory neurons whose activity generates limb rather than eye movements. There, movements of the limb produce compensatory changes in the location of the visual receptive fields (Gentilucci et al. 1983, Graziano et al. 1994). Once again, cross-modal receptive-field register is a key element in maintaining a modality-independent coordinate frame for properly coding movement.
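The eye-position compensation described for SC neurons can be illustrated with a minimal sketch. It assumes purely horizontal eye rotations and a simple subtractive relation between head-centered and eye-centered azimuth. The function names and the 10° and 20° values are hypothetical. The sketch shows the geometry only and does not model how SC neurons actually compute the shift.

```python
def head_to_eye_centered(azimuth_head_deg: float, eye_position_deg: float) -> float:
    """Convert a head-centered azimuth to an eye-centered (retinal) azimuth.

    Rightward is positive. Assumes purely horizontal rotation and a simple
    subtractive relation between the two reference frames.
    """
    return azimuth_head_deg - eye_position_deg


def auditory_rf_center_head_deg(rf_center_eye_deg: float, eye_position_deg: float) -> float:
    """Head-centered center of an auditory receptive field kept in register
    with a visual receptive field that is fixed on the retina.

    If the receptive field stays at a fixed eye-centered location, its
    head-centered location must move with the eyes.
    """
    return rf_center_eye_deg + eye_position_deg


if __name__ == "__main__":
    # Hypothetical neuron whose visual receptive field is centered 10 deg right of fixation.
    rf_eye = 10.0
    for eye in (0.0, 20.0):
        rf_head = auditory_rf_center_head_deg(rf_eye, eye)
        print(f"eyes at {eye:+.0f} deg -> auditory RF centered at {rf_head:+.0f} deg (head-centered)")
        # A sound at the RF center lands on the same retinal location either way.
        assert head_to_eye_centered(rf_head, eye) == rf_eye
```

Under these assumptions, moving the eyes 20° to the right moves the auditory receptive field 20° to the right in head-centered space. That is the direction of shift described in the text.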

4. Principles Of Multi-Sensory Integration In Single SC Neurons

4.1 Spatial

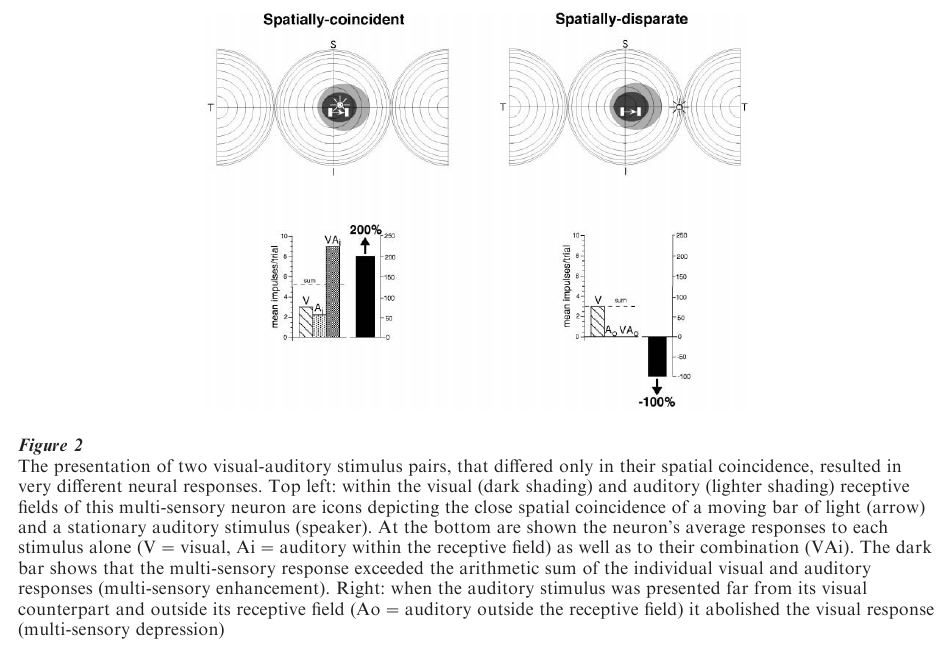

The enhanced multi-sensory response of a neuron depends on stimuli having access to its two (or more) receptive fields simultaneously or within a short window of time. Given the spatially overlapping nature of receptive fields in multi-sensory neurons, the activating stimuli should derive from the same location in space, such as when they originate from the same event. The consequent multi-sensory response typically is a train of discharges that contains more impulses than one evoked by either of the modality-specific stimuli. Indeed, if the modality-specific responses are themselves weak, the response to the combined stimulus often exceeds the arithmetic sum of the modality-specific responses (Fig. 2).

Suppose, however, that the stimuli come from different locations (e.g., from different events). Then one stimulus falls outside the neuron's receptive field for that modality and is spatially discordant with the other, and no response enhancement is produced. In fact, under such circumstances the opposite effect, response depression, is quite likely to occur, and even vigorous visual responses can be abolished (Fig. 2). These changes at the single-neuron level are mirrored at the behavioral level. Attentive and/or orientation responses are enhanced to spatially coincident cross-modal stimuli and depressed to spatially disparate ones (Stein et al. 1989).
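The comparisons illustrated in Fig. 2 can be quantified in two ways. A percentage index of the kind often used in this literature expresses the combined response relative to the best modality-specific response. The combined response can also be checked against the arithmetic sum of the modality-specific responses. The sketch below assumes mean impulse counts per trial as input. The function names and numbers are hypothetical, and a real analysis would also test whether the differences are statistically reliable.

```python
def enhancement_index(combined: float, best_unisensory: float) -> float:
    """Percent change of the multi-sensory response relative to the best
    modality-specific response. Positive = enhancement, negative = depression."""
    if best_unisensory <= 0:
        raise ValueError("best modality-specific response must be positive")
    return 100.0 * (combined - best_unisensory) / best_unisensory


def classify_interaction(combined: float, visual: float, auditory: float) -> tuple[float, str]:
    """Return the enhancement index and a coarse label for one stimulus condition."""
    best = max(visual, auditory)
    index = enhancement_index(combined, best)
    if combined > visual + auditory:
        label = "superadditive enhancement"   # exceeds the arithmetic sum
    elif combined > best:
        label = "enhancement"
    elif combined < best:
        label = "depression"
    else:
        label = "no interaction"
    return index, label


# Hypothetical mean impulses per trial.
print(classify_interaction(combined=8.0, visual=2.0, auditory=1.5))   # (300.0, 'superadditive enhancement')
print(classify_interaction(combined=1.0, visual=10.0, auditory=0.5))  # (-90.0, 'depression')
```

Basing the index on the best single response, rather than the sum, keeps enhancement and depression on one signed scale. The separate sum test flags superadditivity.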

These opposing effects of the same stimuli are thought to arise as follows. When spatially aligned stimuli activate both input channels, both stimuli fall within the neuron's excitatory receptive fields, and the neuron receives synergistic excitatory inputs. Some receptive fields, however, have inhibitory regions at their borders. A stimulus falling in such a region provides inhibitory input that antagonizes the excitatory input from the stimulus within the receptive field, producing response depression (Kadunce et al. 1997).
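The explanation above can be caricatured with a deliberately simplified one-dimensional model. It assumes an excitatory receptive field flanked by an inhibitory border, linear summation of the two inputs, and a hypothetical extra gain when both inputs are excitatory. All names, radii, and the gain value are illustrative assumptions, not parameters from Kadunce et al. (1997).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReceptiveField:
    """Hypothetical 1-D receptive field with an inhibitory border."""
    center_deg: float     # azimuth of the receptive-field center
    excit_radius: float   # half-width of the excitatory region (deg)
    inhib_radius: float   # outer edge of the inhibitory border (deg)

    def drive(self, location_deg: float, strength: float = 1.0) -> float:
        """Signed input produced by a stimulus at location_deg."""
        distance = abs(location_deg - self.center_deg)
        if distance <= self.excit_radius:
            return strength    # inside the receptive field: excitation
        if distance <= self.inhib_radius:
            return -strength   # inhibitory border: inhibition
        return 0.0             # far outside: no effect


def response(visual_rf: ReceptiveField, auditory_rf: ReceptiveField,
             visual_loc: Optional[float], auditory_loc: Optional[float],
             gain: float = 1.5) -> float:
    """Toy firing rate; None means that modality's stimulus is absent."""
    v = visual_rf.drive(visual_loc) if visual_loc is not None else 0.0
    a = auditory_rf.drive(auditory_loc) if auditory_loc is not None else 0.0
    net = v + a
    if v > 0 and a > 0:
        net *= gain            # hypothetical synergy when both inputs excite
    return max(net, 0.0)       # firing rates cannot go below zero


# Receptive fields in spatial register (same center); the auditory one is
# assumed here to be larger.
vis_rf = ReceptiveField(center_deg=0.0, excit_radius=10.0, inhib_radius=25.0)
aud_rf = ReceptiveField(center_deg=0.0, excit_radius=15.0, inhib_radius=30.0)

print(response(vis_rf, aud_rf, 0.0, None))  # visual alone: 1.0
print(response(vis_rf, aud_rf, 0.0, 5.0))   # spatially coincident: 3.0 (enhancement)
print(response(vis_rf, aud_rf, 0.0, 20.0))  # sound in inhibitory border: 0.0 (depression)
print(response(vis_rf, aud_rf, 0.0, 40.0))  # sound far away: 1.0 (no interaction)
```

In this toy, the same visual stimulus yields enhancement, abolition, or no change depending only on where the sound falls relative to the shared receptive-field center.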

4.2 Temporal

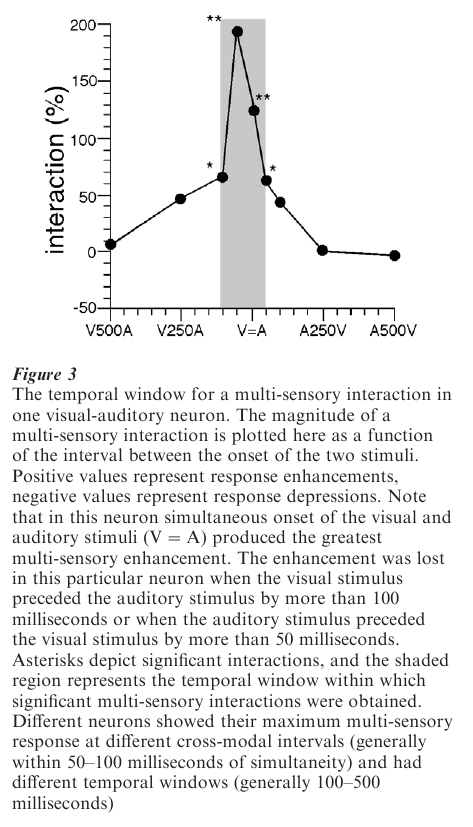

Integrated multi-sensory responses also depend on the relative timing of the stimuli. When the interval between the stimuli is too long, the neuron's response reflects them as discrete events; in other words, no integration takes place. However, for any given neuron this temporal 'window' can be several hundred milliseconds long. Its length is generally predictable from the duration of the neuron's response to whichever stimulus excites it first. For example, suppose a somatosensory stimulus elicits a response lasting 200 milliseconds. A multi-sensory interaction will then occur if the activation produced by a stimulus of a second modality begins during that period (Meredith et al. 1987, Wallace and Stein 1997).

This is important because different senses may be activated by the same event, yet activate a multi-sensory neuron at substantially different speeds. Auditory and somatosensory information reaches SC neurons in 10–25 ms, whereas visual information requires 40–120 ms. If precise coincidence were required, very few multi-sensory interactions would take place. Nevertheless, the temporal interaction window is finite, and different neurons respond best to different stimulus asynchronies. Generally speaking, the magnitude of the interaction reflects the degree to which the modality-specific activation patterns overlap in time. It diminishes rapidly as this period of overlap declines (Fig. 3). Some intervals fall between those that produce enhanced responses and those that produce two independent responses; in some cases, these transitional intervals give rise to response depression. For visual-auditory neurons, the ability to respond differentially to stimuli with different input latencies suggests a tuning to events at different distances from the animal. Because sound travels far more slowly than light, the delay between the visual and auditory components of an event grows with its distance. Whether these neurons are organized systematically in a way that helps localize stimuli in three-dimensional space remains to be determined.
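The timing rules in this section can be sketched in a few lines. The sketch assumes that each input is summarized by a stimulus time, a conduction latency, and the duration of the response it evokes on its own. The latencies (15 ms for auditory or somatosensory input, 80 ms for visual input) are hypothetical values within the ranges quoted above. The speed of sound is taken as about 343 m/s, and light travel time is ignored. The yes/no window check also ignores the graded fall-off of interaction strength with asynchrony shown in Fig. 3. All names are illustrative.

```python
from typing import NamedTuple

SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 degrees C


class Input(NamedTuple):
    """One modality-specific input to a hypothetical multi-sensory neuron."""
    stim_time_ms: float   # when the stimulus occurs
    latency_ms: float     # time for the input to reach the neuron
    duration_ms: float    # duration of the neuron's response to this input alone

    @property
    def onset_ms(self) -> float:
        return self.stim_time_ms + self.latency_ms


def will_interact(a: Input, b: Input) -> bool:
    """True if the later activation starts before the response to the earlier
    one has ended, i.e. the window is set by the earliest-excited response."""
    first, second = sorted((a, b), key=lambda x: x.onset_ms)
    return second.onset_ms <= first.onset_ms + first.duration_ms


def onset_asynchrony_ms(distance_m: float, auditory_latency_ms: float = 15.0,
                        visual_latency_ms: float = 80.0) -> float:
    """Auditory minus visual activation onset at the neuron for a single event
    at distance_m. Light travel time is treated as zero."""
    sound_travel_ms = 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S
    return sound_travel_ms + auditory_latency_ms - visual_latency_ms


if __name__ == "__main__":
    # The 200 ms somatosensory example from the text, with hypothetical latencies.
    somato = Input(stim_time_ms=0.0, latency_ms=15.0, duration_ms=200.0)
    print(will_interact(somato, Input(100.0, 80.0, 150.0)))  # visual onset at 180 ms: True
    print(will_interact(somato, Input(200.0, 80.0, 150.0)))  # visual onset at 280 ms: False
    for d in (1.0, 10.0, 22.0, 50.0):
        print(f"event at {d:4.0f} m: auditory - visual onset = {onset_asynchrony_ms(d):+6.1f} ms")
```

Sound adds about 2.9 ms of travel time per meter. With these assumed latencies, that cancels the 65 ms head start of the auditory input for an event roughly 22 m away. Nearer events reach the neuron with the auditory input leading, and farther ones with it lagging. Because real latencies vary from neuron to neuron, the distance at which the onsets align would vary too.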

4.3 Inverse Effectiveness

The degree to which the response to a multi-sensory stimulus exceeds the response to either of its unimodal components is most often inversely related to the effectiveness of the modality-specific stimuli. This makes intuitive sense if we consider the possible functional consequences of multi-sensory enhancement. By definition, very effective stimuli evoke strong neural responses and are very likely to elicit a behavioral response. Suppose the objective of multi-sensory enhancement is to increase the probability of detecting and/or responding to a stimulus. Then little would be gained by augmenting an already vigorous modality-specific response. Combining the influences of weak modality-specific stimuli, on the other hand, might yield substantial benefit. But is this really advantageous? One could argue that increasing sensitivity to relatively weak stimuli would make the system susceptible to environmental and/or neural 'noise.' One could counter, however, that spatially and temporally coincident activation is unlikely to occur by chance on independent modality-specific channels. More often than not, such coincidence would correspond to a real event in the external world. This sort of reasoning has encouraged many organizations (most prominently, industry and the military) to develop sensor-fusion devices. Such devices aid navigation or the search for objects embedded in 'noisy' environments such as a forest or the sea floor.
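Inverse effectiveness can arise from something as simple as a saturating relation between input and firing rate. The sketch below swaps in that assumption: a Naka-Rushton-type function with hypothetical parameters, which is not a mechanism proposed in the text. It shows the percentage enhancement shrinking as the modality-specific drives grow. It also writes out the chance-coincidence argument, assuming independent noise on the two channels. The 0.05 noise probabilities and all function names are hypothetical.

```python
def rate(drive: float, r_max: float = 20.0, half_drive: float = 4.0, n: float = 2.0) -> float:
    """Hypothetical saturating input-output function (Naka-Rushton form)."""
    return r_max * drive**n / (drive**n + half_drive**n)


def percent_enhancement(visual_drive: float, auditory_drive: float) -> float:
    """Enhancement of the combined response over the best single-modality
    response, assuming the two drives simply add before the nonlinearity."""
    best = max(rate(visual_drive), rate(auditory_drive))
    combined = rate(visual_drive + auditory_drive)
    return 100.0 * (combined - best) / best


def chance_coincidence(p_visual: float, p_auditory: float) -> float:
    """Probability that noise alone activates both channels within the same
    spatial and temporal window, assuming independent noise on each channel."""
    return p_visual * p_auditory


for drive in (1.0, 2.0, 4.0, 8.0):
    # Weak drives sit on the expansive part of the curve, strong ones near saturation.
    print(f"drive {drive:>3}: enhancement {percent_enhancement(drive, drive):6.1f}%")

# 0.05 x 0.05: joint false alarms are far rarer than either channel's alone.
print(f"{chance_coincidence(0.05, 0.05):.4f}")
```

With these parameters the enhancement falls from 240% for the weakest pair to under 20% for the strongest. Weak pairs are superadditive and strong pairs subadditive, qualitatively matching the inverse-effectiveness pattern described above.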

5. The Essential Circuit For Multi-Sensory Integration In SC Neurons

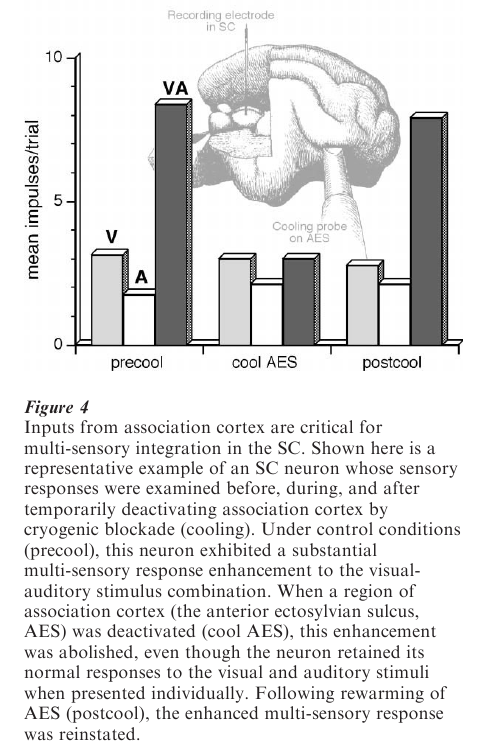

One might reasonably assume that any neuron receiving inputs from two different sensory modalities would be able to integrate them. This is not the case. Multi-sensory neurons in the SC receive converging modality-specific inputs from many sources (see Stein et al. 1993). Recent experiments in cats, however, have shown that modality-specific inputs from one region of cerebral cortex play a critical role in mediating multi-sensory integration in SC neurons (see Jiang et al. 1999, Wallace and Stein 1994). Fig. 4 shows what happens when the SC is deprived of input from this cortical region by reversible cryogenic blockade of cortical activity. SC neurons still respond to inputs from different sensory modalities, but lose their ability to integrate them and thereby enhance their responses. Instead, they respond to combinations of cross-modal stimuli in much the same way as they respond to the most effective of these modality-specific stimuli presented alone.
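The effect of cortical deactivation can be summarized in a toy function. It assumes a simple gain-on-sum rule when the cortical input is intact and a max rule when it is not. Both rules and all numbers are hypothetical simplifications of the reported observation, not a model of the underlying circuit.

```python
def combined_response(visual: float, auditory: float, cortex_active: bool,
                      gain: float = 1.5) -> float:
    """Toy response to a spatially and temporally coincident cross-modal pair.

    With the cortical input intact, the inputs are summed and amplified by a
    hypothetical gain. With it deactivated, the neuron still responds, but
    only about as strongly as to the most effective single stimulus.
    """
    if cortex_active:
        return gain * (visual + auditory)
    return max(visual, auditory)


def percent_change_vs_best(combined: float, visual: float, auditory: float) -> float:
    """Percent change relative to the best modality-specific response."""
    best = max(visual, auditory)
    return 100.0 * (combined - best) / best


v, a = 4.0, 2.0  # hypothetical mean impulses per trial
for active in (True, False):
    r = combined_response(v, a, cortex_active=active)
    print(f"cortex active={active}: response {r:.1f}, "
          f"change vs best {percent_change_vs_best(r, v, a):.0f}%")
```

The neuron keeps responding in both conditions. Only the enhancement relative to the best single stimulus disappears, which is the distinction the deactivation experiments draw.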

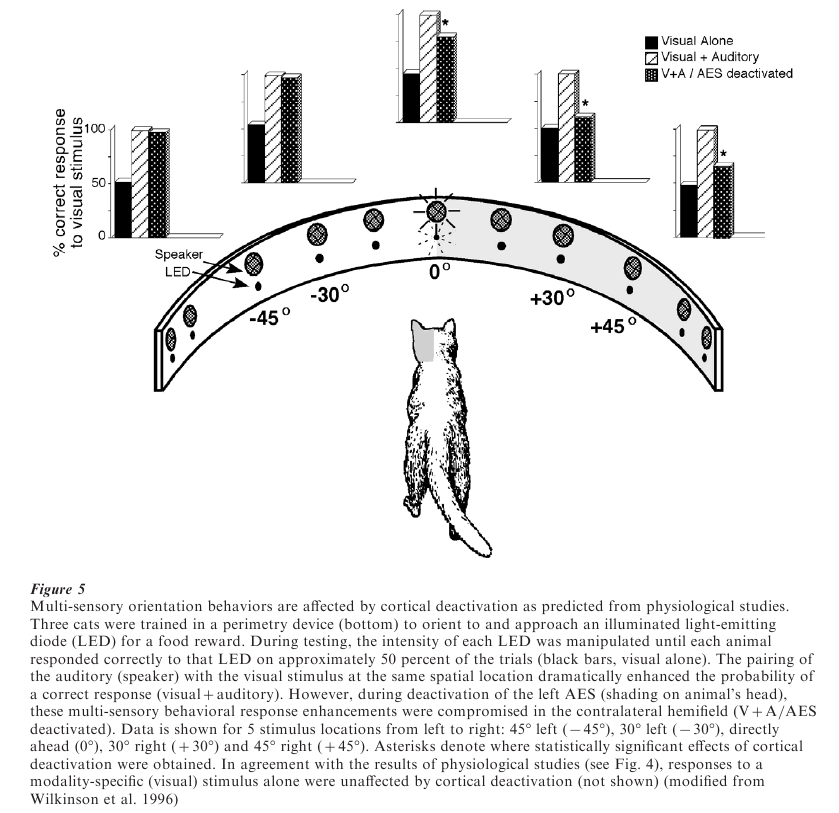

Presumably, these cortical deactivation-induced physiological changes in SC neurons would disrupt SC-mediated multi-sensory behaviors. A test of this was made by training animals to respond to visual cues in the presence or absence of auditory stimuli, and then testing them with or without this region of cortex deactivated (Fig. 5). When the cortex was temporarily deactivated (with either a local anesthetic injection or cryogenic blockade) there was no change in the animal’s responses to the visual cue, but the ability of the auditory cue to enhance (spatially coincident stimuli) or degrade (spatially disparate stimuli) responses was severely disrupted (Wilkinson et al. 1996). These behavioral observations, coupled with the electrophysiological results described above, demonstrate that the neural circuit in the SC essential for multisensory integration must include inputs from the cerebral cortex.

6. Commonalities In Multi-Sensory Integration Across Species

Although many of the specifics presented above were drawn from experiments in cats, most of the sensory features of the cat SC are also found in every other laboratory species examined thus far. Particularly important in the current context is the spatial register among the different receptive fields of multi-sensory neurons, which has been found in rodents, carnivores, and primates. This scheme for coupling inputs from different sensory modalities is widespread. It is also present in neurons of the optic tectum, the nonmammalian homologue of the SC (see Stein and Meredith 1993). Together these facts suggest that it was preserved during the transition from nonmammalian to mammalian forms and is adaptable to a wide variety of ecological circumstances. Furthermore, its utility is not restricted to neurons involved in SC-mediated behaviors. It is also characteristic of multi-sensory neurons in many neocortical areas (e.g., Bruce et al. 1981, Duhamel et al. 1991, Fogassi et al. 1996, Graziano et al. 1997, Ramachandran et al. 1993, Rizzolatti et al. 1981a, 1981b, Stein et al. 1993, Wallace et al. 1992). There, such neurons are likely to serve functional roles very different from those of the SC. The few experiments that have examined multi-sensory processes in cortical neurons have yielded similar results. Indeed, spatially disparate stimuli produce a weaker inhibitory interaction in cortex than in the SC, yet many of the same principles of multi-sensory integration appear to operate in both. This lends credence to the notion that the fundamental principles of multi-sensory integration are likely to transcend structure, function, and species.

This does not mean that species lack substantial differences in the stimuli that effectively activate multi-sensory neurons. Nor does it mean that multi-sensory neurons play the same roles in different brain regions. Nevertheless, multi-sensory neurons in different animals and/or structures have various specialized characteristics, yet they probably share a common set of principles for pooling information from their different sensory channels. Such common principles, operating at different levels of the neuraxis, would provide a comparatively simple means of coordinating the activity of the brain areas that cooperate in producing an integrated behavior. They could enhance or degrade the activity of these areas simultaneously. Whether this postulate is correct remains to be seen.

Bibliography:

- Bruce C, Desimone R, Gross C G 1981 Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. Journal of Neurophysiology 46: 369–84

- Calvert G A, Brammer M J, Bullmore E T, Campbell R, Iversen S D, David A S 1999 Response amplification in sensory-specific cortices during cross-modal binding. NeuroReport 10: 2619–23

- Duhamel J-R, Colby C L, Goldberg M E 1991 Congruent representations of visual and somatosensory space in single neurons of monkey ventral intraparietal cortex (area VIP). In: Paillard J (ed.) Brain and Space. Oxford University Press, New York

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G 1996 Coding of peripersonal space in inferior premotor cortex (area F4). Journal of Neurophysiology 76: 141–57

- Frens M A, Van Opstal A J, Van der Willigen R F 1995 Spatial and temporal factors determine auditory-visual interactions in human saccadic eye movements. Perception and Psychophysics 57: 802–16

- Gentilucci M, Scandolara C, Pigarev I N, Rizzolatti G 1983 Visual responses in the postarcuate cortex (area 6) of the monkey that are independent of eye position. Experimental Brain Research 50: 464–8

- Giard M H, Peronnet F 1999 Auditory-visual integration during multimodal object recognition in humans: A behavioral and electrophysiological study. Journal of Cognitive Neuroscience 11: 473–90

- Goldring J E, Dorris M C, Corneil B D, Ballantyne P A, Munoz D P 1996 Combined eye-head gaze shifts to visual and auditory targets in humans. Experimental Brain Research 111: 68–78

- Graybiel A 1952 Oculogravic illusion. American Medical Association Archives of Ophthalmology 48: 605–15

- Graziano M S, Hu T, Gross C G 1997 Visuospatial properties of ventral premotor cortex. Journal of Neurophysiology 77: 2268–92

- Graziano M S, Yap G S, Gross C G 1994 Coding of visual space by premotor neurons. Science 266: 1054–7

- Groh J M, Sparks D L 1996 Saccades to somatosensory targets. III. Eye-position-dependent somatosensory activity in primate superior colliculus. Journal of Neurophysiology 75: 439–53

- Harrington L K, Peck C K 1998 Spatial disparity affects visual-auditory interactions in human sensorimotor processing. Experimental Brain Research 122: 247–52

- Hartline P H, Pandey Vimal R L, King A J, Kurylo D D, Northmore D P M 1995 Effects of eye position on auditory localization and neural representation of space in superior colliculus of cats. Experimental Brain Research 104: 402–8

- Howard I P, Templeton W B 1966 Human Spatial Orientation. Wiley, London

- Hughes H C, Reuter-Lorenz P A, Nozawa G, Fendrich R 1994 Visual-auditory interactions in sensorimotor processing: saccades versus manual responses. Journal of Experimental Psychology: Human Perception and Performance 20: 131–53

- Jay M F, Sparks D L 1984 Auditory receptive fields in primate superior colliculus shift with changes in eye position. Nature 309: 345–7

- Jiang W, Wallace M T, Jiang H, Vaughan J W, Stein B E 1999 Two cortical areas contribute to the multisensory integration in superior colliculus neurons. Society for Neuroscience Abstracts 25: 1413

- Jousmaki V, Hari R 1998 Parchment-skin illusion: Sound-biased touch. Current Biology 8: R190

- Kadunce D C, Vaughan J W, Wallace M T, Benedek G, Stein B E 1997 Mechanisms of within- and cross-modality suppression in the superior colliculus. Journal of Neurophysiology 78: 2834–47

- Massaro D W, Stork D G 1998 Sensory integration and speech-reading by humans and machines. American Scientist 86: 236–44

- Meredith M A, Nemitz J W, Stein B E 1987 Determinants of multisensory integration in superior colliculus neurons: I. Temporal factors. Journal of Neuroscience 7: 3215–29

- O’Hare J J 1991 Perceptual integration. Journal of the Washington Academy of Science 81: 44–59

- Ramachandran R, Wallace M T, Stein B E 1993 Distribution and properties of multisensory neurons in rat cerebral cortex. Society for Neuroscience Abstracts 19: 1447

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M 1981a Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behavioural Brain Research 2: 125–46

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M 1981b Afferent properties of periarcuate neurons in macaque monkeys. II. Visual responses. Behavioural Brain Research 2: 147–63

- Sams M, Aulanko R, Hamalainen M, Hari R, Lounasmaa O V, Lu S-T, Simola J 1991 Seeing speech: Visual information from lip movements modifies activity in the human auditory cortex. Neuroscience Letters 127: 141–5

- Stein B E, Meredith M A 1993 The Merging of the Senses. MIT Press, Cambridge, MA

- Stein B E, London N, Wilkinson L K, Price D D 1996 Enhancement of perceived visual intensity by auditory stimuli: A psychophysical analysis. Journal of Cognitive Neuroscience 8: 497–506

- Stein B E, Meredith M A, Honeycutt W S, McDade L 1989 Behavioral indices of multisensory integration: Orientation to visual cues is affected by auditory stimuli. Journal of Cognitive Neuroscience 1: 12–24

- Stein B E, Meredith M A, Wallace M T 1993 The visually-responsive neuron and beyond: Multisensory integration in cat and monkey. Progress in Brain Research 95: 79–90

- Sumby W H, Pollack I 1954 Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America 26: 212–5

- Wallace M T, Meredith M A, Stein B E 1992 Integration of multiple sensory modalities in cat cortex. Experimental Brain Research 91: 484–8

- Wallace M T, Stein B E 1994 Cross-modal synthesis depends on input from cortex. Journal of Neurophysiology 71: 429–32

- Wallace M T, Stein B E 1997 Development of multisensory neurons and multisensory integration in cat superior colliculus. Journal of Neuroscience 17: 2429–44

- Wilkinson L K, Meredith M A, Stein B E 1996 The role of anterior ectosylvian cortex in cross-modality orientation and approach behavior. Experimental Brain Research 112: 1–10