Sample Dynamic Decision Making Research Paper.

Dynamic decision making is defined by three common characteristics: a series of actions must be taken over time to achieve some overall goal; the actions are interdependent so that later decisions depend on earlier actions; and the environment changes both spontaneously and as a consequence of earlier actions (Edwards 1962). Dynamic decision tasks differ from sequential decision tasks in that the former are primarily concerned with the control of dynamic systems over time to achieve some goal, whereas the latter are more concerned with sequential search for information to be used in decision making. (Dynamic decision tasks also need to be distinguished from dynamic decision processes. Dynamic decision models (e.g., Busemeyer and Townsend 1993) describe the moment-to-moment deliberation processes that precede each action within the sequence of actions.)

Psychological research on dynamic decision making began with Toda’s (1962) pioneering study of human performance with a game called the ‘fungus eater,’ in which human subjects controlled a robot’s search for uranium and fuel on a hypothetical planet. Subsequently, human performance has been examined across a wide variety of dynamic decision tasks including computer games designed to simulate stock purchases (Rapoport 1975), welfare management (Dorner 1980, Mackinnon and Wearing 1980), vehicle navigation (Jagacinski and Miller 1978, Anzai 1984), health management (Kleinmuntz and Thomas 1987, Kerstholt and Raaijmakers 1997), production and inventory control (Berry and Broadbent 1988, Sterman 1994), supervisory control (Kirlik et al. 1993), and fire-fighting (Brehmer 1992). Cumulative progress in this field has been summarized in a series of empirical reviews by Rapoport (1975), Funke (1991), Brehmer (1992), Sterman (1994), and Kerstholt and Raaijmakers (1997).

1. Stochastic Optimal Control Theory

To illustrate how psychologists study human performance on dynamic decision tasks, consider the following experiment by You (1989). Subjects were initially presented with a ‘cover’ story describing the task: ‘Imagine that you are being trained as a psychiatrist, and your job is to treat patients using a psychoactive drug to maintain their health at some ideal level.’ Subjects were instructed to choose the drug level for each day of a simulated patient after viewing all of a patient’s previous records (treatments and health states). Subjects were trained on 20 simulated patients, with 14 days per patient, all controlled by a computer simulation program.

There are a few general points to make about this type of task. First, laboratory tasks such as this are oversimplifications of real-life tasks, designed for experimental control and theoretical tractability. However, more complex simulations have also been studied to provide greater realism (e.g., Brehmer’s (1992) fire-fighting task). Second, the above task is an example of a discrete-time task (only the sequence of events is important), but real-time simulations have also been examined, where the timing of decisions becomes critical (e.g., fire-fighting). Third, the cover story (e.g., health management) provides important prior knowledge for solving the task, and so the findings depend on both the abstract task properties and the concrete task details (Kleiter 1975). Fourth, the stimulus events are no longer under complete control of the experimenter, but instead they are also influenced by the subject’s own behavior. Thus experimenters need to switch from a stimulus–response paradigm toward a cybernetic paradigm for designing research (Rapoport 1975, Brehmer 1992).

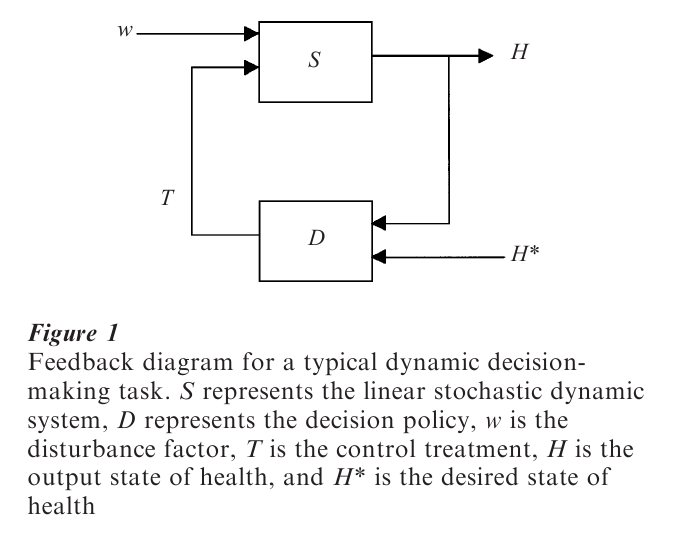

This health management task can be formalized by defining H(t) as the state of the patient’s health on day t, T(t) as the drug treatment presented on day t, and w(t) as a random shock that may disturb the patient on any given day. Figure 1 is a feedback diagram that illustrates this dynamic decision task. S represents the dynamic environmental system that takes both the disturbance, w, and the decision maker’s control action, T, as inputs, and produces the patient’s state of health, H, as output. D represents the decision maker’s policy that takes both the observed, H, and desired, H*, states of health as inputs, and produces the control action, T, as output.

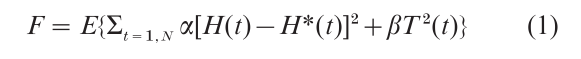

Based on these definitions, this task can be analyzed as a stochastic linear optimal control problem (Bertsekas 1976): determine treatments T(1), …, T(N), for N=14 days, that minimize the objective function

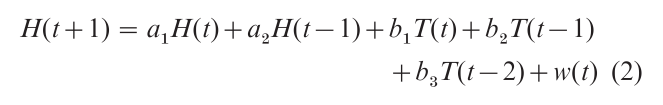

contingent upon the environmentally programmed linear stochastic dynamic system,

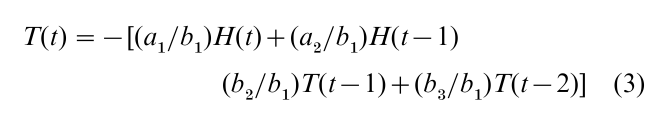
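Eqns. (1) and (2) survive here only as images. As a reading aid, one form consistent with the symbols named in the surrounding text (the treatment cost weight β, the coefficients a1, a2, b1, b2, b3, and the shock w) is sketched below. This is an assumed reconstruction, not a transcription; the exact indexing and weighting in the original equations may differ.

```latex
% Assumed form of Eqn. (1): expected squared deviation from the ideal
% health state plus a treatment cost weighted by beta.
J = E\left[\sum_{t=1}^{N} \bigl(H(t) - H^{*}\bigr)^{2}
      + \beta \sum_{t=1}^{N} T(t)^{2}\right]

% Assumed form of Eqn. (2): second-order linear dynamics with lagged
% treatment effects and an additive random shock.
H(t+1) = a_1 H(t) + a_2 H(t-1) + b_1 T(t) + b_2 T(t-1) + b_3 T(t-2) + w(t)
```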

Standard dynamic programming methods (Bertsekas 1976) may be used to find the optimal solution to this problem. For the special case where the desired state of health is neutral (H* = 0), some treatment effect takes place the very next day (b1 ≠ 0), and there is no cost associated with the treatments (i.e., β = 0), the optimal policy

is the treatment that forces the mean health state to equal the ideal (zero) on the next day. If the cost of treatment is nonzero (i.e., β>0), then the solution is a more complex linear function of the previous health states and past treatments (Bertsekas 1976, You 1989).
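The zero-cost special case can be illustrated with a short simulation. The sketch below assumes second-order linear dynamics of the form H(t+1) = a1·H(t) + a2·H(t−1) + b1·T(t) + b2·T(t−1) + b3·T(t−2) + w(t), which is inferred from the coefficients named in the text rather than copied from Eqn. (2). The function name, parameter names, and starting values are hypothetical.

```python
import random


def simulate_patient(a1, a2, b1, b2, b3, n_days=14, noise_sd=1.0, h0=0.0, seed=0):
    """Simulate one patient treated with the one-step policy for beta = 0.

    Assumes the dynamics described in the lead-in and requires b1 != 0.
    Returns the list of health states observed on each simulated day.
    """
    rng = random.Random(seed)
    health = [h0, h0]        # H(t-1), H(t): two most recent health states
    treatment = [0.0, 0.0]   # T(t-2), T(t-1): two most recent treatments
    observed = []
    for _ in range(n_days):
        # Expected next-day health if no new treatment were given today.
        drift = (a1 * health[-1] + a2 * health[-2]
                 + b2 * treatment[-1] + b3 * treatment[-2])
        # Pick the dose that cancels the drift, so the mean of H(t+1) is 0.
        dose = -drift / b1
        shock = rng.gauss(0.0, noise_sd)
        next_health = drift + b1 * dose + shock
        health.append(next_health)
        treatment.append(dose)
        observed.append(next_health)
    return observed
```

With `noise_sd=0.0` the simulated patient reaches the ideal state after a single day and stays there. With noise, the health state fluctuates around zero by exactly the size of the daily shock.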

Dynamic programming is a general-purpose method that can be used to solve for optimal solutions to many dynamic decision tasks. Although the example above employed a linear control task, dynamic programming can also be used to solve many nonlinear control problems (Bertsekas 1976). However, for highly complex tasks, dynamic programming may not be practical, and heuristic search methods such as genetic algorithms (Holland 1994) may be more useful.
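To make the backward recursion concrete, here is a minimal sketch for a deliberately simplified problem: a first-order system x(t+1) = a·x(t) + b·u(t) + w(t) with per-day cost x(t+1)² + β·u(t)². This scalar system is an assumption chosen for brevity and is not the second-order health task analyzed above. The function name `lq_gains` is hypothetical.

```python
def lq_gains(a, b, beta, horizon):
    """Backward dynamic programming for a scalar linear-quadratic problem.

    Returns feedback gains K_t such that the optimal action on day t is
    u(t) = -K_t * x(t). Additive zero-mean noise does not change the gains.
    """
    cost_to_go = 0.0  # coefficient P of x^2 in the value function after the last day
    gains = []
    for _ in range(horizon):
        q = 1.0 + cost_to_go                  # weight on next-day squared state
        gain = q * a * b / (beta + q * b * b)
        # Value function coefficient one day earlier, under the optimal gain.
        cost_to_go = beta * gain * gain + q * (a - b * gain) ** 2
        gains.append(gain)
    gains.reverse()  # gains[t] is now the gain applied on day t
    return gains
```

When `beta` is zero, every gain equals a/b, which is the policy that drives the expected next state to zero. A positive `beta` shrinks the gains, trading control accuracy against treatment cost.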

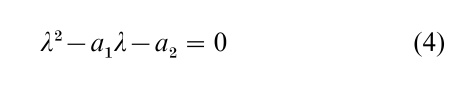

The formal task analysis presented above provides a basis for determining factors that may affect human performance on the task (Brehmer 1992). One factor is the stability of the dynamic system, which, for Eqn. (2), depends on the two coefficients, a1 and a2. In particular, this system is stable if the roots of the characteristic equation

are less than 1 in magnitude (Luenberger 1979). A second factor is the controllability of the system, which depends on the three coefficients, b1, b2, and b3 (Luenberger 1979). For example, if the treatment effect is delayed (b1=0), then the simple control policy shown in Eqn. (3) is no longer feasible, and the optimal policy is a more complex linear function of the system coefficients.
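The stability condition can be checked numerically. The sketch below assumes that the autoregressive part of the system is H(t+1) = a1·H(t) + a2·H(t−1), so that the characteristic equation is λ² − a1·λ − a2 = 0. That form is inferred from the two coefficients named in the text, and the function names are hypothetical.

```python
import cmath


def characteristic_roots(a1, a2):
    """Roots of lambda^2 - a1*lambda - a2 = 0 (may be complex)."""
    disc = cmath.sqrt(a1 * a1 + 4.0 * a2)
    return ((a1 + disc) / 2.0, (a1 - disc) / 2.0)


def is_stable(a1, a2):
    """True when both roots lie strictly inside the unit circle."""
    return all(abs(root) < 1.0 for root in characteristic_roots(a1, a2))
```

For example, `is_stable(0.5, 0.3)` holds because both roots are below 1 in magnitude, whereas `is_stable(1.2, 0.1)` fails because one root exceeds 1, so an untreated disturbance would grow over days.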

Consistent with previous research, You (1989) found that even after extensive experience with the task, subjects frequently lost control of their patients, and the average performance of human subjects fell far below optimal performance. However, this is a gross understatement. Sterman (1994) found that when subjects tried to manage a simulated production task, they produced costs 10 times greater than optimal, and their decisions induced costly cycles even though the consumer demand was constant. Brehmer (1992) found that when subjects tried to fight simulated forest fires, they frequently allowed their headquarters to burn down despite desperate efforts to put the fire out. Kleinmuntz and Thomas (1987) found that when subjects tried to manage their simulated patients’ health, they often let their patients die while wasting time waiting for the results of nondiagnostic tests and performed more poorly than a random benchmark.

2. Alternative Explanations For Human Performance

There are many alternative reasons for this suboptimal performance. By their very nature, dynamic decision tasks entail the coordination of many tightly interrelated psychological processes including causal learning, planning, problem solving, and decision making (Toda 1962). Six different psychological approaches for understanding human dynamic decision-making behavior have been proposed, each focusing on one of the component processes.

The first approach was proposed by Rapoport (1975), who suggested that suboptimal performance could be derived from an optimal model either by adding information processing constraints on the planning process, or by including subjective utilities into the objective function of Eqn. (1). For example, Rapoport (1975) reported that human performance in his stock purchasing tasks was reproduced accurately by assuming that subjects could only plan a few steps ahead (about three steps), as compared with the optimal model with an unlimited planning horizon. In this case, dynamic programming was useful for providing insights about the constraints on human planning capabilities. As another example, Rapoport (1975) reported attempts to predict performance in a multistage investment game by assuming that the utility function was a concave function of monetary value. In this case, dynamic programming was used to derive an elegant decision rule, which predicted that investments should be independent of the size of the capital accumulated during play. Contrary to this prediction, human decision makers were strongly influenced by the capital that they accumulated, and so in this case, dynamic programming was useful for revealing qualitative empirical flaws with the theory.

An alternative approach, proposed by Brehmer (1992) and Sterman (1994), is that suboptimal performance is caused by a misconception of the dynamic system (Eqn. (2)). In other words, a subject’s internal model of the system does not match the true model. In particular, human performers seem to have great difficulty in discerning the influence of delayed feedback and understanding the effects of nonlinear terms in the system. Essentially, subjects solve the problem as if it were a linear system that has only zero lag terms. In the case of Eqn. (2), this yields the simplified policy

where c1 is estimated from a subject’s control decisions. Diehl and Sterman (1993) found that this type of simplified subjective policy described their subjects’ behavior very accurately.

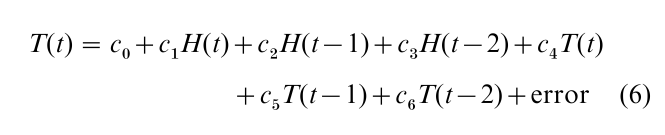

A more general method for estimating subjective decision policies was proposed by Jagacinski and Miller (1978). Consider once again the example problem employed by You (1989). In this case, a subject’s treatment decision on trial t, T(t), could be represented by a linear control model:

where the subjective coefficients (c0, c1,…, c6) are estimated by a multiple regression analysis. This is virtually the same as performing a ‘lens model’ analysis to reveal the decision-maker’s policy (Slovic and Lichtenstein 1971, Kleinmuntz 1993). This approach has been applied successfully in numerous applications (Jagacinski and Miller 1978, Kleinman et al. 1980, You 1989, Kirlik et al. 1993). Indeed, You (1989) found that subjects made use of information from both lags 1 and 2 for making their treatment decisions.
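The regression step can be sketched in a few lines. The example below fits an intercept plus one coefficient per predictor by ordinary least squares, using the normal equations. Which lagged health states and treatments serve as predictors is left to the caller, because the exact predictor set of the original model is not recoverable from the text here. Both function names are hypothetical.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(matrix)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * solution[j] for j in range(i + 1, n))
        solution[i] = (aug[i][n] - tail) / aug[i][i]
    return solution


def fit_linear_policy(predictors, decisions):
    """Least-squares estimates [c0, c1, ...] of a linear decision policy.

    predictors: one row per trial (e.g., lagged health states and treatments).
    decisions:  the treatment the subject chose on each trial.
    """
    design = [[1.0] + list(row) for row in predictors]  # prepend the intercept
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * d for r, d in zip(design, decisions)) for i in range(k)]
    return solve_linear_system(xtx, xty)
```

Applied to a subject's trial-by-trial records, the returned coefficients describe how strongly each piece of past information weighed on the treatment decisions. Fitting separate trial blocks would show how the policy changes with practice.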

Heuristic approaches to strategy selection in dynamic decision tasks have been explored by Kleinmuntz and Thomas (1987), and Kerstholt and Raaijmakers (1997). These researchers examined health management tasks that entailed dividing time between two strategies: collecting information from diagnostic tests before treatment vs. treating patients immediately without performing any more diagnostic tests. A general finding is that even experienced subjects tended to overuse information collection, resulting in poorer performance than that which could be obtained from an immediate treatment (no test) strategy. This finding seems to run counter to the adaptive decision-making hypothesis of Payne et al. (1993), which claims that subjects prefer to minimize effort and maximize performance. The information collection strategy is both more effortful and less effective than the treatment strategy in this situation.

Finally, an individual difference approach to understanding performance on complex dynamic decision tasks was developed by Dorner and his colleagues (see Funke (1991) for a review). Subjects are divided into two groups (good vs. poor) on the basis of their performance on a complex dynamic decision task. Subsequently, these groups are compared with respect to various behaviors in order to identify the critical determinants of performance. This research indicates that subjects who perform best are those who set integrative goals, collect systematic information, and evaluate progress toward these goals. Subjects who tend to shift from one specific goal to another, or focus exclusively on only one specific goal, perform more poorly.

3. Learning To Control Dynamic Systems

Although human performers remain suboptimal even after extensive task training, almost all past studies reveal systematic learning effects. First, overall performance improves rapidly with training (see, e.g., Rapoport 1975, Mackinnon and Wearing 1985, Brehmer 1992, Sterman 1994). Furthermore, subjective policies tend to evolve over trial blocks toward the optimal policy (Jagacinski and Miller 1978, You 1989). Therefore, learning processes are important for explaining much of the variance in human performance on dynamic decision tasks (Hogarth 1981). Three different frameworks for modeling human learning processes in dynamic decision tasks have been proposed.

A production rule model was developed by Anzai (1984) to describe how humans learn to navigate a simulated ship. The general idea is that past and current states of the problem are stored in working memory. Rules for transformation of the physical and mental states are represented as condition–action-type production rules. A production rule fires whenever the current state of working memory matches the conditions for a rule. When a production rule fires, it deposits new inferences or information in memory, and it may also produce physical changes in the state of the system. Navigation is achieved by a series of such recognize–act cycles of the production system. Learning occurs by creating new production rules that prevent earlier erroneous actions, with new rules being formed on the basis of means–ends type of analyses for generating subgoal strategies. Simulation results indicated that the model eventually learned strategies similar to those produced in the verbal protocols of subjects, although evaluation of the model was based on qualitative judgments rather than quantitative measurements.
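The recognize–act cycle described above can be illustrated with a toy interpreter. This is a generic sketch of a production system, not Anzai's model: it omits the learning mechanism that creates new rules, and the function name and the dictionary-based working memory are assumptions.

```python
def run_production_system(rules, memory, max_cycles=10):
    """Run recognize-act cycles until no rule matches or the cycle limit is hit.

    rules:  list of (condition, action) pairs; each is a function of memory.
    memory: dict acting as working memory; actions modify it in place.
    """
    for _ in range(max_cycles):
        for condition, action in rules:
            if condition(memory):      # recognize: the rule's conditions match
                action(memory)         # act: the rule changes memory or the world
                break                  # only the first matching rule fires per cycle
        else:
            break                      # no rule matched, so the system halts
    return memory


# Hypothetical steering rules: turn 5 degrees toward the target heading.
steering_rules = [
    (lambda m: m["heading"] > m["target"],
     lambda m: m.update(heading=m["heading"] - 5)),
    (lambda m: m["heading"] < m["target"],
     lambda m: m.update(heading=m["heading"] + 5)),
]
```

Starting from `{"heading": 10, "target": 0}`, the first rule fires twice and the system then halts because no condition matches.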

An instance or exemplar-based model was later developed by Dienes and Fahey (1995) to describe how humans learn to control a simulated sugar production task, and to describe how they learn to manage the emotional state of a hypothetical person. This model assumes that whenever an action leads to a successful outcome, then the preceding situation and the successful response are stored together in memory. On any given trial, stored instances are retrieved on the basis of similarity to the current situation, and the associated response is applied to the current situation. This model was compared to a simple rule-based model like that employed by Anzai (1984). The results indicated that the exemplar learning model produced more accurate predictions for delayed feedback systems, but the rule-based model performed better when no feedback delays were involved. This conclusion agrees with earlier ideas presented by Berry and Broadbent (1988), that delayed feedback tasks involve implicit learning processes, whereas tasks without delay are based on explicit learning processes.
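The store-and-retrieve idea behind an instance-based learner can be sketched as follows. This is a simplified illustration, not the model of Dienes and Fahey (1995): the success criterion, the squared-distance similarity measure, and all names are assumptions.

```python
class InstanceMemory:
    """Stores successful (situation, action) pairs and reuses the closest one."""

    def __init__(self):
        self.instances = []  # list of (situation tuple, action) pairs

    def store_if_successful(self, situation, action, outcome, goal, tolerance=1.0):
        """Keep the instance only when the outcome landed close to the goal."""
        if abs(outcome - goal) <= tolerance:
            self.instances.append((tuple(situation), action))

    def retrieve(self, situation, default_action=0.0):
        """Return the action stored with the most similar past situation."""
        if not self.instances:
            return default_action

        def squared_distance(instance):
            stored_situation = instance[0]
            return sum((s - q) ** 2 for s, q in zip(stored_situation, situation))

        return min(self.instances, key=squared_distance)[1]
```

A learner of this kind needs no explicit model of the system: it responds to a new situation with whatever worked in the nearest remembered one, and falls back on a default action when memory is empty.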

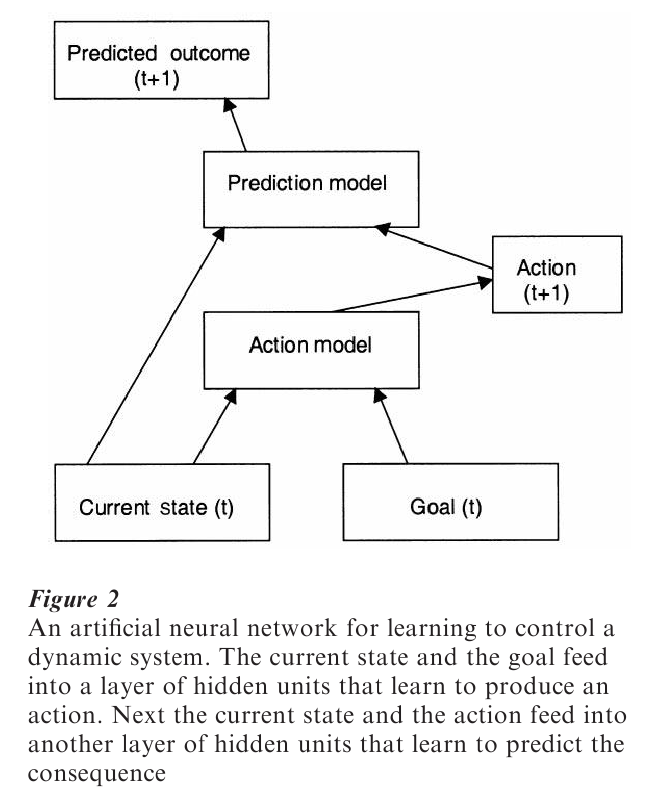

An artificial neural network model was developed by Gibson et al. (1997) to describe learning in a sugar production task. Figure 2 illustrates the basic idea. At the bottom of the figure are two types of input nodes, one representing the current state of the environment, and the other representing the current goal for the task. These inputs feed into the next layer of hidden nodes that compute the next action, given the current state and goal. The action and the current state then feed into another layer of hidden nodes, which is used to predict the consequence of the action, given the current state. The connections from the current state and action to the prediction hidden layer are learned by back-propagating prediction errors, and the connections from the current state and current goal to the action hidden layer are learned by back-propagating deviations between the observed outcome and the goal state (holding the connections to the prediction layer constant; see Jordan and Rumelhart (1992) for more details). This learning model provided good accounts of subjects’ performance during both training and subsequent generalization tests under novel conditions. Unfortunately, no direct comparisons with rule or exemplar-based learning models were conducted, and this remains a challenge for future research.

Another type of artificial neural network model for learning dynamic systems is the reinforcement learning model (see Miller et al. (1991) for a review). Although this approach has proven to be fairly successful for robotic applications, it has not yet been empirically tested against human performance in dynamic decision tasks.

4. Laboratory vs. Naturalistic Decision Research

Klein and his associates (Klein 1998) have made progress toward understanding dynamic decisions in applied field research settings, which they call naturalistic decision making (e.g., interviewing fire chiefs after they have fought a fire). Field research complements laboratory research in two ways: on the one hand, it provides a reality check on the practical importance of theory developed in the laboratory; on the other, it provides new insights that can be tested with more control in the laboratory.

The general findings drawn from naturalistic decision research provide converging support for the general theoretical conclusions obtained from the laboratory. First, it is something of a misnomer to label this kind of research ‘decision making,’ because decision processes comprise only one of the many cognitive processes engaged by these tasks; learning, planning, and problem solving are just as important. Decision making is used to define the overall goal, but then the sequence of actions follows a plan that has either been learned in the past or generated by a problem-solving process. Second, learning processes may explain much of the variance in human performance on dynamic decision tasks. Decision makers use the current goal and current state of the environment to retrieve actions that have worked under similar circumstances in the past. Klein’s (1998) recognition-primed decision model is based on this principle, and this same basic idea underlies the production rule, exemplar, and neural network learning models. Third, learning from extensive experience is the key to understanding why naïve subjects fail in the laboratory whereas experts succeed in the field: it is the mechanism that moves performance from one end of the continuum to the other.

Bibliography:

- Anzai Y 1984 Cognitive control of real-time event driven systems. Cognitive Science 8: 221–54

- Berry D C, Broadbent D E 1988 Interactive tasks and the implicit–explicit distinction. British Journal of Psychology 79: 251–72

- Bertsekas D P 1976 Dynamic Programming and Stochastic Control. Academic Press, New York

- Brehmer B 1992 Dynamic decision making: Human control of complex systems. Acta Psychologica 81: 211–41

- Busemeyer J R, Townsend J T 1993 Decision field theory: A dynamic–cognitive approach to decision-making in an uncertain environment. Psychological Review 100: 432–59

- Diehl E, Sterman J D 1993 Effects of feedback complexity on dynamic decision making. Organizational Behavior and Human Decision Processes 62: 198–215

- Dienes Z, Fahey R 1995 Role of specific instances in controlling a dynamic system. Journal of Experimental Psychology: Learning, Memory, and Cognition 21: 848–62

- Dorner D 1980 On the problems people have in dealing with complexity. Simulation and Games 11: 87–106

- Edwards W 1962 Dynamic decision theory and probabilistic information processing. Human Factors 4: 59–73

- Funke J 1991 Solving complex problems: Exploration and control of complex systems. In: Sternberg R J, Frensch P A (eds.) Complex Problem Solving: Principles and Mechanisms. Lawrence Erlbaum Associates, Hillsdale, NJ, pp. 185–222

- Gibson F, Fichman M, Plaut D C 1997 Learning in dynamic decision tasks: Computational model and empirical evidence. Organizational Behavior and Human Decision Processes 71: 1–35

- Hogarth R M 1981 Beyond discrete biases: Functional and dysfunctional aspects of judgmental heuristics. Psychological Bulletin 90: 197–217

- Holland J 1994 Adaptation in Natural and Artificial Systems. MIT Press, Cambridge, MA

- Jagacinski R J, Miller R A 1978 Describing the human operator’s internal model of a dynamic system. Human Factors 20: 425–33

- Jordan M I, Rumelhart D E 1992 Forward models: Supervised learning with a distal teacher. Cognitive Science 16: 307–54

- Kerstholt J H, Raaijmakers J G W 1997 Decision making in dynamic task environments. In: Crozier W R, Svenson O (eds.) Decision Making: Cognitive Models and Explanations. Routledge, London, pp. 205–17

- Kirlik A, Miller R A, Jagacinski R J 1993 Supervisory control in a dynamic and uncertain environment: A process model of skilled human–environment interaction. IEEE Transactions on Systems, Man, and Cybernetics 23: 929–52

- Klein G 1998 Sources of Power: How People Make Decisions. MIT Press, Cambridge, MA

- Kleinman D L, Pattipati K R, Ephrath A R 1980 Quantifying an internal model of target motion in a manual tracking task. IEEE Transactions on Systems, Man, and Cybernetics 10: 624–36

- Kleinmuntz D 1993 Information processing and misperceptions of the implications of feedback in dynamic decision making. System Dynamics Review 9: 223–37

- Kleinmuntz D, Thomas J 1987 The value of action and inference in dynamic decision making. Organizational Behavior and Human Decision Processes 39: 341–64

- Kleiter G D 1975 Dynamic decision behavior: Comments on Rapoport’s paper. In: Wendt D, Vlek C (eds.) Utility, Probability, and Human Decision Making. Reidel, Dordrecht, The Netherlands, pp. 371–80

- Luenberger D G 1979 Introduction to Dynamic Systems. Wiley, New York

- Mackinnon A J, Wearing A J 1980 Complexity and decision making. Behavioral Science 25: 285–96

- Mackinnon A J, Wearing A J 1985 Systems analysis and dynamic decision making. Acta Psychologica 58: 159–72

- Miller W T, Sutton R S, Werbos P J 1991 Neural Networks for Control. MIT Press, Cambridge, MA

- Payne J W, Bettman J R, Johnson E J 1993 The Adaptive Decision Maker. Cambridge University Press, New York

- Rapoport A 1975 Research paradigms for studying dynamic decision behavior. In: Wendt D, Vlek C (eds.) Utility, Probability, and Human Decision Making. Reidel, Dordrecht, The Netherlands, pp. 347–69

- Slovic P, Lichtenstein S 1971 Comparison of Bayesian and regression approaches to the study of information processing in judgment. Organizational Behavior and Human Performance 6: 649–744

- Sterman J D 1989 Misperceptions of feedback in dynamic decision making. Organizational Behavior and Human Decision Processes 43: 301–35

- Sterman J D 1994 Learning in and about complex systems. System Dynamics Review 10: 291–330

- Toda M 1962 The design of the fungus eater: A model of human behavior in an unsophisticated environment. Behavioral Science 7: 164–83

- You G 1989 Disclosing the decision-maker’s internal model and control policy in a dynamic decision task using a system control paradigm. MA thesis, Purdue University