Dual Task Performance

1. Introduction

Studies on dual task performance belong to the core research areas on divided attention and also have strong applied relevance. In an age of automation, it is important to know which tasks can be performed together more or less easily and how they contribute to workload. Intuitively, people can do only a limited number of ‘things’ at once, but much may depend on their level of skill. During the 1970s this intuition was expressed in terms of the influential conceptual metaphor of the human as a limited capacity processor, inspired by the early digital computers. Current theoretical formulations emphasize ‘crosstalk’ and ‘specification of action’ as the main sources of performance limits in dual task performance. This research paper presents a concise review of the various theoretical positions. A more complete account can be found in Sanders (1998).

2. Limited Capacity Theory

The limited capacity was thought to be distributed over the tasks, permitting time sharing as long as the joint task demands did not exceed the limit, except for a small amount of capacity required for coordinating the tasks or monitoring the environment. Effects of skill on capacity demands were originally described in terms of more efficient programming of the individual tasks. More recently, the distinction between automatic and controlled processing has gained currency. In contrast to a controlled process, an automatic process, the ultimate result of practice, was assumed to demand no capacity and could therefore always be time shared. Some authors assumed capacity to be constant (Moray 1967, Navon and Gopher 1979), whereas others held that it varies as a function of arousal (Kahneman 1973). A variable capacity has the drawback that performance measures of capacity limits are inherently unreliable. For this reason Kahneman proposed physiological measures, with pupil size as the favorite. Among other findings, pupil size was found to increase as a function of cognitive processing demands, e.g., the number of items to be rehearsed in a memory span task. However, such effects are easily overruled by the reaction of the pupil to light. More generally, cognitive information processing and autonomic physiological responses lack a common conceptual framework.
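
The allocation rule just described can be sketched in a few lines of Python. This is a minimal illustration, not a model from the literature: the `Task` class, the `can_time_share` function, the coordination reserve, and all numeric demands are hypothetical, with capacity normalized to 1.0.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demand: float  # hypothetical capacity units needed for full performance
    automatic: bool = False  # automatic processes were assumed to need no capacity


def can_time_share(tasks, capacity=1.0, coordination=0.05):
    """Single limited-capacity rule: tasks can be time shared if the joint
    demand of the controlled (non-automatic) tasks, plus a small reserve for
    coordination or monitoring, does not exceed the available capacity."""
    joint_demand = sum(0.0 if t.automatic else t.demand for t in tasks)
    return joint_demand + coordination <= capacity


tracking = Task("tracking", 0.6)
arithmetic = Task("mental arithmetic", 0.5)
practiced = Task("mental arithmetic, fully automatized", 0.5, automatic=True)

print(can_time_share([tracking, arithmetic]))  # 0.6 + 0.5 + 0.05 > 1.0: False
print(can_time_share([tracking, practiced]))   # 0.6 + 0.0 + 0.05 <= 1.0: True
```

An arousal-dependent capacity, as in Kahneman (1973), would correspond to changing the `capacity` argument from one occasion to the next, so the same pair of tasks could be time shared on one occasion and not on another.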

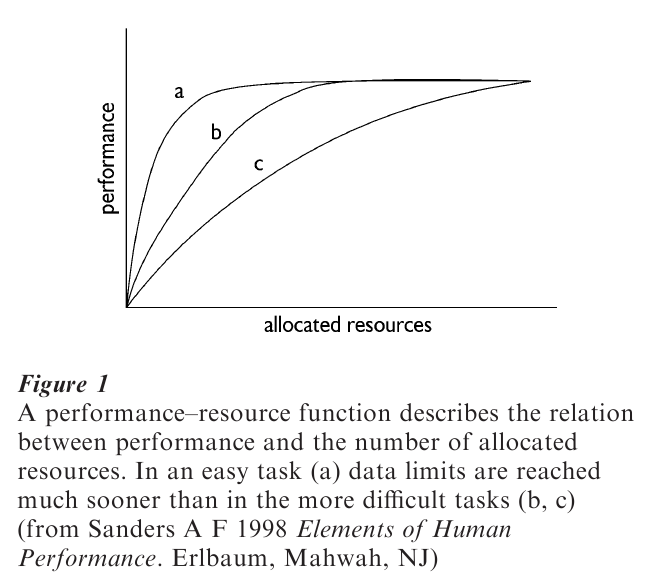

The most elaborate capacity theory stemmed from economics rather than from the digital computer (Navon and Gopher 1979). A performance–resource function (PRF, Fig. 1) depicts the relation between performance and invested capacity, or resources. Performance improves as more resources are invested (the resource-limited part of the function) until it reaches a maximum, which marks the start of the data-limited part of the function. As is clear from Fig. 1, a data limit means that performance does not improve further when more resources are allocated. Task difficulty is defined in terms of the marginal efficiency of resources, that is, the gain in performance per additional unit of resources invested: the lower the marginal efficiency, the more difficult the task.
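
A minimal sketch of a PRF, assuming the simplest possible shape: performance rises linearly with invested resources and is capped by a data limit. Real PRFs need not be linear; the function names `prf` and `marginal_efficiency` and all parameter values are hypothetical.

```python
def prf(resources, efficiency, data_limit):
    """Piecewise-linear performance-resource function.

    Resource-limited part: performance = efficiency * resources.
    Data-limited part: performance stays at data_limit however many
    resources are added."""
    return min(efficiency * resources, data_limit)


def marginal_efficiency(resources, efficiency, data_limit, step=1e-6):
    """Numerical slope of the PRF: performance gained per extra unit of
    resources. Lower values mean a more difficult task; zero means the task
    is data-limited at this level of investment."""
    gain = prf(resources + step, efficiency, data_limit) - prf(resources, efficiency, data_limit)
    return gain / step


# Hypothetical tasks on a resource scale from 0 to 1.
easy = {"efficiency": 2.0, "data_limit": 1.0}  # reaches its data limit at 0.5
hard = {"efficiency": 0.8, "data_limit": 1.0}  # still resource-limited at 1.0

for r in (0.25, 0.5, 0.75, 1.0):
    print(r, prf(r, **easy), prf(r, **hard))
```

In this sketch the easy task is data-limited beyond 0.5 resource units, so further allocation is wasted on it; the hard task profits from every additional unit, but at a lower rate.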

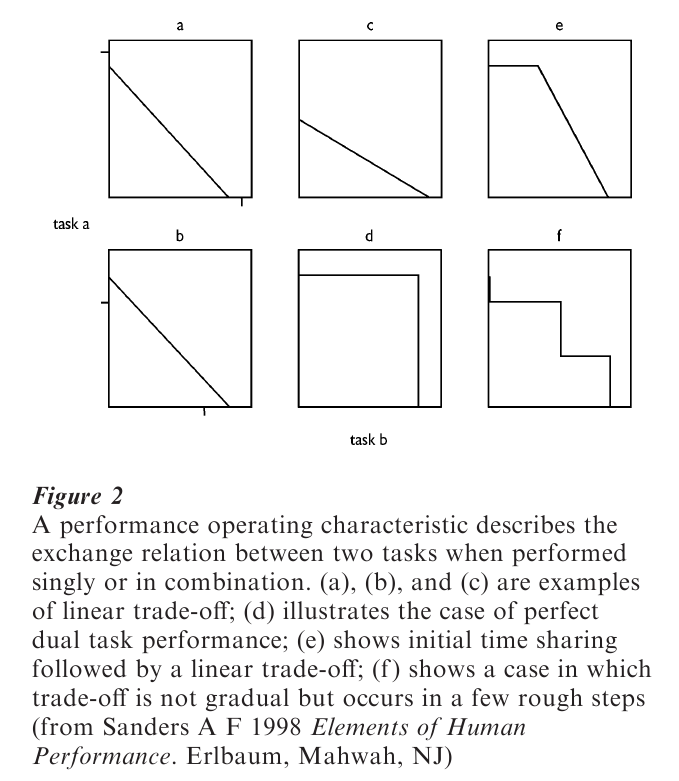

Unlike in economics, a behavioral PRF cannot be obtained directly, but it can be estimated from a performance operating characteristic (POC), which describes the relation between the performance levels on two tasks in a dual task setting. Examples of POCs are given in Fig. 2.

Figures 2(a)–(c) show linear exchange relations, suggesting that, when performed alone, either task consumes all resources, so that its performance suffers as soon as the other task acquires some resources as well. The slope of the POC expresses the relative difficulty of the two tasks. Thus, in Fig. 2(c), task A is more difficult than task B, since a small loss on task A yields a relatively large gain on task B. Figure 2(a) shows a case in which performance on task A carried out alone is somewhat better than dual task performance with exclusive emphasis on task A. This difference is labeled a concurrence cost; its opposite is a concurrence benefit (Fig. 2(b)), in which performance on task A profits from the presence of task B. Doing two tasks together without any performance loss on either one (Fig. 2(d)) suggests pronounced data limits in both tasks: neither task can use more than part of the available resources, so both can reach maximum performance at the same time. In Fig. 2(e), maximum performance on task A can still be combined with some activity on task B, followed by a linear exchange relation between the two tasks. Presumably task A has some data limits, but much weaker ones than in Fig. 2(d). Finally, Fig. 2(f) raises the question of whether the trade-off between the tasks is continuous or discrete. People may only be capable of dividing resources in a few discrete steps.
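
The link between PRFs and POC shapes can be illustrated by sweeping the share of a fixed resource pool given to task A and computing both performances. The sketch below uses a simplified piecewise-linear PRF (performance rises linearly with resources up to a data limit); the `poc` helper and all parameter values are hypothetical. It does not model concurrence costs or benefits, which would need an extra term for the cost or benefit of merely doing two tasks at once.

```python
def prf(resources, efficiency, data_limit):
    """Simplified PRF: linear resource-limited part capped by a data limit."""
    return min(efficiency * resources, data_limit)


def poc(task_a, task_b, steps=10):
    """Trace a POC: give share p of a total resource pool of 1.0 to task A
    and the remainder to task B, for p = 0, 1/steps, ..., 1.
    Returns a list of (performance A, performance B) points."""
    points = []
    for i in range(steps + 1):
        p = i / steps
        points.append((prf(p, **task_a), prf(1 - p, **task_b)))
    return points


# Both tasks resource-limited over the whole range: linear exchange.
resource_limited = {"efficiency": 1.0, "data_limit": 1.0}
# Both tasks reach their data limit with half the pool: no loss on either (Fig. 2(d)).
data_limited = {"efficiency": 2.0, "data_limit": 1.0}
# Task A reaches its data limit at p = 0.8, leaving some resources for B (Fig. 2(e)).
mildly_limited = {"efficiency": 1.25, "data_limit": 1.0}

for point in poc(mildly_limited, resource_limited):
    print(point)
```

With two `data_limited` tasks the point (1.0, 1.0) appears on the curve, so both tasks reach maximum performance jointly. With `mildly_limited` as task A, A stays at its maximum for p of 0.8 and above while B still receives up to 0.2 units, and below that the exchange is linear.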

The main difference between economics- and computer-based capacity models is that the economics view assumes that people always invest all their resources. A proper study of dual tasks should therefore be concerned only with the resource-limited parts of the PRF. The difference between a difficult and an easy task is that people accomplish more on the easy task, although all resources are fully invested in either case. In contrast, computer models have usually defined difficulty in terms of resource demands, a difficult task leaving less spare capacity than an easy one. The two views make different predictions when the available capacity shrinks. The economics theory suggests that the easy task will suffer most, since removing a given quantity of resources costs more performance on the task with the higher marginal efficiency. The computer model, in contrast, suggests that the difficult task, having the largest capacity demands, will suffer most. The evidence appears to favor the economics model: as long as both tasks are resource-limited, reducing resources was found to affect the easy task more.
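
The contrasting predictions can be made concrete with a toy calculation. Both functions below are hypothetical simplifications: `economics_loss` assumes both tasks are in the linear, resource-limited part of their PRFs, and `computer_performance` assumes a task performs perfectly as long as its capacity demand is met and proportionally worse once it is not. All numbers are illustrative.

```python
def economics_loss(efficiency, removed):
    """Economics view: in the resource-limited part of the PRF, performance
    equals efficiency * resources, so removing `removed` units of resources
    costs efficiency * removed units of performance."""
    return efficiency * removed


def computer_performance(available, demand):
    """Computer-metaphor view: difficulty is a capacity demand. Performance is
    perfect (1.0) while the demand is covered and drops proportionally once
    the available capacity falls short of it."""
    return min(1.0, available / demand)


# Economics view: the easy task has the higher marginal efficiency.
easy_loss = economics_loss(efficiency=2.0, removed=0.1)
hard_loss = economics_loss(efficiency=0.5, removed=0.1)
print(easy_loss > hard_loss)  # True: the easy task suffers most

# Computer view: each task's share of capacity shrinks from 0.5 to 0.4.
easy_drop = computer_performance(0.5, demand=0.2) - computer_performance(0.4, demand=0.2)
hard_drop = computer_performance(0.5, demand=0.5) - computer_performance(0.4, demand=0.5)
print(hard_drop > easy_drop)  # True: the difficult task suffers most
```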

The above capacity concepts are aspecific and assume task invariance. Aspecificity means that every task or activity consumes the same resources. Task invariance means that the quantity of performance may vary with resource allocation but the nature of the task remains the same. All models implicitly assume a central executive responsible for the allocation policy. The distinction between resource and data limits adds the important suggestion that tasks may be time shared because each one needs only part of the available capacity to reach its maximum performance. This usually refers to capacity demands over time. Indeed, one popular measure of workload, timeline analysis, involves a time record of activities; an operator is considered overloaded when task demands overlap in time, which implies the assumption that only a single activity is possible at any moment. Note, though, that this issue, serial vs. parallel processing of demands from various tasks, is not raised in capacity models: they never specify whether ‘capacity demands’ refer to a single moment or to an extended period of time.
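
The serial assumption behind timeline analysis can be sketched as a simple interval-overlap check. The `find_overlaps` function and the example schedule are hypothetical; practical timeline methods are more elaborate, and the single-activity assumption is itself open to question.

```python
from itertools import combinations


def find_overlaps(activities):
    """Timeline analysis under a strictly serial assumption: given
    (name, start, end) records, return the pairs of activities whose time
    intervals overlap, i.e., moments at which the operator counts as overloaded."""
    conflicts = []
    for (name_a, start_a, end_a), (name_b, start_b, end_b) in combinations(activities, 2):
        # Two half-open intervals overlap if each starts before the other ends.
        if start_a < end_b and start_b < end_a:
            conflicts.append((name_a, name_b))
    return conflicts


# Hypothetical schedule, times in seconds.
schedule = [
    ("check display", 0.0, 2.0),
    ("answer radio call", 1.5, 4.0),
    ("adjust heading", 5.0, 6.0),
]
print(find_overlaps(schedule))  # [('check display', 'answer radio call')]
```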

It is not surprising that models of dual task performance started with the above simple capacity notions. They offered a simple approach to the applied problems of mental workload (the higher the demands, the higher the load) and of task difficulty. In addition, they gave a straightforward definition of selective attention in terms of capacity limits and allocation policy, and they suggested basic rules for why two tasks can sometimes be time shared whereas on other occasions they are mutually exclusive. An aspecific limited capacity was also an appealing metaphor during the 1960s and 1970s, and it made at least one interesting prediction: since interference depends only on the amount of capacity demanded, the pattern of dual task performance should not depend on the specific characteristics of the activities involved. In other words, if task A suffers more from task C than from task D, task B should also suffer more from task C than from task D (Navon and Gopher 1979). Perhaps the most compelling failure of this prediction stems from a classical study by Brooks (1968). He found that a task requiring spatial working memory (task A) was performed better in combination with a verbal processing task (task C) than with a spatial processing task (task D), whereas a task demanding verbal working memory (task B) was performed better in combination with spatial processing (task D) than with verbal processing (task C). Other evidence showed that search in verbal short-term memory was more easily combined with manual tracking than with recalling abstract words, whereas search in spatial short-term memory was performed better in combination with recalling abstract words than with tracking. Various other examples are discussed by Wickens (1992). They all relate to effects of structural alteration on dual task performance, i.e., changing the structure of a task while keeping its difficulty constant, and to difficulty insensitivity, i.e., conditions in which increasing the difficulty of one task hardly affects the performance level of the other. Both effects are at odds with a single aspecific limited capacity.
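
The Navon and Gopher (1979) prediction amounts to an ordinal consistency check across task pairs, which can be sketched as follows. The `ordering_violations` function is hypothetical, and the decrement scores are invented ordinal values that merely mimic the qualitative pattern reported by Brooks (1968); they are not his data.

```python
from itertools import combinations


def ordering_violations(decrement):
    """decrement[(primary, interfering)] = performance loss of `primary` when
    paired with `interfering`. A single aspecific capacity predicts that all
    primary tasks rank the interfering tasks in the same order. Return every
    (A, B, C, D) for which the C-versus-D ranking reverses between A and B."""
    primaries = sorted({p for p, _ in decrement})
    interferers = sorted({i for _, i in decrement})
    violations = []
    for a, b in combinations(primaries, 2):
        for c, d in combinations(interferers, 2):
            diff_a = decrement[(a, c)] - decrement[(a, d)]
            diff_b = decrement[(b, c)] - decrement[(b, d)]
            if diff_a * diff_b < 0:  # opposite signs: the ranking flips (ties ignored)
                violations.append((a, b, c, d))
    return violations


# Hypothetical ordinal scores (higher = larger decrement), Brooks-like pattern.
brooks_like = {
    ("spatial memory", "verbal processing"): 1,
    ("spatial memory", "spatial processing"): 2,
    ("verbal memory", "verbal processing"): 2,
    ("verbal memory", "spatial processing"): 1,
}
print(ordering_violations(brooks_like))
```

A single reversal is enough to contradict the aspecific capacity account, which is why crossover patterns such as Brooks' carried so much weight.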

3. Multiple Resources

These findings motivated the notion of multiple, mutually independent resources (e.g., Sanders 1998), in which a task is characterized by its profile of demands on the various resources. Wickens (1992) proposed three resource dimensions: input modality (visual vs. auditory), processing stage (perceptual-cognitive vs. response), and central processing code (verbal vs. spatial). The last dimension relates to the finding that a spatial task is usually easier to combine with a verbal task than with another spatial task. Similarly, there is evidence that when two tasks share the same input modality, their joint maximum performance is lower than when the input modalities differ. Finally, a predominantly motor task, e.g., tapping at a regular interval, can be combined more easily with a predominantly cognitive task, e.g., classifying letters, than with another motor task such as tracking. Tapping performance is insensitive to the demands of classification (Donk and Sanders 1989).
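
As a rough illustration of how Wickens’ three dimensions might be used to compare task pairs, one can count the dimensions on which two tasks draw on the same level. This counting heuristic, the `Profile` type, and the example task codings are hypothetical simplifications (real tasks often involve several stages and codes), not Wickens’ own procedure.

```python
from typing import NamedTuple


class Profile(NamedTuple):
    modality: str  # "visual" or "auditory"
    stage: str     # "perceptual-cognitive" or "response"
    code: str      # "spatial" or "verbal"


def shared_levels(a: Profile, b: Profile) -> int:
    """Number of dimensions on which two tasks use the same level. Under the
    multiple resource view, more shared levels suggest more interference."""
    return sum(x == y for x, y in zip(a, b))


# Hypothetical codings of three tasks.
tracking = Profile("visual", "response", "spatial")
map_reading = Profile("visual", "perceptual-cognitive", "spatial")
spoken_directions = Profile("auditory", "perceptual-cognitive", "verbal")

print(shared_levels(tracking, map_reading))        # 2
print(shared_levels(tracking, spoken_directions))  # 0
```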

Multiple resources have some important theoretical implications. First, there will usually be ample spare capacity: whenever a task is limited to certain resource pools, the demands on those pools may exceed capacity while capacity in the remaining pools goes unused. This undermines the notion of task difficulty. Indeed, one task may need, and overload, only one particular resource pool, whereas another task may require all resource pools without imposing a heavy load on any. Which one is harder? In terms of total resource consumption, the second task may be the more demanding. However, the first cannot be completed successfully, which may be decisive when assessing workload. In single-capacity models, workload was simply a matter of resource consumption. With multiple resources, resource allocation is multidimensional, which rules out a one-dimensional workload concept. For example, the proposal to assess workload by measuring performance on a secondary task derived from the assumption that a main task leaves aspecific spare capacity, which is then allocated to the secondary task. From the perspective of multiple resources, however, it is the cognitive architecture of the main and secondary tasks that determines secondary task performance. The multiple resource view has the obvious merit of recognizing the role of the cognitive architecture of tasks. Finally, Wickens’ three dimensions capture some important cognitive differences which are particularly relevant from an applied point of view. For example, when a task is spatially loading, as in car driving, additional information such as route information should be processed verbally. In fact, studies showing almost perfect time sharing in complex tasks, such as piano playing and repeating a verbal message (Allport et al. 1972), used tasks that differed on all three of Wickens’ dimensions. Piano playing may proceed from visual input via an analogue representation to manual output, whereas the verbal message was presented auditorily, encoded verbally, and ended in a vocal output. In addition, the participants in such studies were always highly skilled, which implies a high marginal efficiency of resources.
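
The difficulty dilemma raised above can be illustrated with demand profiles over separate pools. The pool names, the capacity of 1.0 per pool, and the two demand profiles below are hypothetical; the point is only that total consumption and overload can rank the two tasks in opposite ways.

```python
POOLS = ("visual", "auditory", "spatial", "verbal", "response")  # hypothetical pools


def load_profile(demands, capacity=1.0):
    """Summarize a task's demands (pool -> demand, same capacity per pool):
    return the total demand summed over pools and the list of overloaded pools."""
    total = sum(demands.values())
    overloaded = [pool for pool, demand in demands.items() if demand > capacity]
    return total, overloaded


focused_task = {"spatial": 1.2}              # needs one pool, and overloads it
spread_task = {pool: 0.5 for pool in POOLS}  # needs every pool, overloads none

print(load_profile(focused_task))  # (1.2, ['spatial'])
print(load_profile(spread_task))   # (2.5, [])
```

By total consumption the spread task looks harder; by overload only the focused task fails, which is the ambiguity that blocks a single-dimensional workload measure.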

Despite these merits, the heuristic value of resource models has come under heavy attack (Neumann 1987). First, the new generation of computers, whose processing capacity is more in line with the enormous capacity of the brain, made limited capacity a less viable metaphor. Second, ‘interference among tasks is usually much more specific than predicted on the basis of a limited amount of resources and, on the other hand, there is unspecific interference that seems not to depend on any specific resource’ (Neumann 1987, p. 365). For instance, time sharing a spelling task and a mental arithmetic task is easier than time sharing two arithmetic or two spelling tasks, yet all of these would be subsumed under Wickens’ verbal central processing resource. Clearly, not much is gained by postulating a new resource for each new case in which time sharing is found. As for aspecific interference, Gladstones et al. (1989) had participants perform two machine-paced serial reaction tasks, singly and in combination, and found no evidence of better shared performance when the stimuli for one task were visual and those for the other auditory, compared with visual stimuli in both tasks. Nor did performance profit from a difference in effectors, that is, when one task had a vocal and the other a manual response, compared with two manual responses. Third, merely distinguishing among resources runs the risk of conceptualizing information processing as a set of distinct faculties, without detailed process descriptions and without an integrative account. One attempt to address the last point was to assume an additional general-purpose resource responsible for attention control in scheduling and timing. The notion of attention control was supported by evidence that practice not only diminishes resource demands but also improves attention control. Thus, practicing two single tasks to full proficiency does not mean that they can easily be time shared without additional practice in dual task performance (Detweiler and Lundy 1995). The relevance of scheduling and timing is evident from findings that two tracking tasks, which presumably share the same resources, are much easier to do together when their temporal dynamics are the same than when they differ (Fracker and Wickens 1989).

4. Interference

The above objections led to accounts in terms of interference, that is, outcome conflict and crosstalk among simultaneous tasks, depending on the extent to which the various task demands compete in an abstract space. There may be cooperation, conceived of as a single more complex action plan for both tasks, or confusion, referring to problems in establishing correct input–output connections (Wickens 1992). As an example, in a dual task consisting of manual tracking and discrete choice reactions, tracking performance appears to deteriorate briefly before a choice reaction, suggesting outcome conflict in motor programming. Neumann (1987) suggested two reasons for limits in performance, both concerned with the control of action, namely effector recruitment and parameter specification. The issue is not that capacity is limited but that one has to choose among competing actions. Effector recruitment refers to the selection of the effectors that should become active at a given moment. Its main function is to abort competing responses, and in this way it may lead to a bottleneck in response selection. Parameter specification refers to obtaining the proper values, e.g., the direction, force, and size of a movement, but also to selecting the appropriate stimuli for completing an intended action. With practice, default values develop, so that the action can be completed without time-consuming specifications. Effector recruitment and parameter specification at the time of an action prevent other actions from occurring at the same time. Consequently, time sharing two actions is hard when they are independent. In contrast, complex skills usually consist of integrated sequences, organized into chunks and groups of actions that are initiated by a single command. This may explain why time sharing has been found mainly in well-trained complex tasks and not in uncorrelated reactions to successive stimuli, as for instance in the study of Gladstones et al. (1989). Time sharing is better to the extent that tasks (a) allow processing and acting in larger units, (b) permit preview and advance scheduling of demands, and (c) do not involve similar or competing patterns of stimuli and actions.
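
The idea that selecting one action prevents another from being selected at the same moment can be expressed as a strict central bottleneck, in the style of the models reviewed by Pashler (1994). The sketch below assumes that each reaction consists of a perceptual stage (A), a central response-selection stage (B), and a motor stage (C), and that the two B stages cannot overlap; the function name and the stage durations are hypothetical.

```python
def rt2_central_bottleneck(soa, a1, b1, a2, b2, c2):
    """Reaction time to the second of two stimuli, measured from its own onset,
    when response selection is strictly serial.

    soa: stimulus onset asynchrony between stimulus 1 and stimulus 2.
    a*, b*, c*: durations of the perceptual, central, and motor stages.
    Inside the function, times are measured from the onset of stimulus 1."""
    central2_start = max(soa + a2, a1 + b1)  # task 2 waits until task 1's selection ends
    return central2_start + b2 + c2 - soa


# Hypothetical stage durations in milliseconds.
stages = {"a1": 100, "b1": 150, "a2": 100, "b2": 150, "c2": 100}
for soa in (50, 100, 150, 300, 500):
    print(soa, rt2_central_bottleneck(soa, **stages))
```

With these values the second reaction time falls by 1 ms for every 1 ms increase in SOA until the SOA reaches 150 ms, and stays at a2 + b2 + c2 = 350 ms thereafter; the extra waiting at short SOAs is the cost of the serial selection assumption. Whether such a bottleneck is strict, and where it lies, is the kind of question raised by research on parallel versus serial processing.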

Thus, formulating performance limits in terms of interference puts full weight on processing architecture and the timing of action. Its popularity is at least partly due to the advent of performance models based on connectionist networks (Detweiler and Schneider 1991) and to the current interest in the neurophysiology of behavior. A potential problem for interference models may be a lack of predictive power. Thus far, research has been insufficiently specific about constraints and too occupied with describing existing results that go beyond the concept of limited capacity. Although the connectionist models are quantitative, their detailed predictions are still open to post hoc parameter setting.

With regard to future research, patterns of interference should be described as a function of the stage of processing at which they are supposed to arise. This should lead to a more detailed catalog of actions that are liable to output conflict and hence should be avoided in task design. There are interesting recent developments in this direction, among them Pashler’s research on the extent of parallel versus serial processing in responses to successive stimuli (e.g., Pashler 1994) and the EPIC simulation of human performance (Meyer and Kieras 1997). In addition, much may be expected from the analysis of brain images recorded while participants perform a dual task. These new approaches should enable a more detailed account of dual task performance and of the attention control of multiple tasks than was possible on the basis of capacity notions.

Bibliography:

- Allport A, Antonis B, Reynolds P 1972 On the division of attention: a disproof of the single channel hypothesis. Quarterly Journal of Experimental Psychology 24: 225–35

- Brooks L R 1968 Spatial and verbal components of the act of recall. Canadian Journal of Psychology 22: 349–68

- Detweiler M C, Lundy D H 1995 Effects of single and dual-task practice on acquiring dual-task skill. Human Factors 37: 193–211

- Detweiler M C, Schneider W 1991 Modelling the acquisition of dual task skill in a connectionist control architecture. In: Damos D L (ed.) Multiple Task Performance. Taylor & Francis, Washington, DC, pp. 247–95

- Donk M, Sanders A F 1989 Resources and dual task performance: resource allocation versus task integration. Acta Psychologica 72: 221–32

- Fracker M L, Wickens C D 1989 Resources, confusions, and compatibility in dual axis tracking: displays, controls, and dynamics. Journal of Experimental Psychology: Human Perception and Performance 15: 80–96

- Gladstones W H, Regan M A, Lee R B 1989 Division of attention: the single channel hypothesis revisited. Quarterly Journal of Experimental Psychology 41: 1–17

- Kahneman D 1973 Attention and Effort. Prentice Hall, Englewood Cliffs, NJ

- Meyer D E, Kieras D E 1997 A computational theory of executive cognitive processes and multiple task performance. Part 1. Basic mechanisms. Psychological Review 104: 3–65

- Moray N H 1967 Where is capacity limited? A survey and a model. Acta Psychologica 27: 84–92

- Navon D, Gopher D 1979 On the economy of processing systems. Psychological Review 86: 214–55

- Neumann O 1987 Beyond capacity: a functional view of attention. In: Heuer H, Sanders A F (eds.) Perspectives on Perception and Action. Erlbaum, Hillsdale, NJ, pp. 361–94

- Pashler H 1994 Dual-task interference in simple tasks: data and theory. Psychological Bulletin 116: 220–44

- Sanders A F 1998 Elements of Human Performance. Erlbaum, Mahwah, NJ

- Wickens C D 1992 Engineering Psychology and Human Performance. HarperCollins, New York