Sample Consensus Panels Research Paper.

Should you avoid electromagnetic fields produced by home appliances and overhead transmission wires, or secondhand smoke from cigars and cigarettes because they may increase your risk of cancer? These are just two recent examples of the recurrent ‘menace[s] of daily life’ that have been resolved by consensus panels. A consensus is a shared belief that results from a group judgment. A consensus panel is a group created for the purpose of forming or reaching a consensus. The demand for formal consensus methods has greatly increased in recent decades as the complexity of the decisions, their importance, and the amount of conflicting information involved have also increased. Four prominent, widely used consensus panel methods are described.

1. Important Consensus Panel Characteristics

The two most critical aspects of consensus are: (a) how widely shared is the belief, and (b) how is the panel selected? With respect to the first question, while consensus is often thought of as a belief shared by all panel members (i.e., belief unanimity), it also can connote a majority opinion or even, in practice, an average of the group’s belief. The answer depends on the procedure used to reach a consensus or the source of the panel’s decision.

1.1 Source Of Panel Decision

A consensus decision can come from one of two sources, yielding either a unanimous or a majority consensus. The first, and the one most commonly associated with the consensus process, is internal to the panel: the decision arises from discussion and the sharing of information. In such cases an ad hoc panel of experts could be convened to make a unanimous recommendation. The decision to administer the swine flu vaccine to the US public resulted from such a qualitative method (Neustadt and Fineberg 1978).

Since experts with divergent beliefs often disagree, an internal consensus is often impossible. In that case, a second source of decision-making uses a number of quantitative methods to derive a majority or average opinion external to the panel or entirely independent of it. The most common of these is the Delphi method (Dalkey 1969) developed at the RAND Corporation that was actually employed in parallel with the swine flu decision (Schoenbaum et al. 1976). A more recent quantitative method known as meta-analysis (Smith and Glass 1977) or research synthesis (Cooper and Hedges 1994) is widely used to develop an average consensus of the empirical, scientific literature produced by experts.

1.2 Panel Composition

Consensus panels are composed of experts or non-experts. Expert or ‘blue-ribbon’ panels are most commonly employed since such groups presumably not only have the requisite knowledge to reach a correct consensus, but also the credibility required to convince others of the validity of their opinion.

Since the selection of experts can easily result in bias (see the discussion of ad hoc panels), nonexperts who are neutral on the issue have commonly been convened as a jury to evaluate the existing evidence and reach a decision or recommendation. This method has been used extensively by the US National Institutes of Health (NIH) to assess the effectiveness of medical innovations (Perry and Kalberer 1980). While selection bias is then not a problem for the panel’s composition, it could still affect the information being considered.

2. Consensus Panel Methods

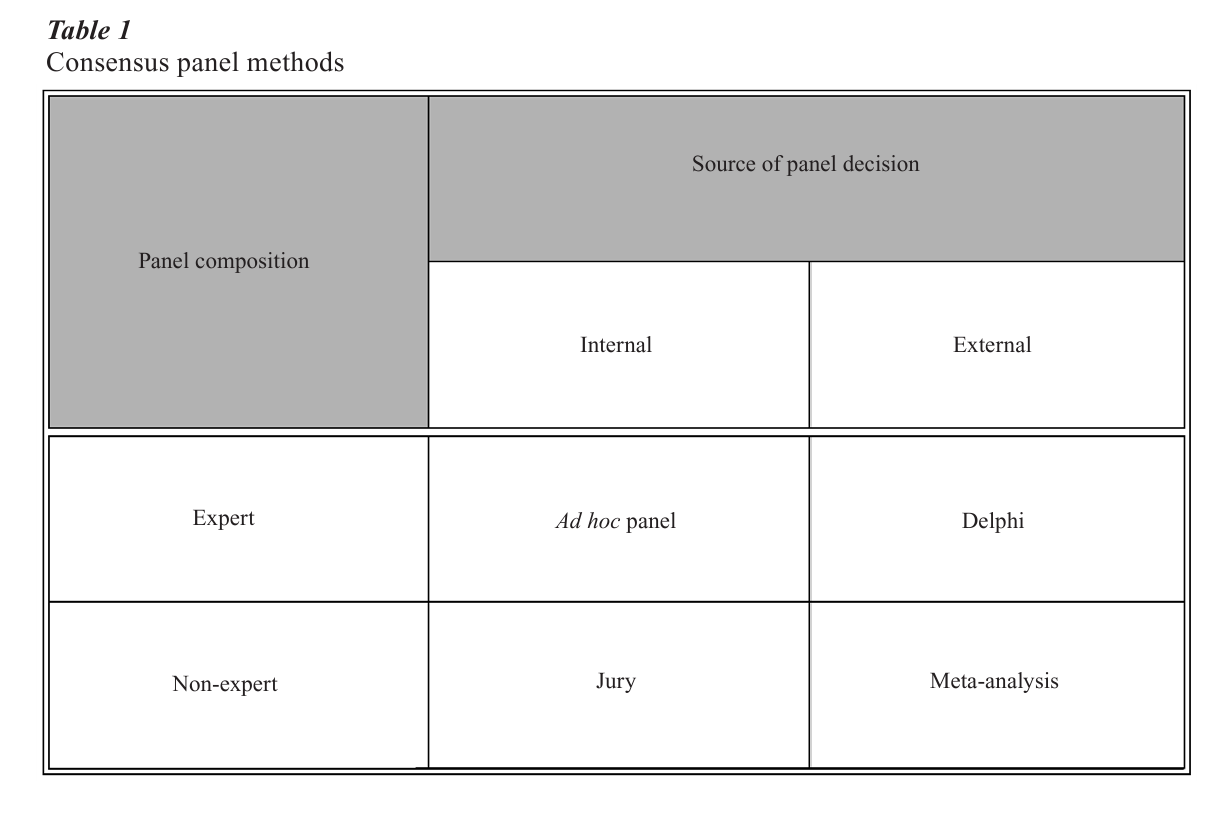

The four methods in Table 1 illustrate the contemporary methods for reaching group consensus that embody the characteristics described in the previous section. Experts and nonexperts are both directly involved in internal decisions while, by using quantitative techniques, they can indirectly produce a consensus externally. Both approaches have proven useful in different circumstances, and both have strengths and weaknesses.

2.1 Ad Hoc Panel Method

The use of ad hoc panels or groups is a widely employed organizational method for consensus decision-making. Such panels are composed of experts convened in a face-to-face meeting to make a specific decision. The method can be either unstructured or structured. In an unstructured procedure, a nonrepresentative (or nonprobability) convenience sample of experts is gathered for one session to address a single, global question such as, ‘Should the swine flu vaccine be produced and distributed?’ Such convenience panels are often neither representative of the range of beliefs nor independent of the agency or organization requesting the consensus decision (Neustadt and Fineberg 1978).

In a structured procedure, an effort is made to create a panel containing members representing all the views on the topic. This involves an extended search and nomination process, and often open public comment sessions that include the press as well. Rather than one global issue, the decision is also broken down into a set of subsidiary questions or issues with a procedure for addressing them. Typically, subgroups are formed to address these issues. They can either be members of the panel or another group of experts who are not part of the group and thus not subject to its potential biases (e.g., groupthink, the dominance of prestigious panel members, etc.).

The swine flu decision, as reviewed at government request (Neustadt and Fineberg 1978), illustrates both the strengths and weaknesses of this method. In early 1976 four cases of swine flu were reported among US Army recruits at Fort Dix. The Center for Disease Control (CDC) convened an ad hoc panel to determine if a vaccine should be produced and distributed to prevent a pandemic that fall. The panel was composed of members of four Federal and State agencies plus CDC staff. A month later this same group reconvened in a joint meeting with a standing CDC advisory committee appointed and staffed by the Director. Only one of the acknowledged elder statesmen in the field of virology participated in this meeting, and he strongly believed that the country was approaching the end of an 11-year cycle when a new pandemic would occur. At the end of a day’s discussion a consensus emerged that ‘the possibility of a pandemic existed’ and that everyone was at risk. Therefore, the panel recommended that enough vaccine be produced to inoculate the entire US population.

A similar biased consensus unanimously endorsing a swine flu vaccine program also resulted from a third panel appointed by President Ford one month later. He wanted the advice of a ‘representative group’ of experts, but also had to rely largely on the CDC to select them. Although some famous scientists were added, there were still notable exceptions. Most of the panelists felt that the decision had already been ‘programmed.’ President Ford publicly announced the Federal program later that day. A series of disasters then followed including the deaths of three high-risk elderly people in Pittsburgh early that fall shortly after being vaccinated, and soon thereafter numerous reports of vaccine-related paralysis. In December, upon the recommendation of CDC, President Ford suspended the program.

A month later an outbreak of Victoria flu erupted in a Florida nursing home. The CDC advisory panel recommended ‘limited resumption of the swine flu program’ so that the Victoria vaccine that was included in the bivalent doses would be available. The new US Secretary of Health, Education, and Welfare decided to convene a new, fourth ‘advisory group’ that was more representative and independent than the first three—two CDC and President Ford’s—panels. The ad hoc group was to be chaired by two of the ‘nation’s most distinguished scientists’ who were not part of the ‘flu establishment’ and the meeting was open to the public and the press. The ‘improvised’ consensus procedure led to a recommendation to lift the ban on the bivalent vaccine for ‘high-risk’ groups such as the elderly. It was considered ‘a great success.’

2.2 Delphi Method

One of the major problems facing ad hoc panels such as those considering the swine flu vaccine program was the lack of information, particularly concerning a key issue—the probability of a swine flu pandemic. Other related problems concerned the influence of dominant group members, conformity, and hidden agendas. The Delphi method (Dalkey 1969) was created to solve all of these problems.

Group process artifacts are eliminated in the Delphi method by avoiding a face-to-face meeting. According to Dalkey (1969), one of the developers of this group judgment method, the Delphi procedure has three important ‘features’: (a) anonymity of the panelists, (b) multiple sessions or ‘iterations,’ and, as noted above, (c) an external, quantitative group decision. Panelists remain anonymous since their only contact is by phone or mail through the Delphi coordinator. Multiple sessions are conducted in which feedback from the previous session or ‘round’ is provided to the individual panelists. Typically, the feedback is a quantitative summary of all the panelists’ ratings on the questions that matter for the decision. For example, to obtain an estimate of the probability of a swine flu pandemic, five experts were surveyed using a mailed questionnaire with their responses obtained by telephone (Schoenbaum et al. 1976). The process was repeated until the change in the average (in this case, the median) was less than a specified criterion (here 10 percent).
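The stopping rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 10 percent criterion is read as a relative change between successive round medians, the function names are hypothetical, and the panelist estimates are invented for the example rather than taken from Schoenbaum et al. (1976).

```python
from statistics import median


def relative_change(previous, current):
    """Relative change between two successive round medians."""
    if previous == 0:
        return 0.0 if current == 0 else float("inf")
    return abs(current - previous) / abs(previous)


def delphi_consensus(rounds, criterion=0.10):
    """Return (round_number, median) for the first round whose median moved
    by less than `criterion` relative to the previous round.

    `rounds` holds one list of panelist estimates per round. If the medians
    never stabilise, the last round and its median are returned.
    """
    if not rounds:
        raise ValueError("at least one round of estimates is required")
    medians = [median(r) for r in rounds]
    for i in range(1, len(medians)):
        if relative_change(medians[i - 1], medians[i]) < criterion:
            return i + 1, medians[i]
    return len(medians), medians[-1]


# Hypothetical probability estimates from five panelists over three rounds
# (illustrative numbers only).
example_rounds = [
    [0.02, 0.05, 0.10, 0.20, 0.40],
    [0.05, 0.08, 0.12, 0.15, 0.30],
    [0.08, 0.10, 0.12, 0.15, 0.20],
]
stop_round, estimate = delphi_consensus(example_rounds)
print(stop_round, estimate)  # prints: 3 0.12
```

In this made-up example the median moves from 0.10 to 0.12 between the first two rounds (a 20 percent change), then stays at 0.12, so the procedure stops after the third round. The individual estimates also draw closer together, which is the effect the feedback is meant to produce.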

The Delphi method has a number of other benefits. It is ‘rapid,’ inexpensive, and can produce a relatively ‘objective’ estimate of ‘opinion’ where there is a minimal amount of information available. The swine flu decision, which was investigated using this method, took only four weeks, comparable to the time taken by the ad hoc panels. It also produced a median probability estimate for the pandemic of 0.10, a figure too low to justify a Federal program to vaccinate the entire US population.

More recently, researchers at RAND (Brook et al. 1986) have developed a newer consensus method using a variant of the Delphi technique. Nine-member expert physician panels were asked to rate the ‘appropriateness’ of an exhaustive set of ‘indications’ for performing (or not performing) various procedures (e.g., carotid endarterectomy, CE) using a nine-point interval scale. Panels were selected to balance specialty, practice, and location. There were two rounds of ratings. The first, typical of the Delphi method, involved anonymous ratings, using the mail to provide the panelists with relevant information including literature reviews. The second, however, involved a one- or two-day, face-to-face meeting at RAND to rerate the procedures. Each physician was provided a summary distribution of all the panelists’ initial ratings with their own clearly indicated. After discussion, the indications were rerated. Four quantitative measures of unanimity were calculated: absolute, with all ratings in one of the three-point regions indicating ‘inappropriate’ (1–3), ‘equivocal’ (4–6), or ‘appropriate’ (7–9); scale, with all ratings in any consecutive three-point region; and both absolute and scale with the highest and lowest ratings removed.
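The four unanimity measures can be made concrete with a short Python sketch. It follows the definitions given above, but the function names are hypothetical, the ratings are invented, and ‘the highest and lowest ratings removed’ is assumed to mean dropping exactly one rating from each end. It should not be read as the RAND implementation.

```python
def absolute_agreement(ratings):
    """True if every rating falls in the same fixed band: 1-3, 4-6, or 7-9."""
    bands = [(1, 3), (4, 6), (7, 9)]
    return any(all(lo <= r <= hi for r in ratings) for lo, hi in bands)


def scale_agreement(ratings):
    """True if every rating falls within some consecutive three-point region."""
    return max(ratings) - min(ratings) <= 2


def trim_extremes(ratings):
    """Drop one highest and one lowest rating (assumed reading of the rule)."""
    return sorted(ratings)[1:-1]


def agreement_summary(ratings):
    """Compute all four measures for one indication (needs >= 3 ratings)."""
    trimmed = trim_extremes(ratings)
    return {
        "absolute": absolute_agreement(ratings),
        "scale": scale_agreement(ratings),
        "absolute_trimmed": absolute_agreement(trimmed),
        "scale_trimmed": scale_agreement(trimmed),
    }


# Hypothetical ratings from a nine-member panel for a single indication.
panel_ratings = [2, 6, 7, 7, 7, 8, 8, 8, 9]
print(agreement_summary(panel_ratings))
```

With these invented ratings only the trimmed scale measure is satisfied: after removing the 2 and the 9, the remaining ratings span 6 to 8, which fits a consecutive three-point region but straddles the fixed ‘equivocal’ and ‘appropriate’ bands.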

2.3 Jury Method

Juries are arguably the single most common method for consensus decision making given their pervasive use in legal proceedings. While such procedures may seem beyond the scope of a discussion of formal consensus methods, that is simply not the case. Not only have juries been used to make decisions on the same issues that have been dealt with by other consensus methods (Angell 1996), but a variant of the jury method, the ‘Consensus Development Program’ developed by the US NIH (Perry and Kalberer 1980), has been adopted by other western nations (Lomas et al. 1988, Vang 1986).

In both cases, the juries or panels are composed of nonexpert, neutral persons who listen to an adversarial discussion or presentation by experts over a number of days before reaching a judgment or decision. For example, juries have awarded billions of dollars in damages to women who have successfully sued companies making silicone breast implants, claiming that the implants have caused a variety of major diseases (e.g., cancer, arthritis, lupus, etc.). Nevertheless, a 16-member expert scientific panel of the US National Academy of Sciences concluded in a unanimous consensus that ‘women with implants are no more likely than other women [without implants] to develop these systemic illnesses’ (Institute of Medicine 1999).

The major difference between legal juries and those more formal methods such as the NIH Consensus Development Program is the role of experts (Angell 1996). The law has a liberal definition of expert that allows those with strong beliefs, but not equally strong credentials or evidence, to present their views to juries. Since it is often difficult for both lawyers and juries to assess the credibility of the evidence for these views, persuasion and argumentation skills generally prevail. This is not solely the case in formal consensus procedures such as the one used by NIH since 1977. The neutral panelists have both the range of disciplinary background and skills to assess the evidence, and the expert presenters or witnesses focus on the evidence, usually their own research, rather than their interpretation or opinion.

Donald Fredrickson, the former director of NIH, developed the NIH Consensus Development Program based on his experience with the successful swine flu ad hoc panel described above (Perry and Kalberer 1980). In particular, he incorporated into this jury procedure the use of a distinguished panel chair, a series of questions, and open meetings. The panel or jury meets in open session over a two-day period at NIH, listening to presentations on the various questions, and interrogating the speakers or presenters. On the third day the panel presents its verdict, a written draft consensus statement, to the public and the press for comment, and then revises it prior to publication.

2.4 The Meta-Analysis Method

Meta-analysis is the most recent consensus method. Like the Delphi method, it uses quantitative methods to aggregate or summarize expert knowledge—in this case, the scientific research literature (cf., Cooper and Hedges 1994). An average effect of some human intervention or activity (e.g., the effectiveness of CE surgery to prevent stroke or the adverse health effects of secondhand smoke) is calculated by the meta-analyst, whose role is similar to the Delphi coordinator, from all available studies—both published and unpublished—that represent the views of all experts. Consequently, it is of most value in areas where a substantial amount of conflicting scientific research has been done on a controversial issue.
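To make the idea of an ‘average effect’ concrete, the sketch below pools study results with an inverse-variance weighted mean, one common fixed-effect approach. The text does not say which averaging scheme is meant, so this choice is an assumption, as are the function name and the illustrative effect sizes and variances.

```python
import math


def fixed_effect_average(effects, variances):
    """Inverse-variance weighted mean effect and its standard error.

    `effects` are per-study effect sizes and `variances` their sampling
    variances; more precise studies (smaller variance) get more weight.
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need one positive variance per effect size")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    mean = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return mean, standard_error


# Hypothetical effect sizes and variances for three studies.
study_effects = [0.2, 0.5, 0.8]
study_variances = [0.04, 0.01, 0.04]
pooled, se = fixed_effect_average(study_effects, study_variances)
print(round(pooled, 3), round(se, 3))
```

Here the middle study is the most precise and therefore dominates the pooled estimate. A simple unweighted mean would treat a small, noisy study the same as a large, precise one.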

A well-done meta-analysis avoids the subjectivity inherent in the other consensus methods since it relies entirely on the actual evidence developed by experts rather than their opinions or interpretations, and can be subject to replication. Moreover, it overcomes well-known human cognitive limitations in aggregating large amounts of information (Smith and Glass 1977). For example, comparisons of meta-analysis with both ad hoc groups and the RAND modified Delphi method have shown meta-analysis to provide a more accurate estimate than those consensus panels (Antman et al. 1992, Wortman et al. 1988).

Such summaries or research syntheses can either stand alone, be commissioned to answer a policy question similar to the breast implant issue, or be incorporated into a group decision process. A meta-analysis to assess the impact of US public school desegregation on the academic achievement of Afro-Americans illustrates all three of these uses (Cooper 1986). As the meta-analysis was being completed, the US government convened an expert panel to examine this issue.

The six experts, selected to represent the range of opinions about the benefits of school desegregation on black achievement (two in favor, two against, and two neutral), decided to replicate the meta-analysis using only the strongest evidence. Consequently, an initial unanimous consensus was reached to include only 18 of the original 31 studies. An additional study by two members of the panel that had originally been rejected as low in quality was added. All panelists then conducted their own meta-analyses with the assistance of the original meta-analyst as well as an outside methodological consultant. However, the panelists’ prior beliefs affected their choice of evidence (Cooper 1986). Despite this bias, ‘the panelists achieved a high degree of correspondence … [and] agreed the effect [of desegregation] was positive’ (Cooper 1986 p. 348). Nevertheless, the panel moderator methodologist was unable to persuade the panel to agree on a written consensus statement to that effect.

3. Conclusions

The descriptions of the four consensus methods indicate that they have differing strengths and weaknesses. One important weakness that potentially affects all of them, however, is selection bias, whether in the composition of the panels, the questions asked, or the evidence retrieved. While selection bias in the first three methods involving human panels has been noted above, it is also the main problem in meta-analysis. Selection or publication bias may result in the omission of unpublished studies, thus overestimating the average effectiveness of an intervention. The use of open meetings is one way to reduce this bias, as is careful initial planning to seek out all relevant experts and evidence. In cases where evidence is available, a combination of methods such as the jury and meta-analysis may be warranted (Wortman et al. 1988). With the rapid increase in both information and access to large computer databases, combining quantitative, external consensus with qualitative, internal consensus may be the next step in the development of consensus methods.

Bibliography:

- Angell M 1996 Science on Trial. W. W. Norton, New York

- Antman E M, Lau J, Kupelnick B, Mosteller F, Chalmers T C 1992 A comparison of the results from meta-analysis of randomized control trials and recommendations of clinical experts: treatments for myocardial infarction. Journal of the American Medical Association 268: 240–8

- Brook R H, Chassin M R, Fink A, Solomon D H, Kosecoff J, Park R E 1986 A method for the detailed assessment of the appropriateness of medical technologies. International Journal of Technology Assessment in Health Care 2: 53–63

- Cooper H M 1986 On the social psychology of using research reviews: the case of desegregation and black achievement. In: Feldman R S (ed.) The Social Psychology of Education. Cambridge University Press, New York

- Cooper H, Hedges L V 1994 The Handbook of Research Synthesis. Russell Sage Foundation, New York

- Dalkey N 1969 An experimental study of group opinion: The Delphi method. Futures 1: 408–26

- Institute of Medicine 1999 Safety of Silicone Breast Implants. National Academy Press, Washington, DC

- Lomas J, Anderson G, Enkin M, Vayda E, Roberts R, MacKinnon B 1988 The role of evidence in the consensus process: Results from a Canadian consensus exercise. Journal of the American Medical Association 259: 3001–5

- Neustadt R E, Fineberg H V 1978 The Swine Flu Affair: Decision-making on a Slippery Disease. US Government Printing Office (No. 017-000-00210-4), Washington, DC

- Perry S, Kalberer J T 1980 The NIH consensus development program and the assessment of health-care technologies: The first two years. New England Journal of Medicine 303: 169–72

- Schoenbaum S C, McNeil B J, Kavet J 1976 The Swine-influenza decision. New England Journal of Medicine 295: 759–65

- Smith M L, Glass G V 1977 Meta-analysis of psychotherapy outcome studies. American Psychologist 32: 752–60

- Vang J 1986 The consensus development conference and the European experience. International Journal of Technology Assessment in Health Care 2: 65–76

- Wortman P M, Smyth J M, Langenbrunner J C, Yeaton W H 1998 Consensus among experts and research synthesis: A comparison of methods. International Journal of Technology Assessment in Health Care 14: 109–22

- Wortman P M, Sechrest L 1988 Do consensus conferences work? A process evaluation of the NIH Consensus Development Program. Journal of Health Politics, Policy, and Law 13: 469–98