Sample Quasi-Experimental Designs Research Paper. Browse other research paper examples and check the list of research paper topics for more inspiration. If you need a religion research paper written according to all the academic standards, you can always turn to our experienced writers for help. This is how your paper can get an A! Feel free to contact our research paper writing service for professional assistance. We offer high-quality assignments for reasonable rates.

The theory of quasi-experimentation addresses one of the great unanswered questions in the social and behavioral sciences: how can researchers make a valid causal inference when units cannot be randomly assigned to conditions? Like all experiments, quasi-experiments deliberately manipulate presumed causes to discover their effects. However, quasi-experiments are also defined by what they do not do: the researcher does not assign units to conditions randomly. Instead, quasi-experiments use a combination of design features, practical logic, and statistical analysis to show that the presumed cause is likely to be responsible for the observed effect, and other causes are not. The term nonrandomized experiment is synonymous with quasi-experiment; and the terms observational study and nonexperimental design often include quasi-experiments as a subset. This research paper discusses the need for quasi-experimentation, describes the kinds of designs that fall into this class of methods, reviews the intellectual and practical history of these designs, and notes important current developments.

Academic Writing, Editing, Proofreading, And Problem Solving Services

Get 10% OFF with 24START discount code

1. The Need For Quasi-Experimentation

Given the desirable properties of randomized experiments, one might question why quasi-experiments are needed. When properly implemented, randomized experiments yield unbiased estimates of treatment effects, accompanied by known probabilities of error in identifying effect size. Quasi-experimental designs do not have these properties. Yet quasi-experiments are necessary in the arsenal of science because it is not always possible to randomize. Ethical constraints may preclude withholding treatment from needy people based on chance, those who administer treatment may refuse to honor randomization, or questions about program effects may arise after a treatment was already implemented so that randomization is impossible. Consequently, the use of quasi-experimental designs is frequent and inevitable in practice.

2. Kinds Of Quasi-Experimental Designs

The range of quasi-experimental designs is large, including but not limited to: (a) Nonequivalent control group designs in which the outcomes of two or more treatment or comparison conditions are studied but the experimenter does not control assignment to conditions; (b) Interrupted time series designs in which many consecutive observations over time (prototypically 100) are available on an outcome, and treatment is introduced in the midst of those observations to demonstrate its impact on the outcome through a discontinuity in the time series after treatment; (c) Regression discontinuity designs in which the experimenter uses a cutoff score on a measured variable to determine eligibility for treatment, and an effect is observed if the regression line (of the assignment variable on outcome) for the treatment group is discontinuous from that of the comparison group at the cutoff score; (d) Single-case designs in which one participant is repeatedly observed over time (usually on fewer occasions than in time series) while the scheduling and dose of treatment are manipulated to demonstrate that treatment controls outcome.

In the preceding designs, treatment is manipulated, and outcome is then observed. Two other classes of designs are sometimes included as quasi-experiments, even though the presumed cause is not manipulated (and often not even manipulable) prior to observing the outcome. In (e) case–control designs, a group with an outcome of interest is compared to a group without that outcome to see if they differ retrospectively in exposure to possible causes in the past; and in (f ) correlational designs, observations on possible treatments and outcomes are observed simultaneously, often with a survey, to see if they are related. Because these designs do not ensure that cause precedes effect, as it must logically do, they usually yield more equivocal causal inferences.

3. The History Of Quasi-Experimental Designs

Quasi-experimental designs have an even longer history than randomized experiments. For example, around 1,850 epidemiologists used case–control methods to identify contaminated water supplies as the cause of cholera in London (Schlesselman 1982), and in 1898, Triplett used a nonequivalent control group design to show that the presence of audience and competitors improved the performance of bicyclists. In fact, nearly all experiments conducted prior to Fisher’s work were quasi-experiments.

However, it was not until 1963 that the term quasi-experiment was coined by Campbell and Stanley (1963) to describe this class of designs. Campbell and his colleagues (Cook and Campbell 1979, Shadish et al. in press) extended the theory and practice of these designs in three ways. First, they described a large number of these designs, including variations of the designs described above. For example, some quasi-experimental designs are inherently longitudinal (e.g., time series, single case designs), observing participants over time, but other designs can be made longitudinal by adding more observations before or after treatment. Similarly, more than one treatment or control group can be used, and the designs can be combined, as when adding a nonequivalent control group to a time series.

Second, Campbell developed a method to evaluate the quality of causal inferences resulting from quasi-experimental designs—a validity typology that was elaborated in Cook and Campbell (1979). The typology includes four validity types and threats to validity for each type. Threats are common reasons why researchers may be wrong about the causal inferences they draw. Statistical conclusion validity concerns inferences about whether and how much presumed cause and effect co-vary; examples of threats to statistical conclusion validity include low statistical power, violated assumptions of statistical tests, and inaccurate effect size estimates. Internal validity concerns inferences that observed co-variation is due to the presumed treatment causing the presumed outcome; examples include history (extraneous events that could also cause the effect), maturation (natural growth processes that could cause an observed change), and selection (differences between groups before treatment that may cause differences after treatment). Construct validity concerns inferences about higher-order constructs that research operations represent; threats include experimenter expectancy effects whereby participants react to what they believe the experimenter wants to observe rather than to the intended treatment, and mono-operation bias in which researchers use only one measure that reflects a construct imperfectly or incorrectly. External validity concerns inferences about generalizing a causal relationship over variations in units, treatments, observations, settings, and times; threats include interactions of the treatment with other features of the design that produce unique effects that would not otherwise be observed.

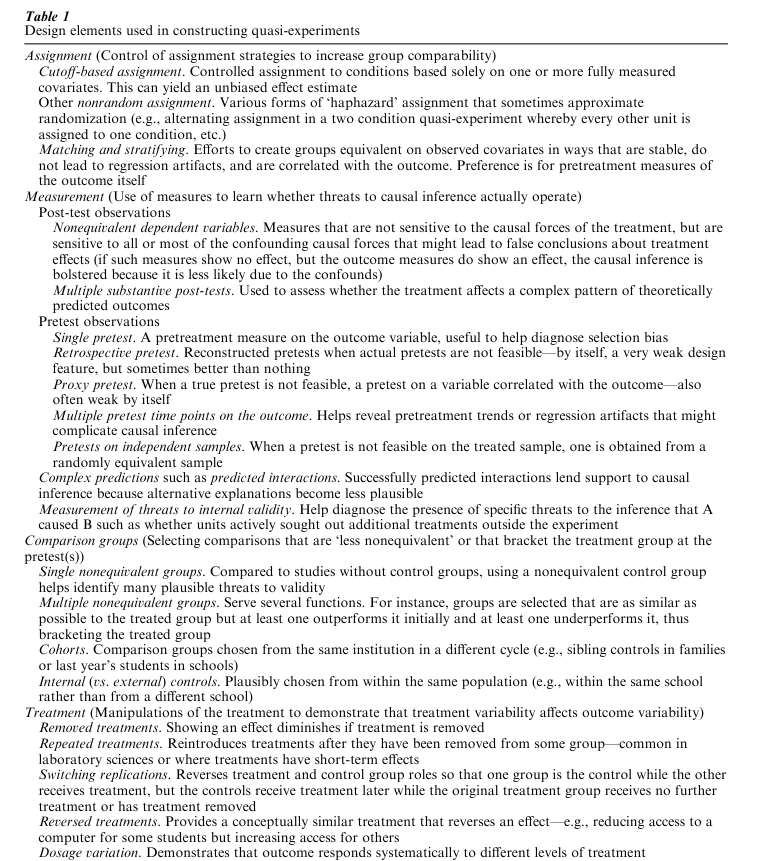

Third, Campbell’s theory emphasized addressing threats to validity using design features—things that a researcher can manipulate to prevent a threat from occurring or to diagnose its presence and potential impact on study results (see Table 1). For example, suppose maturation (normal development) is an anticipated threat to validity because it could cause a pretest–post-test change like that attributed to the treatment. The inclusion of several consecutive pretests before treatment can indicate whether the rate of maturation before treatment is similar to the rate of change from during and after treatment. If it is similar, maturation is a threat. All quasi-experiments are combinations of these design features, thoughtfully chosen to diagnose or rule out threats to validity in a particular context. Conversely, Campbell was skeptical about the more difficult task of trying to adjust threats statistically after they have already occurred. The reason is that statistical adjustments require making assumptions, the validity of which are usually impossible to test, and some of which are dubious (e.g., that the selection model is known fully, or that the functional form of errors is known).

Other scholars during this time were also interested in causal inferences in quasi-experiments, such as Cochran (1965) in statistics, Heckman (1979) in economics, and Hill (1953) in epidemiology. However, Campbell’s work was unique for its extensive emphasis on design rather than statistical analysis, for its theory of how to evaluate causal inferences, and for its sustained development of quasi-experimental theory and method over four decades. Both the theory and the methods he outlined were widely adopted in practice during the last half of the twentieth century, and his terms like internal and external validity became so much a part of the scientific lexicon that today they are often used without reference to Campbell.

4. Contemporary Research About Quasi-Experimental Design

4.1 Statistics And Quasi-Experimental Design

Although work on the statistical analysis of quasi-experimental designs deserves separate treatment, several contemporary developments deserve mention here. One is the work of statisticians such as Paul Holland, Paul Rosenbaum, and Donald Rubin on statistical models for quasi-experimental designs (e.g., Rosenbaum 1995). They emphasize the need to measure what would have happened to treatment participants without treatment (the counterfactual), and focus on statistics that can improve estimates of the counterfactual without randomization. A central method uses propensity scores, a predicted probability of group membership obtained from logistic regression of actual group membership on predictors of outcome or of how participants got into treatment. Matching, stratifying, or co-varying on the propensity score can balance nonequivalent groups on those predictors, but they cannot balance groups for unobserved variables, so hidden bias may remain. Hence these statisticians have developed sensitivity analyses to measure how much hidden bias would be necessary to change an effect in important ways. Both propensity scores and sensitivity analysis are promising developments warranting wider exploration in quasi-experimental designs.

A second statistical development has been pursued mostly by economists, especially James Heckman and his colleagues, called selection bias modeling (Winship and Mare 1992). The aim is to remove hidden bias in effect estimates from quasi-experiments by modeling the selection process. In principle the statistical models are exciting, but in practice they have been less successful. A series of studies in the 1980s and 1990s found that effect estimates from selection bias models did not match results from randomized experiments. Economists responded with various adjustments to these models, and proposed tests for their appropriate application, but so far results remain discouraging. Most recently, some economists have improved results by combining selection bias models with propensity scores. Although selection bias models cannot yet be recommended for widespread adoption, this topic continues to develop rapidly and serious scholars must attend to it. For example, other economists have developed useful econometrically based sensitivity analyses. Along with the incorporation of propensity scores, this may promise a future convergence in statistical and econometric literatures.

A third development is the use of structural equation modeling (SEM) to study causal relationships in quasi-experiments, but this effort has also been only partly successful (Bollen 1989). The capacity of SEM to model latent variables can sometimes reduce problems of bias caused by unreliability of measurement, but its capacity to generate unbiased effect estimates is hamstrung by the same lack of knowledge of selection that thwarts selection bias models.

4.2 The Empirical Program Of Quasi-Experimental Design

Many features of quasi-experimentation pertain to matters of empirical fact that cannot be resolved by statistical theory or logical analysis. For these features, a theory of quasi-experimental design benefits from empirical research about these facts. Shadish (2000) has presented an extended discussion of what such an empirical program of quasi-experimentation might look like, including studies of questions like the following:

(a) Can quasi-experiments yield accurate effect estimates, and if so, under what conditions?

(b) Which threats to validity actually occur in practice (e.g., pretest sensitization, experimenter expectancy effects), and if so, under what conditions?

(c) Do the design features in Table 1 improve causal inference when applied to quasi-experimental design, and if so, under what conditions?

Some of this empirical research has already been conducted (see Shadish 2000, for examples). The methodologies used to investigate such questions are eclectic, including case studies, surveys, literature reviews (quantitative and qualitative), and experiments themselves. Until very recently, however, these studies have generally not been systematically used to critique and improve quasi-experimental theory.

5. Conclusion

Three important factors have converged at the end of the twentieth century to create the conditions under which the development of better quasi-experimentation may be possible. First, over 30 years of practical experience with quasi-experimental designs have provided a database from which we can conduct empirical studies of the theory. Second, after decades of focus on randomized designs, statisticians and economists have turned their attention to improving quasi-experimental designs. Third, the computer revolution provided both theorists and practitioners with increased capacity to invent and use more sophisticated and computationally intense methods for improving quasi-experiments. Each in their own way, these three factors have taken us several steps closer to answering that great unanswered question with which this research paper began.

Bibliography:

- Bollen K A 1989 Structural Equations with Latent Variables. Wiley, New York

- Campbell D T, Stanley J C C 1963/1966 Experimental and Quasi-experimental Designs for Research. Rand-McNally, Chicago

- Cochran W G 1965 The planning of observational studies in human populations. Journal of the Royal Statistical Society, Series A General 128: 234–66

- Cook T D, Campbell D T 1979 Quasi-experimentation: Design and Analysis Issues for Field Settings. Rand-McNally, Chicago

- Heckman J J 1979 Sample selection bias as a specification error. Econometrica 47: 153–61

- Hill A B 1953 Observation and experiment. The New England Journal of Medicine 248: 995–1001

- Rosenbaum P R 1995 Observational Studies. Springer-Verlag, New York

- Schlesselman J J 1982 Case–Control Studies: Design, Conduct, Analysis. Oxford University Press, New York

- Shadish W R 2000 The empirical program of quasi-experimentation. In: Bickman L (ed.) Validity and Social Experimentation: Donald Campbell’s Legacy. Sage Publications, Thousand Oaks, CA

- Shadish W R, Cook T D, Campbell D T in press Experimental and Quasi-experimental Designs for Generalized Causal Inference. Houghton-Mifflin, Boston

- Triplett N 1898 The dynamogenic factors in pace-making and competition. American Journal of Psychology 9: 507–33

- Winship C, Mare R D 1992 Models for sample selection bias. Annual Review of Sociology 18: 327–50