Sample Logic and Linguistics Research Paper. Browse other research paper examples and check the list of research paper topics for more inspiration. If you need a research paper written according to all the academic standards, you can always turn to our experienced writers for help. This is how your paper can get an A! Feel free to contact our research paper writing service for professional assistance. We offer high-quality assignments for reasonable rates.

Although the founding fathers of modern logic showed some interest in the analysis of meaning in natural language, solid links between the two disciplines of logic and linguistics had not been established before the middle of the twentieth century. In the late 1950s logical techniques were first applied to the analysis of grammars as theories of linguistic competence. These applications rapidly developed into the field of mathematical linguistics and later became a central part of theoretical computer science. They will be briefly addressed in the second part of this research paper. The tightest connections between logic and linguistics, however, grew out of the (re-) discovery of logical analysis as a tool of natural language semantics, starting with the advent of Montague Grammar in the early Seventies. The role of logic in the analysis of meaning forms the subject of the first part of this research paper.

Academic Writing, Editing, Proofreading, And Problem Solving Services

Get 10% OFF with 24START discount code

1. Logic and Natural Language Semantics

The influence of logic on natural language semantics starts with the key notion of the field: meaning. Following the tradition of analytic philosophy (cf. Ammerman 1965), many linguists take a ‘realistic’ approach to meaning that makes abundant use of logical apparatus. The basic ideas of the logical analysis of meaning will be explained in Sect. 1.1. Sect.1.2 is concerned with some more specific relations between languages of formal logic and natural languages such as English. (See Barwise and Etchemendy 1990 and Gamut 1991 for introductions to modern logic from a semantic perspective.)

1.1 The Logical Approach to Meaning

A primary goal of semantic theory is to describe the sense relations that competent speakers perceive between expressions of a language (cf. Lyons 1968, Chap. 10; Larson and Segal 1995, Chap. 1). Thus, e.g., knowledge of the following facts about English phrases is part of native speakers’ semantic competence: (a) brown paper bag is more specific than, or hyponymous to, paper bag; (b) blonde eye-doctor and fair-haired ophthalmologist are synonymous in that they necessarily apply to the same persons; (c) Mary owns a dog and Nobody owns a dog are incompatible with each other. Many sense relations can be expressed in terms of entailments between sentences: (a) holds because This is a brown paper bag entails This is a paper bag; (b) holds because This is a blonde eye-doctor and This is a fair-haired ophthalmologist entail each other; (c) holds because Nobody owns a dog entails It is not the case that Mary owns a dog. A theory that correctly predicts the entailment relations holding between the sentences of a particular language may therefore be expected to go a long way towards accurately describing its sense relations in general.

In modern logic, the notion of entailment has been successfully formalized by means of truth conditions: statement A entails statement B if, and only if, any way of making A true is also a way of making B true. Ways of making statements true are called models, which is why the enterprise of describing meaning in terms of truth-conditions is known as model-theoretic semantics. More specifically, a model consists of a set of objects, the model’s Uni erse, plus an Interpretation assigning to every non-logical word a suitable denotation. For instance, the Universe of a specific model might be the set of all numbers, past US presidents, plants, or whatever—any set would do, as long as it is not empty. Similarly, the Interpretation could assign any individual to the name Mary—the number 20, Jimmy Carter, my little green cactus—as, long as that individual is in the model’s Universe; and it could assign any set (even the empty set) to the noun ophthalmologist, as long as that set’s members are all in the model’s universe, and as long as it is the same set as that which the model assigns to eye-doctor. The idea is that any model in which the individual assigned to Mary happens to be a member of the set assigned to ophthalmologist makes the sentence Mary is an ophthalmologist true; and any model in which the set assigned to ophthalmologist happens to be empty, is one that makes the sentence Nobody is an ophthalmologist true.

In general, then, a model assigns each expression of the language a denotation, thereby deciding the truth and falsity of each (declarative) sentence. In fact, the denotation of a sentence is identified with its truth value: the number 1 if the sentence is true, and the number 0 otherwise. Using this numerical convention and writing [X] ͫ for the denotation of an expression X according to a model m , a statement A thus turns out to entail B just in case, for any model m , [A] ͫ≤ [B] ͫwhenever A’s truth value A according to any model is 1, then so is B’s truth value B according to the same m.

Traditionally, the statements made true by models are logical formulae. In linguistic semantics, there are two ways of adapting models to the interpretation of natural language: either natural language expressions are translated into logical notation (indirect interpretation); or models directly assign denotations to them (direct interpretation). On either approach, complex expressions, i.e., anything longer than one word, are usually interpreted following the Principle of Compositionality (Janssen 1997): the translation or the denotation of a complex expression consisting of more than one word [like a sentence] is obtained by combining the translations or, respectively, the denotations of its immediate parts [like subject and predicate]. As a consequence, the denotations models assign to (the translations of ) natural language expressions tend to be rather abstract. If, for instance, the denotation of the noun phrase my car, i.e., a concrete object, is to be obtained by combining the denotations of the noun car, which is a set of concrete objects, and that of the possessive my, the latter would have to be some ‘selection mechanism’ that picks out the unique object belonging to the speaker when combined with a set of objects. More abstract denotations turn out to be needed in a compositional treatment of determiners (like e ery and most), adverbs (like quickly) and prepositions (like between). This is one of the reasons why the most common systems of logic, propositional calculus and first-order logic, do not suffice as the basis of indirect interpretation.

The Principle of Compositionality has an important consequence, known as the Substitution Principle: if the denotation of a complex expression is completely determined by the denotations of its parts, then one may replace any part by something with the same denotation without thereby changing the denotation of the whole. A case in point is the sentence (a) Mary is an ophthalmologist. If Mary happens to be the speaker’s neighbor, then the name Mary and the noun phrase my neighbor have the same denotation. Hence, if (a) is true and thus denotes the truth value 1, then, by the Substitution Principle, (b) My neighbor is an ophthalmologist has the same denotation as (a), i.e., it is also true. While the Substitution Principle appears to make the right prediction in this case, this is not always so. Together with the assumption that sentences denote truth values, the Substitution Principle immediately leads to what, in the philosophy of language, is known as Frege’s Problem (Frege 1892): replacing any sentence by another one with the same truth value ought to preserve denotation, which it does not always do. For instance, the denotation, i.e., the truth value, of (b) Nobody doubts that Mary is an ophthalmologist ought to be preserved when (a) is replaced by a sentence with the same truth value. If Mary is an ophthalmologist, the truth value of (a) is 1 and hence the same as that of (c) Paris is the capital of France. However, (b) might well be false while (b ) Nobody doubts that Paris is the capital of France is true. Clausal embedding under so-called attitude erbs like doubt, know, or tell, thus turns out to be an intensional construction, i.e., one that defies the Substitution Principle.

Various solutions to Frege’s Problem have been proposed. Practically all of them employ the idea that, at least in certain constructions, the contribution that a sentence makes to the denotations of larger expressions is not its truth value but something else. According to a widely accepted view (originating with Frege), it is its informational content: attitude verbs like doubt denote a relation between the individual denoted by the subject and the information conveyed by the embedded clause. A common way of modelling informational content is by means of possible worlds—whence the term possible worlds semantics. (See Barwise 1989 and Devlin 1991 for alternative approaches.) The basic idea is akin to that of measuring the expectance value of an event by the proportion between favorable and unfavorable cases. The cases become possible worlds, the event is the information (as expressed by a sentence) being correct and instead of the quantitative relation between the sets of cases expressed by a ratio, it is the qualitative difference that is emphasized, i.e., which worlds are favorable and which are not. Following this line of thought, the informational content of a sentence, then, can be represented by a function (in the mathematical sense) pairing worlds with truth values. For example, the informational content of (a) above becomes a function assigning to any possible world the truth value 1 if that world is such that, in it, Mary is an ophthalmologist; all other worlds will be assigned the truth value 0. Each possible world is thought of as representing a specific way the world could be, or might have been—to the last detail. So the informational content of a sentence will typically assign 1 to an infinity of possible worlds differing in all sorts of aspects that are irrelevant to the truth of that sentence; and it will typically assign 0 to innumerably many worlds differing in matters that do not affect the falsity of the sentence.

In order to give a full solution to Frege’s Puzzle within possible worlds semantics, the informational content of each sentence must be systematically determined, which is again done compositionally, replacing denotations by intensions. The intensions of sentences are their informational contents, but the notion of intension is more general in that it applies to all kinds of expressions. The intension of any expression is its denotation (or extension) as it varies from world to world, i.e., a function (in the mathematical sense) assigning to each possible world the extension of the expression in that world. As a case in point, one may compare the descriptions (d ) the present capital of France and (e) the largest city in France: in some worlds, including our actual reality, their extensions coincide, but given a different course of history, as represented by a possible world w, Reims, though smaller than Paris, would be the capital of France. So the intensions of (d ) and (e) cannot be the same, because they disagree on w (and on many other worlds). In general, then, expressions with the same denotations may still differ in their intensions and hence, given compositionality, in the contribution they make to the informational contents of the sentences in which they occur.

The method of extension and intension complements, rather than replaces, the model-theoretic approach to meaning. Instead of directly assigning arbitrary denotations to expressions, the models of possible worlds semantics couple each expression with an arbitrary intension, which then, being a function, uniquely determines an extension relative to a given world. Moreover, just like models come with their own Universes, in possible worlds semantics each model is also equipped with its own Logical Space of worlds. This construction originates from modal logic (see below) and offers an alternative to the model-theoretic reconstruction of entailment: a sentence A strictly implies a sentence B if B is true in all worlds in which A is true, i.e., if the intension of B yields the truth value 1 for any possible world for which the intension of A yields 1.

1.2 Logic and Language

The basic tools of logically based linguistic semantics—models and possible worlds—had originally been developed for artificial languages designed for specific purposes, like the analysis of mathematical proofs. These logical formulas can usually be paraphrased in colloquial language; the relation between formula and paraphrase is taught in introductory logic classes. Conversely, many constructions in natural language (passive, relative clauses, …) have long been known to be systematically expressible in languages of logic (cf. Quine 1960); formalization, i.e., the art of translating ordinary sentences into logical notation, is also something to be picked up in an introduction to logic. As a side-effect of the logical approach to natural language semantics, these relations between natural language grammar and logical notation can be explored in a more rigorous way. We will briefly go through some pertinent examples; more detailed casestudies can be looked up in any textbook or handbook on formal semantics (Dowty et al. 1981, Heim and Kratzer 1998, Gabbay and Guenthner 1984, Vol. 4, von Stechow and Wunderlich 1991, van Benthem and ter Meulen 1997).

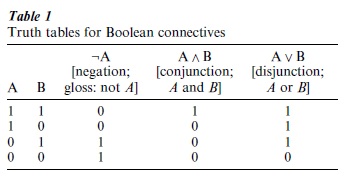

The most obvious connections between logic and language concern the meaning of logical words, i.e., those lexical items that translate the basic inventory of logical formalism: the (‘Boolean’) connectives ‘ ¬,’ ‘ Λ,’

‘ V,’ … translated as [it is] not [the case that], and, or, … and the quantifiers ‘Ɐ’ and ‘ꓱ’ corresponding to e erything and something. The logicians’ version of the former are combinations of truth-values. For example, just like a sentence of the form ‘A and B’ is true just in case both A and B are, ‘Λ’ combines 1 and 1 into 1 and the other pairs of truth values into 0. Similarly, ‘¬’ (glossed as not) turns truth (1) into falsity and vice versa; ‘V’ ( or) combines 0 and 0 into 0 and everything else into 1, etc. These combinations of truth values can thus be given by means of the truth tables in Table 1.

Even though truth tables work fine as first approximations to a semantic analysis of the words not, and and or as they are used in mathematical jargon, there is more to the meaning of these words. To begin with, and and or do not always stand between sentences but may be used to connect almost all kinds of expressions: e ery book and a pen; see and or hear; true or false; etc. However, in many cases these non-sentential uses abbreviate coordinated sentences: John owns e ery book and a pen is short for John owns e ery book and John owns a pen, etc. In fact, non-sentential and and or can frequently be systematically reduced to the relevant truth tables by a type shift, a semantic rule that systematically generalizes combinations of truth values to combinations of other denotations (see Keenan and Faltz 1985, Hendriks 1993). However, even this generalization does not cover all nonsentential uses of these words. In particular, there is no obvious reduction of the group reading of and, as in John and Mary are a married couple, to the truth table of Boolean conjunction. Neither does there seem to be a straightforward reduction of alternative or, as in questions like Shall we lea e or do you want us to stay?, to Boolean disjunction. Certain other semantic aspects that the truth table analysis seems to miss may be captured by pragmatic principles governing efficient use, as opposed to literal meaning (Grice 1989, Levinson 1983). As a case in point, the difference between John lit a cigarette and Mary left the room and Mary left the room and John lit a cigarette may be explained by a general principle of discourse organization, according to which the order of sentences should match the order of the events they describe. More involved pragmatic reasonings have been given to account for the exclusive sense of or, according to which John missed the train, or he decided to sleep late implies that John did not both miss the train and decide to sleep late.

In addition to the Boolean connectives, standard formal languages of logic contain both a uni ersal and an existential quantifier, usually translated as e erything and something and expressing properties of predicates: being universal, i.e., true of everything, and being non-empty, i.e., applying to something. Natural language statements are only expressible in standard (‘first-order’) logic if they can be rephrased by combinations of Boolean connectives and the two quantifiers. A case in point is the sentence (N ) Every boy lo es a girl that can be paraphrased by (L) For e erything it holds that either it is not a boy, or for something it holds that it is a girl and the former loves the latter—which translates into predicate logic as (F ) (Ɐ x) [ ¬ B(x) V (ꓱy) [G(y) Λ L(x,y)]]. As the logician’s paraphrase (L) indicates, the internal structure of quantified statements in natural language is quite different from that in logic. In particular, it usually involves determiners like e ery and a instead of the natural language quantifiers e erything and something. The difference is that a determiner relates two sets, whereas a quantifier makes a statement about one set. Thus, in (N ), e ery relates the set of boys with the set of girl-lovers, whereas the quantifier e erything in (L) as well as the universal quantifier ‘ ’ in (F ) attribute universality to the set of individuals that love girls if they are boys, i.e., the set of those that are either not boys or else love a girl.

Another means of expressing quantification in natural language is by way of certain ad erbs. In particular, in so-called donkey sentences like If a farmer owns a donkey, he ne er beats it, the adverb ne er (on one reading) expresses that the set of donkey-owning farmers that beat their donkeys is empty. The very form of this ad erbial quantification is rather remote from the usual logical formalization; it is not even clear whether in this case, as is usually assumed (see above), the indefinites a farmer and a donkey correspond to existential quantifiers. Recent work in natural language semantics has developed special logical systems to give a more adequate logical account of indefinites in donkey sentences and related constructions. (See Kamp and Reyle 1993, Chierchia 1995.)

Apart from these structural differences in the formulation of quantified statements, there are also differences in expressive power. In particular, not all natural language determiners can be paraphrased by combining Boolean connectives and logical quantifiers. For instance, it can be shown that a statement like Most boys are asleep cannot be expressed in firstorder logic (Barwise and Cooper 1981). Adequate formalizations of such sentences, and hence of natural language in general, again call for more powerful and complex languages of logic.

Logicians have devised various extensions of classical propositional and predicate logic as tools of formalizing reasoning involving specific locutions (cf. Gabbay and Guenthner 1984, Vol. 2). For instance, in addition to Boolean connectives, languages of (propositional) modal logic (Chellas 1993, Bull and Segerberg 1984) contain a necessity operator, usually written as ‘□’; a formula ‘□ϕ ’ [read: it is necessarily so that ϕ] is true in a world w of a given model if the formula itself is true in all worlds that are possible for (or, technically: accessible from) w. Combining negation and necessity, it is also possible to express a corresponding notion of possibility as ‘¬ □ ¬.’ Models of modal logic thus contain domains of possible worlds organized by an accessibility relation. Depending on the precise structure of the latter, modal logic can be used to formalize various uses of modal verbs as in Someone must ha e been here (epistemic must) or You may now lea e the room (deontic may). However, detailed studies of modality have revealed that these formalizations are at best approximations of the meanings of modal verbs in natural language (cf. Kratzer 1991). A similar relation is that between tense logic (Burgess 1984) and the tenses and other means of reference to time in natural language.

Various phenomena have led semanticists to explore alternatives to classical logic with its model-theoretic interpretation assigning one of two truth values to each formula or sentence (cf. Gabbay and Guenthner 1984, Vol. 3). A sentence like (1) John knows that there will be a party tonight seems to imply (P) There will be a party tonight; on the other hand, so does its negation (2) John doesn’t know that there will be a party tonight. But if (1) and (2) both implied (P) in the sense of classical model-theory, then (P) would be true throughout the models in which (1) is true—as well as in those models in which (2) is true, i.e., the ones in which (1) is false. But then (3) would have to be a tautology, i.e., a statement that is true in all models; for any model would have to make (1) true or false. Giving up this last assumption, one enters the realm of (certain) non-classical logics, the simplest among which are threealued logics with an additional truth value U (read: undefined ) which models assign to sentences like (1) if their presupposition (P) is not true in them.

2. The Logic of Grammar

As theories of language structure, grammars themselves have been the objects of logical analysis. The earliest such investigations mark the beginning of generative grammar and were directed to shedding a light on the complexity of human language (see Sect. 2.1). Later approaches focused on the role of grammatical rules in the activity of parsing, which bears some resemblance to the process of deduction in logic; and more recently, grammatical reasoning has been seen as a non-monotonic process, thus reaching beyond the limits of classical logic (see Sect. 2.2).

2.1 Syntactic Complexity

A syntactic theory of a particular language may be seen as a body of theoretical statements describing the structure of grammatically correct phrases. Formal reconstruction reveals that these theories come in different formats and complexities. For instance, a context-free description of a language only contains grammatical rules of the form: ‘If a phrase of category B is immediately followed by a phrase of category C, the result will be a phrase of category A.’ The standard notation for a rule of this form is: ‘A→B+C . Thus the following rule of English grammar is context-free: if a preposition (like about) is followed by a noun phrase (like corrupt politicians), they form a prepositional phrase (about corrupt politicians). In standard notation, this rule reads: ‘PP Prep NP.’ On the other hand, the following would-be rule is not context-free: after a ditransiti e erb (like gi e), two noun phrases (like e ery kid and a penny) form an object-phrase (a kid a penny). Rather, the rule comes under the more complex scheme: ‘If a phrase of category B is immediately followed by a phrase of category C, the result will be a phrase of category A, provided that it follows a phrase of category D’; one just has to put A = object-phrase, B = C = noun phrase, and D = ditransiti e erb. Rules of this form are called contextsensiti e. Using standard notation, they are written as: ‘A →B+C/D_.’

The distinction between context-free and context-sensitive rules—and similar distinctions between other kinds of grammatical rules—can be used to define an abstract complexity ranking between languages, the Chomsky Hierarchy (after Chomsky 1957, 1959). A language is context-free (or, as the case may be, context-sensitive) if it can in principle be described by a body of context-free (or, respectively, context- sensitive) rules. Languages in the sense of this definition are arbitrary sets of sentences, which themselves are arbitrary strings of words. It turns out that, in this abstract mathematical setting (known as mathematical linguistics or formal language theory), though all context-free languages are trivially context-sensitive, the converse is not the case: one can construct languages that are context-sensitive without being describable by any body of context-free rules whatsoever. A famous example is the ‘language’ anbn n whose ‘sentences’ consist of arbitrarily many occurrences of the ‘word’ a followed as many bs, which are again followed by the same number of cs. (A proof and further examples can be found in Martin 1997, Chap. 8.)

In applying these abstract concepts, one must identify a language like English with the set of its grammatical sentences and individuate the latter by their words only—and not, say, by their internal grammatical structure. Given this identification, it is natural to ask for the exact location of human languages in the Chomsky Hierarchy. It may seem that an answer to this question requires complete knowledge of the grammatical sentences of any given language—something that current linguistic research has not attained. However, at least some of these complexity issues can be settled on the basis of partial information about the languages under investigation. In particular, it has been shown that, given the intricate structure of its verb complexes, the Swiss German dialect spoken in Zurich cannot be captured by context-free means (Shieber 1985); a similar result concerning word formation has been obtained for the African language Bambara spoken in Mali (Culy 1985), thus proving that natural languages in general are not context-free.

2.2 Further Issues

The fact that ordinary syntactic notation looks rather different from logical symbolism should not distract from the close connections between the two. For instance, as indicated above, a context-free rule only abbreviates a lengthy statement about the internal structure of phrases; and this statement can be easily (though somewhat clumsily) expressed in standard logical symbolism (first-order predicate logic). This fact becomes particularly important when it comes to the task of parsing phrases of a given language according to a given grammar. As a case in point, parsing the prepositional phrase about Mary on the basis of the above context-free rule boils down to making a connection between the following three pieces of information: (I ) about is a preposition; (II ) Mary is a noun phrase; and (III ) if x is a preposition and y is a noun phrase, then x y (i.e., x followed by y) is a prepositional phrase. (I) and (II) are basic facts about English words to be recorded in a lexicon (which may be thought of as part of a syntactic description); (III) is just a paraphrase of the above context-free rule: ‘PP → Prep + NP.’ (I)–(III ) obviously entail that about Mary is a prepositional phrase, and this is precisely the kind of entailment that can be formalized by means of elementary logic. Hence it should not come as a surprise that there are close relations between parsing strategies and procedures for verifying logical entailments. This connection has been studied in computational linguistics and in categorical grammar under the heading Parsing as Deduction (see Johnson 1991).

The role of logical deduction in grammatical theory is more complex than can be indicated in an article of this length. In particular, certain phenomena like the markedness of grammatical features or the interaction of phonological constraints, seem to call for nonclassical logics that violate the Law of Monotonicity, according to which a conclusion once drawn remains correct when more premises are added.

Bibliography:

- Ammerman R R (ed.) 1965 Classics of Analytic Philosophy. McGraw-Hill, New York

- Barwise 1989 The Situation in Logic. CSLI, Stanford, CA

- Barwise J, Cooper R 1981 Generalized quantifiers and natural language. Linguistics and Philosophy 4: 159–219

- Barwise J, Etchemendy J 1990 The Language of First-Order Logic. CSLI, Stanford, CA

- van Benthem J, ter Meulen A (eds.) 1997 Handbook of Logic and Language. Elsevier, Amsterdam

- Bull R A, Segerberg K 1984 Basic modal logic. In: Gabbay D, Guenthner F (eds.) Handbook of Philosophical Logic. Kluwer, Dordrecht, Vol. 2

- Burgess J 1984 Basic tense logic. In: Gabbay D, Guenthner F (eds.) Handbook of Philosophical Logic. Kluwer, Dordrecht, Vol. 2

- Chellas B 1993 Modal Logic. Cambridge University Press, Cambridge, UK

- Chierchia G 1995 Dynamics of Meaning. University of Chicago Press, Chicago

- Chomsky N 1957 Syntactic Structures. Mouton, The Hague, The Netherlands

- Chomsky N 1959 On certain formal properties of grammars. Information and Control 2: 137–67

- Culy C 1985 The complexity of the vocabulary of Bambara. Linguistics and Philosophy 8: 345–51

- Devlin K 1991 Logic and Information. Cambridge University Press, Cambridge, UK

- Dowty D, Wall R, Peters S 1981 Introduction to Montague Semantics. Kluwer, Dordrecht, The Netherlands

- Frege G 1892 Uber Sinn und Bedeutung. Zeitschrift fur Philosophie und philosophische Kritik 100: 25–50

- Gabbay D, Guenthner F (eds.) 1984 Handbook of Philosophical Logic, 4 vo1s. Kluwer, Dordrecht, The Netherlands

- Gamut L T F 1991 Logic, Language, and Meaning, 2 vols. University of Chicago Press, Chicago

- Grice P 1989 Studies in the Way of Words. Harvard University Press, Cambridge, MA

- Heim I, Kratzer A 1998 Semantics in Generati e Grammar. Blackwell, Oxford, UK

- Hendriks H 1993 Studied flexibility: Categories and types in syntax and semantics. Ph.D. thesis, University of Amsterdam

- Janssen T M V 1997 Compositionality (with an apppendix by B H Partee). In: van Benthem J, ter Meulen A (eds.) Handbook of Logic and Language. Elsevier, Amsterdam

- Johnson M 1991 Deductive parsing: The use of knowledge of language. In: Berwick R C et al. (ed.) Principle-based Parsing: Computation and Psycholinguistics. Kluwer, Dordrecht, The Netherlands

- Kamp H, Reyle U 1993 From Discourse to Logic. Kluwer, Dordrecht, The Netherlands

- Keenan E, Faltz L 1985 Boolean Semantics for Natural Language. Reidel, Dordrecht, The Netherlands

- Kratzer A 1991 Modality. In: von Stechow A, Wunderlich D (eds.) Semantik. Ein internationales Handbuch zeitgenossischer Forschung. [Semantics. An International Handbook of Contemporary Research.]. De Gruyter, Berlin

- Larson R, Segal G 1995 Knowledge of Meaning. MIT Press, Cambridge, MA

- Levinson, S C 1983 Pragmatics. Cambridge University Press, Cambridge, UK

- Lyons J 1968 Introduction to Theoretical Linguistics. Cambridge University Press, Cambridge, UK

- Martin J C 1997 Introduction to Languages and the Theory of Computation, 2nd ed. McGraw-Hill, Boston

- Quine W V 1960 Word and Object. MIT Press, Cambridge, MA

- Shieber S M 1985 Evidence against the context-freeness of natural language. Linguistics and Philosophy 8: 333–43

- Stechow A von, Wunderlich D (eds.) 1991 Semantik. Ein internationales Handbuch zeitgenossischer Forschung. [Semantics. An International Handbook of Contemporary Research.] De Gruyter, Berlin