The term generative syntax refers to research on the syntax of natural languages within the general framework of generative grammar. As in generative grammar, the term ‘generative’ means explicit. Thus, generative syntax differs from much other research on syntax in its emphasis on precise and explicit specification both of general theories and of specific analyses. Most work in generative syntax, like most work in generative grammar, has also been seen as an investigation of I-language (internalized language), the cognitive system underlying the ordinary use of language. Like generative grammar, generative syntax has its origins in Chomsky’s Syntactic Structures (1957). It is often seen as the central component of generative grammar, and it has always been the most prominent one.

1. Origins

Syntax was very much a secondary concern for pre-Chomskian linguists, and their ideas about syntax were largely unformalized. Chomsky’s first concern was to make these ideas explicit and to investigate their adequacy. He argued that they were unsatisfactory in various ways and proposed a richer conception of grammar, known as transformational grammar (TG).

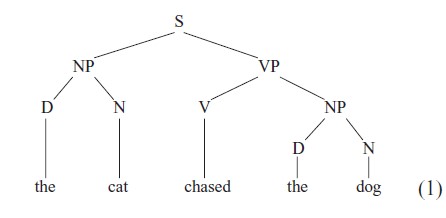

Most pre-Chomskian work on syntax implicitly assumed that words can be classified and that they combine into phrases which can also be classified. In other words, it assumed that sentences have a constituent structure (or more than one if they are structurally ambiguous). Constituent structures are commonly represented by tree diagrams like (1):

This tells us what categories the words in the sentence belong to and what phrases the sentence contains. Chomsky pointed out that constituent structures could be characterized by phrase structure (PS) rules. A PS rule has the form in (2) and the interpretation in (3).

$C_0 \rightarrow C_1\ C_2\ \ldots\ C_n$ (2)

A $C_0$ can consist of a $C_1$, followed by a $C_2$, followed by … followed by a $C_n$. (3)
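Rules of the form (2) can be stated directly as data. The following is a minimal sketch, not a grammar from the literature: the category labels S, NP, VP, Det, N, and V, the three rules, and the four-word lexicon are all hypothetical choices for illustration. A naive top-down parser uses the rules to recover the constituent structure of a sentence as labelled bracketing.

```python
from typing import Dict, List, Tuple

# Hypothetical toy PS rules, each of the form C0 -> C1 C2 ... Cn.
RULES: Dict[str, List[List[str]]] = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"]],
}

# Hypothetical lexicon assigning each word to a lexical category.
LEXICON: Dict[str, str] = {"the": "Det", "cat": "N", "dog": "N", "chased": "V"}


def parse(cat: str, words: List[str], i: int) -> List[Tuple[tuple, int]]:
    """Return every (tree, next_position) analysing words from position i as a `cat`."""
    results: List[Tuple[tuple, int]] = []
    # A lexical category matches a single word.
    if i < len(words) and LEXICON.get(words[i]) == cat:
        results.append(((cat, words[i]), i + 1))
    # A phrasal category is expanded with each of its rules in turn.
    for rhs in RULES.get(cat, []):
        partial: List[Tuple[tuple, int]] = [((), i)]
        for daughter in rhs:
            partial = [
                (kids + (sub,), k)
                for kids, j in partial
                for sub, k in parse(daughter, words, j)
            ]
        results.extend(((cat,) + kids, j) for kids, j in partial)
    return results


def constituent_structures(sentence: str) -> List[tuple]:
    """All trees rooted in S that cover the whole sentence."""
    words = sentence.split()
    return [tree for tree, end in parse("S", words, 0) if end == len(words)]


def bracket(tree: tuple) -> str:
    """Render a tree as labelled bracketing, e.g. [NP [Det the] [N cat]]."""
    cat, *rest = tree
    if len(rest) == 1 and isinstance(rest[0], str):
        return f"[{cat} {rest[0]}]"
    return f"[{cat} " + " ".join(bracket(t) for t in rest) + "]"


print(bracket(constituent_structures("the cat chased the dog")[0]))
# [S [NP [Det the] [N cat]] [VP [V chased] [NP [Det the] [N dog]]]]
```

The parser returns every analysis, so a structurally ambiguous sentence would yield more than one tree. It is deliberately naive: a left-recursive rule such as NP → NP PP would send it into an infinite loop, which is one reason practical parsers use chart-based algorithms instead.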

Chomsky argued that grammars consisting just of PS rules could not provide an illuminating account of various syntactic phenomena. He proposed instead a richer conception of syntactic structure in which some constituents are introduced into the structure somewhere other than their superficial position and then moved to their superficial position. For example, he argued that the subject of a passive sentence such as (4) originates in object position.

Kim was arrested. (4)

This provides an explanation for the fact that ‘arrested’ is acceptable here without an object although it normally requires one, as the ungrammaticality of (5) shows.

* The policeman has arrested. (5)

It also provides an explanation for the fact that ‘Kim’ is understood in the same way in (4) as in (6), i.e., as the person undergoing the action.

They have arrested Kim. (6)

Phenomena like these led Chomsky to propose that sentences have an underlying constituent structure characterized by PS rules and that the superficial constituent structure is derived from this by various transformational rules. Thus, for most versions of TG, the full syntactic structure of a sentence is a sequence of constituent structures. The underlying structure has been called deep structure or D-structure and the most superficial structure, surface or S-structure.
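The idea that a derivation is a sequence of structures, each derived from the previous one, can be sketched for the passive (4). This is a deliberate simplification: structures are flattened to lists of (category, word) leaves rather than full trees, the empty subject position and the trace marker "t" are conventions assumed here, and `move` is a hypothetical helper rather than any published formulation of a transformation.

```python
from typing import List, Tuple

# Each structure is flattened to a list of (category, word) leaves;
# "" marks an empty position and "t" marks a trace.
Structure = List[Tuple[str, str]]


def move(structure: Structure, source: int, target: int) -> Structure:
    """Move the item at `source` into the empty position `target`, leaving a trace."""
    if structure[target][1] != "":
        raise ValueError("the target position must be empty")
    new = list(structure)  # earlier structures in the derivation stay unchanged
    category, word = new[source]
    new[target] = (category, word)
    new[source] = (category, "t")
    return new


def spell_out(structure: Structure) -> str:
    """Pronounce a structure: empty positions and traces are silent."""
    return " ".join(word for _, word in structure if word not in ("", "t"))


# Hypothetical underlying structure for (4): empty subject, 'Kim' as object.
underlying: Structure = [("NP", ""), ("Aux", "was"), ("V", "arrested"), ("NP", "Kim")]

# The derivation is a sequence of structures, from underlying to surface.
derivation = [underlying, move(underlying, source=3, target=0)]

for step in derivation:
    print(spell_out(step))
```

Because `move` copies rather than mutates, every earlier structure survives, which mirrors the view that the full syntactic structure is the whole sequence and not just its last member.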

Since 1957 a variety of different theories have been developed within generative syntax and they have been applied to many different languages.

2. Data

Generative analyses make complex predictions about what is and what is not grammatical, i.e., in accord with the speaker’s I-language. The most efficient way of investigating these predictions is through the judgments or intuitions of native speakers. Hence, generative syntax has always been based mainly on intuitions. Of course, intuitions have to be treated with caution. The fact that a speaker finds some sentence unacceptable does not necessarily mean that it is ungrammatical for her. A speaker might find (7) unacceptable because it is false, and might find (8) unacceptable because it is misleading. (It is natural initially to assume incorrectly that ‘her’ forms a constituent with ‘contributions to the fund.’)

Three fours are thirteen. (7)

Without her contributions to the fund would be quite inadequate. (8)

However, if a sentence is unacceptable and there is no other explanation for the unacceptability, it is reasonable to assume that it is ungrammatical.

None of this implies that other kinds of data, e.g., corpora of naturally occurring speech or writing, cannot be useful. In fact, generative syntacticians have often used corpus data. Moreover, there is no alternative to corpus data if the speakers are dead. Hence, generative work on dead languages is inevitably based on a corpus supplemented by intelligent guesses about meanings. Corpus data has also played a central role in generative work on the early stages of first language acquisition.

The emphasis on intuitions has encouraged researchers to work on their own language. In the early years of generative syntax, most researchers were native speakers of English, and as a result English received more attention than any other language. However, as Newmeyer (1986, p. 40) emphasizes, generative syntax was never limited to English. Moreover, languages other than English have been increasingly prominent since about 1980 as a result of the growing numbers of generative syntacticians who are native speakers of other languages. Work in generative syntax often focuses on a single language, but work that compares and contrasts a pair of related languages is also quite common, and some work deals with a broad range of languages.

3. Structures

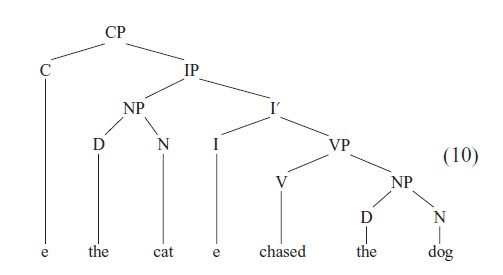

As has been noted, most versions of TG assume that the full syntactic structure of a sentence is a sequence of constituent structures. Different versions of TG differ in how complex these constituent structures are. Typically, since the early 1980s, they have been much more complex than (1), largely because of the presence of various phonologically empty elements. These include traces, empty categories ‘left behind’ by movement processes. Thus, (4) will have a trace in object position left behind by movement of the NP ‘Kim.’ Empty elements also include empty functional categories. Functional categories include C (complementizer) and I (inflection), exemplified by ‘that’ and ‘may’ in (9), respectively.

I think that the cat may chase the dog. (9)

It is standardly assumed that a simple sentence contains empty members of these categories. Thus, instead of (1), one might have something like (10):

A variety of functional categories, which are commonly empty, have been assumed in recent TG work.

The assumption that the syntactic structure of a sentence is a sequence of constituent structures has been largely abandoned within the recent minimalist version of TG (Chomsky 1995, Radford 1997). Within this framework, movement processes apply within a constituent before it is combined with another constituent. In (11), for example, ‘Kim’ is moved to subject position in the subordinate clause before this clause is combined with the verb ‘think.’

I think Kim was arrested. (11)

Hence, there is no level of structure after all processes of combination have applied and before any movement processes apply. In other words, there is no level of D-structure.
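The minimalist ordering described above can be sketched with two hypothetical helpers: `merge`, which combines two objects into a labelled constituent, and `move`, which re-merges a subpart at the root and leaves a trace. The labels VP, I', and IP and the binary tuple encoding are assumptions for illustration. The point of the sketch is the order of operations for (11): movement applies inside the subordinate clause before that clause is merged with ‘think,’ so no stage has all Merge operations completed before any movement, which is why there is no D-structure.

```python
from typing import Union

# A syntactic object is either a word or a labelled pair (label, left, right).
Node = Union[str, tuple]


def merge(label: str, left: Node, right: Node) -> tuple:
    """Combine two syntactic objects into a new labelled constituent."""
    return (label, left, right)


def substitute(node: Node, old: Node, new: Node) -> Node:
    """Copy `node`, replacing any subpart equal to `old` with `new`."""
    if node == old:
        return new
    if isinstance(node, tuple):
        label, left, right = node
        return (label, substitute(left, old, new), substitute(right, old, new))
    return node


def move(label: str, tree: tuple, target: Node) -> tuple:
    """Re-merge `target` at the root of `tree`, leaving the trace 't' behind."""
    return merge(label, target, substitute(tree, target, "t"))


def words(node: Node) -> list:
    """Left-to-right pronounced words (traces are silent)."""
    if isinstance(node, str):
        return [] if node == "t" else [node]
    _, left, right = node
    return words(left) + words(right)


# Build the subordinate clause of (11) and apply movement inside it first,
vp = merge("VP", "arrested", "Kim")
clause = move("IP", merge("I'", "was", vp), "Kim")
# and only then merge the finished clause with 'think'.
sentence = merge("IP", "I", merge("VP", "think", clause))

print(words(sentence))  # ['I', 'think', 'Kim', 'was', 'arrested']
```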

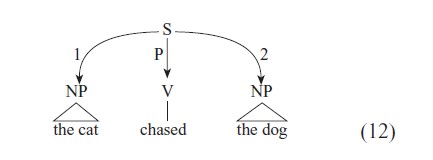

Much work in generative syntax has argued for different sorts of structures. Standard constituent structures do not incorporate labels like subject and object. It is assumed within TG that these notions can be defined in structural terms. Assuming structures like (10), one might define subject as an NP that is an immediate constituent of IP and object as an NP that is an immediate constituent of VP. Proponents of Relational Grammar (RG) argued in the early 1970s that such functional notions should be primitives and that constituent structure trees should be replaced by relational networks like (12), where 1 and 2 stand for subject and object, respectively, and P stands for predicate:

In early work in RG, the structure of a sentence is a sequence of such relational networks and the order of constituents is only significant in the most superficial network. In more recent work, the structure of a sentence is a single complex network and order is determined by its most superficial properties. (For discussion, see Blake 1990.)

The idea that certain functional notions should be primitives is also a feature of Head-driven Phrase Structure Grammar (HPSG) (see Pollard and Sag 1994). Here the structure of a sentence is a single, quite simple constituent structure enriched with information about whether a constituent is a head (the constituent which determines the identity of the larger expression: a noun in a noun phrase, a verb in a verb phrase, etc.), a subject, a complement, etc. (Object is not a primitive in HPSG.) A somewhat different position is found in Lexical-Functional Grammar (LFG). Here the full structure of a sentence consists of a fairly conventional constituent structure and a functional structure, in which constituents are identified as subjects, objects, etc. Functional structures provide a way of capturing the similarities between superficially rather different languages. For example, the following sentences from English, Welsh, and Turkish, which have rather different constituent structures because subject, verb, and object are ordered differently, could have essentially the same functional structure:

The cat saw the dog. (13)

Gwelodd y gath y ci (Welsh) (14)

saw the cat the dog

‘The cat saw the dog.’

Kedi köpeğ-i gör-dü (Turkish) (15)

cat dog-ACC see-PAST

‘The cat saw the dog.’

See Bresnan (2001) for discussion.

An important question about the various forms of generative syntax is: what sorts of categories do they assume? Chomsky (1965, 1970) argued that various syntactic phenomena require the assumption that syntactic categories are complex entities made up of smaller elements normally known as features. This position has been generally accepted since then. However, different approaches differ in how complex their categories are. Categories are particularly complex within HPSG, where, as has been noted, the structure of a sentence is a single quite simple constituent structure. In contrast, they appear to be relatively simple within recent TG, which assumes much more complex structures.
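One way to see why feature decomposition matters is that a single statement can then refer to a whole class of categories. The sketch below assumes the familiar [±N, ±V] analysis of the four major lexical categories associated with Chomsky's work of this period; the frameworks discussed here use much richer feature systems, and the helper `natural_class` is hypothetical.

```python
from typing import Dict, Set

# The [±N, ±V] decomposition of the four major lexical categories.
CATEGORIES: Dict[str, Dict[str, bool]] = {
    "N": {"N": True, "V": False},
    "V": {"N": False, "V": True},
    "A": {"N": True, "V": True},
    "P": {"N": False, "V": False},
}


def natural_class(**spec: bool) -> Set[str]:
    """Return the categories whose features match every value in `spec`."""
    return {
        cat
        for cat, feats in CATEGORIES.items()
        if all(feats[f] == v for f, v in spec.items())
    }


print(sorted(natural_class(N=True)))   # ['A', 'N']
print(sorted(natural_class(V=False)))  # ['N', 'P']
```

With atomic labels, a generalization covering nouns and adjectives would have to list both; with features it can be stated once over [+N].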

4. Rules And Principles

Like any scientific research, work within generative syntax is concerned to capture whatever generalizations can be found in the data. A consequence of this is that broad general statements are preferred to statements of more limited scope.

In the 1960s and early 1970s, TG assumed a variety of different PS and transformational rules. More recently, the various transformations have been replaced by a single rule known as Move Alpha or, in recent work, just Move. This is essentially a license to move anything anywhere. It interacts with various conditions on movement and on the results of movement to allow just the right movement processes. Particularly important in recent work have been certain economy conditions, which prefer simpler to more complex derivations. In much the same way, the various PS rules have been replaced by a few general principles interacting with the categorial makeup of lexical items to give the observed variety of basic, premovement structures. In the minimalist version of TG, it is assumed that a single rule of Merge is operative here. A similar position is found in much work in HPSG, in which it is assumed that the variety of syntactic structures is the product of a small number of rules and principles interacting with the properties of lexical items.

Within TG, the move away from large numbers of PS and transformational rules has been associated with a denial that constructions as traditionally understood are of any theoretical significance. Thus, Chomsky remarks that ‘[t]he notion of construction, in the traditional sense, effectively disappears; it is perhaps useful for descriptive taxonomy but has no theoretical status’ (1995, p. 25). However, since the late 1980s a number of syntacticians have argued that it is impossible to characterize the full range of syntactic structures with just broad general principles and individual lexical items, and that it is necessary to recognize constructions with certain properties of their own after all. For example, Sag (1997) argues that data such as the following require the recognition of a relative clause construction with properties of its own which derive neither from broad general principles nor from individual lexical items:

someone on whom I relied (16a)

someone who I relied on (16b)

someone on whom to rely (16c)

*someone who to rely on (16d)

(See Kay and Fillmore (1999) and Culicover and Jackendoff (1999) for similar arguments.)

Different approaches to syntax have taken different views about the nature of rules of syntax. Much work in TG, especially in the minimalist framework, has treated most rules as procedures for constructing well-formed structures. In contrast, RG, HPSG, and LFG have interpreted rules as constraints to which well-formed structures must conform. Consequently, these approaches are often described as constraint-based.

A further important issue is: how do grammatical constraints interact? The traditional view is that syntactic structures must conform to all relevant constraints. In contrast, proponents of what is known as Optimality Theory (OT) have argued that constraints are ranked and that a structure may violate a constraint if that is the only way to satisfy a higher-ranked constraint. This view of constraints has been very influential within phonology but has also had some influence within syntax. It is combined with TG assumptions in, e.g., Grimshaw (1997). Grimshaw argues, for example, that there is a constraint which rules out the movement of constituents. However, a higher-ranked constraint requires movement of ‘who’ to sentence-initial position from its underlying position as object of ‘to’ in the following:

Who did you talk to? (17)

The OT view of constraints has also been combined with LFG assumptions e.g. in Bresnan (2000).
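Strict ranking of the kind OT assumes can be sketched as a lexicographic comparison of violation counts. The constraint names WhInitial (a wh-phrase must be clause-initial) and NoMove (one violation per moved element) are hypothetical stand-ins, not Grimshaw's (1997) actual constraints, and the candidate set and counts for (17) are simplified assumptions.

```python
from typing import Dict, List

# Hypothetical violation profiles: constraint name -> number of violations.
candidates: Dict[str, Dict[str, int]] = {
    # 'who' and 'did' both move: two violations of NoMove.
    "Who did you talk to?": {"WhInitial": 0, "NoMove": 2},
    # Nothing moves, but the wh-phrase is not clause-initial.
    "You talked to who?": {"WhInitial": 1, "NoMove": 0},
}


def optimal(cands: Dict[str, Dict[str, int]], ranking: List[str]) -> str:
    """Pick the winner under strict domination.

    Violation counts are listed from the highest- to the lowest-ranked
    constraint and compared as tuples, i.e. lexicographically, so a single
    violation of a higher constraint outweighs any number of lower ones.
    """
    return min(cands, key=lambda c: tuple(cands[c].get(k, 0) for k in ranking))


print(optimal(candidates, ["WhInitial", "NoMove"]))  # WhInitial >> NoMove
print(optimal(candidates, ["NoMove", "WhInitial"]))  # NoMove >> WhInitial
```

Reversing the ranking makes the in-situ candidate win, which is one way OT expresses cross-linguistic variation: languages are taken to differ in how they rank the same constraints.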

5. Syntax And Other Components Of Grammar

A central question for any theory of syntax is: how does the syntactic component of a grammar relate to other components? What is the relation between syntax on the one hand and semantics, morphology, phonology, and the lexicon on the other?

In the late 1960s and early 1970s, the relation between syntactic and semantic representations was a major topic of debate. Proponents of Generative Semantics argued that the deepest level of syntactic representation is a semantic representation. Proponents of Interpretive Semantics rejected this view and argued that semantic representation is derived by rules referring to both deep and surface structure. There are questions as to how different the two positions really were in the early stages of the debate. In the later stages they were clearly distinguished as generative semantics sought to bring more and more linguistic phenomena within the scope of grammar. Generative semantics collapsed in the mid 1970s, partly because of a realization that some of the phenomena it had brought within the scope of grammar could be handled more satisfactorily within pragmatics. (See Newmeyer 1986, Chaps. 4 and 5 for discussion.) It is now generally accepted within generative syntax that syntactic and semantic representations are rather different, and that there is no one-to-one correspondence between them. The following examples are relevant here:

Have you seen a unicorn? (18a)

Never have I seen a unicorn. (18b)

Kim has seen a unicorn and so has Lee. (18c)

Had I seen a unicorn, I would have told you. (18d)

All these sentences exemplify what might be called the English auxiliary + subject construction; the relevant sequences are ‘have you,’ ‘have I,’ ‘has Lee,’ and ‘Had I.’ They show that this construction has a variety of different semantic interpretations. (18a) contains an interrogative, the kind of sentence that is normally used to ask a question. (18b) and (18c) contain declaratives, the type of sentence normally used to make a statement. Finally, (18d) contains a conditional clause. Here, instead of auxiliary + subject order, one might have ‘if’ and subject + auxiliary order.

Since the mid-1970s, most work in TG has assumed that semantic interpretation is based on a level of logical form (LF) derived from S-structure by various movement processes. However, Jackendoff (1997) develops a detailed critique of this view. For HPSG, general principles provide a semantic representation for all expressions on the basis of the semantic representations of their constituents. For LFG, semantic interpretation is based on functional structure.

There are also important questions about the relation between syntax and morphology. At various times it has been proposed within TG that word stems may be combined with affixes in syntax. For example, it has sometimes been suggested that an English verb stem combines with the past tense suffix ‘-ed’ through a movement process. This view largely fell from favor in the 1990s, and it is now widely accepted, even within TG, that word-formation is confined to the lexicon.

There are also questions about the relation between syntax and phonology. It has sometimes been proposed within TG that certain movement processes apply within the phonological component. For example, Chomsky (1995, p. 368) proposes that verb-second order in German is the result of a rule applying within the phonological component. The following examples illustrate this phenomenon:

Ich las schon letztes Jahr diesen Roman. (19a)

I read already last year this book

Diesen Roman las ich schon letztes Jahr. (19b)

this book read I already last year

Schon letztes Jahr las ich diesen Roman. (19c)

already last year read I this book

‘I read this book already last year.’

Each example has a different constituent in initial position, but all have the verb in second position. It has also been assumed in much work in HPSG that linear order is solely a property of phonology in the sense that it is not words and phrases but only their phonological properties that are ordered.

Questions also arise about the relation between syntax and the lexicon. Within TG a central issue is where the insertion of lexical items into syntactic structures takes place. It has often been assumed that it applies before any movement process. However, it has sometimes been proposed that some lexical insertion may take place after movement. Within HPSG, where lexical categories are quite complex, there are important questions about how generalizations about the categorial makeup of words can be captured. Considerable use has been made of lexical rules deriving lexical entries from lexical entries. More recently, it has been argued that most generalizations can be captured if words are classified in terms of a number of hierarchies of lexical types from which they inherit properties. Thus, ‘chased’ might be classified as both a transitive verb and a past tense verb and combine the properties of these two types (see Koenig 1999).
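Inheritance from a hierarchy of lexical types can be sketched with plain dictionaries. The type names, the feature names HEAD, COMPS, and VFORM (borrowed loosely from HPSG usage), and the helpers below are assumptions for illustration; real HPSG uses typed feature structures and unification, which this flat merge with a conflict check only approximates. The example follows the text: ‘chased’ is classified as both a transitive verb and a past tense verb.

```python
from typing import Dict, List

# Hypothetical type hierarchy: each type lists its supertypes and own constraints.
HIERARCHY: Dict[str, dict] = {
    "verb": {"supertypes": [], "constraints": {"HEAD": "verb"}},
    "transitive-verb": {"supertypes": ["verb"], "constraints": {"COMPS": ("NP",)}},
    "past-verb": {"supertypes": ["verb"], "constraints": {"VFORM": "past"}},
}


def combine(a: dict, b: dict) -> dict:
    """Merge two property sets, failing if they assign conflicting values."""
    for key in a.keys() & b.keys():
        if a[key] != b[key]:
            raise ValueError(f"conflicting values for {key}: {a[key]!r} vs {b[key]!r}")
    return {**a, **b}


def inherited(type_name: str) -> dict:
    """Collect the constraints of a type together with those of all its supertypes."""
    entry = HIERARCHY[type_name]
    props: dict = {}
    for parent in entry["supertypes"]:
        props = combine(props, inherited(parent))
    return combine(props, entry["constraints"])


def lexical_entry(form: str, types: List[str]) -> dict:
    """A word's entry: its form plus everything inherited from each of its types."""
    entry = {"FORM": form}
    for t in types:
        entry = combine(entry, inherited(t))
    return entry


chased = lexical_entry("chased", ["transitive-verb", "past-verb"])
print(chased)
```

Both types inherit HEAD from ‘verb’, and since the values agree the combination succeeds; a word assigned two types with clashing values for the same feature would be rejected.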

There are further issues about the scope of syntax. Should grammars include information about the contexts in which constructions are appropriate? For example, should a grammar include the information that (20), with no overt object following ‘beat,’ is only appropriate in recipes?

Beat until stiff. (20)

It has generally been assumed that such information is something separate interacting with purely grammatical information (see, for example, Culy 1996). However, a number of researchers have argued that it is inseparable from purely grammatical information (see Hudson 1996, Paolillo 2000). Should grammars incorporate statistical information about how likely certain constructions are to occur? For example, should a grammar incorporate the information that (21a) with a clause in subject position is less likely to occur than (21b) with the clause in final position?

That Kim was there is odd. (21a)

It is odd that Kim was there. (21b)

Traditionally, statistical facts about syntactic structures have been seen as a matter of performance, but Abney (1996) argues that such information is part and parcel of grammars.

6. Some Syntactic Phenomena

There are many unresolved issues in generative syntax but a great deal has been learned about both the universal syntactic properties of natural languages and the ways in which they can vary in their syntax.

A major focus of research has been clause structure, and a lot is known about the kinds of clause structure that a language may exhibit and about what kinds of analyses might be appropriate. This includes such matters as the order of subject, verb, and object (see (13)–(15)), the realization of tense, mood, and negation, agreement, and case-marking, and the relation between main and subordinate clauses. German, which has verb-final order in subordinate clauses, is relevant here. The following subordinate clause contrasts with the examples in (19):

dass ich schon letztes Jahr diesen Roman las (22a)

that I already last year this book read

‘that I read this book last year’

There has also been a great deal of research on noun phrases and a lot is known about such matters as the realization of definiteness, the positioning of adjectives (which normally precede the noun in some languages, e.g., English, but normally follow in others, e.g., Welsh), and the positioning of relative clauses (which follow the noun in English and most European languages but precede in Turkish, Japanese, and Korean).

Perhaps the most important focus of research in generative syntax has been the various kinds of non-local dependencies which natural languages exhibit, which were largely unnoticed in pre-generative work. An important example is the dependency between a clause-initial interrogative element like ‘who’ and the associated gap, which is a feature of many languages. A simple example is (23), in which there is a gap in object position.

Who did Kim annoy? (23)

It has been known since the 1960s and especially since Ross (1967) that these dependencies are subject to various constraints. For example, while (23) is fine, (24) with a gap following ‘and’ is ungrammatical.

* Who did Kim annoy Lee and? (24)
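The constraint violated by (24) is of the kind Ross (1967) called the Coordinate Structure Constraint: roughly, nothing may be extracted from just one conjunct of a coordinate structure, although extraction from every conjunct at once (across-the-board extraction) is allowed. A simplified checker is sketched below; the tuple encoding, the "Coord" label, and the gap marker "_" are assumptions, and the trees are far flatter than any real analysis.

```python
# Trees are tuples (label, child, ...); words are strings and "_" marks the gap.


def contains_gap(node) -> bool:
    """True if the gap "_" occurs anywhere inside `node`."""
    if isinstance(node, str):
        return node == "_"
    return any(contains_gap(child) for child in node[1:])


def violates_csc(node) -> bool:
    """Check a simplified Coordinate Structure Constraint.

    A coordination ("Coord") is ill-formed if some but not all conjuncts
    contain the gap; gaps in every conjunct (across-the-board) are allowed.
    """
    if isinstance(node, str):
        return False
    label, *children = node
    if label == "Coord":
        conjuncts = [c for c in children if c != "and"]
        gapped = [contains_gap(c) for c in conjuncts]
        if any(gapped) and not all(gapped):
            return True
    return any(violates_csc(child) for child in children)


# Hypothetical, heavily simplified trees for (23) and (24).
ex23 = ("S", "who", "did", "Kim", ("VP", "annoy", "_"))
ex24 = ("S", "who", "did", "Kim", ("VP", "annoy", ("Coord", "Lee", "and", "_")))
# Across-the-board case: 'Who did Kim annoy and Lee praise?'
atb = ("S", "who", "did", ("Coord", ("S", "Kim", ("VP", "annoy", "_")), "and",
                           ("S", "Lee", ("VP", "praise", "_"))))

print(violates_csc(ex23), violates_csc(ex24), violates_csc(atb))  # False True False
```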

There are important differences between languages in this area. English allows a gap in prepositional object position. Hence both the following are possible:

Who did you talk to? (25a)

To whom did you talk? (25b)

However, most languages do not allow a gap in prepositional object position. The following Polish examples are typical.

* Kim rozmawiałeś z? (Polish) (26a)

whom you-talked with

Z kim rozmawiałeś? (Polish) (26b)

with whom you-talked

In contrast, English and many other languages do not allow a gap in subject position in a subordinate clause introduced by a complementizer. Hence, the following is ungrammatical:

* Who did you say that wrote this book? (27)

However, some languages do allow this. Thus, the following Italian example is grammatical:

Chi hai detto che ha scritto questo libro? (Italian) (28)

who have-you said that has written this book

‘Who did you say wrote this book?’

As the translation makes clear, an English example is grammatical if there is no complementizer. See Roberts (1997, Chap. 4) for discussion of the extensive work on these matters.

Another important dependency is that between a reflexive pronoun such as English ‘himself’ and its antecedent, the expression from which it derives its interpretation. In English, while (29a) is acceptable, (29b) is not.

John criticized himself. (29a)

*John said Mary criticized himself. (29b)

Thus, the antecedent must be quite close to the reflexive. In other languages, however, a reflexive can be further from its antecedent than in English. For example, in the following Japanese sentence, the antecedent of the reflexive ‘zibun’ can be either ‘Ziro’ or ‘Taro’.

Taroo-ga Hanako-ni [Ziroo-ga zibun-o hihan-sita]-to itta. (Japanese) (30)

Taro-NOM Hanako-to [Ziro-NOM self-ACC criticized]-COMP said

‘Taro said to Hanako that Ziro criticized self.’

‘Hanako’ is not a possible antecedent because it is not a subject. For discussion of these matters, see Roberts (1997, Chap. 3).
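The English and Japanese facts can be contrasted by treating antecedent choice as two settings: whether the search extends beyond the reflexive's own clause, and whether only subjects count. This is a hypothetical simplification, not a binding theory from the literature; the `Clause` record and `antecedents` helper are assumptions. For (29b) the only local candidate is ‘Mary,’ and the sketch does not model the gender mismatch with ‘himself’ that rules her out; for (30) it returns ‘Taroo’ and ‘Ziroo’ but not ‘Hanako,’ as the text describes.

```python
from typing import List, NamedTuple


class Clause(NamedTuple):
    subject: str
    others: List[str]  # non-subject NPs in the clause


def antecedents(clauses: List[Clause], long_distance: bool, subject_only: bool) -> List[str]:
    """Candidate antecedents for a reflexive in the innermost clause.

    `clauses` runs from the outermost clause to the one containing the reflexive.
    """
    domain = clauses if long_distance else clauses[-1:]
    found: List[str] = []
    for clause in domain:
        found.append(clause.subject)
        if not subject_only:
            found.extend(clause.others)
    return found


# (29b): John said [Mary criticized himself]
ex29b = [Clause("John", []), Clause("Mary", [])]
# (30): Taroo-ga Hanako-ni [Ziroo-ga zibun-o hihan-sita]-to itta
ex30 = [Clause("Taroo", ["Hanako"]), Clause("Ziroo", [])]

print(antecedents(ex29b, long_distance=False, subject_only=False))  # English-like
print(antecedents(ex30, long_distance=True, subject_only=True))     # zibun-like
```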

Non-local dependencies like these are likely to remain a major focus of research in generative syntax for the foreseeable future.

7. Acquisition And Processing

As has been noted, it is generally accepted within generative work that syntax is concerned with I-language, the cognitive system underlying ordinary use of language. It follows that a variety of data are relevant, for example, facts about acquisition, facts about processing, and facts about pathology.

Acquisition has been a major concern within TG. Since about 1980, it has been argued that the language faculty consists of a set of principles to which all languages conform and a set of parameters which allow a limited amount of variation between languages. For example, it is commonly assumed that there is a parameter distinguishing languages like English, in which interrogative elements appear in initial position, from those like Japanese, in which they appear in the same position as corresponding non-interrogative elements. The following examples illustrate:

John gave the book to Mary. (31a)

Who did John give the book to? (31b)

John-wa Mary-ni sono hon-o agemasi-ta. (Japanese) (32a)

John-TOP Mary-DAT that book-ACC give-PAST

‘John gave the book to Mary.’

John-wa dare-ni sono hon-o agemasi-ta-ka? (Japanese) (32b)

John-TOP who-DAT that book-ACC give-PAST-Q

‘Who did John give the book to?’

Within this framework, language acquisition is largely a matter of setting parameters. A great deal of work on the acquisition of syntax has drawn on these ideas. However, some are skeptical. There are two reasons for this. First, relatively little progress has been made towards a plausible set of parameters even for the central or ‘core’ phenomena of syntax, and languages have a wide range of noncore phenomena, which are also acquired (Culicover 1999). Second, it is clear that setting a parameter is a much more complex matter than it was originally thought to be (Fodor 1998). Nevertheless the idea remains a potentially important one.
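A binary parameter of the kind described for (31) and (32) can be sketched as follows. Both functions are hypothetical: the word lists ignore English do-support and tense, and the trigger in `set_wh_parameter` is far simpler than real parameter setting, which, as noted above, is much more complex than originally thought (Fodor 1998).

```python
from typing import List, Set


def question_order(words: List[str], wh_index: int, wh_fronting: bool) -> List[str]:
    """Linearize a wh-question under a binary wh-fronting parameter."""
    if not wh_fronting:
        return list(words)  # wh-phrase stays in situ
    return [words[wh_index]] + words[:wh_index] + words[wh_index + 1:]


def set_wh_parameter(question: List[str], wh_words: Set[str]) -> bool:
    """Naive trigger: choose 'fronting' if the question begins with a wh-word."""
    return bool(question) and question[0] in wh_words


english = ["John", "gave", "the", "book", "to", "who"]  # do-support not modelled
japanese = ["John-wa", "dare-ni", "sono", "hon-o", "agemasi-ta-ka"]

print(question_order(english, wh_index=5, wh_fronting=True))
print(question_order(japanese, wh_index=1, wh_fronting=False))
print(set_wh_parameter(["who", "did", "John", "give", "the", "book", "to"], {"who"}))
```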

Other frameworks have been more concerned with language use. It has been argued that HPSG receives some support from what is known about human language processing. HPSG is a constraint-based framework, in which all rules and principles are on a par and none takes precedence over any other. Pollard and Sag (1994, pp. 11–12) argue that this permits an explanation for some important features of human language processing. They highlight four features. First, it is highly incremental in the sense that ‘speakers are able to assign partial interpretations to partial utterances.’ Second, it is highly integrative in the sense that ‘information about the world, the context, and the topic at hand is skillfully woven together with linguistic information whenever utterances are successfully decoded.’ Third, ‘… there is no one order in which information is consulted which can be fixed for all language use situations.’ Finally, it consists of various kinds of activity: ‘comprehension, production, translation, playing language games, and the like.’ They argue that these features are unproblematic for constraint-based grammars but pose serious problems for other sorts of grammars.

Facts about acquisition and processing are likely to be of continuing importance for the evaluation of proposals within generative syntax.

Bibliography:

- Abney S 1996 Statistical methods and linguistics. In: Klavans J L, Resnik P (eds.) The Balancing Act: Combining Symbolic and Statistical Approaches to Language. MIT Press, Cambridge, MA, pp. 1–26

- Blake B J 1990 Relational Grammar. Routledge, London

- Bresnan J 2000 Optimal syntax. In: Dekkers J, van der Leeuw F, van de Weijer J (eds.) Optimality Theory: Phonology, Syntax and Acquisition, Oxford University Press, Oxford, UK, pp. 334–85

- Bresnan J 2001 Lexical-Functional Grammar. Blackwell, Oxford, UK

- Chomsky N A 1957 Syntactic Structures. Mouton, The Hague, The Netherlands

- Chomsky N A 1965 Aspects of the Theory of Syntax. MIT Press, Cambridge, MA

- Chomsky N A 1970 Remarks on nominalization. In: Jacobs R, Rosenbaum P S (eds.) Readings in English Transformational Grammar. Ginn, Waltham, MA

- Chomsky N A 1995 The Minimalist Program. MIT Press, Cambridge, MA

- Culicover P W 1999 Syntactic Nuts: Hard Cases, Syntactic Theory and Language Acquisition. Oxford University Press, Oxford, UK

- Culicover P W, Jackendoff R S 1999 The view from the periphery: The English comparative correlative. Linguistic Inquiry 30: 543–71

- Culy C 1996 Null objects in English recipes. Language Variation and Change 8: 91–124

- Fodor J D 1998 Unambiguous triggers. Linguistic Inquiry 29: 1–36

- Grimshaw J 1997 Projection, heads and optimality. Linguistic Inquiry 28: 373–422

- Hudson R A 1996 Sociolinguistics, 2nd edn. Cambridge University Press, Cambridge, UK

- Jackendoff R S 1997 The Architecture of the Language Faculty. MIT Press, Cambridge, MA

- Kay P, Fillmore C 1999 Grammatical constructions and linguistic generalizations: The What’s X doing Y? construction. Language 75: 1–33

- Koenig J-P 1999 Lexical Relations. CSLI Publications, Stanford, CA

- Newmeyer F J 1986 Linguistic Theory in America, 2nd edn. MIT Press, Cambridge, MA

- Paolillo J C 2000 Formalizing formality: An analysis of register variation in Sinhala. Journal of Linguistics 36: 215–59

- Pollard C, Sag I A 1994 Head-driven Phrase Structure Grammar. University of Chicago Press, Chicago, IL

- Radford A 1997 Syntactic Theory and the Structure of English: A Minimalist Approach. Cambridge University Press, Cambridge, UK

- Roberts I 1997 Comparative Syntax. Arnold, London

- Ross J R 1967 Constraints on Variables in Syntax. PhD dissertation, MIT

- Sag I A 1997 English relative clause constructions. Journal of Linguistics 33: 431–83