Sample Lexical Semantics Research Paper.

The ‘core’ areas of linguistics are generally agreed to be: phonology, the study of the sounds and sound systems of language; syntax, the study of grammar; and semantics, the study of linguistic meaning. Words figure in all three areas, but it is in their semantic aspect that they offer the most distinctive field of inquiry. This research paper presents a survey of the topics which form the principal foci of interest within the domain of lexical semantics.

1. Introduction

To the layman, words are the prime bearers of meaning in language, and formulating a message is essentially a matter of choosing the right words. This view is of course an oversimplification, and seriously understates the roles of grammar and of context, but there is more than a germ of truth in it. The branch of linguistics whose domain is the systematic study of word-meanings is lexical semantics. But before proceeding any further, it is necessary, first, to mark off an area of the slippery notion of ‘meaning’ which is appropriate for our purposes, and second, since other people, notably lexicographers, also concern themselves with the meanings of words, something needs to be said about what is distinctive about the activities of lexical semanticists.

If John says to Mary Have you been to the bank and seen the manager? and Mary replies Yes, there is an obvious and intuitively valid sense in which Mary’s reply ‘means’ ‘I have been to the bank and seen the manager.’ Yet it is also fairly obvious that ‘I have been to the bank and seen the manager’ would not be a satisfactory definition of the meaning of the word Yes. It is therefore necessary to make a distinction between the ‘inherent meaning’ of a word and its ‘meaning-in-context.’ (The inherent meaning of Yes might be glossed: ‘The proposition you present to me for confirmation is true.’) A similar point (less dramatic) can be made about The child ran to his mother. Here it is clear that the child refers to a boy; but this, too, is meaning-in-context: the inherent meaning of child is something like ‘immature human being.’ As a first approximation, it can be said that lexical semantics focuses on inherent meanings of words; meanings-in-context are the concern of pragmaticians.

Lexicographers are also interested mainly in the inherent meanings of words. So what is the difference between a lexicographer’s interest in word meaning and that of a lexical semanticist? Basically, this is a matter of theoretical vs. practical concerns, parallel in many ways to the relation between physicist and engineer, or botanist and farmer/gardener. A lexicographer aims to impart the maximum of information useful to the dictionary-user with maximum economy; a lexical semanticist wants to understand the principles underlying lexical semantic phenomena. In an ideal world there would be considerable cross-fertilization between the two fields; in reality, the cross-domain traffic has been somewhat disappointing.

2. Approaches To Word Meaning

There are numerous ways of approaching the study of word meaning, depending on certain basic assumptions one makes about it.

2.1 The Componential/Atomistic Approach

One influential approach, much criticized but constantly reborn, is to think of the meaning of a word as being a more or less complex structure, built up out of combinations of simpler, or more primitive, units of meaning. A simple example would be the analysis of woman as [ADULT] [HUMAN] [FEMALE]. Such ‘componential’ or ‘atomistic’ approaches come in a variety of types, depending on what is claimed regarding the nature of the analysis, the identity and nature of the units, how they are combined, and what aspects of word meaning are to be explained by them.

Some componential analyses (probably the majority) aim at a reduction in the number of combinatorial elements, that is to say, they aim to explain the large number of word meanings in terms of combinations of units drawn from a smaller set, parallel to the way that the phonological forms of all words can be described in terms of combinations from a much smaller set of phonemes. To achieve a reduction, the basic elements must be highly reusable.

Not all analyses are reductive in this sense. One version aims only to distinguish the meaning of a word from that of all other items in the vocabulary, so each contrast potentially creates a new component. An example is the analysis of chair as [FURNITURE] [FOR SITTING] [FOR ONE PERSON] [WITH BACK]. This is certainly distinctive, but there is no guarantee that one will end up with fewer components than lexical items analyzed.
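The contrast between reductive and merely distinctive analyses can be made concrete with a small sketch. It assumes, purely for illustration, that a word meaning can be modeled as an unordered set of component labels. The inventory below (including the entries for filly and colt) is hypothetical and is not taken from any published analysis.

```python
# Toy componential analysis: each word sense is modeled as a set of components.
# The inventory is illustrative only, not a claim about any published analysis.
COMPONENTS = {
    "woman": frozenset({"HUMAN", "ADULT", "FEMALE"}),
    "man": frozenset({"HUMAN", "ADULT", "MALE"}),
    "girl": frozenset({"HUMAN", "NONADULT", "FEMALE"}),
    "boy": frozenset({"HUMAN", "NONADULT", "MALE"}),
    "mare": frozenset({"EQUINE", "ADULT", "FEMALE"}),
    "stallion": frozenset({"EQUINE", "ADULT", "MALE"}),
    "filly": frozenset({"EQUINE", "NONADULT", "FEMALE"}),
    "colt": frozenset({"EQUINE", "NONADULT", "MALE"}),
}

# A purely distinctive analysis of a single item, as in the chair example.
CHAIR_ONLY = {
    "chair": frozenset({"FURNITURE", "FOR SITTING", "FOR ONE PERSON", "WITH BACK"}),
}


def shared(a, b, lexicon=COMPONENTS):
    """Components that two words have in common."""
    return lexicon[a] & lexicon[b]


def contrast(a, b, lexicon=COMPONENTS):
    """Components that distinguish two words from each other."""
    return lexicon[a] ^ lexicon[b]


def is_reductive(lexicon):
    """True if fewer distinct components are used than words analyzed."""
    inventory = set().union(*lexicon.values())
    return len(inventory) < len(lexicon)


print(sorted(shared("woman", "man")))
print(sorted(contrast("woman", "man")))
print(is_reductive(COMPONENTS), is_reductive(CHAIR_ONLY))
```

In this toy lexicon six components describe eight words, so the analysis counts as reductive because the components are reused. The chair analysis on its own needs four components for one word.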

In the earliest versions of componential analysis, the components were the meanings of words, and the aim of the analysis was to extract a basic vocabulary, in terms of which all nonbasic meanings could be expressed. Generally speaking, the features recognized by earlier scholars had no pretensions to universality, and indeed were often avowedly language-specific. Later scholars aimed at uncovering universals of human cognition, a finite ‘alphabet of thought.’ Accessible introductions to componential analysis can be found in Nida (1975) and Wierzbicka (1996).

2.2 Holistic/Contextual Approaches

An atomistic approach to word meaning is predicated on the assumption that the meaning of a word can in principle be specified in isolation, without taking into account the meanings of other words in the language’s vocabulary. Holistic approaches, on the other hand, maintain that words do not have meaning in isolation, but only in relation to the rest of the vocabulary.

A typical holistic approach is that of Lyons (1963, 1968, 1977), who espouses and develops the basic Saussurean notion that the ‘value’ of any linguistic element, whether phonological, syntactic or semantic, is essentially contrastive, that is to say, its identity is constituted by its difference from other elements with which it potentially contrasts. So, for instance, the essence of the meaning of dog is that it is ‘not-cat,’ ‘not-wolf,’ ‘not-horse,’ and so on. An important consequence of this is that it is not possible to learn the meaning of any word in isolation from other members of its contrast set; or, to put it another way, it is not enough to be able, for instance, to assign the label dog to any canine animal: one must also know to withhold the label from nondogs, such as foxes, wolves, and so on. Lyons expounds an extended version of this, making it truly holistic, and defines the sense of a word as its position in a network of sense relations, direct or indirect, with all other items in the vocabulary. This network includes not only contrastive relations like that between dog and cat, but also relations of inclusion like that between animal and dog, and dog and collie.

2.3 Conceptual Approaches

Conceptual approaches locate meaning in the mind, rather than in language or in the extralinguistic world. Much discussion has had to do with the meanings of words in relation to conceptual categories (for general discussion, see Taylor 1989, and Ungerer and Schmid 1996).

The currently dominant view of what natural conceptual categories (concepts) are like is that they cannot in general be defined by any finite set of necessary and sufficient features, but are somewhat indeterminate in nature. One important theory, prototype theory, holds that natural categories are organized around ideal examples (prototypes), and that other items belong to the category to the extent that they resemble the prototype. There is normally no definite cut-off point dividing members from non-members: category boundaries are typically fuzzy, or vague.

A major difference of opinion exists over whether words map directly onto conceptual categories, or whether there is an intermediate level of semantic structure which is purely linguistic in nature. Those, like the cognitive linguists, who argue for direct mapping, claim that there is no theoretical work for a linguistic level of semantics to do: all semantic phenomena can be given a conceptual explanation. Those who believe in an independent linguistic level of semantics argue that the fuzziness of conceptual distinctions contrasts with the sharpness of linguistic distinctions, and indicates a separate level of structure. (Think of the uncertain dividing line between ‘alive’ and ‘dead’ in ‘real life’ in relation to the fact that The rabbit is dead logically entails The rabbit is not alive.) They also point to cases like book, which (it is claimed) has a single sense (at the linguistic level), but corresponds to two different concepts, a concrete physical object (The book fell from the shelf) and an abstract text (It is a very difficult book).

A conceptual approach is not necessarily incompatible with a componential approach. Many prototype theorists characterize categories/concepts in terms of a set of prototype features, which may or may not have the status of semantic primitives. Such features are not of the necessary-and-sufficient sort, however, but have the property that the more of them something has, the better an example of the relevant category it is.
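The idea that prototype features are cumulative rather than necessary and sufficient can be sketched as a simple score. The feature list for the category BIRD and the equal weighting of features are assumptions made only for this illustration. Prototype theorists often weight features differently, and nothing here reproduces a specific published model.

```python
# Hypothetical prototype features for the category BIRD.
# No single feature is treated as necessary or sufficient.
BIRD_PROTOTYPE = {"has_feathers", "has_beak", "flies", "lays_eggs", "sings", "small"}

# Hypothetical feature sets for three candidate members.
EXEMPLARS = {
    "robin": {"has_feathers", "has_beak", "flies", "lays_eggs", "sings", "small"},
    "ostrich": {"has_feathers", "has_beak", "lays_eggs"},
    "bat": {"flies", "small"},
}


def goodness_of_example(features, prototype):
    """Fraction of the prototype features that an item has (0.0 to 1.0)."""
    return len(features & prototype) / len(prototype)


def rank_exemplars(exemplars, prototype):
    """Order items from best to worst example of the category."""
    return sorted(
        exemplars,
        key=lambda name: goodness_of_example(exemplars[name], prototype),
        reverse=True,
    )


for name in rank_exemplars(EXEMPLARS, BIRD_PROTOTYPE):
    print(name, round(goodness_of_example(EXEMPLARS[name], BIRD_PROTOTYPE), 2))
```

The score is graded, so the sketch imposes no cut-off between members and non-members. Any threshold added on top of it would be an extra stipulation, in line with the fuzziness of category boundaries described earlier.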

3. Core Problems Of Lexical Semantics

Certain areas of investigation can be regarded as the defining core of the discipline.

3.1 Possible Words

An important question in lexical semantics is whether there are any restrictions on possible word meanings: is there anything which can be conceived and described, but which could not constitute the meaning of a word? This is a difficult question, and there are a number of ways of approaching it.

It might be thought that there could not possibly be a verb which meant, for instance, ‘to eat popcorn on a Wednesday morning while standing facing west,’ or a noun denoting, collectively, a computer mouse, a used condom, and a map of the world. These are admittedly unlikely, but not logically impossible, provided they can be conceptually unified, and there is no apparent limit to what disparate events, properties, or things can be conceived as a unity in some belief system or other. (The first example above, for instance, could conceivably be part of the ritual practices of an eccentric religious cult.) Of course, prototypically, a noun denotes some spatiotemporally continuous entity, and prototypical events have a similar unity, and such tendencies are an important part of the semantic nature of words.

An apparently universal constraint on word meaning is ‘structural continuity.’ A simple illustration of this will have to suffice, as a precise statement of the constraint is quite complex. Take the phrase a very old man. A word meaning ‘very old,’ or ‘old man,’ or ‘very old man’ would occasion no surprise. But one meaning ‘very—man,’ such that any adjective applied to it would be automatically intensified (e.g., an old veryman = ‘a very old man,’ a poor veryman = ‘a very poor man,’ etc.) is inconceivable.

There are certain language-specific constraints on what can be packaged inside a single word. A famous example is the inability of, for instance, a French verb to simultaneously encode movement and manner information. In the English sentence John ran up the stairs, movement and manner are encoded in ran and direction in up. However, a French translation of this must package the information differently: Jean monta l’escalier en courant. Here, movement and direction are encoded in monta, and manner separately in en courant. The extent of such restrictions is at present insufficiently researched.

3.2 Polysemy

The meanings of words are notoriously affected by context: it is only a slight exaggeration to say that the semantic contribution a word makes is different for every distinct context in which it occurs. But the difference between two contextually-induced readings can range from relatively slight, as in My cousin married a policeman and My cousin married an actress (‘male cousin’ vs. ‘female cousin’), to rather major, as in elastic band and wind band, with perhaps a coat of paint and a coat of many colors as intermediate examples.

A distinction can be made between cases where a word has more than one meaning and different contexts select different meanings, and cases where only one meaning is involved, which can be enriched in different ways in different contexts. The first type of case in general involves ambiguous words. These have a number of distinctive properties. Think of bank in We finally reached the bank. The two readings of bank are in competition, and only one can win: it is not possible to ‘mean’ both readings at once, except when punning. Nor is it possible to remain uncommitted with respect to the two meanings: with cousin, for instance, whether the cousin in question is male or female can be left open, but with bank one meaning or the other must be intended. In the case of cousin it can be said that there is a general meaning which covers both of the more specific possibilities; with bank there is no such ‘superordinate’ meaning. Contextual enrichment without polysemy may involve a type-to-subtype specification as with cousin, or fly like a bird and sting like a bee, where reference is to a prototypical instance of the mentioned category (i.e., not an ostrich or a drone), or it may be whole-to-part, as in My car has a puncture.

The distinct readings of ambiguous words may be totally unrelated, as with the two senses of bank and band: this is called homonymy. Lexicographers normally treat such cases as separate words that happen to have the same phonetic form. In other cases, there may be an intelligible semantic relation between the readings, in which case we speak of polysemy: lexicographers treat polysemy as involving a single word (with one main entry) which has two or more meanings. It is important to realize that there is no sharp dichotomy between ‘related’ and ‘unrelated’ meanings, but a continuous scale of relatedness. Some relationships are intuitively clear, such as the metaphorical relation between branch of a tree and branch of a bank, or the metonymic relation between the old oak tree and It’s made of solid oak. Speakers are less sure whether they would mark try in try a jacket on and try someone for murder as related. Lexicographers often use common etymological origin as a criterion for polysemy, but this may run counter to current speakers’ intuitions (usually more important for linguistics). Famous cases are ear of corn and human ear, which do not have a common origin, but are nonetheless felt by most people to be related, and a glass of port and the ship entered port, which have a common origin, but no relationship accessible to unaided intuition.

Some polysemic relationships are ‘one-off’; others are highly recurrent, in which case the term regular polysemy may be used. Many metonymic relations are at least to some extent recurrent: for instance, the ‘tree’/‘wood’ example mentioned above, or the difference between Mary planted some roses and Mary picked some roses. Metaphorical relations are typically not recurrent. For instance, both head and foot are often metaphorically extended, but the fact that one occurs is no guarantee that the other does. Hence, for instance, foot of mountain occurs but not head of mountain, head of school but not foot of school, and so on.

Where there is a set of polysemous readings, it is often possible to identify one which is an intuitively plausible source from which the others can be derived by extension. For instance, given the three meanings of position in: The house is in a wonderful position, John holds an important position in the company and What is your position on the euro?, it is fairly clear that only the first is a plausible metaphorical source for the other two. This is commonly called the literal meaning. (For a general discussion of polysemy and related matters, see Cruse 2000, Chap. 6).

3.3 Specifying Word Meanings

How (or whether) one goes about the enterprise of specifying the content of a single sense depends very much on one’s favored theoretical approach, and also to some extent on the purpose of the exercise. (Only a few linguistic semanticists have made anything beyond very small-scale attempts to provide full descriptions of real lexical senses.) A componentialist will seek to provide a finite set of necessary and sufficient semantic features; most meaning descriptions by cognitive linguists are also in the form of a set of features, but these are often weighted for importance, and are not necessary and sufficient. For any sort of holist, the full specification of a sense will necessarily be somewhat cumbersome. Lexicographers usually try (with varying degrees of success) to provide a watertight definition of the necessary-and-sufficient variety. This is one area where there is little in the way of consensus.

3.4 Sense Relations

The observation that the vocabularies of natural languages are at least partially structured has led to a lively interest in the underlying structuring principles—in particular, recurrent relations between meanings (usually referred to as ‘sense relations’ or ‘lexical relations’). These are of two main sorts, paradigmatic and syntagmatic. It is the former that have been the most studied. (Cruse 1986 discusses sense relations in some detail.)

3.4.1 Paradigmatic Relations. Paradigmatic sense relations represent sets of choices at particular points in the structure of sentences, and reflect the way a language articulates extra-linguistic reality. A limited number of sense-relations have figured prominently in discussions; they are: synonymy, hyponymy, meronymy, incompatibility, and oppositeness.

(a) Synonymy. Synonymy, or sameness of meaning, is not as simple as it appears. Absolute synonymy, which we can define as having the same degree of normality in all contexts, is extremely rare (if it occurs). Propositional synonyms, such as begin and commence, which have the same logical properties, are more common, but would not serve to fill a ‘Dictionary of Synonyms.’ Lexicographers’ synonyms, such as brave and heroic, have an adequate intuitive basis, but are hard to pin down: they may be used contrastively (He was brave, but I wouldn’t say he was heroic) but their differences must be of a minor nature.

(b) Hyponymy. Hyponymy is one of two important relations of inclusion. Examples are: alligator: reptile, aunt: relative, truck: vehicle. In the last pair, truck is the hyponym and vehicle the superordinate: the hyponym denotes a subclass of the class denoted by the superordinate (alternatively, the meaning of the superordinate is included in that of the hyponym). Notice that the terms hyponym and superordinate are relational, not absolute: dog is a hyponym of animal but a superordinate of collie.

(c) Meronymy. The second important inclusion relation is meronymy, the ‘part–whole’ relation, exemplified by finger: hand. (Hyponymy and meronymy must not be confused: a finger is not a type of hand, nor is a dog a part of an animal.) True parts are interestingly different from ‘mere’ pieces, in that they prototypically have clear boundaries, a characteristic form recognizable in other wholes of the same type, and a determinate function with respect to their wholes. (Think of the difference between the parts of a teapot—handle, spout, lid, and so on—and what typically results if a china teapot is dropped onto a hard floor.)

(d) Incompatibility. Incompatibility is related closely to hyponymy, being the typical relation between sister hyponyms of the same superordinate, like dog, cat (animal); apple, peach (fruit). Incompatibility is not simply difference of meaning (sometimes termed ‘heteronymy’): incompatibles have a relation of mutual exclusion. For instance, dog: cat and dog: pet both represent ‘difference of meaning.’ But while it follows logically from the fact that something is a dog, that it is not a cat, hence dog and cat are incompatibles, it does not follow that it is not a pet, so dog and pet are not incompatibles. There is a parallel relationship between sister parts of a whole (co-meronymy): sister incompatibles denote classes with no members in common; co-meronyms denote sister parts that do not overlap physically (like upper arm, lower arm, and hand).

(e) Opposites (Antonyms). Opposites can be briefly characterized as binary incompatibles, that is, incompatibles that necessarily occur in two-member sets. Several types can be recognized.

(i) Complementaries divide some domain into two, with no ‘sitting on the fence.’ For instance, organisms must be either dead or alive; those that are not dead are alive, and those that are not alive are dead. Other examples are open: shut, true: false, stationary: moving.

(ii) Gradable contraries (in some systems, simply antonyms) differ from complementaries, first, in that they are gradable, that is, they occur normally with intensifiers such as very, slightly, a bit, etc., and in the comparative degree, and second, they are contraries, in that denying one term of a pair does not commit one to the other. A typical example is the pair long: short.

(iii) Reversives are opposites which denote counterdirectional processes or actions; examples are rise: fall, open: close, dress: undress.

(iv) Converses present the same situation or event from different viewpoints: The painting is above the photograph/The photograph is below the painting; John sold the car to Bill/Bill bought the car from John.
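The class-based characterizations of hyponymy, incompatibility, and complementarity given in (b), (d), and (e)(i) can be sketched with sets of individuals standing in for the classes that words denote. The individuals and their groupings below are invented for illustration. A single toy model like this only illustrates the definitions, whereas the sense relations themselves concern what follows logically in every situation.

```python
# Toy extensions: each word denotes a set of (invented) individuals.
EXTENSION = {
    "animal": {"rex", "fido", "tom", "dobbin"},
    "dog": {"rex", "fido"},
    "cat": {"tom"},
    "pet": {"fido", "tom"},
    "alive": {"rex", "fido", "tom"},
    "dead": {"dobbin"},
}


def is_hyponym(word, superordinate):
    """`word` denotes a proper subclass of the class denoted by `superordinate`."""
    return EXTENSION[word] < EXTENSION[superordinate]


def are_incompatible(a, b):
    """The two classes have no members in common."""
    return EXTENSION[a].isdisjoint(EXTENSION[b])


def are_complementary(a, b, domain):
    """Incompatibles that together exhaust the domain, with nothing left over."""
    return are_incompatible(a, b) and EXTENSION[a] | EXTENSION[b] == EXTENSION[domain]


print(is_hyponym("dog", "animal"))                   # dog: animal
print(are_incompatible("dog", "cat"))                # sister hyponyms
print(are_incompatible("dog", "pet"))                # merely different in meaning
print(are_complementary("dead", "alive", "animal"))  # divides the domain in two
```

In this model dog and cat exclude each other but do not exhaust the animals, so they are incompatibles without being complementaries. Dog and pet overlap, so they differ in meaning without being incompatible.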

3.4.2 Syntagmatic Relations. Syntagmatic relations hold between items in the same larger unit, such as phrase, clause or sentence. They represent not choice but restrictions on choice, and control discourse cohesiveness. The effects of putting two words together in a grammatical construction can be described as ‘normality’ (The cat purred), ‘clash’ (The cat barked/broke), or ‘pleonasm’ (He kicked it with his foot). Syntagmatic relations are usually described in terms of ‘selectional restrictions,’ which specify the conditions for the normality of a sequence. So, for instance, for a pregnant X to be normal, X must denote a female mammal; for Mary drank the Y to be normal, Y must denote a liquid, and so on.
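Selectional restrictions of the kind just described can be sketched as a feature check. The noun entries and feature labels are hypothetical, and the sketch covers only the contrast between normality and clash. Pleonasm would need a separate test for redundant information and is not modeled here.

```python
# Hypothetical semantic features for a few nouns.
NOUN_FEATURES = {
    "cow": {"animate", "mammal", "female"},
    "bull": {"animate", "mammal", "male"},
    "milk": {"liquid"},
    "sandwich": {"solid"},
}

# Selectional restrictions: features the accompanying noun must carry
# for the combination to count as normal.
RESTRICTIONS = {
    "pregnant": {"female", "mammal"},  # a pregnant X
    "drank": {"liquid"},               # Mary drank the Y
}


def is_normal(selector, noun):
    """True if the noun has every feature that the selector requires."""
    return RESTRICTIONS[selector] <= NOUN_FEATURES[noun]


def describe(selector, noun):
    """Label a combination as 'normality' or 'clash'."""
    return "normality" if is_normal(selector, noun) else "clash"


print(describe("pregnant", "cow"))
print(describe("pregnant", "bull"))
print(describe("drank", "milk"))
print(describe("drank", "sandwich"))
```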

3.5 Lexical Structures

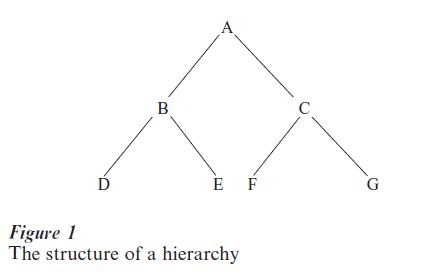

Sense relations form the basic structuring principles of various lexical configurational patterns in the vocabulary of a language. An important type is the lexical hierarchy, structured on the following pattern (which in principle can continue indefinitely in a downward direction, and can have indefinitely many items at each level except the first; see Fig. 1).

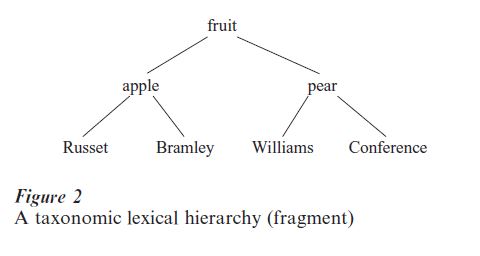

The two main types of lexical hierarchy are the classificatory, or taxonomic, variety, and the part–whole, or meronomic type. An important feature of a hierarchy is that, as we move down the structure, the branches proliferate, but never converge. In a taxonomic hierarchy, the successive levels represent successive subdivisions into mutually exclusive classes of items: each item is related to items higher up the hierarchy by the relation of hyponymy (as shown by the lines), and to items at the same level by incompatibility (see Fig. 2).

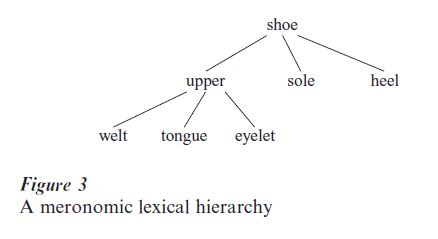

The structuring relations for a meronomic hierarchy are meronymy (vertical) and co-meronymy (horizontal) (see Fig. 3).
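The defining property of a lexical hierarchy, that branches proliferate downward but never converge, amounts to saying that no item has more than one immediate parent. The sketch below assumes a hierarchy is given as a list of (parent, child) pairs. The two small edge lists are illustrative and do not reproduce the figures.

```python
# Hierarchies as lists of (parent, child) pairs; both examples are illustrative.
TAXONOMY = [("animal", "dog"), ("animal", "cat"), ("dog", "collie")]
MERONOMY = [("arm", "hand"), ("hand", "finger")]


def has_convergence(edges):
    """True if some item has two different immediate parents."""
    parent_of = {}
    for parent, child in edges:
        # setdefault records the first parent seen and returns the stored one.
        if parent_of.setdefault(child, parent) != parent:
            return True
    return False


def ancestors(item, edges):
    """Items above `item` in the hierarchy, nearest first."""
    parent_of = {child: parent for parent, child in edges}
    chain = []
    while item in parent_of:
        item = parent_of[item]
        chain.append(item)
    return chain


print(has_convergence(TAXONOMY))
print(has_convergence(TAXONOMY + [("pet", "dog")]))
print(ancestors("collie", TAXONOMY))
print(ancestors("finger", MERONOMY))
```

Adding pet as a second parent of dog makes the branches converge, so the resulting structure is no longer a well-formed hierarchy in the sense described above.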

Other common lexical structures are nonbranching chains of various sorts:

Monday–Tuesday–Wednesday (etc.)

puddle–pond–lake–sea–ocean

freezing–cold–cool–lukewarm–warm–hot–scorching

millimetre–centimetre–metre–kilometre

second–minute–hour–day–week–month–year–decade–century–millennium

and ‘grids’ or correlated series:

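| Animal | Meat |
| --- | --- |
| sheep | mutton |
| pig | pork |
| cow | beef |
| deer | venison |

| Female | Male |
| --- | --- |
| mare | stallion |
| ewe | ram |
| doe | buck |
| lioness | lion |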

3.6 Historical Change Of Meaning

Words are being used continually in ways which transform, stretch, broaden, or narrow their established senses. This flexibility is one of the strengths of language, enabling it to adapt and remain an effective tool of communication through ever changing circumstances. If a word is used repeatedly with a particular extended meaning, this may well, over time, become established, either as a new polysemous sense, or it may be incorporated as a result of a shift in the boundaries of the old sense. Many new senses can be found in the domain of personal computers: for example, mouse, scroll, icon, alias, tool, save, back-up. An example of changing boundaries is the incorporation of the legs of tables, chairs, etc., into the general category of LEGS, without any intuition of figurative use. Most speakers regard the current use of pet in cyberpet/electronic pet as metaphorical extension, but in time this may well go the way of leg. The creation of a new polysemic sense is a sudden event (although the process of establishment is gradual). Some diachronic changes, however, are gradual. If one accepts a prototype account of meaning, then the prototype of, say, car has changed gradually over the last century or so, as cars themselves have changed.

4. General Questions

4.1 Dictionary vs. Encyclopedia

Many theorists (but by no means all) draw a distinction between an (ideal) dictionary of a language, and an encyclopedia. The former purports to contain information about the meaning of a word, while the latter incorporates general knowledge about the word’s referents. The general assumption is that only the former is truly linguistic in nature; the latter concerns not semantics, but pragmatics. The distinction has some intuitive support: on the basis of our experience with dictionaries and encyclopedias, we have fairly clear expectations of what to find in the two types of publication. However, making the step from the likely contents of familiar publications to the contents of a ‘lexical entry,’ either in a theoretical model of word-semantics, or in a psychologically real ‘mental lexicon,’ is far from straightforward, and it has in fact proved difficult to provide a sound theoretical basis for the distinction. One proposal is that only truth-conditional features of meaning should form part of the lexical entry—that is, features which determine whether a statement containing the word in question is true or false of a particular situation.

However, this assumes that word meanings can in general be specified in terms of truth-conditional features, an assumption which is challenged by prototype theorists. Also, it is not intuitively clear why, for instance, ‘can bark’ should not be part of the meaning of ‘dog,’ even though X is a dog does not entail X barks. However, a claim that everything we know about dogs is legitimately part of the meaning of dog would also be intuitively suspect. One solution for a conceptualist is to say that the dictionary lexical entry simply indicates which concept a word maps onto, but does not specify the contents of the concept.

4.2 Lexical Semantics And Syntax

An important and controversial topic is the relation between the meaning of a word and its grammatical properties. Historically, a wide spectrum of views can be noted, from proponents of the autonomy of syntax, to those who claim that all syntactic properties are semantically motivated. Probably the majority of linguists would take up some sort of intermediate position on this question: recent work by, for instance, Levin (see, e.g., Levin and Rappaport Hovav 1992), reveals a considerable amount of semantic determination of syntactic patterning; on the other hand, examples of differing syntactic behavior like the following are hard to see as semantically motivated:

I’ve finished the job.

I’ve completed the job.

I’ve finished.

*I’ve completed.

A typical example of semantically motivated syntactic behavior is the following alternation:

John broke the stick.

The stick broke.

Verbs which show this behavior must (a) denote a change of state in their transitive object/intransitive subject (John saw the dog/*The dog saw), and (b) must not encode any information other than the change of state, such as the nature or manner of the action performed by the causing agent (John washed the car/*The car washed).
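The two conditions on the alternation can be written as a simple predicate over verb entries. The `Verb` record and the feature values assigned to break, see, and wash are assumptions chosen to match the judgments in the examples above. They are not a worked-out lexical analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verb:
    """Hypothetical lexical entry with the two properties the conditions mention."""
    lemma: str
    change_of_state: bool  # condition (a): the object/subject undergoes a change of state
    encodes_manner: bool   # condition (b): also encodes how the agent acts


def allows_alternation(verb):
    """True if the verb meets both conditions for the transitive/intransitive pair."""
    return verb.change_of_state and not verb.encodes_manner


# Feature values are assumed for illustration only.
VERBS = [
    Verb("break", change_of_state=True, encodes_manner=False),
    Verb("see", change_of_state=False, encodes_manner=False),
    Verb("wash", change_of_state=True, encodes_manner=True),
]

for verb in VERBS:
    print(verb.lemma, allows_alternation(verb))
```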

5. Future Prospects

There is no doubt that considerable advances in the understanding of lexical semantics have been made in recent decades. But there nonetheless remain areas where understanding is far from complete, and in which future progress can be anticipated. One such area is in a way the most fundamental of all, namely, what is it that distinguishes lexical semantics from phrasal or clausal semantics? An understanding of this issue might be expected to lead to a proper typology of word meanings, understood both interlingually and intralingually. This is currently in its infancy. Another vital area is that of polysemy, or more generally, variation in context. The varieties and mechanisms of contextual variation have received a great deal of attention; what is less well understood is how and why a reading crystallizes as a new, autonomous sense, with independent relational, combinatorial, and truth-conditional properties. Mention should also be made of the eternal problem of how to specify what an individual sense of a word ‘means.’

The study of lexical semantics is less ‘autonomous’ than that of, say, phonology, or syntax. Especially if one takes a cognitive linguistic view, there is no clear dividing line between lexical semantics and the study of conceptual categories within cognitive psychology; and advances in one field tend to have repercussions in the other. Advances in certain areas of psycholinguistics can also be expected to throw light on word meaning. For instance, there is currently a developing body of work on the time course of semantic activation. The meaning of a word is not activated all at once when a word is recognized, and the details of the activation process cannot fail to have relevance to our understanding of the internal structure of a word’s meaning.

One area of practical concern, which is poised for a major take-off, but is currently held back by lexical semantic problems, is the automatic processing of natural language by computational systems. The main problems are the complexity of natural meanings and their contextual variability. The work currently being done in this area can be expected to spill over, not only into general lexicography, but also into the linguistic study of word meanings.

Bibliography:

- Cruse D A 1986 Lexical Semantics. Cambridge University Press, Cambridge, UK

- Cruse D A 2000 Meaning in Language: An Introduction to Semantics and Pragmatics. Oxford University Press, Oxford, UK

- Levin B, Rappaport Hovav M 1992 Wiping the slate clean: A lexical semantic exploration. In: Levin B, Pinker S (eds.) Lexical and Conceptual Semantics. Blackwell, Oxford, UK, pp. 123–52

- Lyons J 1963 Structural Semantics. Cambridge University Press, Cambridge, UK

- Lyons J 1968 Introduction to Theoretical Linguistics. Cambridge University Press, Cambridge, UK

- Lyons J 1977 Semantics. Cambridge University Press, Cambridge, UK

- Nida E A 1975 Componential Analysis of Meaning: An Introduction to Semantic Structures. Mouton, The Hague, The Netherlands

- Taylor J R 1989 Linguistic Categorization: Prototypes in Linguistic Theory. Clarendon Press, Oxford, UK

- Ungerer F, Schmid H-J 1996 An Introduction to Cognitive Linguistics. Longman, London, UK

- Wierzbicka A 1996 Semantics: Primes and Universals. Oxford University Press, Oxford, UK