
This research paper begins with an introduction to performance assessments, which mirror the performance that is of interest, require students to construct or perform an original response, and use predetermined criteria to evaluate students’ work. It then discusses the different uses of performance assessments: in large-scale testing, as a vehicle for educational reform and for making important decisions about individual students, schools, or systems, and in the classroom, as an instructional tool for teachers. A discussion of the nature of performance assessments follows, together with topics related to their design and associated scoring methods. The paper ends by considering how to ensure the appropriateness and validity of the inferences we draw from performance assessment results.



Introduction

Educational reform in the 1980s was based on research suggesting that too many students knew how to repeat facts and concepts but were unable to apply them to solve meaningful problems. Because assessment plays an integral role in instruction, assessment, and not only instruction, became a target of change. Proponents of the reform argued that assessments needed to better reflect students’ competencies in applying their knowledge and skills to solve real tasks. Advances in the 1980s in the study of both student cognition and measurement also prompted many to think differently about how students process and reason with information and, as a result, about how assessments can be designed to capture meaningful aspects of students’ thinking and learning. Advocates of curriculum reform additionally regarded performance assessments as useful vehicles for initiating changes in instruction and student learning. The assumption was that incorporating performance assessments into large-scale assessments would signal important goals for educators and students to pursue.

Performance assessments are well suited to measuring students’ problem-solving and reasoning skills and their ability to apply knowledge to solve meaningful problems. Performance assessments are intended to “emulate the context or conditions in which the intended knowledge or skills are actually applied” (American Educational Research Association [AERA], American Psychological Association [APA], & National Council on Measurement in Education [NCME], 1999, p. 137). The unique characteristic of a performance assessment is the close similarity between the performance on the assessment and the performance that is of interest (Kane, Crooks, & Cohen, 1999). Consequently, performance assessments provide more direct measures of student achievement and learning than multiple-choice tests (Frederiksen & Collins, 1989). Direct assessments of writing that require students to write persuasive letters to the local newspaper or the school board provide instances of the tasks we would like students to perform. Most performance assessments require a student to perform an activity, such as conducting a laboratory experiment, or to construct an original product, such as a report based on the experiment. In the former case, the process of solving the task is of interest; in the latter, the product is of interest.

Typically, performance assessments assess higher-level thinking and problem-solving skills, afford multiple solutions or strategies, and require the application of knowledge or skills in relatively novel real-life situations or contexts (Baron, 1991; Stiggins, 1987). Performance assessments such as conducting laboratory experiments, performing musical and dance routines, writing an informational article, and providing explanations for mathematical solutions may also give students opportunities to self-reflect, collaborate with peers, and choose the task they will complete (Baker, O’Neil, & Linn, 1993; Baron, 1991). Providing opportunities for self-reflection, such as asking students to explain in writing the thinking process they used to solve a task, allows students to evaluate their own thinking. Some believe that choice allows examinees to select a task set in a familiar context, which may lead to better performance. Others argue that choice introduces an irrelevant feature into the assessment, because the results then reflect not only a student’s proficiency in a given subject area but also his or her ability to make a wise choice (Wainer & Thissen, 1994).

Performance assessments require carefully designed scoring procedures that are tailored to the nature of the task and the skills and knowledge being assessed. The scoring procedure for evaluating the performance of students when conducting an experiment would necessarily differ from the scoring procedure for evaluating the quality of a report based on the experiment. Scoring procedures for performance assessments require some judgment because the student’s response is evaluated using predetermined criteria.

Uses of Performance Assessments

Performance Assessments for Use in Large-Scale Assessment and Accountability Systems

The use of performance assessments in large-scale assessment programs, such as state assessments, has been a valuable tool for standards-based educational reform (Resnick & Resnick, 1992). A few appealing assumptions underlie the use of performance assessments: (a) they serve as motivators in improving student achievement and learning, (b) they allow for better connections between assessment practices and curriculum, and (c) they encourage teachers to use instructional strategies that promote reasoning, problem solving, and communication (Frederiksen & Collins, 1989; Shepard, 2000). It is important, however, to verify that these perceived benefits of performance assessments are actually realized.

Performance assessments are used for monitoring students’ progress toward meeting state and local content standards, promoting standards-based reform, and holding schools accountable for student learning (Linn, Baker, & Dunbar, 1991). Most state assessment and accountability programs include some performance assessments, although in recent years their use in state assessments has steadily declined, due in part to limited resources and to the amount of testing that had to be implemented in a short period of time under the No Child Left Behind (NCLB) Act of 2001. As of the 2005-06 school year, NCLB requires states to test all students in reading and mathematics annually in Grades 3 through 8 and at least once in high school. By 2007-08, states must assess students in science annually in one grade in elementary, middle, and high school. The intent of NCLB is admirable: to provide better, more demanding instruction to all students with challenging content standards, to provide the same educational opportunities to all students, and to strive for all students to reach the same levels of achievement. Nevertheless, the burden it has placed on the assessment system has resulted in less emphasis on performance assessments, partly because of the time and resources it takes to develop performance tasks and scoring procedures and the time it takes to administer and score performance assessments.

The most common state performance assessment is a writing assessment, and some states have also used performance tasks in reading, mathematics, and science assessments. Typically, performance tasks are used in conjunction with multiple-choice items to ensure that the assessment represents the content domain and that the results support inferences about student performance within the broader content domain. An exception was the Maryland School Performance Assessment Program (MSPAP), which was entirely performance-based and was implemented from 1993 to 2002. On MSPAP, students developed written responses to interdisciplinary performance tasks that required the application of skills and knowledge to real-life problems (Maryland State Board of Education, 1995). Students worked collaboratively on some of the tasks and then submitted their own written responses. MSPAP provided school-level scores, not individual student scores. Each student completed only a small sample of the performance tasks, but the full set of tasks was administered within each school. As a result, the school score was based on a representative set of tasks, which allowed inferences about the school’s performance within the broader content domain. The goal of MSPAP was to promote performance-based instruction and classroom assessment. The National Assessment of Educational Progress (NAEP) uses performance tasks in conjunction with multiple-choice items. For example, NAEP’s mathematics assessment is composed of multiple-choice items as well as constructed-response items that require students to explain their mathematical thinking.
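The matrix-sampling idea behind MSPAP’s school-level scores can be sketched in a few lines of Python. This is a hypothetical illustration, not MSPAP’s actual design: the school of 12 students, the pool of nine tasks, the three-task blocks, and the round-robin rotation are all assumptions chosen to show how each student can see only a few tasks while the school as a whole responds to every task.

```python
from collections import Counter


def assign_blocks(students, task_pool, tasks_per_block):
    """Split the task pool into blocks and rotate the blocks across students."""
    blocks = [
        task_pool[i:i + tasks_per_block]
        for i in range(0, len(task_pool), tasks_per_block)
    ]
    # Round-robin rotation: the k-th student receives block k mod (number of blocks).
    return {student: blocks[k % len(blocks)] for k, student in enumerate(students)}


def task_coverage(assignment):
    """Count how many students in the school responded to each task."""
    return Counter(task for tasks in assignment.values() for task in tasks)


# Hypothetical school of 12 students and a hypothetical pool of 9 tasks.
students = [f"student_{k}" for k in range(1, 13)]
task_pool = [f"task_{t}" for t in range(1, 10)]

assignment = assign_blocks(students, task_pool, tasks_per_block=3)
coverage = task_coverage(assignment)

print(assignment["student_1"])  # ['task_1', 'task_2', 'task_3']
print(len(coverage))            # 9: every task in the pool was administered
```

Because every task is answered by some students in the school, a school-level score can draw on the full task set, even though no single student’s responses could support an individual score for the whole domain.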

Many of the uses of large-scale assessments require reporting scores that are comparable over time, which requires standardization of the content, administration, and scoring of the assessment. Features of performance assessments, such as extended time periods, collaborative work, and choice of task, pose challenges to the standardization and administration of performance assessments.

Performance Assessments for Classroom Use

Educators have argued that classroom instruction should, as often as possible, engage students in learning activities that promote the attainment of important and needed skills (Shepard et al., 2005). If the goal of instruction is to help students reason with and use scientific knowledge, then students should have the opportunity to conduct experiments and use scientific equipment so that they can explain how the process and outcomes of their investigations relate to theories they learn from textbooks (Shepard et al., 2005). The use of meaningful learning activities in the classroom requires that assessments be adapted to align with these instructional techniques. Further, students learn more when they receive feedback about particular qualities of their work and are then able to improve their own learning (Black & Wiliam, 1998). Performance assessments allow for a direct alignment between important instructional and assessment activities and for meaningful feedback to students regarding particular aspects of their work (Lane & Stone, 2006). Compared with large-scale assessments, classroom performance assessments have the potential to better simulate the desired criterion performance, because the time students spend on a classroom performance assessment may range from several minutes to sustained work over a number of days or weeks.

Classroom performance assessments provide information about students’ knowledge and skills to guide instruction, provide feedback to students so as to monitor their learning, and can be used to evaluate instruction. In addition to eliciting complex thinking skills, classroom performance assessments can assess processes and products that are important across subject areas, such as those involved in writing a position paper on an environmental issue (Wiggins, 1989). Assessing performances across disciplines allows teachers of different subjects to collaborate on projects that ask students to make connections across content. Students who are asked to use scientific procedures to investigate the effects of toxicity levels on fish populations in different rivers may also be asked to use their social studies knowledge to describe what these levels mean for different communities whose economies depend on the fishing industry (Taylor & Nolen, 2005). Teachers may also ask students to write an article for the local newspaper informing the community of the effects of toxicity levels in rivers. The realistic nature of these tasks, and of the connections among them, may help motivate students to learn.

Similarity between simulated tasks and real-world performances is not the only characteristic that makes performance assessments motivating for students. Having the freedom to choose how they will approach certain problems and engage in certain performances allows students to use their strengths while examining an assignment from a variety of standpoints. For example, students required to write a persuasive essay may be allowed to choose the topic they wish to address. Furthermore, if one of the tasks is to gather evidence that supports one’s claims, students could choose the means by which they obtain that information. Some students may feel more comfortable researching on the Internet, while others may choose to interview individuals whose comments support their position (Taylor & Nolen, 2005). Allowing choice helps ensure that most students will perceive at least some freedom and control over their own work, which is inherently motivating. When designing performance assessments, however, teachers need to ensure that the choices they provide will allow for a fair assessment of all students’ work (Taylor & Nolen, 2005). Tasks that students choose should allow them to demonstrate what they know and can do. Providing a clear explanation of each task, its requirements or directions, and the criteria by which students will be evaluated will help ensure a fair assessment of all students. Students’ understanding of the criteria and of what constitutes successful performance will provide an equitable opportunity for them to demonstrate their knowledge. Providing appropriate feedback at different points in the assessment will also help ensure a fair assessment.

Although performance assessments can be beneficial when incorporated into classroom instruction, they are not always necessary. If a teacher is interested in assessing a student’s ability to recall factual information, a different form of assessment would be more appropriate. Before designing any assessment, teachers need to ask themselves two questions: What do I want my students to know and be able to do, and what is the most appropriate way for them to demonstrate what they know and can do?

Nature of Performance Tasks

Performance tasks may assess either the process students use to solve a task or a product, such as a sculpture. They may involve hands-on activities, such as building a model or using scientific equipment, or they may require students to produce an original response to a constructed-response item, write an essay, or write a position paper. Constructed-response items and essays provide students with the opportunity to “give explanations, articulate reasoning, and express their own approaches toward solving a problem” (Nitko, 2004, p. 240). Constructed-response items can be used to assess the process that students employ to solve a problem by requiring them to explain how they solved it.

The National Assessment of Educational Progress (NAEP) includes hands-on performance tasks in its science assessment. These tasks require students to conduct experiments and to record their observations and conclusions by answering constructed-response and multiple-choice items. For example, a publicly released eighth-grade task provides students with a pencil with a thumbtack in the eraser, a red marker, paper towels, a plastic bowl, a graduated cylinder, and bottles of fresh water, salt water, and mystery water. Students perform a series of investigations into the properties of fresh water and salt water and are asked to determine whether the bottle of mystery water contains fresh water or salt water. After each step, students respond to questions about the investigation. Some of the constructed-response items require students to provide their reasoning and to explain their answers (U.S. Department of Education, 1996).

NAEP also includes constructed-response items in its mathematics assessment. A sample eighth-grade NAEP constructed-response item requires students to use a ruler to determine how many boxes of tiles are needed to cover a floor shown in a diagram. The floor is represented as a 3 //- x 5 H-inch rectangle with a given scale of 1 inch equals 4 feet. The instructions are:

The floor of a room shown in the figure above is to be covered with tiles. One box of floor tiles will cover 25 square feet. Use your ruler to determine how many whole boxes of these tiles must be bought to cover the entire floor. (U.S. Department of Education, 1997)
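The arithmetic the tile item calls for can be written out as a short Python function. The scale (1 inch = 4 feet) and the coverage per box (25 square feet) come from the item; the 4 x 6 inch measurements in the usage line are hypothetical and are not the dimensions of the actual NAEP diagram.

```python
import math


def boxes_needed(length_in, width_in, feet_per_inch=4, sq_ft_per_box=25):
    """Whole boxes of tile needed to cover a floor drawn to scale."""
    # Convert the measured drawing (inches) to the actual floor size (feet).
    length_ft = length_in * feet_per_inch
    width_ft = width_in * feet_per_inch
    area_sq_ft = length_ft * width_ft
    # Only whole boxes can be bought, so any remainder requires one more box.
    return math.ceil(area_sq_ft / sq_ft_per_box)


# Hypothetical measurements: a 4 x 6 inch drawing is a 16 x 24 foot floor (384 sq ft).
print(boxes_needed(4, 6))  # 16, since 384 / 25 = 15.36 rounds up to 16
```

The rounding-up step is where the item separates students who reason about the context from those who stop at 15.36 or round to the nearest whole number.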

Performance tasks may also require students to write an essay, story, poem, or other piece of writing. On the Maryland School Performance Assessment Program (MSPAP), the assessment of writing would have taken place over a number of days. First the student would engage in some prewriting activity; then the student would write a draft; and finally the student would revise the draft, perhaps considering feedback from a peer. Consider this example of a 1996 Grade 8 writing prompt from MSPAP:

Suppose your class has been asked to create a literary magazine to be placed in the library for your schoolmates to read. You are to write a story, play, poem, or any other piece of creative writing about any topic you choose. Your writing will be published in the literary magazine. (Maryland State Department of Education, 1996)

This task allows students to choose both the form and the topic of their writing. Only the revised writing was evaluated using a scoring rubric.

Design and Scoring of Performance Assessments

The design of classroom performance assessments follows the same guidelines as the design of performance assessments for large-scale purposes (see, for example, Lane & Stone, 2006). Because classroom performance assessments carry lower stakes, however, they do not need to meet the same level of standardization and reliability as large-scale performance assessments. In large-scale assessments, performance is examined over time, so the content of the assessment and the procedures for administration and scoring need to be the same across students and over time; in other words, they need to be standardized. Standardization helps ensure that interpretations of the results are appropriate and fair.

Design of Performance Assessments

Performance assessment design begins with a description of the purpose of the assessment; the construct or content domain (i.e., skills, knowledge, and their applications) to be measured; and the intended inferences to be drawn from the results. Delineating the domain to be measured ensures that the performance tasks and scoring procedures are aligned appropriately with the content standards. Whenever possible, assessments should be grounded in theories and models of student cognition and learning. Researchers have suggested that the assessment of students’ understanding of matter and atomic-molecular theory can draw on research on how students learn and develop understandings of the nature of matter and materials, how matter and materials change, and the atomic structure of matter (National Research Council, 2006). An assessment within this domain may be able to reflect students’ learning progressions, that is, the paths that students may follow from a novice understanding to a sophisticated understanding of atomic-molecular theory. Assessments that are designed to capture the developmental nature of student learning can provide valuable information about a student’s level of understanding.

To define the domain to be assessed, developers prepare test specifications that describe the assessment. For large-scale assessments, test specifications provide detailed information regarding the content and cognitive processes, format of tasks, time requirements for each task, materials needed to complete each task, scoring procedures, and desired statistical characteristics of the tasks (AERA, APA, & NCME, 1999). Explicit specifications of task content are essential in designing performance assessments, because these assessments include fewer tasks and their tasks tend to be more distinctive than multiple-choice items. Test specifications help ensure that the tasks being developed are representative of the intended domain. Specifications for classroom assessments may not be as comprehensive as those for large-scale assessments; however, they are a valuable tool for helping classroom teachers align their assessments with instruction. Excellent sources of step-by-step guidelines for designing performance tasks and scoring rubrics include Nitko (2004), Taylor and Nolen (2005), and Welch (2006).

Scoring Procedures for Performance Assessments

The evaluation of student performance requires scoring procedures based on expert judgment. Clearly defined scoring procedures are essential for developing performance assessments from which valid interpretations can be made. The first step is to delineate the performance criteria, which reflect important characteristics of successful performance. At the classroom level, teachers may use performance criteria developed by the state or develop their own. Defining the performance criteria deserves careful consideration, and informing students of the criteria helps ensure a fair evaluation of their performance.

For classroom performance assessments, scoring rubrics, rating scales, or checklists are used to evaluate students. Scoring rubrics are rating scales that consist of pre-established performance criteria at each score level. The criteria specified at each score level are linked to the construct being assessed and depend on a number of factors, including whether a product or process is being assessed, the demands of the tasks, the nature of the student population, and the purpose of the assessment and the intended score interpretations (Lane & Stone, 2006). The number of score levels used depends on the extent to which the criteria across the score levels can meaningfully distinguish among student work. The performance reflected at each score level should differ distinctly from the performance at other score levels.

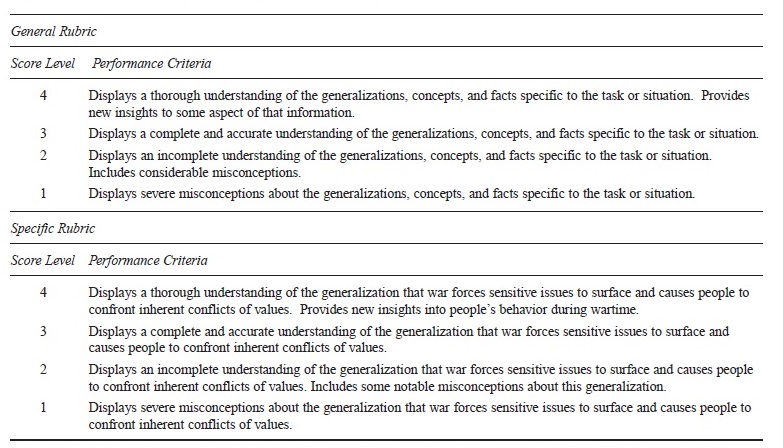

Designing scoring rubrics is an iterative process. A general scoring rubric that serves as a conceptual outline of the skills and knowledge underlying the given performance may be developed first, with performance criteria specified for each score level. The general rubric can then guide the design of each task-specific rubric, whose performance criteria reflect those of the general rubric but also include criteria representing unique aspects of the individual task. The assessment of a particular genre of writing (e.g., persuasive writing) often uses only a general rubric, because the performance criteria at each score level are very similar across writing prompts within a genre. In mathematics, science, or history, a performance assessment may have a general scoring rubric in addition to a specific rubric for each task. Specific rubrics help ensure accuracy in applying the criteria to student work and facilitate generalizing from performance on the assessment to the larger content domain of interest. Figure 1 shows a general and a specific rubric for a history performance task that assesses declarative knowledge. The specific rubric was designed for item 3 in the following performance task:

President Harry S. Truman has requested that you serve on a White House task force. The goal is to decide how to force the unconditional surrender of Japan, yet provide a secure postwar world. You are now a member of a committee of four and have reached the point at which you are trying to decide whether to drop the bomb.

1. Identify the alternatives you are considering and the criteria you are using to make the decision.

2. Explain the values that influence the selection of the criteria and the weights you placed on each.

3. Explain how your decision has helped you better understand this statement: War forces people to confront inherent conflicts of values. (Marzano, Pickering, & McTighe, 1993, p. 28; numbers 1, 2, and 3 added)

Figure 1 General and Specific Scoring Rubric for a History Standard

The specific rubric in Figure 1 could be expanded to provide examples of student misconceptions that are unique to question 3.

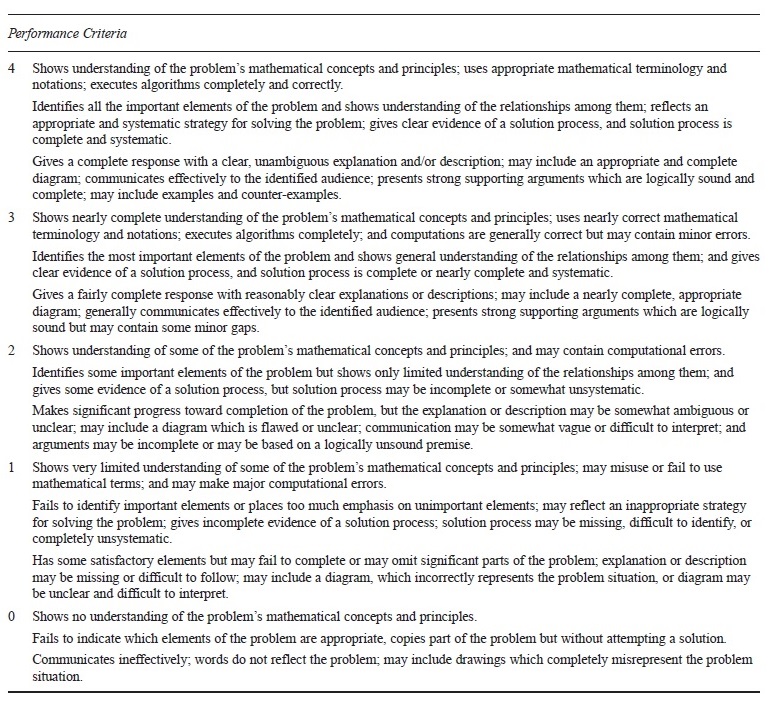

In addition to the distinction between general and specific rubrics, scoring rubrics may be either holistic or analytic. A holistic rubric requires the evaluation of the process or product as a whole. In other words, raters make a single, holistic judgment regarding the quality of the work, and they assign a single score using a rubric rather than evaluating the component parts separately. Figure 2 provides a holistic general scoring rubric for a mathematics performance assessment.

Analytic rubrics require the evaluation of the component parts of the product or performance, and separate scores are assigned to those parts. If students are required to write a persuasive essay, rather than evaluating all aspects of their work as a whole, raters may evaluate a few components separately, such as strength of argument, organization, and writing mechanics. Each of these components would have a scoring rubric with distinct performance criteria at each score level. Analytic rubrics have the potential to provide meaningful information about students’ strengths and weaknesses and about the effectiveness of instruction on each of these components. Individual scores from an analytic rubric can also be summed to obtain a total score for the student.
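A minimal sketch of how analytic scores might be recorded and summed, assuming a hypothetical 1-4 scale for each of the three components named above. Actual rubrics may use different scales or weight components differently; the function name and scale here are illustrative only.

```python
# Hypothetical 1-4 scale for every component of the persuasive-essay rubric.
MAX_PER_COMPONENT = 4


def score_essay(component_scores):
    """Return (total, maximum possible, weakest component) for analytic scores."""
    for component, score in component_scores.items():
        if not 1 <= score <= MAX_PER_COMPONENT:
            raise ValueError(f"{component}: {score} is outside 1-{MAX_PER_COMPONENT}")
    total = sum(component_scores.values())
    maximum = MAX_PER_COMPONENT * len(component_scores)
    # The lowest-scoring component points to where feedback is most needed.
    weakest = min(component_scores, key=component_scores.get)
    return total, maximum, weakest


essay = {"strength of argument": 3, "organization": 4, "writing mechanics": 2}
print(score_essay(essay))  # (9, 12, 'writing mechanics')
```

Keeping the component scores alongside the total preserves the diagnostic information that a single holistic score would not provide.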

Figure 2 Holistic General Scoring Rubric for Mathematics Constructed-Response Items

Although rubrics are most commonly associated with scoring performance assessments, checklists and rating scales are sometimes used by classroom teachers. Checklists are lists of specific performance criteria expected to be present in a student’s performance (Taylor & Nolen, 2005). Checklists only allow the option of indicating whether each criterion was present or absent in the student’s work. They are best used to provide students with a quick and general indication of their strengths and weaknesses. Checklists tend to be easy to construct and simple to use; however, they are not appropriate for many performance assessments because they record no judgment about the quality of what is observed. If a teacher indicates that the criterion “grammar is correct” is present in a student’s response, there is no indication of the degree to which the student’s grammar was correct (see Taylor & Nolen, 2005).

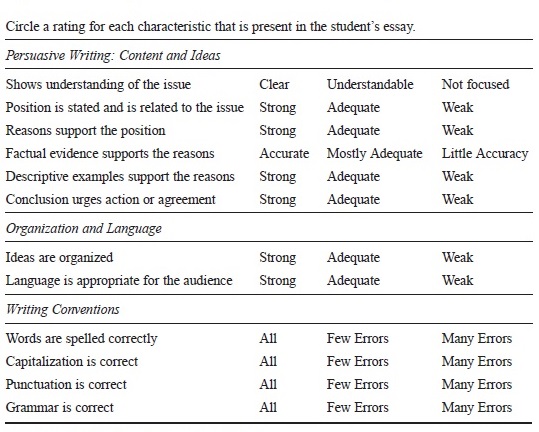

Rating scales allow teachers to indicate the degree to which a student exhibits a particular characteristic or skill. A rating scale can be used if the teacher wants to differentiate the degree to which students use grammar correctly or incorrectly. Figure 3 provides an example of a rating scale for a persuasive essay.

Figure 3 Rating Scale for the First Draft of a Persuasive Essay

Circle a rating for each characteristic that is present in the student’s essay.

In this rating scale, the rating labels are not the same across the performance criteria (e.g., clear, understandable, and unfocused versus strong, adequate, and weak). This may pose a problem if teachers hope to combine the various ratings into a single, meaningful score for each student. For some tasks, rating scales can be developed that use the same rating labels across items, which allows the ratings to be combined into a single score. When using rating scales, teachers must make explicit distinctions between the rating labels, such as few errors and many errors. If these distinctions are not made, scoring will be inconsistent, and accurate inferences about students’ learning will be jeopardized. Teachers should strive to use scoring procedures that have explicitly defined performance criteria, which is often best achieved by using scoring rubrics to evaluate student performance.
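The point about matching labels can be made concrete with a short Python sketch. It assumes a hypothetical shared label set (weak, adequate, strong) mapped to 1-3, and it refuses to combine ratings that use any other label, such as clear or unfocused, because no agreed-upon numeric equivalent has been defined for them. The criterion names in the usage line are also hypothetical.

```python
# Hypothetical shared label set, ordered from weakest to strongest.
SHARED_SCALE = {"weak": 1, "adequate": 2, "strong": 3}


def combine_ratings(ratings):
    """Sum per-criterion ratings, but only when all of them use the shared labels."""
    off_scale = {c: label for c, label in ratings.items() if label not in SHARED_SCALE}
    if off_scale:
        # Labels such as "clear" or "unfocused" have no agreed numeric value here.
        raise ValueError(f"ratings not on the shared scale: {off_scale}")
    return sum(SHARED_SCALE[label] for label in ratings.values())


# Hypothetical criteria for the first draft of a persuasive essay.
draft = {"position": "strong", "supporting evidence": "adequate", "conclusion": "weak"}
print(combine_ratings(draft))  # 6
```

Refusing mixed labels, rather than silently assigning them numbers, mirrors the concern above: a combined score is only meaningful when every rating sits on the same, explicitly defined scale.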

Evaluating the Validity of Performance Assessments

Assessments are used in conjunction with other information to make important inferences and decisions about students, schools, and districts, and it is important to obtain evidence about the appropriateness of those inferences and decisions. Validity refers to the appropriateness, meaningfulness, and usefulness of the inferences drawn from assessment results (AERA, APA, & NCME, 1999). Evidence is therefore needed to support the interpretation and use of assessment results. Validity criteria that are specific to performance assessments have been proposed, including, but not limited to, content representation, cognitive complexity, meaningfulness and transparency, transfer and generalizability, fairness, and consequences (Linn et al., 1991; Messick, 1995). A brief discussion of these validity criteria is presented in this section.

Content Representation

Because performance assessments consist of a small number of tasks, the ability to generalize from a student’s performance on the assessment to the broader domain of interest may be limited. It is therefore important to consider carefully which tasks will compose a performance assessment. Test specifications contribute to the development of tasks that systematically represent the content domain. For large-scale assessments that have high stakes associated with individual student scores, multiple-choice items are typically used in conjunction with performance assessments to better represent the content domain and to help ensure accurate inferences regarding student performance. Classroom assessments can be tailored to the instructional material, allowing for a wide variety of assessment formats to capture the breadth of the content.

Cognitive Complexity

Some performance tasks may not require complex thinking skills. If the performance of interest is whether students can use a ruler accurately, then a performance task aligned to this skill requires the use of a ruler for measuring and does not require students to engage in complex thinking. Many performance assessments, however, are intended to assess students’ reasoning and problem-solving skills. In fact, one of the promises of performance assessments is that they can assess these higher-order, complex skills. One cannot assume that complex thinking skills are being used by students when working on performance assessments; validity evidence is needed to establish that performance assessments do indeed evoke complex thinking skills (Linn et al., 1991). First, in assessment design it is important to consider how the task’s format, content, and context may affect the cognitive processes used by students to solve the task. Second, the underlying cognitive demands of performance assessments, such as inference, integration, and reasoning, can be verified. Analyses of the strategies and processes that students use in solving performance tasks can provide such evidence. Students may be asked to think aloud as they respond to the task, or they may be asked to explain, in writing, reasons for their responses to tasks. If the purpose of a mathematics task is to determine whether students can solve it with a nonroutine solution strategy, but most students apply a strategy that they recently learned in instruction, then the task is not eliciting the intended cognitive skills and should be revised. The cognitive demands of performance tasks need to be considered in their design and then verified.

Meaningfulness And Transparency

If performance assessments are to improve instruction and student learning, both teachers and students should consider the tasks meaningful and of value to the instructional and assessment process (Frederiksen & Collins, 1989). Performance assessments also need to be transparent to both teachers and students: each party needs to know what is being assessed, by what methods, the criteria used to evaluate performances, and what constitutes successful performance. For large-scale assessments with high stakes attached, students need to be familiar with the task format and with the performance criteria of the general scoring rubric, which helps ensure that all students have the opportunity to demonstrate what they know and can do. Throughout the instructional year, teachers can use performance tasks with their students and engage them in discussions about what the tasks assess and the criteria used to score student performances. Teachers can also have students use scoring rubrics to evaluate their own work and the work of their peers. These activities familiarize students with the nature of the tasks and the criteria used to evaluate their work, and they are best embedded within the instructional process rather than treated as test preparation. Research has shown that students who have had the opportunity to become familiar with the format of a performance assessment and the nature of its scoring criteria perform better than students who have not had such opportunities (Fuchs et al., 2000).

Transfer And Generalizability

An assessment is only a sample of tasks that measure some portion of the content domain of interest, yet its results are used to make inferences about student performance across that domain. The intent is to generalize from student performance on a sample of tasks to the broader domain, and the better the sample represents the domain, the more accurate those generalizations will be. It is necessary, then, to consider which tasks, and how many, an assessment needs before we can be confident that our inferences about student performance are accurate. For a writing assessment, generalizations about student performance across the writing domain may be of interest. Because a student skilled in narrative writing may not be as skilled in persuasive writing, the sample of prompts should elicit various types of writing, such as narrative, persuasive, and expository, and students would need to write essays of each type before their performance could be generalized to the entire writing domain. This is more easily accomplished in classroom performance assessments, because samples of students' writing can be collected over a period of weeks.
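The question of how many tasks are needed is often examined with generalizability theory. The following is a minimal sketch, not a procedure taken from the studies cited in this paper, assuming a fully crossed design in which every student responds to every task and each response receives a single score. It estimates person, task, and residual (person-by-task interaction plus error) variance components from ANOVA mean squares, then projects the generalizability coefficient for an assessment built from a different number of tasks. The function names and the 0 to 4 rubric scores are hypothetical illustrations, not real assessment data.

```python
from statistics import fmean


def variance_components(scores):
    """Estimate variance components for a crossed persons x tasks design
    (one score per cell) from random-effects ANOVA mean squares.

    Returns (person, task, residual) variance; the residual combines the
    person-by-task interaction with unexplained error."""
    n_p, n_t = len(scores), len(scores[0])
    grand = fmean(x for row in scores for x in row)
    person_means = [fmean(row) for row in scores]
    task_means = [fmean(row[t] for row in scores) for t in range(n_t)]

    ss_p = n_t * sum((m - grand) ** 2 for m in person_means)
    ss_t = n_p * sum((m - grand) ** 2 for m in task_means)
    ss_tot = sum((x - grand) ** 2 for row in scores for x in row)
    ss_res = max(ss_tot - ss_p - ss_t, 0.0)  # guard against float round-off

    ms_p = ss_p / (n_p - 1)
    ms_t = ss_t / (n_t - 1)
    ms_res = ss_res / ((n_p - 1) * (n_t - 1))

    # Negative variance estimates are conventionally set to zero.
    var_p = max((ms_p - ms_res) / n_t, 0.0)
    var_t = max((ms_t - ms_res) / n_p, 0.0)
    return var_p, var_t, ms_res


def g_coefficient(var_p, var_res, n_tasks):
    """Relative generalizability coefficient for a score averaged over
    n_tasks tasks; task difficulty (var_t) does not enter this index."""
    return var_p / (var_p + var_res / n_tasks)


if __name__ == "__main__":
    # Hypothetical 0-4 rubric scores: 4 students (rows) x 3 tasks (columns).
    scores = [
        [4, 3, 2],
        [4, 1, 1],
        [2, 3, 1],
        [2, 1, 0],
    ]
    var_p, var_t, var_res = variance_components(scores)
    for n_tasks in (1, 3, 6):
        print(n_tasks, round(g_coefficient(var_p, var_res, n_tasks), 2))
```

With these toy scores the coefficient is 0.40 for a single task, about 0.67 for three tasks, and 0.80 for six, which illustrates why a large person-by-task interaction calls for sampling more tasks. Operational studies would typically add a rater facet and use dedicated software for unbalanced designs.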

Investigating the generalizability of a performance assessment also requires examining the extent to which results generalize across trained raters. Raters affect how accurately one can generalize from assessment results, because raters may differ in their appraisal of the quality of a student's response. In science, mathematics, and writing, generalizability across trained raters is typically much higher than generalizability across tasks (e.g., Hieronymus & Hoover, 1987; Lane, Liu, Ankenmann, & Stone, 1996; Shavelson, Baxter, & Gao, 1993); in other words, the sampling of tasks tends to introduce more error than rater disagreement. To help achieve accuracy among raters in large-scale assessments, care is needed in designing precise scoring rubrics, selecting and training raters, and checking rater performance throughout the scoring process. In classroom performance assessments, scoring rubrics with clearly defined performance criteria likewise help teachers score student work accurately.
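Checking rater performance during scoring commonly involves comparing two raters' scores on the same responses. The sketch below assumes that two raters scored the same set of responses on a single integer rubric, and it reports three frequently monitored statistics: exact agreement, adjacent agreement (scores within one point), and Cohen's kappa, which discounts the agreement expected by chance. The function name and the scores are hypothetical; operational scoring programs set their own agreement targets and may use other indices, such as weighted kappa or a rater facet in a generalizability study.

```python
from collections import Counter


def rater_agreement(rater_a, rater_b):
    """Return (exact agreement, adjacent agreement, Cohen's kappa) for two
    raters who scored the same responses on an integer rubric."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of responses")
    n = len(rater_a)
    pairs = list(zip(rater_a, rater_b))
    exact = sum(a == b for a, b in pairs) / n
    adjacent = sum(abs(a - b) <= 1 for a, b in pairs) / n

    # Chance agreement: probability that both raters give the same score if
    # each rater assigned scores independently at that rater's observed rates.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_chance = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)

    # Kappa is undefined when chance agreement is 1 (both raters used one score).
    kappa = (exact - p_chance) / (1 - p_chance) if p_chance < 1 else float("nan")
    return exact, adjacent, kappa


if __name__ == "__main__":
    # Hypothetical 0-4 rubric scores for ten responses; not real data.
    rater_a = [4, 3, 3, 2, 2, 1, 0, 4, 3, 2]
    rater_b = [4, 3, 2, 2, 1, 1, 0, 3, 3, 2]
    exact, adjacent, kappa = rater_agreement(rater_a, rater_b)
    print(f"exact={exact:.2f} adjacent={adjacent:.2f} kappa={kappa:.2f}")
```

For these toy scores, exact agreement is 0.70, every pair of scores is within one point, and kappa is about 0.61, lower than exact agreement because some matches would be expected by chance alone.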

Fairness

Some proponents of performance assessments in the 1980s believed that performance assessments would help reduce the performance differences between subgroups of students, that is, students with different cultural, ethnic, and socioeconomic backgrounds. As Linn et al. (1991) cautioned, however, it would be unreasonable to assume that group differences observed on multiple-choice tests would shrink or disappear with performance assessments. Differences among groups are not necessarily due to test format; rather, they stem from differences in learning opportunities, in familiarity with the assessment format, and in student motivation. Regardless of assessment format, closing the achievement gap between subgroups of students requires that all students have the opportunity to learn the subject matter covered by the assessment (AERA, APA, & NCME, 1999). An integrated instruction and assessment system and highly qualified teachers are prerequisites for closing the achievement gap. Performance assessments can play an important role in closing this gap only if all students have opportunities to engage in meaningful learning.

Consequences

An assumption underlying the use of performance assessments is that they serve as motivators in improving student achievement and learning, and that they encourage the use of instructional strategies that promote students' reasoning, problem-solving, and communication skills. It is particularly important to obtain evidence about the consequences of performance assessments because certain intended consequences, such as fostering reasoning and thinking skills in instruction, are an essential part of the assessment system's rationale (Linn, 1993; Messick, 1995). Because performance assessments are intended to promote student engagement in reasoning and problem solving in the classroom, evidence of this intended consequence would support the validity of their use. Evaluation of both the intended and unintended consequences of assessments is fundamental to the validation of test use and the interpretation of the assessment results (Messick, 1995). To identify potential negative consequences, one needs to examine whether the purpose of the assessment is being compromised, for example, when teachers teach only to the content standards measured on state assessments rather than to the entire set of content standards deemed important.

When some states relied more heavily on performance assessments in the early 1990s, there was evidence that many teachers revised their own instruction and classroom assessment accordingly, using more performance tasks and constructed-response items for classroom purposes. In a study examining the consequences of Washington's state assessment program, approximately two-thirds of teachers reported that the state's content standards and the extended-response items on the state assessment were influential in promoting better instruction and student learning (Stecher, Barron, Chun, & Ross, 2000). Further, observations of classroom instruction in exemplary schools in Washington revealed that teachers were using reform-oriented strategies in meaningful ways (Borko, Wolf, Simone, & Uchiyama, 2003). Teachers and students were using scoring rubrics similar to those on the state assessment in the classroom, and their use of these rubrics promoted meaningful learning. In a study examining the consequences of Maryland's state performance assessment (MSPAP), teachers' reported use of reform-oriented instructional strategies was associated with positive changes in school performance on MSPAP in reading and writing over time (Stone & Lane, 2003). Schools in which teachers reported greater use of reform-oriented instructional strategies in reading and writing showed greater rates of change in MSPAP performance over a 5-year period. Similarly, teachers' perceptions of MSPAP's effect on their math and science instructional practices helped explain differences in schools' MSPAP gains in those subjects: the greater the perceived impact of MSPAP on math and science instruction, the greater the school's gains in math and science over the 5-year period.

Recently, however, many states have relied more heavily on multiple-choice items and short-answer formats, and as a consequence, teachers in some of these states are using fewer constructed-response items for classroom purposes (Hamilton et al., 2007). If extended constructed-response items that require students to explain their reasoning are not on the high-stakes state assessments, instruction may focus more on computation and less on complex mathematics problems, and teachers may rely less on constructed-response items in their classroom assessments.

Conclusion

Performance assessments are useful tools for initiating changes in instruction and student learning. Large-scale assessments that incorporate performance tasks can signal important goals for educators and students to pursue, which can have a positive effect on instruction and student learning. A balanced, coordinated assessment and instructional system is needed to help foster student learning. Coherence among content standards, instruction, large-scale assessments, and classroom assessments is necessary if we are committed to the goal of enhancing student achievement and learning. Because an important role for both large-scale and classroom performance assessments is to serve as models of good instruction, performance assessments should be grounded in current theories of student cognition and learning, be capable of evoking meaningful reasoning and problem-solving skills, and provide results that help guide instruction.

Bibliography:

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for educational and psychological testing. Washington, DC: American Educational Research Association.

- Baker, E. L., O’Neil, H. F., & Linn, R. L. (1993). Policy and validity prospects for performance-based assessment. American Psychologist, 1210-1218.

- Baron, J. B. (1991). Strategies for the development of effective performance exercises. Applied Measurement in Education, 4(4), 305-318.

- Black, P., & Wiliam, D. (1998). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 80(2), 139-148.

- Borko, H., Wolf, S. A., Simone, G., & Uchiyama, K. (2003). Schools in transition: Reform efforts in exemplary schools of Washington. Educational Evaluation and Policy Analysis, 25(2), 171-202.

- Frederiksen, J. R., & Collins, A. (1989). A systems approach to educational testing. Educational Researcher, 18(9), 27-32.

- Fuchs, L. S., Fuchs, D., Karns, K., Hamlett, C. L., Dutka, S., & Katzaroff, M. (2000). The importance of providing background information on the structure and scoring of performance assessments. Applied Measurement in Education, 13(1), 1-34.

- Hamilton, L. S., Stecher, B. M., Marsh, J. A., McCombs, J. S., Robyn, A., Russell, J. L., et al. (2007). Standards-based accountability under No Child Left Behind. Pittsburgh, PA: RAND.

- Hieronymus, A. N., & Hoover, H. D. (1987). Iowa tests of basic skills: Writing supplement teacher’s guide. Chicago: Riverside.

- Kane, M., Crooks, T., & Cohen, A. (1999). Validating measures of performance. Educational Measurement: Issues and Practice, 18(2), 5-17.

- Lane, S. (1993). The conceptual framework for the development of a mathematics performance assessment instrument. Educational Measurement: Issues and Practice, 12(3), 16-23.

- Lane, S., Liu, M., Ankenmann, R. D., & Stone, C. A. (1996). Generalizability and validity of a mathematics performance assessment. Journal of Educational Measurement, 33(1), 71-92.

- Lane, S., & Stone, C. A. (2006). Performance assessments. In R. L. Brennan (Ed.), Educational Measurement (pp. 387-432). Westport, CT: Praeger.

- Linn, R. L. (1993). Educational assessment: Expanded expectations and challenges. Educational Evaluation and Policy Analysis, 15, 1-16.

- Linn, R. L., Baker, E. L., & Dunbar, S. B. (1991). Complex performance assessment: Expectations and validation criteria. Educational Researcher, 20(8), 15-21.

- Maryland State Board of Education. (1995). Maryland school performance report: State and school systems. Baltimore: Author.

- Maryland State Department of Education. (1996). 1996 MSPAP public release task: Choice in reading and writing. Baltimore: Author.

- Marzano, R. J., Pickering, D. J., & McTighe, J. (1993). Assessing student outcomes: Performance assessment using the dimensions of learning model. Alexandria, VA: Association for Supervision and Curriculum Development.

- Messick, S. (1995). Standards of validity and the validity of standards in performance assessment. Educational Measurement: Issues and Practice, 14(4), 5-8.

- National Research Council. (2006). Systems for state science assessment. In M. R. Wilson & M. W. Bertenthal (Eds.), Committee on test design for K-12 science achievement. Washington, DC: National Academies Press.

- Nitko, A. J. (2004). Educational assessment of students (4th ed.). Upper Saddle River, NJ: Pearson.

- Resnick, L. B., & Resnick, D. P. (1992). Assessing the thinking curriculum: New tools for educational reform. In B. G. Gifford & M. C. O'Connor (Eds.), Changing assessment: Alternative views of aptitude, achievement, and instruction (pp. 37-55). Boston: Kluwer Academic.

- Schmeiser, C. B., & Welch, C. J. (2007). Test development. In R. L. Brennan (Ed.), Educational Measurement (pp. 307-354). Westport, CT: Praeger.

- Shavelson, R. J., Baxter, G. P., & Gao, X. (1993). Sampling variability of performance assessments. Journal of Educational Measurement, 30(3), 215-232.

- Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4-14.

- Shepard, L., Hammerness, K., Darling-Hammond, L., Rust, F., with Snowdon, J. B., Gordon, E., Gutierrez, C., & Pacheco, A. (2005). Assessment. In L. Darling-Hammond & J. Bransford (Eds.), Preparing teachers for a changing world: What teachers should learn and be able to do. San Francisco: Jossey-Bass.

- Stecher, B., Barron, S., Chun, T., & Ross, K. (2000, August). The effects of the Washington state education reform in schools and classrooms (CSE Tech. Rep. No. 525). Los Angeles: University of California, National Center for Research on Evaluation, Standards and Student Testing.

- Stiggins, R. J. (1987). Design and development of performance assessments. Educational Measurement: Issues and Practice, 6(1), 33-42.

- Stone, C. A., & Lane, S. (2003). Consequences of a state accountability program: Examining relationships between school performance gains and teacher, student and school variables. Applied Measurement in Education, 16(1), 1-26.

- Taylor, C. S., & Nolen, S. B. (2005). Classroom assessment: Supporting teaching and learning in real classrooms. Upper Saddle River, NJ: Pearson.

- U.S. Department of Education. (1996). NAEP 1996 Science Report Card for the Nation and the States. Washington, DC: Author. Retrieved from https://nces.ed.gov/nationsreportcard/pubs/main1996/

- U.S. Department of Education. (1997). NAEP 1996 Mathematics Report Card for the Nation and the States. Washington, DC: Author. Retrieved from https://nces.ed.gov/nationsreportcard//pdf/main1996/97488.pdf

- Wainer, H., & Thissen, D. (1994). On examinee choice in educational testing. Review of Educational Research, 64, 159-195.

- Welch, C. (2006). Item and prompt development in performance testing. In S. M. Downing & T. M. Haladyna (Eds.), Handbook of test development (pp. 303-329). Mahwah, NJ: Lawrence Erlbaum Associates.

- Wiggins, G. (1989, April). Teaching to the (authentic) test. Educational Leadership, 41-47.