Expert Systems In Cognitive Science

Expert Systems (ES), also called Knowledge-based Systems (KBS), Intelligent Agent Systems, or in short Knowledge Systems (KS), are computer programs that exhibit a high level of intelligent performance similar to that of human experts. By performing inference processes on explicitly stated knowledge, these computer programs can solve problems within a given domain that would previously have required a human expert.


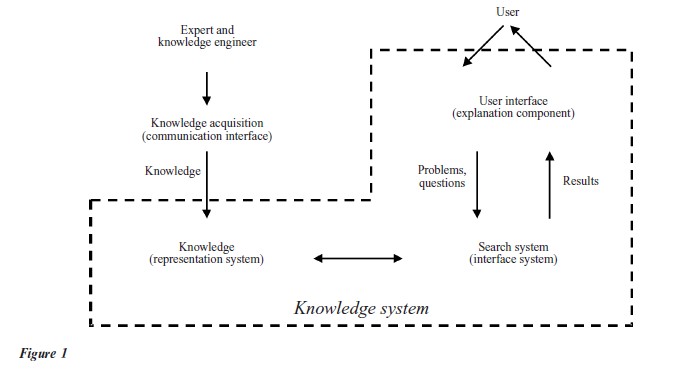

Figure 1 shows the classical architecture of an expert system. According to its classical characterization, an expert or knowledge system consists of (a) a knowledge base where knowledge is encoded in some fixed representational form, (b) the search system (also referred to as inference system or inference engine), which derives solutions for the posed problems and questions from the knowledge base, (c) the knowledge acquisition system, which allows human experts to enter their knowledge into the knowledge system, and (d) the user interface, possibly including an explanation component, by which the users of the knowledge system may present their problems and questions and obtain solutions from the system. The knowledge base contains symbols and symbol structures (Newell and Simon 1976) to embody the knowledge about some given domain, given tasks, as well as their interdependences. The search system performs inference processes by manipulating these symbols. The symbol manipulation processes may be performed by the rules of formal logic or by heuristic processes. With the assistance of the knowledge acquisition system and the knowledge engineer, who knows the representational formats of the knowledge system and assumes the role of a scribe, the knowledge of a human expert can thus be coded into the knowledge system. Knowledge acquisition is, on the one hand, driven by the target knowledge which the human expert wants to publish in the expert system. On the other hand, it is constrained by the formalism of the representation and inference systems. Successful knowledge acquisition is therefore dependent on productive interactions between the knowledge engineer and the domain expert as well as upon the adequacy of the representation and search formalisms of the expert system.
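The four components of the classical architecture can be sketched in a few lines of Python. This is only an illustrative toy, not the design of any historical system: the class names, the forward-chaining strategy, and the two medical-sounding rules are assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """IF all conditions hold THEN add the conclusion."""
    conditions: frozenset
    conclusion: str


@dataclass
class KnowledgeBase:
    """(a) Explicitly stated knowledge in one fixed representational form."""
    rules: list = field(default_factory=list)


class KnowledgeAcquisition:
    """(c) The channel through which expert knowledge is entered."""

    def __init__(self, kb):
        self.kb = kb

    def add_rule(self, conditions, conclusion):
        self.kb.rules.append(Rule(frozenset(conditions), conclusion))


class InferenceEngine:
    """(b) Derives new symbols from the knowledge base (forward chaining)."""

    def __init__(self, kb):
        self.kb = kb

    def infer(self, facts):
        known = set(facts)
        trace = []  # rules that fired, in order, for the explanation
        changed = True
        while changed:
            changed = False
            for rule in self.kb.rules:
                if rule.conditions <= known and rule.conclusion not in known:
                    known.add(rule.conclusion)
                    trace.append(rule)
                    changed = True
        return known, trace


class UserInterface:
    """(d) Accepts a question and returns an answer plus an explanation."""

    def __init__(self, engine):
        self.engine = engine

    def ask(self, facts, goal):
        known, trace = self.engine.infer(facts)
        explanation = [
            f"{' AND '.join(sorted(r.conditions))} -> {r.conclusion}"
            for r in trace
        ]
        return goal in known, explanation


# Hypothetical rules, entered through the acquisition component.
kb = KnowledgeBase()
acquisition = KnowledgeAcquisition(kb)
acquisition.add_rule({"fever", "cough"}, "respiratory_infection")
acquisition.add_rule({"respiratory_infection"}, "see_physician")

ui = UserInterface(InferenceEngine(kb))
answer, explanation = ui.ask({"fever", "cough"}, "see_physician")
```

Keeping the rules in a separate object from the engine mirrors the separation of knowledge base and search system described above; the explanation is simply the list of rules that fired.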

The static and dynamically generated symbol structures as well as the inference processes of expert systems are highly complex. This already applies to the MYCIN system (Buchanan and Shortliffe 1984), which was one of the earliest expert systems to perform at the level of human expertise. MYCIN was developed at Stanford University in the mid-1970s and provided medical consultations about the diagnosis and treatment of certain infections (bacterial blood infections and meningitis) by reasoning about the symptoms and general characteristics of a patient in combination with the laboratory results of body fluid analyses. MYCIN’s knowledge base contained about 500 high-level production rules. A production rule encodes knowledge as an IF–THEN rule, which describes which conditions must be satisfied (specified by the IF segment) in order to derive some conclusion or to perform some action (specified by the THEN segment). One of MYCIN’s production rules had the following knowledge encoded:

IF the infection is primary bacteremia

AND the site of the culture is one of the sterile sites

AND the suspected portal of entry is gastrointestinal tract

THEN there is suggestive evidence (0.7) that the infection is bacteroid.

To obtain a diagnosis, MYCIN would build a sequence (or a tree) of several such rules, so that the preconditions of the rules (i.e., the IF parts) of some given inferencing episode would be provided as the conclusions (i.e., the THEN parts) of the subsequent inferencing episode. In other words, MYCIN performed a backward construction of a kind of proof tree. This means that the tree is constructed by first considering a set of possible conclusions. Second, evidence for these conclusions is collected and assessed. By backward chaining rules in this fashion, MYCIN could recognize about 100 causes of bacterial infections and recommend effective drug prescriptions. The chaining of the rules was thereby guided by the search system and the user’s request to find a diagnosis and a therapy. In its reasoning, MYCIN also used probability considerations, which were encoded by so-called certainty weights (e.g., suggestive evidence: 0.7). During its time of application, the MYCIN system was very successful and performed at the level of the best experts in its specific domain of medical expertise.
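The backward construction of such a proof tree can be illustrated with a short Python sketch. It is a deliberate simplification and not MYCIN's actual implementation: it handles only positive certainty weights, multiplies a rule's weight by its weakest premise, and combines evidence from several rules with the formula a + b(1 - a). The second rule and the evidence values are hypothetical and were added only so that the chain has two levels.

```python
# Each rule: (premises, conclusion, certainty weight of the rule).
RULES = [
    (("infection is primary bacteremia",
      "site of culture is sterile",
      "portal of entry is gastrointestinal tract"),
     "infection is bacteroid", 0.7),
    # Hypothetical rule, not taken from MYCIN.
    (("culture taken from blood",), "site of culture is sterile", 0.9),
]


def certainty(goal, evidence, rules=RULES):
    """Return a certainty in [0, 1] for goal by chaining backward.

    Assumes the rule set contains no cycles.
    """
    if goal in evidence:          # observed directly or supplied by the user
        return evidence[goal]
    combined = 0.0
    for premises, conclusion, rule_cf in rules:
        if conclusion != goal:
            continue
        # Every premise becomes a subgoal; the weakest one limits the rule.
        premise_cf = min(certainty(p, evidence, rules) for p in premises)
        contribution = rule_cf * premise_cf
        # Accumulate positive evidence from all rules that conclude the goal.
        combined = combined + contribution * (1.0 - combined)
    return combined


# Hypothetical case data with certainties attached.
evidence = {
    "infection is primary bacteremia": 1.0,
    "culture taken from blood": 1.0,
    "portal of entry is gastrointestinal tract": 0.8,
}
result = certainty("infection is bacteroid", evidence)
# result is about 0.56, i.e. 0.7 * min(1.0, 0.9, 0.8)
```

The search starts from the conclusion and only touches rules and evidence that bear on it, which is the sense in which the request for a diagnosis guides the chaining.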

Other expert systems of the first generation (mid-1970s to mid-1980s) were similarly successful in different application domains, such as chemistry, geology, mathematics, engineering, and speech and language understanding, as well as for different tasks such as diagnosis, configuration, and comprehension. Together with MYCIN, these systems have become well known as legacy expert systems. These legacy systems include Dendral (Lindsay et al. 1980), a chemistry system that examines a spectroscopic analysis of an unknown molecule and predicts the molecular structures that account for the particular analysis; INTERNIST/CADUCEUS (Miller et al. 1982, Wolfram 1995), a diagnosis system for internal medicine; PROSPECTOR (Duda and Reboh 1984), a classification and geological site evaluation system; MACSYMA (Engelman 1971, Moses 1975), which assists mathematicians, scientists, and engineers in solving complex algebraic problems; and XCON/R1 (McDermott 1982), a knowledge system for configuring computers. Hearsay (Erman et al. 1980) is a computer program for speech understanding, and ELIZA (Weizenbaum 1965) is a computer program that mimics the answers of a client-centered psychotherapist in a dialog with her or his client. For most of these systems, the area of applicability was limited to specific tasks and specific content domains.

The successes and shortcomings of these first-generation systems spurred an in-depth analysis and a better understanding of knowledge representations, search systems (cf., Clancey 1985), and knowledge acquisition, yielding the more advanced second-generation systems with corresponding knowledge models and development tools (Boose and Gaines 1989). The second-generation systems (Breuker and Wielinga 1989, Marcus and McDermott 1989) are usually termed knowledge-based systems rather than expert systems. In the subsequent third generation, knowledge systems are no longer stand-alone systems that may only interact with a single user at a given time. Instead, they are systems that interact, negotiate, and communicate in multiagent systems (Huhns and Singh 1997), are accessible over the internet (Ambite and Knoblock 1997), represent and reason with common-sense knowledge (Lenat and Guha 1990), and have brought their developers and users back into the loop (Fischer et al. 1994) to be cooperatively involved in solving problems and evolving new knowledge.

Over the period of three decades, the relatively specific goal of replicating the functionality of human expertise for specific tasks in narrowly defined domains has progressed to the broad field of knowledge systems, which is beginning to integrate the academic research on human knowledge and intelligence, application-oriented developments of the computer and engineering sciences, and the business applications of knowledge systems. Now in the twenty-first century, there are many significant business applications of knowledge systems, for instance in the form of knowledge-management and workflow-management systems, or multiagent systems in intranets and on the internet that are applied for electronic commerce.

Knowledge systems are viewed as artificial agents that have (assigned or attributed) goals and knowledge, and that may interact with other agents in a rational manner. As an alternative to this anthropomorphic view, knowledge systems may also be characterized as a new sophisticated medium that allows humans to publish not only static knowledge (such as in a traditional book) but also knowledge that becomes active and, via the computer, performs actions in an environment that may be remote from the environment of its author (i.e., the human expert of a given subject domain). The human expert may nevertheless remain responsible for these actions. The area of expert system research has become much larger over the years, and philosophical and methodological variety has been added. In combination with the mobility and omnipresence of computers and related new information technologies, such as multimedia systems, the internet and intranets, as well as telematics, the field of expert system research continues to enjoy broad interest from different disciplines and application areas. Expert systems, intelligent artificial agents, or knowledge-management systems, in short knowledge systems, have thus not only become an integral part of modern work environments, with businesses attempting to leverage their knowledge by artificial reasoning systems, but may indeed be one of the key factors shaping the knowledge society of the twenty-first century.

1. Changes In Focus And Emphasis Over Time

In the development of knowledge systems there have been two substantial changes in scientific research, development, empirical tests and practical applications in medicine and business since the 1970s. These two changes define three different generations of expert systems. In the pioneering years, expert systems were developed by rapid prototyping. These systems were typically rule-based systems with a rule interpreter and a set of rules for a given application domain. The developers of the first expert systems were interested in what they saw to be a practical problem. They took professional reasoning in medicine or other areas and practical problem solving, as it had been researched in psychology, as their central examples of intelligent behavior. To obtain the information that was required to build an operational computer program, the developers then adopted interview techniques, protocol analysis (Ericsson and Simon 1984) and information processing psychology (Bruner et al. 1956). Although these systems were fairly successful in a narrow task and application domain, such expert systems were brittle and could hardly be maintained or updated with additional knowledge. The attempts to reuse the inference engine of these systems for different fields of applications (Hayes-Roth et al. 1983) also indicated the rigidity of these systems and their lack of potential to accommodate changes. The hope of building new expert systems by simply reusing the inference engine of a successfully developed expert system and eliciting the rules of a different domain from respective experts did not yield many successes but instead demonstrated the drawbacks of rapid prototyping. Through rapid prototyping, the knowledge base and the inference engine had become attuned to and intertwined with one another, thus limiting the reuse of either of the two components.
The inference engines were therefore not of much use for the efficient development of expert systems in other application domains. This experience led to the conclusion that knowledge acquisition is the bottleneck in expert system development. Knowledge acquisition needed to be more than simply asking human experts for their professional guidelines and operating procedures. This research had shown that the power of an expert system was due to the knowledge it possessed rather than to the particular formalisms and inference schemes which it employed. Therefore, knowledge acquisition had to be viewed as a complex modeling activity, where task and domain models had to be developed as the blueprints for expert systems that could subsequently be implemented.

The second-generation knowledge systems were consequently developed in a top-down fashion, where the modeling of an application domain preceded the implementation of the system itself. Top-down implementation and standard methods of software engineering were now utilized for building knowledge systems. The activities which are involved in the knowledge acquisition for second-generation expert systems are similar to developing a cognitive model of human problem solving, decision making or comprehension. The main difference between knowledge acquisition for expert systems and cognitive modeling concerns the empirical information and data which are analyzed. Whereas data from psychological laboratories are employed for cognitive modeling, professional real-world domains and the respective professional performance are analyzed when developing a knowledge-based system.

The modeling approach to knowledge systems yielded a taxonomy of generic tasks, task structures and problem-solving methods (Breuker and Wielinga 1989, Chandrasekaran and Johnson 1993, Benjamins and Fensel 1998), which include heuristic classification (the implicit task model of MYCIN), cover and differentiate, and skeletal-plan refinement. By applying the task structure of heuristic classification, a diagnosis is obtained by abstracting the available data, associating the abstract data with abstract solutions and refining an abstract solution to a specific solution (i.e., the specific diagnosis which best suits the specific data). In skeletal-plan refinement, an abstract description of the application of several operators and a description of the application conditions are instantiated for a specific problem. Such task structures were collected in libraries of expert models that are maintained in a uniform notation (Breuker and van de Velde 1994). Although knowledge systems using first- and second-generation technology continue to be successful in small- or medium-sized applications, the second-generation research unveiled the frame problem (Clancey 1991) and also problems that concern the handling of complexity. The frame problem shows that any sound knowledge system is a closed system and therefore cannot accommodate those novel circumstances which were not foreseen (explicitly or implicitly) by its designers. Depending on the degree of stability of an application area, unforeseeable changes may occur more or less frequently (new diseases or medication in medicine; new manufacturing materials in engineering, etc.). In the words of Karl Popper, one might say that any knowledge is preliminary and can be falsified by future observations.
The competence of a knowledge-based system is therefore bound by the predictability or rate of unforeseen changes of the given application area, in other words by the quality of the predictions which the designers have indirectly (and mostly unknowingly) built into the system. The processes and conceptual structures of reasonably sized application fields have furthermore been shown to be too complex, so that a knowledge system cannot calculate an answer in due time. Although heuristic processing is frequently used to bypass this problem, the quality of such responses is uncertain.
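The three steps of heuristic classification described above (abstract the data, associate abstract data with an abstract solution, refine to a specific solution) can be sketched as follows. All thresholds, finding names, and solution classes are hypothetical values chosen for illustration and carry no medical authority.

```python
def abstract(data):
    """Step 1, data abstraction: raw measurements become qualitative findings."""
    findings = set()
    if data["temperature_c"] >= 38.0:
        findings.add("fever")
    if data["white_cell_count"] > 11_000:
        findings.add("elevated white cells")
    return findings


# Step 2, heuristic association: abstract findings -> abstract solution class.
# Ordered from most to least specific; the first match wins.
ASSOCIATIONS = [
    ({"fever", "elevated white cells"}, "bacterial infection"),
    ({"fever"}, "unspecified infection"),
]

# Step 3, refinement: abstract solution class -> specific solution,
# selected by one additional observation (here a hypothetical gram stain).
REFINEMENTS = {
    "bacterial infection": {
        "negative": "gram-negative infection",
        "positive": "gram-positive infection",
    },
}


def classify(data):
    findings = abstract(data)
    for required, solution_class in ASSOCIATIONS:
        if required <= findings:
            options = REFINEMENTS.get(solution_class, {})
            specific = options.get(data.get("gram_stain"))
            # Fall back to the abstract class when no refinement applies.
            return specific or solution_class
    return None


diagnosis = classify(
    {"temperature_c": 39.2, "white_cell_count": 15_000, "gram_stain": "negative"}
)
```

The sketch also illustrates the closed-system limitation discussed above: a case whose findings match none of the anticipated associations simply yields `None`.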

The third-generation knowledge systems are consequently focused on issues of stability and change over time and across various communities of practice. Knowledge sharing, knowledge reuse and the knowledge evolution that occur over the years in any field of application have become central topics of research. The technologies and theories that are applied as well as the underlying philosophical assumptions have become more diverse. There are knowledge systems, such as the CYC system (Lenat and Guha 1990), that represent a large body of common-sense knowledge in formal logic, as well as multiagent systems, where each agent is a small expert system that is able to communicate with other agents. In addition, there are cooperative knowledge systems where the seeding, evolutionary growth and reseeding of a knowledge base (Fischer et al. 1994) is used to accommodate the changes that occur in an environment and where a human user is back in the loop of solving problems and evolving new knowledge. Not all systems subscribe to the assumptions of the physical symbol system hypothesis (Newell and Simon 1976); some may instead view a knowledge system as a medium for communication among humans. Despite their diversity in architecture and philosophical assumptions, ranging from the representational view of an objective world to the more relativistic assumptions of situated actions (Suchman 1987, Clancey 1997), the ideas and techniques of such systems are already playing a pivotal role in the knowledge society and the emerging global markets of the future. In sum, research on knowledge systems started out with the assumption of human expertise being best modeled by intelligent computation and progressed through a phase which emphasized modeling and knowledge representation to the issues of context, interaction, change, and multiagent systems.

2. Intellectual Context

Expert system research stands in the intellectual tradition of the cognitive revolution (cf., Gardner 1987) that substantially changed the research paradigms of the behavioral and social sciences in the middle of the twentieth century. As thinking came to be conceptualized as information processing rather than studied through behavioral conditioning, behaviorism was succeeded by information processing psychology. As the newly emerging field of artificial intelligence demonstrated, computer systems were consequently believed to be capable of executing complex cognitive functions. Language understanding (Kintsch 1974, Schank and Abelson 1977) and language production were similarly within reach of becoming mechanized by symbol manipulation processes. The assumption that humans and computer systems have, in principle, the same mental capacities is crisply stated by Newell and Simon’s (1976) physical symbol system hypothesis: ‘a physical symbol system has the necessary and sufficient means for general intelligent action.’ A physical symbol system is thereby a machine such as a computer that operates on symbol structures. The development of knowledge systems for real-world applications amounts to a practical test of this hypothesis (cf., Schmalhofer et al. 1995). From a scientific point of view, expert system research can be understood as an empirical test of the main hypothesis of the cognitive revolution and the cognitive sciences. Although the assumptions of the physical symbol system hypothesis have been criticized in general, it was not before the late 1980s that alternative views were developed and formalized precisely enough to be considered serious competitors.

The paradigms of situated cognition and parallel distributed processing (subsymbolic or connectionist models) challenged the representational assumptions of the expert systems of the first and second generation. Situated cognition asserted that the world would be its own best representation and that representations do not come into existence prior to action and will therefore not guide the actions of a natural system in the manner in which knowledge systems do. Instead, human representations would be more like ossified structures that result from and document previous interactions of an agent with its environment. The connectionist approach, on the other hand, emphasized that human information processing, as it is indicated by the neural structures of the brain and the activity patterns that become visible through brain-imaging procedures (fMRI, etc.), does not occur in a serial and discrete manner, which is characteristic of symbol processing systems, but instead occurs in a parallel and continuous manner. The software systems that were developed under these paradigms thus differ in their fundamental assumptions, architectures, and representational assumptions. As such systems attempted to achieve functionality similar to, but somewhat different from, that of the traditional expert systems, they became competitors of these systems as well as a supplement for their weaknesses. They also yielded an additional fruitful method for modeling human intelligence.

Knowledge system research is also closely related to cognitive modeling, and generally to research in cognitive psychology, where knowledge systems are built so that they would explain and mimic human processes, as they are reified in the results of psychological experiments (cf., Schmalhofer 1998). Cognitive architectures, as they have been proposed by Anderson (1993), Kintsch (1998), and others, provide general but different frameworks for describing complex human information processes in a precise manner. Cognitive psychology has adopted many of the representational assumptions which resulted from the expert and knowledge systems research as psychological constructs for explaining human behavior. Examples are semantic nets and production systems which represent the declarative and procedural knowledge in human information processing.
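As a small illustration of how a semantic net can hold declarative knowledge, the following sketch stores concepts as nodes with properties and lets a concept inherit properties along `is_a` links. The concepts and property names are a hypothetical textbook-style example and are not taken from any of the cited architectures.

```python
# Nodes map to their local properties; "is_a" points to the parent concept.
SEMANTIC_NET = {
    "canary": {"is_a": "bird", "color": "yellow"},
    "bird": {"is_a": "animal", "can_fly": True},
    "animal": {"breathes": True},
}


def lookup(node, attribute, net=SEMANTIC_NET):
    """Return an attribute stored on the node or inherited via is_a links."""
    while node is not None:
        props = net.get(node, {})
        if attribute in props:
            return props[attribute]
        node = props.get("is_a")  # climb to the more general concept
    return None  # nothing is known about this attribute


canary_breathes = lookup("canary", "breathes")  # inherited from "animal"
```

Procedural knowledge, by contrast, would be expressed as production rules that test and modify such structures.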

The discussion of how symbols are and become grounded in the environment (Harnad 1990) has further led to the distinction between knowledge systems as an agent architecture and knowledge systems as a document architecture or medium for communicating (rather than emulating human intelligence). Another scientific debate, initiated by the philosopher John Searle, asserted that the manipulation of symbols is not sufficient for intelligence, which instead requires intrinsic intentionality (and that the symbol structures need to be grounded in their environment). Brooks (1991) suggested that intelligent systems need not have representations. By developing systems whose behavior was grounded in the environment, he could develop an intelligent creature that did not contain an explicit knowledge representation. Minsky (1990), on the other hand, sees ‘symbolness’ as a matter of degree. When comparing connectionist and symbolic formalisms, Minsky concludes that mental architectures need both kinds.

Minsky (1986) has proposed modeling mind as a society of agents, composed of simpler agents all the way down. The society of mind blurs together all of the issues of communication and computation. In a society model the interactions among high-level agents may be more akin to communication between separate beings while symbolic interactions between low-level agents may be more akin to computation. This model also challenges another sharp distinction, namely the distinction between symbols used externally between agents (such as in natural language) and the symbols used internally within the mind of each agent. Indeed, people build knowledge bases collaboratively. They discuss and agree about the meanings of symbols in the knowledge bases. They design the behavior of the systems around the agreed upon meanings of the symbols. Viewed in this way, knowledge bases are like blackboards or paper. They are a medium, a place for writing symbols. They augment human memory and human processing with external memory and external processing. Whereas traditional expert systems are very knowledge centric, in the real world knowledge is distributed. In a university or in a business firm, knowledge is distributed across different schools or different departments. The knowledge to perform a task is therefore often spread out among the people who are involved in these tasks.

3. Issues And Problems

Putting knowledge into computers raises many fundamental questions, some of which have already been treated by philosophers for more than 2,000 years: What is knowledge? Where does it come from? How is it created? How is it held by computers? How is it updated, disseminated, and managed? Through their practical proof of existence, expert systems gave a very specific answer to these questions that is based on a representational theory of mind and more specifically on the physical symbol system hypothesis. As Stefik (1995, p. 21) points out, the essence of this hypothesis is that ‘intelligent systems are subject to natural laws and that the natural laws of intelligence are about symbol processing.’ A symbol can be read, recognized, and written by a recognizer. A physical symbol system is a machine such as a computer that operates on symbol structures. What a symbol stands for is, however, not determined by a symbol’s encoding or by its location because designation is not a physical property of the markings but occurs relative to an observer, namely in the mind of the observer (cf., Kidd 1994). Such a connection between a phenomenon at a specific place and time and a distant symbol is called a causal coupling. Because of the existing distance, it is important to distinguish the symbol system from its environment. From a psychological perspective one may ask under what conditions two people may disagree about what a symbol represents. When certain patterns are said to be physical symbols, there must always be a recognizer that identifies the symbols. ‘Physical’ means that a symbol has a tangible existence. But giving an account of what a symbol represents requires a vantage point, usually outside the symbol system. The tangible knowledge is thus to a certain degree subjective. 
Although the explicit representations and reasoning methods used in knowledge systems make knowledge tangible, some knowledge may also be intangible and bound to individuals (cf., Nonaka and Takeuchi 1995).

4. Emphasis In Current Research And Future Directions

Research on expert and knowledge systems since the 1970s has produced at least three distinct facets. Technology-oriented research is focused on the development of domain-specific knowledge representations and formal ontologies (Gruber 1993), multiagent systems (Huhns and Singh 1997), and collaborative environments for knowledge work (Domingue and Scott 1998). An ontology is thereby defined as an agreement about a shared conceptualization, which includes frameworks for modeling domain knowledge and agreements about the representation of particular domain theories. The denotations of entities in some universe of discourse (e.g., the denotations of classes, relations, functions, or objects) are thereby associated with human-readable text describing what they mean. Formal axioms may furthermore constrain the interpretation and enforce a well-formed use of these denotations. A shared ontology (cf., van Heijst 1995) will thus help to develop a shared virtual world (e.g., the world-wide web, or the intranets and extranets of a business enterprise) in which knowledge systems can ground their beliefs and actions. These knowledge systems may jointly form multiagent systems and have a basis for cooperating and competing with one another (Schmalhofer and van Elst 1999). Knowledge systems, intelligent agents, or Webbots may therefore live and be grounded in a (virtual) environment. The actions performed by one agent constrain and are constrained by the actions of other agents. The issues of organizations are thereby becoming more important: Organizations are systems that constrain the actions of member agents by imposing mutual obligations and permissions. Typically, each agent has incomplete knowledge and capabilities for solving a problem and has its own goals and viewpoint; knowledge thus becomes decentralized, and computation becomes asynchronous. To allow agents to interoperate, communication languages such as KQML (Finin et al. 1997) have been developed.
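The following sketch makes two of these ideas concrete: an ontology whose terms carry human-readable descriptions and are constrained by a simple axiom, and a KQML-style message in which one agent queries another with reference to that ontology. The term names, the signature check, the agent names, and the `kqml` helper are all hypothetical; the message only imitates the general s-expression shape of KQML (a performative followed by `:keyword value` pairs) and is not a complete implementation of the language.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Term:
    name: str
    kind: str  # "class" or "relation"
    doc: str   # human-readable text describing what the term means


# A toy shared ontology: denotations paired with their descriptions.
ONTOLOGY = {
    t.name: t for t in [
        Term("Patient", "class", "A person receiving medical care."),
        Term("Drug", "class", "A substance prescribed as treatment."),
        Term("prescribed", "relation", "Links a patient to a drug."),
    ]
}

# A simple formal axiom: each relation accepts only these argument classes.
SIGNATURES = {"prescribed": ("Patient", "Drug")}


def well_formed(relation, arg_classes):
    """Check that a statement uses a relation as the ontology allows."""
    term = ONTOLOGY.get(relation)
    if term is None or term.kind != "relation":
        return False
    return SIGNATURES.get(relation) == tuple(arg_classes)


def kqml(performative, **params):
    """Render a KQML-style message as an s-expression string."""
    fields = " ".join(f":{k.replace('_', '-')} {v}" for k, v in params.items())
    return f"({performative} {fields})"


message = kqml(
    "ask-one",
    sender="agent-a",
    receiver="agent-b",
    ontology="toy-medical",
    content='"(prescribed ?patient ?drug)"',
)
```

Because both agents refer to the same ontology name, the receiver can interpret `prescribed` in the content exactly as the sender intended, which is the grounding role a shared ontology plays in the text above.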

The application of knowledge systems is focused on the management of knowledge in businesses and industries. Knowledge-management systems provide the mechanisms to distribute the right knowledge to the right people at the right time. Intelligent agents and multiagent systems carry much promise for the application of knowledge systems in electronic commerce. There is now a larger emphasis on the human and organizational issues related to knowledge. For the emerging knowledge society, knowledge systems will undoubtedly play a pivotal role: Imagine thousands of individuals and groups preparing and making available by gift or sale portions of a large, common distributed knowledge base or knowledge, in the same way as people write textbooks. Knowledge systems will probably become a central component of the blending of computers, televisions, and books into new, integrated forms that are changing the ways in which we work, play, and learn. Finally, for psychological research on human cognition and human intelligence, knowledge systems hold the promise of unifying the various empirical and experimental findings across different research paradigms (Newell 1990). This research will continue to unravel the similarities and differences between artificial and biological intelligence, resulting in a deeper understanding of the logical and biological essence of intelligence.

Bibliography:

- Ambite J L, Knoblock C A 1997 Agents for information gathering. IEEE Expert 12: 2–4

- Anderson J R 1993 Rules of the Mind. Erlbaum, Hillsdale, NJ

- Benjamins V R, Fensel D 1998 Special Issue on Problem Solving Methods. International Journal of Human–Computer Studies 49(4): 305–650

- Boose J H, Gaines B R 1989 Knowledge acquisition for knowledge-based systems: notes on the state-of-the-art. Machine Learning 4: 377–94

- Breuker J A, van de Velde W (eds.) 1994 The CommonKADS Library of Expertise Modeling. IOS, Amsterdam

- Breuker J A, Wielinga W J 1989 Model driven knowledge acquisition. In: Guida P, Tasso G (eds.) Topics in the Design of Expert Systems. North-Holland Elsevier, Amsterdam, pp. 265–96

- Brooks R A 1991 Intelligence without representation. Artificial Intelligence 47: 139–59

- Bruner J S, Goodnow J J, Austin G A 1956 A Study of Thinking. Wiley, New York

- Buchanan B G, Shortliffe E H 1984 Rule-based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project. Addison-Wesley, Reading, MA

- Chandrasekaran B, Johnson T R 1993 Generic tasks and task structures: history, critique and new directions. In: David J M, Krivine J P, Simmons R (eds.) Second Generation Expert Systems. Springer, Berlin

- Clancey W J 1985 Heuristic classification. Artificial Intelligence 27: 289–350

- Clancey W J 1991 The frame of reference problem in the design of intelligent machines. In: VanLehn K (ed.) Architectures for Intelligence. The Twenty-second Carnegie-Mellon Symposium on Cognition. Erlbaum, Hillsdale, NJ, pp. 357–423

- Clancey W J 1997 Situated Cognition: on Human Knowledge and Computer Representations. Cambridge University Press, New York

- Domingue J, Scott P 1998 KMI Planet: putting the knowledge back into media. In: Eisenstadt M, Vincent T (eds.) The Knowledge Web: Learning and Collaborating on the Net. Kogan, pp. 173–84

- Duda R O, Reboh R 1984 Artificial intelligence and decision making: the PROSPECTOR experience. In: Reitman W (ed.) Artificial Intelligence Applications for Business. Ablex, Norwood, NJ, pp. 111–47

- Engelman C 1971 The legacy of MATHLAB 68. In: Proceedings of the Second Symposium on Symbolic and Algebraic Manipulation, Los Angeles

- Ericsson K A, Simon H A 1984 Protocol Analysis. Verbal Reports as Data. MIT Press, Cambridge, MA

- Erman L D, Hayes-Roth F, Lesser V R, Reddy D 1980 The Hearsay-II speech-understanding system: integrating knowledge to resolve uncertainty. Computing Surveys 12(2): 213–53

- Finin T, Labrou Y, Mayfield J 1997 KQML as an Agent Communication Language. In: Bradshaw J (ed.) Software Agents. MIT Press, Cambridge, MA

- Fischer G, McCall R, Ostwald J, Reeves B, Shipman R 1994 Seeding, evolutionary growth and reseeding: supporting the incremental development of design environments. In: Proceedings of CHI-94: Human Factors in Computing Systems, Boston, MA, April 24–28 1994. ACM, New York, pp. 292–8

- Gardner H 1987 The Mind’s New Science: a History of the Cognitive Revolution. Basic Books, New York

- Gruber Th 1993 Towards principles for the design of ontologies used for knowledge sharing. In: Guarino N, Poli R (eds.) Formal Ontologies in Conceptual Analysis and Knowledge Representation. Kluwer, Boston

- Harnad S 1990 The symbol grounding problem. Physica D 42: 335–46

- Hayes-Roth F, Waterman D A, Lenat D B 1983 Building Expert Systems. Addison-Wesley, Reading, MA

- Huhns M N, Singh M P 1997 Readings in Agents. Morgan Kaufmann, San Francisco

- Kidd A 1994 The marks are on the knowledge worker. In: Proceedings of CHI-94: Human Factors in Computing Systems, Boston, MA, April 24–28 1994. ACM, New York, pp. 186–91

- Kintsch W 1974 The Representation of Meaning in Memory. Erlbaum, Hillsdale, NJ

- Kintsch W 1998 Comprehension: A Paradigm for Cognition. Cambridge University Press, Cambridge, UK

- Lenat D B, Guha R V 1990 Building Large Knowledge-Based Systems. Representation and Inference in the Cyc Project. Addison-Wesley, Reading, MA

- Lindsay R, Buchanan B G, Feigenbaum E A, Lederberg J 1980 DENDRAL. McGraw-Hill, New York

- Marcus S, McDermott J 1989 SALT: a knowledge-acquisition language for propose-and-revise systems. Artificial Intelligence 39: 1–37

- McDermott J 1982 R1: A rule-based configurer of computer systems. Artificial Intelligence 19: 39–88

- Miller R A, Pople H E, Myers J D 1982 Internist-1: an experimental computer-based diagnostic consultant for general internal medicine. New England Journal of Medicine 307: 468–76

- Minsky M 1986 Society of Mind. Simon and Schuster, New York

- Minsky M 1990 Logical vs. analogical or symbolic vs. connectionist or neat vs. scruffy. In: Winston P H, Shellard S A (eds.) Artificial Intelligence at MIT: Expanding Frontiers. MIT Press, Cambridge, MA, pp. 218–43

- Moses J 1975 A MACSYMA Primer. Mathlab Memo No. 2, Computer Science Laboratory. Massachusetts Institute of Technology, Cambridge, MA

- Newell A 1982 The knowledge level. Artificial Intelligence 18: 87–127

- Newell A 1990 Unified Theories of Cognition. Harvard University Press, Cambridge, MA

- Newell A, Simon H A 1976 Computer science as empirical enquiry: symbols and search. Communications of the ACM 19: 113–26

- Nonaka I, Takeuchi H 1995 The Knowledge-creating Company. Oxford University Press, Oxford, UK

- Schank R C, Abelson R 1977 Scripts, Plans, Goals and Understanding. Erlbaum, Hillsdale, NJ

- Schmalhofer F 1998 Constructive Knowledge Acquisition: A Computational Model and Experimental Evaluation. Erlbaum, Mahwah, NJ

- Schmalhofer F, Reinartz R, Tschaitschian B 1995 A unified approach to learning in complex real world domains. Applied Artificial Intelligence 9: 127–56

- Schmalhofer F, van Elst L 1999 An oligo-agents system with shared responsibilities for knowledge management. In: Fensel D, Studer R (eds.) Knowledge Acquisition, Modeling and Management. Springer, Berlin, pp. 379–84

- Stefik M 1995 Introduction to Knowledge Systems. Morgan Kaufmann, San Francisco

- Suchman L 1987 Plans and Situated Actions: The Problem of Human–Machine Communication. Cambridge University Press, Cambridge

- van Heijst G 1995 The role of ontologies in knowledge engineering. Doctoral dissertation, University of Amsterdam

- Weizenbaum J 1965 ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM 9(1): 36

- Wolfram D A 1995 An appraisal of Internist-1. Artificial Intelligence in Medicine 7: 93–116