Sample Computational Neuroscience Research Paper

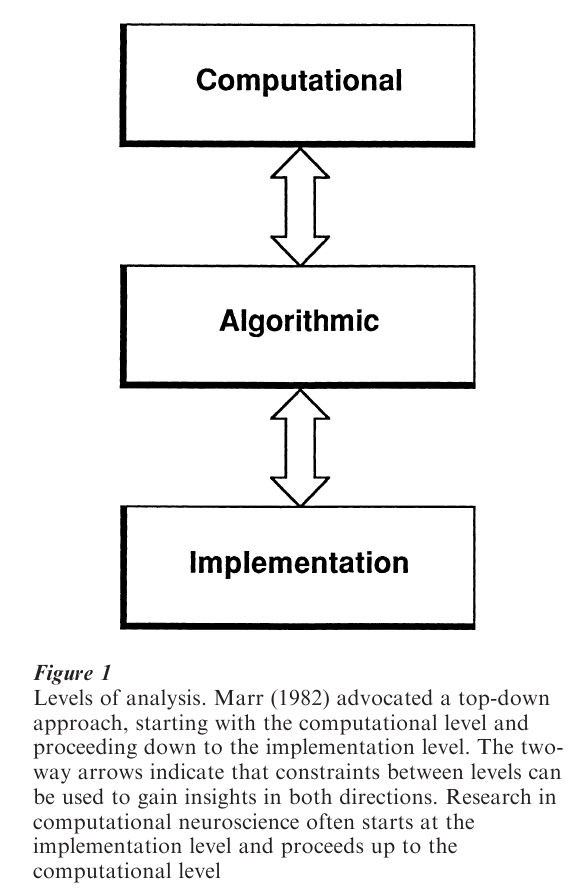

The term ‘computation’ in computational neuroscience refers to the way that brains process information. Many different types of physical systems can solve computational problems, including slide rules and optical analog analyzers as well as digital computers, which are analog at the level of transistors and must settle into a stable state on each clock cycle. What these have in common is an underlying correspondence between an abstract computational description of a problem, an algorithm that can solve it, and the states of the physical system that implement it (Fig. 1). This is a broader approach to computation than one that is based purely on symbol processing (Churchland and Sejnowski 1992, Arbib 1995).

There is an important distinction between general-purpose computers, which can be programmed to solve many different types of problems, and special-purpose computers, which are designed to solve only a limited range of problems. Most neural systems are specialized for particular tasks; an example is the retina, which is dedicated to visual transduction and image processing. As a consequence of the close coupling between structure and function, the anatomy and physiology of a brain area can provide important clues to the algorithms that it implements and its computational function (Fig. 1); in contrast, the hardware of a general-purpose computer may not reveal its function, which also depends on software.

Another major difference between brains and general-purpose digital computers is that computers are hardwired, but the connectivity between neurons and their properties is shaped by the environment during development and remains plastic even in adulthood. Thus, as the brain processes information, it changes its own structure in response to the information that is being processed. Adaptation and learning are important mechanisms that allow brains to respond flexibly as the world changes, on a wide range of time scales from milliseconds to years. The flexibility of the brain confers a survival advantage when the environment is nonstationary, and the evolution of some cognitive skills may depend deeply on genetic processes that have extended the time scales for brain plasticity.

Brains are complex, nonlinear dynamical systems with feedback loops, and brain models provide intuition about the possible behaviors of such systems. The predictions of a model make explicit the consequences of the underlying assumptions, and comparison with experimental results can lead to new insights and discoveries. Emergent properties of neural systems, such as oscillatory behaviors, depend on both the intrinsic properties of the neurons and the pattern of connectivity between them. For example, the large-scale coherent brain rhythms that accompany different states of alertness and sleep arise from intrinsic properties of thalamic and cortical neurons that are reciprocally connected (Destexhe and Sejnowski 2001).

1. Models At Different Levels Of Detail

1.1 Realistic Models

Perhaps the most successful model at the level of the neuron has been the classic Hodgkin–Huxley model of the action potential in the giant axon of the squid (Koch and Segev 1998). Data were first collected under a variety of conditions, and a model was later constructed to integrate the data into a unified framework. This type of model requires that most of the variables in the model have been measured experimentally, so that only a few unknown parameters need to be fitted to the data. Detailed models can be used to distinguish between different explanations of the data. In the classic model of a neuron, information flows from the dendrites, where synaptic signals are integrated, to the soma of the neuron, where action potentials are initiated and carried to other neurons through long axons. In these models, the dendrites are passive cables, but recently, voltage-dependent sodium, calcium, and potassium channels have been observed in the dendrites of cortical neurons, which greatly increase the complexity of synaptic integration (Stuart et al. 1999). Experiments and models have shown that these active currents can carry information in a retrograde direction from the cell body back to the distal synapses of the dendritic tree. Thus, it is possible for spikes in the soma to affect synaptic plasticity through the mechanisms discussed in Sect. 2.
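To make the structure of a Hodgkin–Huxley-type model concrete, the following is a minimal sketch of a single-compartment simulation. The parameter values and rate functions are the commonly quoted textbook ones (with the resting potential near -65 mV) and are assumptions of this sketch, not values given in the text; the forward Euler integration and the function names `rates` and `simulate` are likewise illustrative choices.

```python
import math

# Commonly quoted textbook parameters (assumed here, not taken from the text):
# conductances in mS/cm^2, potentials in mV, capacitance in uF/cm^2.
C_M, G_NA, G_K, G_L = 1.0, 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.387

def rates(v):
    """Opening (alpha) and closing (beta) rates in 1/ms for gates m, h, n.

    The m and n expressions are singular at exactly v = -40 and v = -55;
    this sketch does not guard against that measure-zero case.
    """
    a_m = 0.1 * (v + 40.0) / (1.0 - math.exp(-(v + 40.0) / 10.0))
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * (v + 55.0) / (1.0 - math.exp(-(v + 55.0) / 10.0))
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return (a_m, b_m), (a_h, b_h), (a_n, b_n)

def simulate(i_ext, t_max=50.0, dt=0.01):
    """Integrate the membrane equation with forward Euler.

    i_ext is a constant injected current (uA/cm^2); returns the voltage trace (mV).
    """
    v = -65.0
    # Start each gate at its steady-state value for the initial voltage.
    m, h, n = (a / (a + b) for a, b in rates(v))
    trace = []
    for _ in range(int(t_max / dt)):
        (a_m, b_m), (a_h, b_h), (a_n, b_n) = rates(v)
        i_na = G_NA * m**3 * h * (v - E_NA)   # sodium current
        i_k = G_K * n**4 * (v - E_K)          # potassium current
        i_l = G_L * (v - E_L)                 # leak current
        v += dt * (i_ext - i_na - i_k - i_l) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        trace.append(v)
    return trace

spiking = simulate(10.0)   # sustained current: action potentials
resting = simulate(0.0)    # no current: membrane stays near rest
```

In this sketch a sustained current of 10 µA/cm² drives the membrane potential above 0 mV during each action potential, whereas with no injected current it stays near rest. Almost every quantity in the model corresponds to something measurable, which is the sense in which only a few parameters need to be fitted.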

Realistic models with several thousand cortical neurons can be explored on the current generation of workstations. The first model for the orientation specificity of neurons in the visual cortex was the ‘feed-forward’ model proposed by Hubel and Wiesel, which assumed that the orientation preference of cortical cells was determined primarily by converging inputs from thalamic relay neurons. Although there is solid experimental evidence for this model, local cortical circuits have been shown to be important in amplifying weak signals and suppressing noise, as well as performing gain control to extend the dynamic range. Models that include these recurrent circuits are governed by the type of attractor dynamics that was analyzed by John Hopfield (1982), who provided a conceptual framework for the dynamics of feedback networks.
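The idea of attractor dynamics can be illustrated with a very small Hopfield-style network. The sketch below is a toy with binary units and an outer-product (Hebbian) weight rule, not a model of cortical circuitry; the function names `train_hopfield` and `recall` and the eight-unit pattern are hypothetical choices made for illustration.

```python
def train_hopfield(patterns):
    """Build symmetric weights from +1/-1 patterns using the outer-product rule."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, state, sweeps=5):
    """Update units one at a time; each unit takes the sign of its summed input."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

stored = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hopfield([stored])

noisy = list(stored)
noisy[0] = -noisy[0]          # corrupt one unit
recovered = recall(w, noisy)  # the state falls back into the stored attractor
```

Starting from the corrupted state, the feedback connections pull the network back to the stored pattern, which is the sense in which a stored pattern acts as an attractor.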

Although the spike train of cortical neurons is highly irregular, and is typically treated statistically, information may be contained in the timing of the spikes in addition to the average firing rate. This has already been established for a variety of sensory systems in invertebrates and peripheral sensory systems in mammals (Rieke et al. 1996), but whether spike timing carries information in cortical neurons is an open research issue. In addition to representing information, spike timing could also be used to control synaptic plasticity through Hebbian mechanisms, as discussed in Sect. 2.

1.2 Signal Processing Models

Other types of models have been used to analyze experimental data in order to determine whether they are consistent with a particular computational assumption. For example, a ‘vector averaging’ technique has been used to compute the direction of arm motion from the responses of cortical neurons (Georgopoulos et al. 1986), and signal detection theory was used to analyze the information from cortical neurons responding to visual motion stimuli (Movshon and Newsome 1996). Bayesian methods can effectively decode the position of a rat in a maze from place cells recorded in the hippocampus (Zhang et al. 1998). In these examples, the computational model was used to explore the information in the data but was not meant to be a model for the actual cortical mechanisms. Nonetheless, these models have been highly influential and have provided new ideas for how populations of neurons may represent sensory information and motor commands.
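The vector averaging idea can be sketched in a few lines. The example assumes a hypothetical population of eight neurons with evenly spaced preferred directions and cosine tuning around a baseline rate; the tuning curve, the baseline, and the function name `population_vector` are assumptions of this sketch rather than details taken from the cited study.

```python
import math

def population_vector(preferred_dirs, rates, baseline):
    """Sum the preferred-direction unit vectors, weighted by rate above baseline."""
    x = sum((r - baseline) * math.cos(d) for d, r in zip(preferred_dirs, rates))
    y = sum((r - baseline) * math.sin(d) for d, r in zip(preferred_dirs, rates))
    return math.atan2(y, x)  # decoded direction in radians

# Hypothetical population: preferred directions evenly spaced around the circle.
n = 8
preferred = [2.0 * math.pi * k / n for k in range(n)]

# Simulated firing rates for a movement at 60 degrees (cosine tuning assumed).
true_dir = math.radians(60.0)
baseline, gain = 20.0, 15.0
rates = [baseline + gain * math.cos(d - true_dir) for d in preferred]

decoded = population_vector(preferred, rates, baseline)
```

With noiseless cosine tuning and evenly spaced preferred directions, the decoded direction matches the true one. As the text notes, this is a way of reading out the information in the data, not a claim about how the cortex itself performs the computation.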

Neural network or ‘connectionist’ models that simplify the intrinsic properties of neurons can be helpful in understanding the computational consequences of information contained in large populations of neurons. An example of this approach is a recent model of parietal cortex based on the response properties of cortical neurons (Pouget and Sejnowski 2001). The parietal cortex is involved in representing the spatial location of objects in the environment and computing transformations from sensory to motor coordinates. The model examined the issue of which reference frames are used in the cortex for performing these transformations. The model predicted the outcomes of experiments performed on patients with spatial neglect following lesions of the parietal cortex.

Cognitive functions such as attention have also been modeled. Francis Crick (1994) proposed that the relay cells in the thalamus may be involved in attention, and provided an explanation for how this could be accomplished based on the anatomy of the thalamus. Models of competition between neurons in cortical circuits can explain many properties of single cortical neurons in awake, behaving monkeys during attention tasks (Reynolds et al. 1999).

Finally, small neural systems have been analyzed with dynamical systems theory (Harris-Warrick et al. 1995). This approach is feasible when the numbers of parameters and variables are small. Most models of neural systems involve a large number of variables, such as membrane potentials, firing rates, and concentrations of ions, with an even greater number of unknown parameters such as synaptic strengths, rate constants, and ionic conductances. Where the number of neurons and parameters is very large, techniques from statistical physics become applicable in predicting the average behavior of large systems (Van Vreeswijk and Sompolinsky 1998). There is a midrange of system sizes where neither type of limiting analysis is possible, but where simulations can be performed (Bower and Beeman 1998). One danger of relying solely on computer simulations is that they may be as complex and difficult to interpret as the biological system itself.

2. Learning And Memory

One of the goals of computational neuroscience is to understand how long-term memories are formed through experience and learning. There is increasing experimental evidence that the strengths of interactions between neurons can be altered by activity, a phenomenon called synaptic plasticity. For example, high-frequency trains of stimuli at synapses in the hippocampus induce a form of long-term potentiation (LTP) that can last for days (Bliss and Lomo 1973). Moreover, potentiation at these synapses requires simultaneous presynaptic activity and postsynaptic depolarization (Kelso et al. 1986), as suggested by Hebb (1949). Models of associative memory that incorporate Hebbian synaptic plasticity have been widely explored (Anderson and Hinton 1981).

Hebbian synaptic plasticity can also be used to form maps and has been used to model the early development of the projection from the thalamus to the visual cortex. In particular, these models can explain why inputs from the right and left eyes form alternating stripes in primary visual cortex of cats and monkeys, called ocular dominance columns (Miller et al. 1989). Specific mappings arise in the cortex because temporal contiguity in axonal firing is translated into spatial contiguity of synaptic contacts.

The change in the strength of synapses in the hippocampus and neocortex depends on the relative timing of spikes in the presynaptic neuron and the postsynaptic neuron. Reliable LTP occurs when the presynaptic stimulus precedes the postsynaptic spike, but there is long-term depression (LTD) when the presynaptic stimulus immediately follows the postsynaptic spike (Markram et al. 1997, Bi and Poo 1998). This temporal asymmetry in synaptic plasticity solves the problem of balancing LTD and LTP, since chance coincidences should occur about equally with positive and negative relative time delays. When sequences of inputs are repeated in a network of neurons with recurrent excitatory connections, temporally asymmetric synaptic plasticity will learn the sequence and the pattern of activity in the network will tend to predict future inputs. There is evidence for this in the hippocampus where place cells representing nearby locations in a maze may be linked together (Blum and Abbott 1996), and in visual cortex where simulations of cortical neurons can become directionally selective when exposed to moving visual stimuli (Rao and Sejnowski 2000).
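The temporal asymmetry described above is often summarized by a plasticity window that gives the change in synaptic strength as a function of the delay between presynaptic and postsynaptic spikes. The sketch below assumes an exponential window with equal amplitudes for potentiation and depression; that functional form, the parameter values, and the name `stdp_dw` are illustrative assumptions, not quantities reported in the text.

```python
import math

def stdp_dw(dt_ms, a_plus=0.01, a_minus=0.01, tau_ms=20.0):
    """Weight change for a spike pair with delay dt_ms = t_post - t_pre.

    Positive delays (pre before post) potentiate; negative delays depress.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0

ltp = stdp_dw(10.0)    # presynaptic spike 10 ms before the postsynaptic spike
ltd = stdp_dw(-10.0)   # presynaptic spike 10 ms after the postsynaptic spike

# Chance coincidences: delays spread evenly over positive and negative values.
chance_total = sum(stdp_dw(float(dt)) for dt in range(-50, 51))
```

Under these assumptions, causally ordered spike pairs strengthen the synapse, reversed pairs weaken it, and delays that are equally likely to be positive or negative produce no net change, which is the balancing argument made in the paragraph above.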

The temporally asymmetric Hebbian learning rule can be used to implement the temporal difference learning algorithm in reinforcement learning and classical conditioning (Montague and Sejnowski 1994, Sutton and Barto 1998, Rao and Sejnowski 2000). The conditioned stimulus in a classical conditioning experiment must occur before the reward for the stimulus–reward association to occur. This is reflected in the temporal difference learning algorithm by a postsynaptic term that depends on the time derivative of the postsynaptic activity level. The goal is for the synaptic input to predict future reward: if the reward is greater than predicted, the postsynaptic neuron is depolarized and the synapse strengthens, but if the reward is less than predicted, the postsynaptic neuron is hyperpolarized and the synapse weakens. There is evidence that in primates the transient output from dopamine neurons in the ventral tegmental area carries information about the reward predicted from a sensory stimulus (Schultz et al. 1997), and in bees, an octopaminergic neuron has a similar role (Hammer and Menzel 1995).
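A minimal sketch of temporal difference learning in a conditioning trial may help to fix ideas. It assumes a simple representation with one prediction weight per time step after stimulus onset; the trial layout, the learning rate, and the function name `run_td` are hypothetical choices, and the code is not the specific model of any of the cited papers.

```python
def run_td(trials, steps=10, cs_time=2, reward_time=7, alpha=0.3, gamma=1.0):
    """Run TD(0) over repeated trials; return the predictions and the
    prediction errors from the final trial."""
    v = [0.0] * (steps + 1)   # predicted future reward at each time step
    deltas = []
    for _ in range(trials):
        deltas = []
        for t in range(steps):
            r = 1.0 if t == reward_time else 0.0
            # No prediction is available before the stimulus has appeared.
            v_now = v[t] if t >= cs_time else 0.0
            v_next = v[t + 1] if t + 1 >= cs_time else 0.0
            # Prediction error: reward plus the change in predicted reward.
            delta = r + gamma * v_next - v_now
            if t >= cs_time:
                v[t] += alpha * delta
            deltas.append(delta)
    return v, deltas

_, first = run_td(trials=1)      # naive: error occurs at the reward
v, trained = run_td(trials=200)  # trained: error occurs at stimulus onset
```

On the first trial the prediction error appears when the reward is delivered. After training, the reward is fully predicted and the error moves to the step at which the stimulus first appears (index `cs_time - 1` in this sketch), which resembles the shift in the transient dopamine response described above.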

The temporal window for classical conditioning is several seconds, much longer than the window for LTP/LTD observed at cortical and hippocampal synapses. A circuit of neurons in the basal ganglia and frontal cortex may be needed to extend the computation of temporal differences to these long time intervals (Berns and Sejnowski 1998). It is surprising to find the same learning algorithm in different types of learning systems in different parts of the brain. This suggests that the temporal order of input stimuli is a useful source of information about causal dependence in many different learning contexts and over a range of time scales.

3. Future Directions

3.1 From Molecules To Synapses

Almost all of the proteins that make up synapses are now known, and the sequencing of the human genome will make it possible to complete that list within the next few years. Coupled with high-voltage electron microscopy and methods for labeling these proteins, it should be possible to develop a reasonably complete model for the neuromuscular junction and central synapses in a few more years. This will allow us to understand the synapse as a molecular machine and to understand the mechanisms that are involved in synaptic plasticity (building on a large existing body of research, for which the Nobel Prize in Medicine was given to Arvid Carlsson, Paul Greengard and Eric Kandel in 2000). It is highly likely that advances in modeling and computational theory at this level will have direct impact on the pharmaceutical industry through the design of new drugs and new approaches to mental disorders.

3.2 From Neurons To Networks

Progress in this area has been slower because experimental techniques for recording from many neurons simultaneously still lag behind. However, the advent of large electrode arrays and optical recording techniques will make rapid progress possible, so that before long we should have a reasonable understanding of neural circuits in simple creatures and some parts of the vertebrate brain. Computer models are being used to understand how the intrinsic properties of neurons and the synapses that join them produce complex spatiotemporal patterns of activity and how these networks are used to encode, store and retrieve information in large neural systems such as cortical columns. Advances at this level could lead to a new generation of pattern recognition systems and sensorimotor control systems for robotic devices.

3.3 From Maps To Systems

The major breakthrough that has occurred recently in human brain imaging, primarily with the development of functional magnetic resonance imaging, is allowing rapid progress to be made in localizing cognitive functions within the human brain. Magnetic resonance technology is still at an early stage of development and new approaches, such as magnetic resonance spectroscopy, should make it possible to observe biochemical reactions occurring within the brain. However, until the resolution of these new techniques is improved, or they can be integrated with older techniques such as electroencephalography (EEG) and magnetoencephalography (MEG), it will not be possible to uncover the mechanisms that underlie the activity that is observed with functional imaging. It may take decades before techniques mature to the point where we can begin to understand the large-scale organization of primate brains. However, even before this, significant progress will be made using invasive techniques in nonhuman primates and other mammals. Understanding of brains at this level will provide the ultimate insights into major philosophical questions about consciousness and autonomy (Crick 1994).

3.4 Technology For Brain Modeling

Do new properties emerge as the number of neurons in a neural system becomes large? This question can be explored with large simulations of millions of neurons. Parallel computers have become available that permit massively parallel simulations, but the difficulty of programming these computers has limited their usefulness. An approach to massively parallel models introduced by Carver Mead is based on subthreshold CMOS VLSI (Very Large Scale Integrated) circuits with components that directly mimic the analog computational operations in neurons. Several large silicon chips have been built that mimic the visual processing found in retinas. Analog VLSI cochleas have also been built that can analyze sound in real time. Analog VLSI chips have been built that mimic the detailed biophysical properties of neurons, including dendritic processing and synaptic conductances (Douglas et al. 1995). These chips use analog voltages and currents to represent the signals, and are extremely efficient in their use of power compared to digital VLSI chips. A new branch of engineering called neuromorphic engineering has arisen to exploit this technology.

4. Conclusions

Although brain models are now routinely used as tools for interpreting data and generating hypotheses, we are still a long way from coming up with explanatory theories of brain function. For example, despite the relatively stereotyped anatomical structure of the cerebellum, we still do not understand its computational functions. Recent evidence from functional imaging of the cerebellum suggests that the cerebellum is involved in higher cognitive functions and is not just a motor controller. Modeling studies may help in exploring these competing hypotheses. This has already occurred in the oculomotor system, which has a long tradition of using control theory models to guide experimental studies.

Digital computers have been increasing in power exponentially from around 1950 when the first machines, built from vacuum tubes and mercury delay lines, were introduced. The number of operations per second roughly doubles every three years. This vast increase in computer power, or, conversely, decrease in the cost of computing and data storage, has had a major impact on the study of the brain and on the development of brain models and theories. For example, functional magnetic resonance imaging, which has made it possible to study human brain activity non-invasively, would not have been feasible without fast digital computers that direct the pulse sequences, data acquisition and data analysis. The increasing power of computers is transforming our ability to analyze complex neural systems at many levels of investigation and will have far-reaching consequences on society.

It is very important to note that, at this stage in our understanding of the brain, a model should only be considered a provisional framework for organizing thinking. Many partial models need to be explored at many different levels of investigation, each model focusing on a different scientific question. As computers become faster, and as software tools become more flexible, computational models should proliferate. Close collaborations between modelers and experimentalists, facilitated by the internet, should lead to an increasingly better understanding of the brain as a computational system.

Bibliography:

- Anderson J A, Hinton G E (eds.) 1981 Parallel Models of Associative Memory. Lawrence Erlbaum, Hillsdale, NJ

- Arbib M A 1995 The Handbook of Brain Theory and Neural Networks. MIT Press, Cambridge, MA

- Berns G S, Sejnowski T J 1998 A computational model of how the basal ganglia produce sequences. Journal of Cognitive Neuroscience 10(1): 108–21

- Bi G-Q, Poo M-m 1998 Activity-induced synaptic modifications in hippocampal culture: dependence on spike timing, synaptic strength and cell type. Journal of Neuroscience 18: 10464–72

- Bliss T V P, Lomo T 1973 Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. Journal of Physiology 232: 331–56

- Blum K I, Abbott L F 1996 A model of spatial map formation in the hippocampus of the rat. Neural Computation 8: 85–93

- Bower J M, Beeman D 1998 The Book of GENESIS: Exploring Realistic Neural Models with the GEneral NEural SImulation System, 2nd edn. TELOS, Santa Clara, CA

- Churchland P S, Sejnowski T J 1992 The Computational Brain. MIT Press, Cambridge, MA

- Crick F H C 1994 The Astonishing Hypothesis: The Scientific Search for the Soul. Charles Scribner’s Sons, New York

- Destexhe A, Sejnowski T J 2001 Thalamocortical Assemblies: How Ion Channels, Single Neurons and Large-Scale Networks Organize Sleep Oscillations. Oxford University Press, Oxford, UK

- Douglas R, Mahowald M, Mead C 1995 Neuromorphic analogue VLSI. Annual Review of Neuroscience 18: 255–81

- Georgopoulos A P, Schwartz A B, Kettner R E 1986 Neuronal population coding of movement direction. Science 233: 1416–19

- Hammer M, Menzel R 1995 Learning and memory in the honeybee. Journal of Neuroscience 15: 1617–19

- Harris-Warrick R M, Coniglio L M, Barazangi N, Guckenheimer J, Gueron S 1995 Dopamine modulation of transient potassium current evokes phase shifts in a central pattern generator network. Journal of Neuroscience 15(1): 342–58

- Hebb D O 1949 The Organization of Behavior: A Neuropsychological Theory. John Wiley and Sons, New York

- Hopfield J J 1982 Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences USA 79: 2554–8

- Kelso S R, Ganong A H, Brown T H 1986 Hebbian synapses in hippocampus. Proceedings of the National Academy of Sciences USA 83: 5326–30

- Koch C, Segev I 1998 Methods in Neuronal Modeling: From Synapses to Networks, 2nd edn. MIT Press, Cambridge, MA

- Markram H, Lubke J, Frotscher M, Sakmann B 1997 Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275: 213–15

- Marr D 1982 Vision. Freeman, New York

- Miller K D, Keller J B, Stryker M P 1989 Ocular dominance column development: analysis and simulation. Science 245: 605–15

- Montague P R, Sejnowski T J 1994 The predictive brain: temporal coincidence and temporal order in synaptic learning mechanisms. Learning and Memory 1: 1–33

- Movshon J A, Newsome W T 1996 Visual response properties of striate cortical neurons projecting to area MT in macaque monkeys. Journal of Neuroscience 16: 7733–41

- Pouget A, Sejnowski T J 2001 Lesioning a basis function model of spatial representations in the parietal cortex: Comparison with hemineglect. Psychological Review (in press)

- Rao R P N, Sejnowski T J 2000 Predictive sequence learning in recurrent neocortical circuits. In: Solla S A, Lee T K, Muller K R (eds.) Advances in Neural Information Processing Systems 12. MIT Press, Cambridge, MA, pp. 164–70

- Reynolds J H, Chelazzi L, Desimone R 1999 Competitive mechanisms subserve attention in macaque areas V2 and V4. Journal of Neuroscience 19: 1736–53

- Rieke F, Warland D, de Ruyter van Steveninck R, Bialek W 1996 Spikes: Exploring the Neural Code. MIT Press, Cambridge, MA

- Schultz W, Dayan P, Montague P R 1997 A neural substrate of prediction and reward. Science 275: 1593–9

- Stuart G, Spruston N, Hausser M (eds.) 1999 Dendrites. Oxford University Press, Oxford, UK

- Sutton R S, Barto A G 1998 Reinforcement Learning: An Introduction. MIT Press, Cambridge, MA

- Van Vreeswijk C, Sompolinsky H 1998 Chaotic balanced state in a model of cortical circuits. Neural Computation 10(6): 1321–71

- Zhang K, Ginzburg I, McNaughton B L, Sejnowski T J 1998 Interpreting neuronal population activity by reconstruction: unified framework with application to hippocampal place cells. Journal of Neurophysiology 79: 1017–44