Computerized Test Construction

As in many other areas of psychology, the availability of cheap, plentiful computational power has revolutionized the technology of educational and psychological testing. It is no longer necessary to restrict testing to the use of items with a paper-and-pencil format in a group-based session. We now have the possibility to build multimedia testing environments to which test takers respond by manipulating objects on a screen, working with application programs, or manipulating devices with built-in sensors. Moreover, such tests can be assembled from banks with items stored in computer memory and immediately delivered to examinees who walk in when they are ready to take the test.

Computerized assembly of tests from an item bank is treated as an optimization problem with a solution that has to satisfy a potentially long list of statistical and non-statistical specifications for the test. The general nature of this optimization problem is outlined, and applications to the problems of assembling tests with a linear, sequential, and adaptive format are reviewed.

1. Test Assembly As An Optimization Problem

The formal structure of a test assembly problem is known as a constrained combinatorial optimization problem. It is an optimization problem because the test should be assembled to be best in some sense. The problem is combinatorial because the test is a combination of items from the bank and optimization is over the space of admissible combinations. Finally, the problem is constrained because only those combinations of items that satisfy the list of test specifications are admissible.

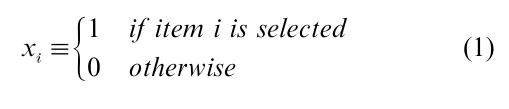

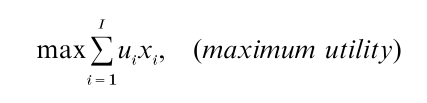

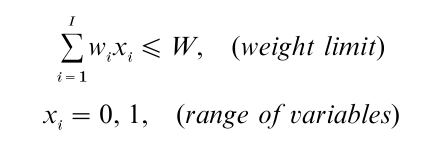

The quintessential combinatorial optimization problem is the knapsack problem (Nemhauser and Wolsey 1988). Suppose a knapsack has to be filled from a set of items indexed by i=1,…, I. Each item has utility ui and weight wi. The optimal combination of items is required to have maximum utility but should not exceed weight limit W. The combination is found defining decision variables

and solving the problem

subject to

for an optimal set of values for variables xi.

Problems with this structure are known as 0–1 linear programming (LP) problems. Several test assembly problems can be formulated as a 0–1 LP problem; others need integer variables or a combination of integer and real variables. In a typical test assembly model, the objective function is used to maximize a statistical attribute of the test whereas the constraints serve to guarantee its content validity.
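The knapsack problem can be illustrated with a minimal Python sketch. It enumerates every 0–1 vector, which is only practical for a handful of items; realistic item banks require a proper integer programming solver. The function name `solve_knapsack` and the numbers in the example are assumptions made for illustration only.

```python
from itertools import product


def solve_knapsack(utilities, weights, capacity):
    """Return the feasible 0-1 vector with maximum total utility.

    Brute-force enumeration over all 2**I vectors; illustration only.
    """
    best_x, best_value = None, float("-inf")
    for x in product((0, 1), repeat=len(utilities)):
        total_weight = sum(w * xi for w, xi in zip(weights, x))
        if total_weight > capacity:
            continue  # violates the weight constraint
        value = sum(u * xi for u, xi in zip(utilities, x))
        if value > best_value:
            best_x, best_value = x, value
    return best_x, best_value


# Hypothetical data: four items with utilities u_i, weights w_i, and limit W = 9.
x, value = solve_knapsack([10, 7, 4, 3], [5, 4, 3, 2], 9)
print(x, value)
```

In a test assembly setting the same structure carries over directly: the utilities become statistical item attributes and the single weight constraint is replaced by the list of test specifications.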

1.1 Objective Functions In Test Assembly Problems

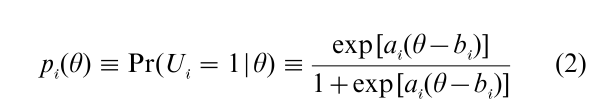

Suppose the items in the bank are calibrated using an item response theory (IRT) model, for example, the two-parameter logistic (2PL) model:

where θ ϵ(-∞, ∞) is the ability of the examinee, and bi ϵ (-∞, ∞) and ai ϵ [0,∞] are parameters for the difficulty and discriminating power of item i, respectively.

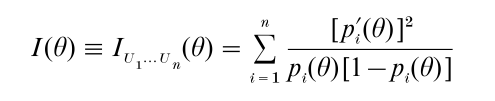

A common objective function in IRT-based test assembly is based on the test information function, which is Fisher’s measure of information on the unknown ability θ in the response vector U1,…, Un, where n is the number of items in the test. For the 2-PL model the test information function is given by

with pi (θ) = (δ/δθ)pi (θ). Test information functions are additive in the contributions by the individual items, which are denoted by Ii(θ).
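The additivity of item information can be checked numerically. The sketch below assumes the usual logistic form of the 2PL model, for which the derivative of the response probability is $a_i p_i(\theta)[1 - p_i(\theta)]$, so that the item information reduces to $a_i^2 p_i(\theta)[1 - p_i(\theta)]$. The function names and the item parameters are hypothetical.

```python
import math


def p_2pl(theta, a, b):
    """Probability of a correct response under the 2PL model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of one item: [p'(theta)]^2 / (p (1 - p))."""
    p = p_2pl(theta, a, b)
    dp = a * p * (1.0 - p)  # derivative of p with respect to theta
    return dp ** 2 / (p * (1.0 - p))


def total_information(theta, items):
    """Test information: the sum of the item information functions."""
    return sum(item_information(theta, a, b) for a, b in items)


# Hypothetical (a, b) pairs; at theta = b an item contributes a**2 / 4.
items = [(2.0, 0.0), (1.0, 0.0)]
print(total_information(0.0, items))
```

Because the information peaks near $\theta = b_i$ and grows with $a_i^2$, highly discriminating items with difficulty close to the ability level of interest dominate the sum.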

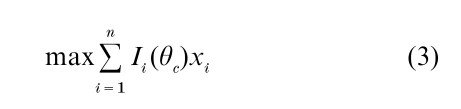

The first step in IRT-based test assembly is to formulate a target for the test information function. The next step is to assemble the test to have its actual information function as closely as possible to the target. Examples of popular targets are a uniform function over an ability interval in diagnostic testing and a peaked function at a cutoff score θc in admission testing. An objective function to realize the former is presented in Eqns. (4) and (5) (Sect. 2). The latter can be realized using objective function

under the condition of an appropriate set of constraints on the test. Other possible objective functions are maximization of classical test reliability and minimization of the length of the test. For a review of these and other examples, see van der Linden (1998).

1.2 Constraints In Test Assembly Problems

Formally, test specifications can be viewed as a series of upper and/or lower bounds on numbers of item attributes in the tests or on functions thereof. An important distinction is between constraints on (a) categorical item attributes, (b) quantitative item attributes, and (c) logical relations between the items in the test. Categorical attributes are attributes such as item content, cognitive level, format, and use of graphics. Examples of quantitative attributes are statistical item parameters, expected response times, word counts, and readability indices. Logical (or Boolean) constraints deal with such issues in test assembly as items that cannot figure in the same test because they have clues to each other’s solution or items that are organized as sets around common stimuli.

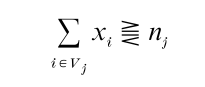

Let Vj be a set of items in the bank with a common value for an attribute. The general shape of a constraint on a categorical item attribute in a test assembly model with 0–1 decision variables is

where nj is a bound on the number of items from Vj. This type of constraint can also be formulated on intersections or unions of sets of items.

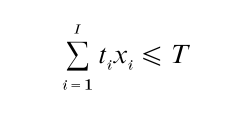

Constraints on quantitative attributes are typically on a function of their values for a set of items. For example, if a typical test taker has response time ti on item i and the total testing time available is T (both in seconds), a useful constraint on the test is:

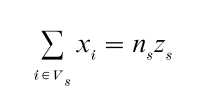

As an example of a logical constraint, suppose that Ws represents a set of items in the bank with a common stimulus s, and that ns items have to be selected if and only if stimulus s has. This requirement leads to the following constraint

with zs being an auxiliary 0–1 decision variable for the selection of stimulus s.

In a full-fledged test assembly problem, constraints may also be needed to deal, for instance, with stimulus attributes or with relations between different test forms if a set of forms is to be assembled simultaneously. For a review of these and other types of constraints, see van der Linden (1998, 2000a).
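To make the interplay of objective and constraints concrete, the following sketch assembles a fixed-length test that maximizes information at a cutoff score subject to one categorical constraint (an upper bound on the number of items per content area) and one quantitative constraint (total response time). It is a toy enumeration over a hypothetical five-item bank, not a production method; the field names `a`, `b`, `content`, and `time` and the function `assemble` are assumptions.

```python
import math
from itertools import combinations


def information(theta, a, b):
    """2PL item information, a^2 * p * (1 - p)."""
    p = 1.0 / (1.0 + math.exp(-a * (theta - b)))
    return a * a * p * (1.0 - p)


def assemble(bank, theta_c, length, max_per_content, max_time):
    """Enumerate all item sets of the given length and keep the best feasible one."""
    best, best_info = None, float("-inf")
    for combo in combinations(range(len(bank)), length):
        items = [bank[i] for i in combo]
        # Quantitative constraint: total expected response time.
        if sum(it["time"] for it in items) > max_time:
            continue
        # Categorical constraints: upper bound on items per content area.
        counts = {}
        for it in items:
            counts[it["content"]] = counts.get(it["content"], 0) + 1
        if any(counts.get(c, 0) > n for c, n in max_per_content.items()):
            continue
        info = sum(information(theta_c, it["a"], it["b"]) for it in items)
        if info > best_info:
            best, best_info = combo, info
    return best, best_info


# Hypothetical item bank.
bank = [
    {"a": 1.5, "b": 0.0, "content": "algebra", "time": 60},
    {"a": 1.4, "b": 0.0, "content": "algebra", "time": 60},
    {"a": 1.0, "b": 0.0, "content": "geometry", "time": 60},
    {"a": 0.8, "b": 0.0, "content": "geometry", "time": 30},
    {"a": 1.2, "b": 0.0, "content": "algebra", "time": 200},
]
best, best_info = assemble(bank, theta_c=0.0, length=2,
                           max_per_content={"algebra": 1}, max_time=150)
print(best, best_info)
```

Without the content constraint the two most informative algebra items would be chosen; with it, the second slot goes to the best geometry item, which shows how constraints trade statistical quality for content validity.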

2. Linear-Test Assembly

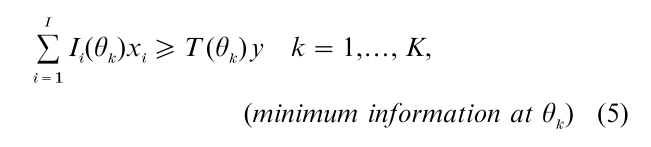

Linear tests have a fixed number of items presented in a fixed order. For measurement over a larger ability interval, it is customary to choose a discrete set of target values for the information function, T(θk), k=1,…, K. In practice, because information functions are well-behaved continuous functions, target values at three to five equally-spaced θ values suffice. The need to match more than one target value simultaneously creates a multiobjective decision problem.

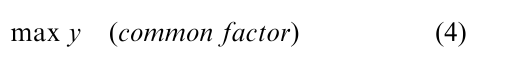

An effective way to deal with multiple target values is to apply a maximin criterion. This criterion leads to the following core of a test assembly model

subject to

where y is a common factor in the right-hand side bounds in (5) that is maximized and coefficients T (θk) control the shape of the information function (van der Linden and Boekkooi-Timminga 1989). Constraints to deal with the remaining content specifications should be added to this model. For a large-scale testing program in education, it is not unusual to have hundreds of those constraints.
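For a fixed selection of items, the largest value of $y$ that satisfies all bounds is the minimum over $k$ of the ratio of the test information at $\theta_k$ to the target $T(\theta_k)$. The sketch below uses this fact to solve a tiny maximin problem by enumeration. The information values, targets, and the function name `maximin_assemble` are hypothetical, and the content constraints of a real model are omitted.

```python
from itertools import combinations


def maximin_assemble(info, targets, length):
    """Maximize y such that sum_i info[i][k] * x_i >= targets[k] * y for all k.

    info[i][k] holds I_i(theta_k); targets[k] holds T(theta_k).
    """
    best, best_y = None, float("-inf")
    for combo in combinations(range(len(info)), length):
        # Largest feasible y for this item set: the worst-matched target.
        y = min(
            sum(info[i][k] for i in combo) / targets[k]
            for k in range(len(targets))
        )
        if y > best_y:
            best, best_y = combo, y
    return best, best_y


# Hypothetical information values at two ability points, flat target shape.
info = [[0.6, 0.2], [0.2, 0.6], [0.4, 0.4], [0.5, 0.1]]
targets = [1.0, 1.0]
best, best_y = maximin_assemble(info, targets, length=2)
print(best, best_y)
```

The two items that are each strong at a different ability point complement one another, so together they yield a flatter and higher information function than any pair that includes the individually well-balanced third item.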

Methods to solve test assembly models can be divided into algorithms that have been proven to lead to optimality and intuitively plausible heuristics, which typically select one item at a time. Well-known heuristics are those that pick the items with the largest impact on the test information function (Luecht 1998) or with the smallest weighted average deviation from all bounds in the model (Swanson and Stocking 1993). Optimal solutions can be found using a branch-and-bound algorithm (Nemhauser and Wolsey 1988), or, if the structure of the model reduces to a network-flow problem, a simplified version of the simplex algorithm (Armstrong et al. 1995). Several algorithms and heuristics are implemented in the test assembly package ConTEST (Timminga et al. 1996).
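The difference between a one-item-at-a-time heuristic and an optimal solution can be seen in a generic greedy sketch. It is not the method of Luecht (1998) or of Swanson and Stocking (1993); it simply adds, at each step, the item that most improves the current worst ratio of test information to target. All names and numbers are hypothetical.

```python
def greedy_maximin(info, targets, length):
    """Select items one at a time, each time maximizing the worst info/target ratio."""
    selected = []
    totals = [0.0] * len(targets)
    for _ in range(length):
        best_item, best_y = None, float("-inf")
        for i in range(len(info)):
            if i in selected:
                continue
            y = min(
                (totals[k] + info[i][k]) / targets[k]
                for k in range(len(targets))
            )
            if y > best_y:  # ties keep the item with the lowest index
                best_item, best_y = i, y
        selected.append(best_item)
        totals = [totals[k] + info[best_item][k] for k in range(len(targets))]
    final_y = min(t / target for t, target in zip(totals, targets))
    return sorted(selected), final_y


# Hypothetical information values at two ability points, flat target shape.
info = [[0.6, 0.2], [0.2, 0.6], [0.4, 0.4], [0.5, 0.1]]
selected, y = greedy_maximin(info, [1.0, 1.0], length=2)
print(selected, y)
```

On these data the heuristic first takes the individually balanced item and ends with a criterion value of about 0.6, whereas exhaustive search over all pairs reaches 0.8 with the first two items. Heuristics of this kind are fast but carry no guarantee of optimality.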

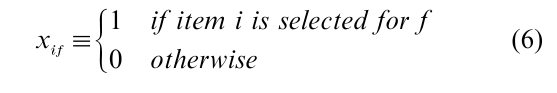

Sometimes it is necessary to build a set of linear test forms, for instance, parallel forms to support different testing sessions or forms of different difficulties for use in an evaluation study with a pretest-posttest design. Sequential application of a model of linear-test assembly is bound to show a decreasing quality of the solutions. A simultaneous approach balancing the quality of the individual test forms can be obtained by replacing the decision variables in Eqn. (1) by

using these variables to model the test specifications for all forms f =1,…, F, and solving the model for all variables simultaneously. If no overlap between forms is allowed, logical constraints must be added to the model to prevent the variables from taking the value of one more than once. Efficient combinations of sequential and simultaneous approaches are presented in van der Linden and Adema (1998).

3. Sequential Test Assembly

Sequential test assembly is used in testing for selection or mastery with a cutoff score on the test that represents the level beyond which the test taker is accepted or considered to master the domain of knowledge tested, respectively. An obvious linear approach is to assemble a test using the objective function in Eqn. (3), but a more efficient procedure is to assemble the test sequentially, sampling one item from the bank at a time and stopping when the test taker is classified with enough precision.

If the items are dichotomous, the number-correct score of a test taker follows a binomial distribution

![]()

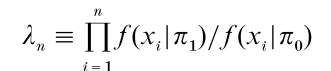

with π being the success parameter and n the (random) number of items sampled. In a sequential probability ratio test (SPRT) for the decision to reject test takers with π<π0 and accept those with π ≥ π1, with (π0, π1) being a small interval around cutoff score πc, the decision rule is based on the likelihood ratio

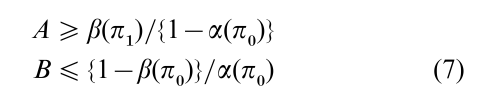

If λn <A or λn ≥ B the decision is to reject or accept, respectively, whereas sampling of items is continued otherwise. The constants A and B are known to satisfy

with α(π0) and β(π1) being the probabilities of a false positive and false negative decision for test takers with π=π0 and π=π1, respectively.
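The sequential decision rule can be sketched in a few lines of Python. The sketch assumes the binomial likelihood ratio of $\pi_1$ against $\pi_0$ and uses Wald's customary approximation of the thresholds, $A = \beta/(1 - \alpha)$ and $B = (1 - \beta)/\alpha$, that is, the bounds taken as equalities. It works with the logarithm of the likelihood ratio so that each response adds a constant increment. The function name and all parameter values are hypothetical.

```python
import math


def sprt_decision(responses, pi0, pi1, alpha, beta):
    """Apply a binomial SPRT to a sequence of 0/1 item scores.

    Returns ("accept" | "reject" | "continue", number of items used).
    """
    log_a = math.log(beta / (1.0 - alpha))   # lower threshold (reject)
    log_b = math.log((1.0 - beta) / alpha)   # upper threshold (accept)
    log_lr = 0.0
    for n, u in enumerate(responses, start=1):
        if u == 1:
            log_lr += math.log(pi1 / pi0)
        else:
            log_lr += math.log((1.0 - pi1) / (1.0 - pi0))
        if log_lr >= log_b:
            return "accept", n
        if log_lr < log_a:
            return "reject", n
    return "continue", len(responses)


# Hypothetical indifference region (0.6, 0.8) with 5 percent error rates.
print(sprt_decision([1] * 20, 0.6, 0.8, 0.05, 0.05))
print(sprt_decision([0] * 20, 0.6, 0.8, 0.05, 0.05))
```

With these values an incorrect response moves the log-likelihood ratio more than twice as far as a correct one, so a consistently failing test taker is rejected after far fewer items than a consistently succeeding one needs to be accepted.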

Alternative sequential approaches to test assembly follow an IRT-based SPRT (Reckase 1983) or a Bayesian framework (Kingsbury and Weiss 1983).

4. Adaptive Test Assembly

If the items in the pool are calibrated using a model as in (2), adaptive test assembly becomes possible. In adaptive test assembly, the items are selected to be optimal at the ability estimate of the test taker, updated by the computer after each new response. Adaptive testing leads to much shorter tests; savings are typically over 50 percent relative to a linear test with the same precision.

To show the principle of adaptive testing, let $i = 1, \ldots, I$ denote the items in the pool and $k = 1, \ldots, K$ the items in the test. It follows that $i_k$ is the index of the item in the pool administered as the $k$th item in the test. The set $S_{k-1} = \{i_1, \ldots, i_{k-1}\}$ contains the first $k-1$ items in the test. These items involve response variables $U_{k-1} = (U_{i_1}, \ldots, U_{i_{k-1}})$. The update of the ability estimate after $k-1$ responses is denoted as $\hat{\theta}_{u_{k-1}}$. Item $k$ in the test is selected to be optimal at $\hat{\theta}_{u_{k-1}}$ among the items in the set $R_k = \{1, \ldots, I\} \setminus S_{k-1}$.

A popular criterion of optimality in adaptive testing is maximization of information at the current ability estimate, that is, selection of item $i_k$ according to objective function

$$i_k \equiv \arg\max_{j} \left\{ I_j(\hat{\theta}_{u_{k-1}}) : j \in R_k \right\}.$$

Alternative objective functions are based on Kullback-Leibler information or on Bayesian criteria that use the posterior distribution of θ after k-1 items. These functions are reviewed in van der Linden and Pashley (2000).
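The maximum-information criterion amounts to a single search over the items not yet administered. The sketch below assumes a pool of 2PL items stored as hypothetical `(a, b)` pairs and takes the current ability estimate as given; how that estimate is updated after each response is outside its scope.

```python
import math


def information(theta, a, b):
    """2PL item information, a^2 * p * (1 - p)."""
    p = 1.0 / (1.0 + math.exp(-a * (theta - b)))
    return a * a * p * (1.0 - p)


def select_next_item(pool, administered, theta_hat):
    """Return the index of the unused item with maximum information at theta_hat."""
    candidates = [i for i in range(len(pool)) if i not in administered]
    return max(candidates, key=lambda i: information(theta_hat, *pool[i]))


# Hypothetical pool of (a, b) pairs and a current ability estimate of 0.2.
pool = [(1.0, -1.0), (1.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
first = select_next_item(pool, set(), 0.2)
second = select_next_item(pool, {first}, 0.2)
print(first, second)
```

Note that the most discriminating item in this pool is never chosen here: its difficulty is too far from the ability estimate for its higher discrimination to pay off.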

Several procedures have been suggested to realize content constraints on adaptive tests. The four major approaches are: (a) partitioning the bank according to the main item attributes and spiraling item selection among the classes in the partition to realize a desired content distribution; (b) building deviations from content constraints into the objective function (Swanson and Stocking 1993); (c) testing from a pool with small sets of items built according to content specifications; and (d) using a shadow test approach in which, prior to the selection of each item, a full linear test is assembled that contains all previous items, meets all content constraints, and is optimal at the ability estimate, and from which the most informative item is selected for administration (van der Linden 2000b).

Adaptive testing is currently one of the dominant modes of computerized testing. Several aspects of computerized adaptive testing not addressed in this research paper are reviewed in Wainer (1990).

Bibliography:

- Armstrong R D, Jones D H, Wang Z 1995 Network optimization in constrained standardized test construction. In: Lawrence K D (ed.) Applications of Management Science: Network Optimization Applications. JAI Press, Greenwich, CT, Vol. 8, pp. 189–212

- Kingsbury G G, Weiss D J 1983 A comparison of IRT-based adaptive mastery testing and a sequential mastery testing procedure. In: Weiss D J (ed.) New Horizons in Testing: Latent Trait Theory and Computerized Adaptive Testing. Academic Press, New York, pp. 257–83

- Luecht R M 1998 Computer-assisted test assembly using optimization heuristics. Applied Psychological Measurement 22: 224–36

- Nemhauser G, Wolsey L 1988 Integer and Combinatorial Optimization. Wiley, New York

- Reckase M D 1983 A procedure for decision making using tailored testing. In: Weiss D J (ed.) New Horizons in Testing: Latent Trait Theory and Computerized Adaptive Testing. Academic Press, New York, pp. 237–55

- Swanson L, Stocking M L 1993 A model and heuristic for solving very large item selection problems. Applied Psychological Measurement 17: 151–66

- Timminga E, van der Linden W J, Schweizer D A 1996 ConTEST 2.0: A Decision Support System for Item Banking and Optimal Test Assembly [Computer Program and Manual]. iec ProGAMMA, Groningen, The Netherlands

- van der Linden W J 1998 Optimal assembly of educational and psychological tests, with a bibliography. Applied Psychological Measurement 22: 195–211

- van der Linden W J 2000a Optimal assembling of tests with item sets. Applied Psychological Measurement 24: 225–40

- van der Linden W J 2000b Constrained adaptive testing with shadow tests. In: van der Linden W J, Glas C A W (eds.) Computerized Adaptive Testing: Theory and Practice. Kluwer Academic Publishers, Norwell, MA, pp. 27–52

- van der Linden W J, Adema J J 1998 Simultaneous assembly of multiple test forms. Journal of Educational Measurement 35: 185–98

- van der Linden W J, Boekkooi-Timminga E 1989 A maximin model for test design with practical constraints. Psychometrika 54: 237–47

- van der Linden W J, Pashley P J 2000 Item selection and ability estimation in adaptive testing. In: van der Linden W J, Glas C A W (eds.) Computerized Adaptive Testing: Theory and Practice. Kluwer Academic Publishers, Norwell, MA, pp. 1–25

- Wainer H (ed.) 1990 Computerized Adaptive Testing: A Primer. Erlbaum, Hillsdale, NJ