Psychology Of Conditioning

Research on conditioning and habit broadly is concerned with how organisms learn the what, where, and when of their niche: what prey and threats they have to deal with, where they may be found, and when they are most likely to be encountered. These are complex problems but their solutions can be surprisingly simple, so long as they are clever.

Conditioning and habit involve learning about contingencies (if-then relationships) between biologically important stimuli and behavior. Conditioning involves learning signals for stimuli, that is, stimulus–stimulus contingencies, such as between the odor of a lemon and acidity; habits involve learning about the consequences of behavior, that is, response–stimulus contingencies, such as learning how to crack open an oyster to extract its flesh. The mechanisms of learning serve the organism by selecting the best signals for stimuli and the effective actions leading to reward. In terms of adaptation, conditioning helps the organism to prepare for forthcoming stimuli and habit learning allows the organism to learn from experience. Despite its apparent plasticity, learning is highly biologically constrained: every species is differently affected by particular stimuli and consequences as defined by its ecological niche, while the processes of learning themselves remain identical across species.

Learning is studied by arranging environmental operations, such as response–stimulus contingencies, and analyzing the behavioral process they generate. Behavior is sensitive to many interacting variables. For this reason, much research in learning has been carried out with animal subjects, for whom stricter control of operations is possible, but most findings have been replicated with human subjects. People with pragmatic interests in managing human behavior in educational, clinical, industrial, and correctional facilities have also benefited greatly from our understanding of learning.

1. History

Psychological investigation has been responsible for unraveling the intricacies of learning, but the central questions were first recognized by philosophers and biologists. The philosopher Descartes proposed two sources of behavior: a reflexive–mechanical body that connected the organism to the physical world, and a rational–spiritual mind that explained language, memory, and intellect. Intuitively, the Cartesian dualism captures the striking contrast between the reflexes we all share and the many idiosyncratic differences that make each of us unique. The first half of the Cartesian dichotomy was supported in the eighteenth century when Whytt and others isolated the spinal reflexes, but the assumption of native mental knowledge was harder to endorse. Empiricist philosophers such as Locke supplied a viable alternative position: that complex knowledge could be explained by the accumulated experience of associated ideas. By the mid-nineteenth century Alexander Bain offered an associationist theory of action. Prompted by the speculation of the physiologist Johannes Müller, Bain proposed that, in addition to reflexes, organisms randomly emitted spontaneous nonpurposive movements. When one of these movements produced a pleasurable outcome, it would be repeated because the ‘muscle sense’ produced by the action would be associated with pleasure. This early conception of habit acquisition gained acceptance amidst the rising tide of Darwinism by providing a mechanistic alternative to the creationist spirit implicit in Descartes’s mentalism.

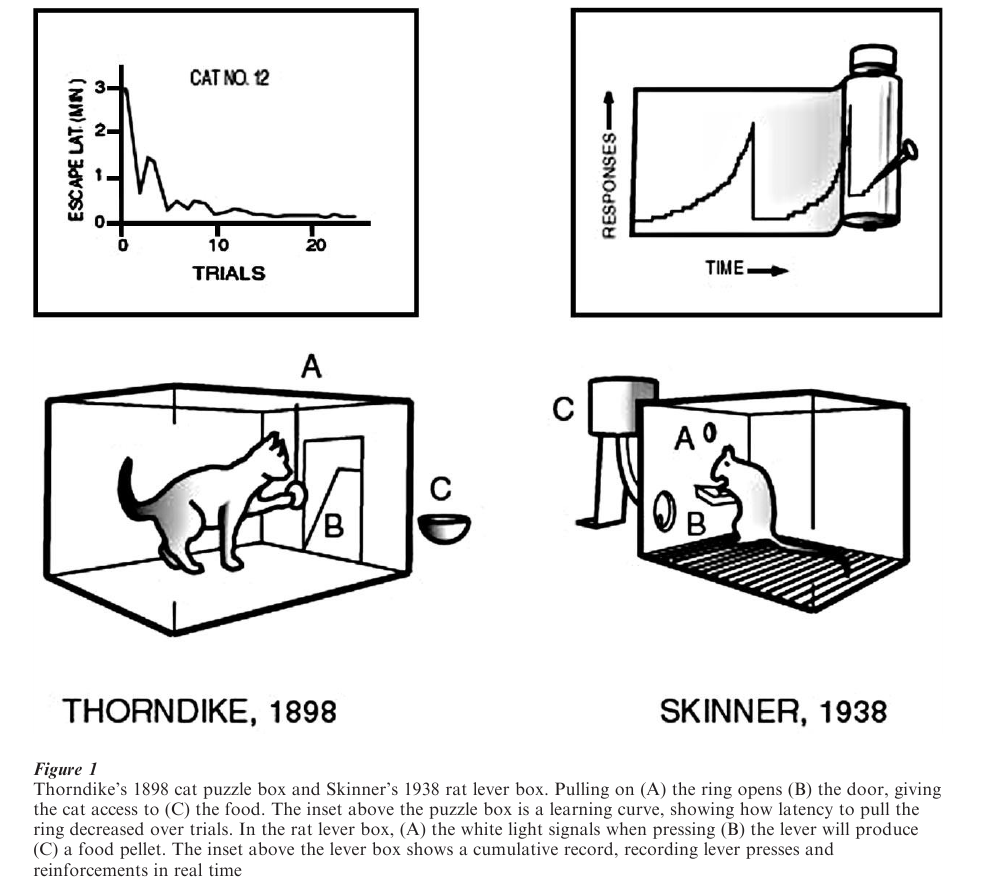

Approximately 40 years later, Edward L. Thorndike published the first experimental demonstrations showing how behavior could be affected by its consequences. One of his cat ‘puzzle boxes’ is shown in Fig. 1. Latency to escape from the box was long initially but gradually shortened. Thorndike called the behavior he observed in the puzzle-box, ‘trial-and-error learning’ and formulated the Law of Effect, stating that pleasurable consequences ‘stamped in’ responses and annoying consequences ‘stamped out’ responses.

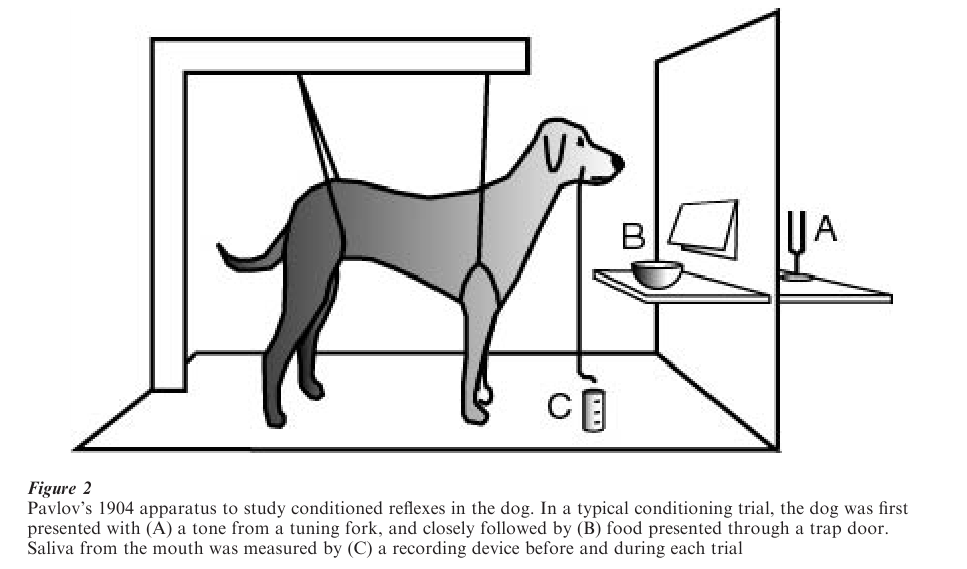

Coincident with Thorndike’s research, the Russian physiologist Ivan P. Pavlov accidentally discovered the conditioned salivation reflex in the dog. In the course of studying digestive reflexes, Pavlov noted that his dogs began to salivate while their food was being prepared, before the food made contact with the dog’s mouth. He realized that more than a simple reflex was involved: the dog had learned a signal for food. This insight initiated an extensive research program to study conditioned reflexes. Fig. 2 shows the setup he used to study stimulus–stimulus contingencies. Pavlov demonstrated conditioning with many other reflexes, leading him to claim that conditioned reflexes could explain all complex behavior including language.

Initially Pavlov’s work eclipsed Thorndike’s because of arguments that the Law of Effect might be explained by conditioned reflexes. But in the 1930s, B. F. Skinner endorsed the present view that Thorndike and Pavlov had discovered different processes. In time, conditioned reflexes became identified with Pavlovian conditioning and habit with operant conditioning (emphasizing that responses operate on the environment). Skinner made a number of important contributions to the study of operant behavior, including an automated experimental chamber to study reinforcement with rats and a cumulative recorder to record behavior in time (Fig. 1). His simplest box contained a lever, a food dispenser, and a light to signal the availability of food. Unlike puzzle boxes and mazes that studied learning in a trial-by-trial format, Skinner’s automated apparatus permitted analysis of ‘free-operant behavior,’ the real-time interaction between behavior and the environment. He used his apparatus to conduct numerous critical experiments on reinforcement, including studies of discrimination learning and reinforcement schedules.

The genesis of experimental psychology in the early twentieth century was accompanied by disputes over the proper methods of investigation and the role of inference from behavior to mechanism. John B. Watson in 1913 proposed a ‘Behaviorist’ program, arguing that psychology should reject the introspective methods popular in his day and instead be founded only on publicly observable behavior. Watson’s program is now called methodological behaviorism, and to the extent that psychological concepts are founded on observable behavior, his ideas have been widely accepted. Thorndike, Pavlov, and Watson, among many others, were committed to physiological reductionism, explaining behavior by inferring its underlying physiology. Skinner pushed for a truly independent science of behavior based entirely on the study of behavior–environment interactions, a program he called radical behaviorism. Behaviorism in turn was countered by cognitivism and neo-behaviorism. Both argue that behavior can be used to make reductionist inferences about nonphysiological mechanisms that mediate between the environment and observable behavior, such as memory and associative processes. The latter half of the twentieth century witnessed the rise of mathematical, dynamic models of behavior, called quantitative behaviorism, that describe mediators of behavior in terms of mathematical functions. This alternative to cognitivism seeks to model behavior–environment interactions mathematically, but the models are seen only as analytical tools to determine the minimum set of mechanisms required to explain behavior, and not to make mediational inferences. Despite philosophical differences, all of these views continue to have proponents and make contributions to the understanding of behavior.

Researchers and theorists have since broadened the relevance of Pavlovian and operant conditioning to economics, language, problem solving, and other varieties of complex behavior. At the same time, the science of learning has come increasingly to recognize that behavior is constrained by biological endowment. Despite repeated attempts to reduce Pavlovian and operant conditioning to a single process, they differ in essential ways. Although strong parallels occur in the effects of contingencies and contiguity, and in stimulus functions, response functions differ markedly.

2. Contingency And Contiguity

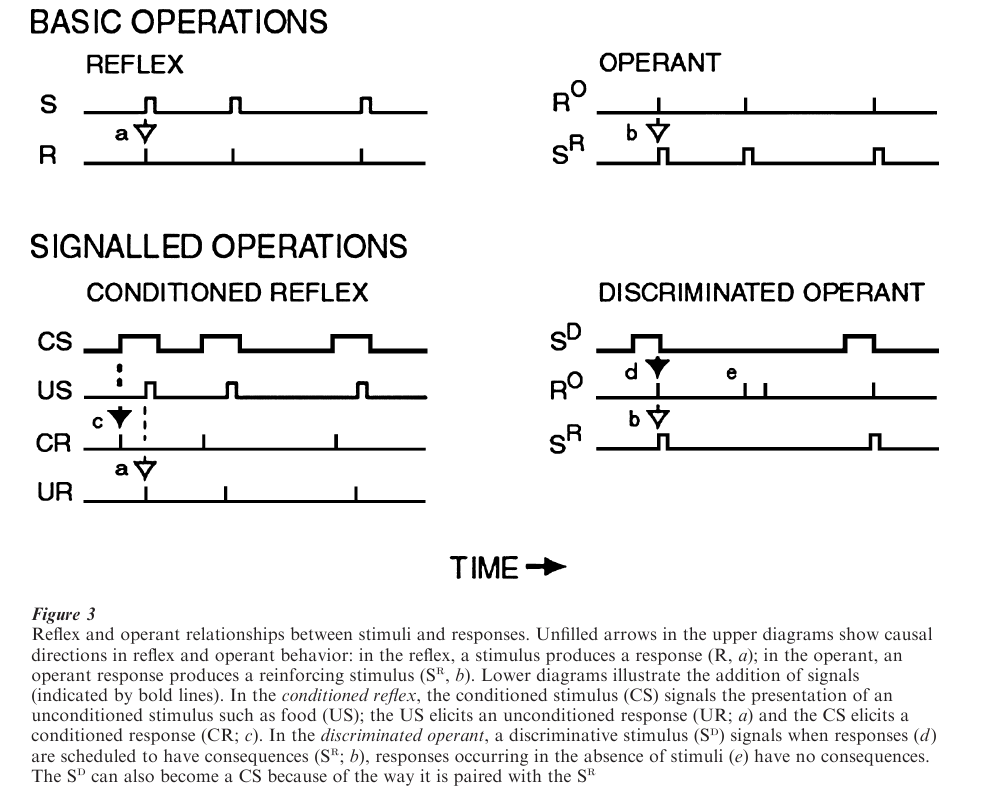

In a reflex, a stimulus elicits a response; in an operant, an emitted behavioral response produces a stimulus and the behavior becomes more or less likely to occur in the future, depending on whether the consequence is a reinforcer or a punisher. Fig. 3 illustrates these basic relationships.

Organisms are very sensitive to stimulus situations, or signals, present when stimuli occur. Signals impart enormous complexity in behavior by giving rise to the conditioned reflex and discriminated operant (Fig. 3). In Pavlov’s conditioned reflex experiments with dogs, food was paired with a tone, and the dog began to salivate upon hearing the tone. In Skinner’s discriminated operant experiments with rats, lever presses could produce food pellets when a stimulus light was on but not when the light was off, and the rats came to press the lever only when the light was on. For a conditioned reflex, the conditioned stimulus (CS) elicits a conditioned response (CR); for a discriminated operant, the discriminative stimulus (SD) occasions an emitted response.

2.1 Contingency

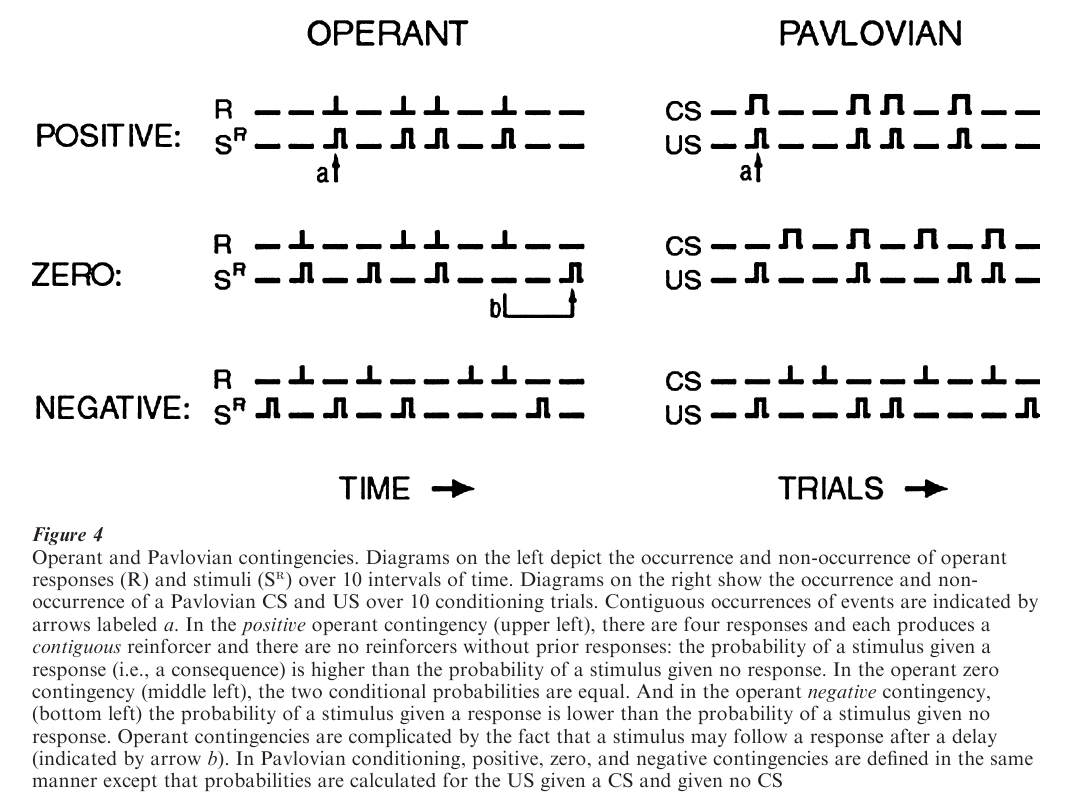

Effects of operant and Pavlovian operations depend on contingencies, the conditional probabilities between stimuli and responses shown in Fig. 4. Operant behavior and Pavlovian conditioning require a positive contingency in which a consequence or a US is most likely after a response or a CS, respectively. A zero contingency, in which the occurrence of a stimulus is independent of other events, will not support operant behavior or Pavlovian conditioning. The inadequacy of a zero contingency shows that contiguity, the concurrence between an operant response and a consequence or between a CS and US, is not sufficient for learning; there must also be a positive contingency. While zero contingencies do not generate reinforcement effects, prior exposure to noncontingent, unpredictable stimuli can significantly retard subsequent learning of reinforcement contingencies.
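The distinction between positive, zero, and negative contingencies can be made concrete with a small sketch. It assumes that a contingency is summarized as the difference between two conditional probabilities, P(US | CS) and P(US | no CS); this difference score and the function names are illustrative choices, not something the text specifies.

```python
def contingency(p_us_given_cs, p_us_given_no_cs):
    """Return P(US | CS) - P(US | no CS), an assumed summary of contingency."""
    for p in (p_us_given_cs, p_us_given_no_cs):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_us_given_cs - p_us_given_no_cs


def classify_contingency(delta, tolerance=1e-9):
    """Label a contingency score as positive, zero, or negative."""
    if delta > tolerance:
        return "positive"
    if delta < -tolerance:
        return "negative"
    return "zero"


# The US is more likely after the CS than without it: positive contingency.
signal = classify_contingency(contingency(0.8, 0.1))
# The US is equally likely with or without the CS: zero contingency,
# even though CS and US still co-occur on 40% of CS presentations.
no_signal = classify_contingency(contingency(0.4, 0.4))
```

The second case is the one the paragraph singles out: CS and US are sometimes contiguous, yet the CS carries no information about the US.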

2.2 Contiguity

Operant consequences are most effective if they are contiguous with responses and less effective if they are delayed—contingency is necessary but not sufficient. In animals, delays of just a few seconds can severely weaken a reinforcer; and reinforcers that are delivered independently of responses, as in a zero contingency, diminish the effect of contiguous reinforcers. Negative contingencies, also called differential reinforcement of other behavior, are an effective way to reduce problem behavior in applied settings.

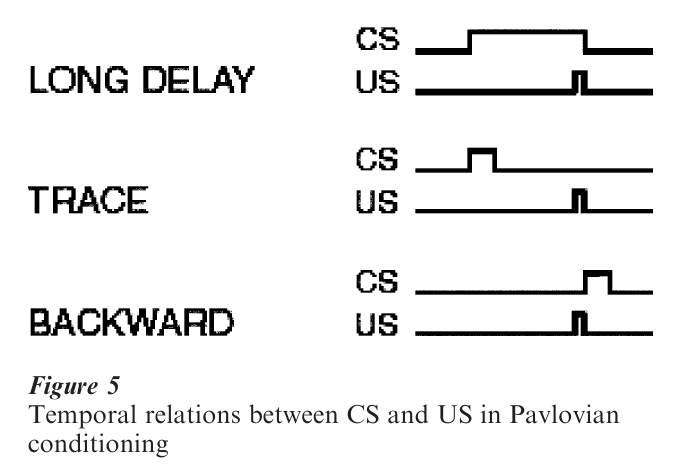

Pavlovian conditioning is also sensitive to the temporal relation between the US and CS; contingency alone is not sufficient. Of the five possible temporal relations shown in Fig. 5, the most effective is short-delay conditioning, in which the onset of the CS precedes the onset of the US by no more than a second. Simultaneous conditioning is least likely to produce conditioning; backward conditioning tends to make the CS an inhibitory stimulus because it is correlated with the absence of the US; and long-delay and trace conditioning produce intermediate levels of conditioning. Taken together, these findings indicate that Pavlovian conditioning is strongest when the CS is a good predictor of the US in time. The one exception is taste aversion learning, an example of ecological preparedness: illness can produce aversion to a food even if it follows consumption by several hours.

2.3 Extinction

Conditioned reflexes and operant responses can be weakened by extinction. Operant behavior maintained by reinforcement can be reduced in frequency by terminating the reinforcement contingency or by delivering reinforcers independently of responses. Discontinuing a punishment contingency almost always results in the recovery of the punished behavior. A CS can be extinguished by presenting it without the US or by removing the correlation with the US. Extinction procedures do not erase the effects of contingencies but instead produce additional learning. In the case of operant behavior, organisms stop responding because they learn that the operant contingency is broken: extinguished responses reappear without additional training when a reinforcement contingency is reinstated. In the case of Pavlovian extinction, the organism learns that the CS now signals the absence of the US.

2.4 Operant–Pavlovian Interactions

Conditioned reinforcers and conditioned punishers can be generated by Pavlovian procedures. For example, if an odor is paired with food, the odor will elicit salivation as a CS but it can also serve as a conditioned reinforcer for an operant response. Conditioned punishers are created in the same way. Conditioned stimuli greatly enhance delayed consequences. For example, a delayed reinforcer will still be very effective as long as a stimulus bridges the gap between the response and the reinforcer (as in long-delay conditioning in Fig. 5). However, the effects are transitory unless the association between the conditioned stimulus and the reinforcer is maintained.

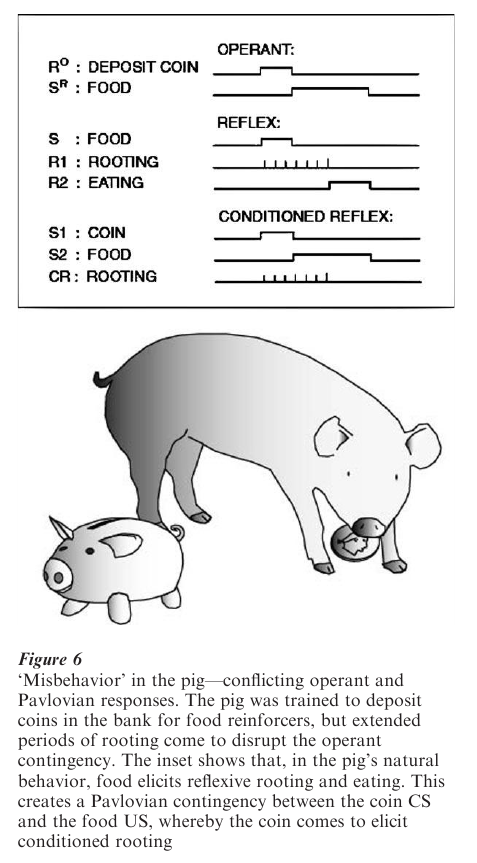

An operant SD can become a Pavlovian CS for operant consequences such as food. The SD then serves two functions that may produce competition between elicited and emitted responses. An example from the animal-training literature is shown in Fig. 6. A pig was reinforced with food for depositing coins in a bank. Shortly after learning the operant, the pig began ‘rooting’ the coins—pushing them along the ground, the pig’s species-typical food handling behavior. As a result of the operant contingency the coin enters into a positive Pavlovian contingency with food and elicits rooting.

Punishment can reduce the frequency of operant behavior for a similar reason: a painful, punishing consequence reduces behavior because it elicits species-typical defensive behavior such as escape and avoidance. The result is that a ‘punisher’ reduces the target behavior, but not just by being a consequence of that behavior; it would still reduce the operant behavior even if arranged in a zero contingency. A conditioned aversive stimulus, such as a tone paired with shock, will similarly interfere with operant behavior. As a rule, reinforcers are most effective when the responses they elicit are identical to or compatible with a reinforced response, and punishers are most effective when the responses they elicit are incompatible with the punished response. For example, it is easy to reinforce pecking in the pigeon with food because food elicits pecking.

3. Response Functions

Consequences in operant behavior and US’s in Pavlovian conditioning can be appetitive, like food, or aversive, like a very loud noise. The same stimulus can generate different kinds of adaptive behavior depending on the contingency, the kind of organism, and the organism’s previous experience.

3.1 Operant Responses

In operant behavior, responses can produce or remove stimuli, and the stimuli can be appetitive or aversive. In positive reinforcement and negative reinforcement, response rate increases by addition of appetitive stimuli or removal of aversive stimuli, respectively; positive punishment and negative punishment decrease response rate by addition of aversive stimuli or removal of appetitive stimuli, respectively. Negative reinforcement includes escape, the removal of an already present aversive stimulus, and avoidance, the prevention or postponement of an aversive stimulus.
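The four operations described above form a two-by-two table (stimulus type crossed with whether the response adds or removes the stimulus). A minimal lookup sketch follows; the function name and the string labels used as keys are hypothetical conveniences.

```python
def classify_operation(stimulus, change):
    """Map (stimulus type, change produced by the response) to an operation.

    stimulus: "appetitive" or "aversive"
    change:   "added" or "removed"
    Returns (name of the operation, effect on response rate).
    """
    table = {
        ("appetitive", "added"): ("positive reinforcement", "increases"),
        ("aversive", "removed"): ("negative reinforcement", "increases"),
        ("aversive", "added"): ("positive punishment", "decreases"),
        ("appetitive", "removed"): ("negative punishment", "decreases"),
    }
    try:
        return table[(stimulus, change)]
    except KeyError:
        raise ValueError(f"unknown combination: {stimulus!r}, {change!r}")


# Escape: the response removes an aversive stimulus that is already present.
operation, effect = classify_operation("aversive", "removed")
```

In this table "positive" and "negative" refer only to adding or removing a stimulus, not to whether the outcome is pleasant.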

Operant responses range from a rat’s lever press maintained by food, to an infant’s crying maintained by maternal attention, to small talk maintained by social companionship. The form of an operant response depends on (a) environmental constraints, such as the height of a reinforcement lever and the force required to operate it, (b) the behavior emitted by the organism, and (c) behavior elicited by the consequence (illustrated by Fig. 6). Once a response is reinforced, its form tends to be repeated in a stereotyped manner. Reinforcement will ‘capture’ whatever behavior the organism emits that is contiguous with the reinforcer, even if some features are unessential to the reinforcement contingency.

Reinforcement narrows the range of variability in emitted behavior by selecting its successful forms (not necessarily the most efficient behavior), making them more frequent and thereby displacing less effective forms. Predatory species, for example, show adjustments in hunting behavior as a result of its effectiveness. The differential reinforcement of some responses to the exclusion of others is called shaping. Shaping is analogous to natural selection in that different responses function like the phenotypic variations required for evolutionary change. Without variation, changes in reinforcement contingencies could not select new forms of behavior. Despite the tendency for reinforcement to produce stereotyped behavior, it never reduces variations in behavior entirely. Variability returns to emitted behavior in extinction, a potentially adaptive reaction to dwindling resources. Creativity can also serve as the basis for reinforcement (e.g., if reinforcers are contingent on the emission of novel behavior).

Restriction operations, such as food deprivation, are necessary for reinforcement: the response elicited by the reinforcer, such as feeding, must have some probability of occurring for the reinforcer to be effective. In rats, restricting an activity such as running in a wheel will make the activity a reinforcer for drinking, even though the rat is not water restricted. Findings like this suggest that reinforcers may best be understood in terms of the activities they produce and that the reinforcement process involves the organism’s behaving in order to maintain set levels of activities.

Once an organism learns a response-reinforcer contingency, the likelihood of the response will reflect the value of the reinforcer. For example, if a rat drinks sugar water and becomes ill, it will no longer emit behavior previously reinforced with sugar water.

A great deal of behavior is maintained by negative reinforcement, including avoidance in which responses prevent the occurrence of aversive stimuli, and escape in which responses terminate an existing aversive stimulus. Much research has been directed to avoidance because it is maintained in the absence of contiguity—responses prevent reinforcement. In some cases, avoidance is maintained by deletion of an immediately present conditioned aversive (warning) stimulus, an avoidance condition that preserves contiguous reinforcement. But organisms will work to prevent or delay aversive events without the benefit of warning stimuli, showing that avoidance does not require a Pavlovian aversive contingency. Avoidance is closely linked to punishment because organisms frequently learn to avoid places associated with punishment, a pragmatic concern for advocates of punishment.

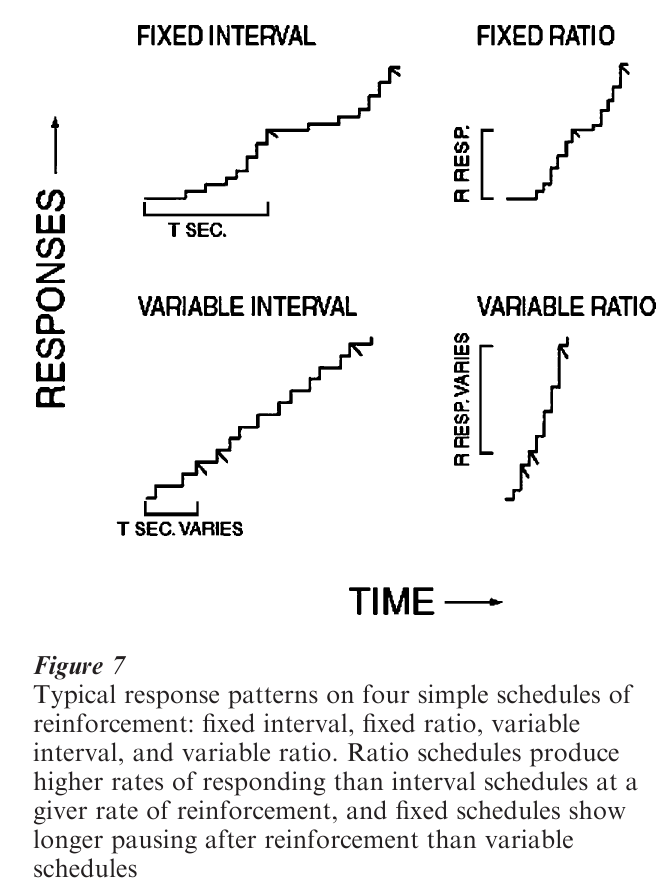

The timing, rate, and persistence of an operant response depend on its reinforcement schedule, a constraint on earning reinforcers that requires the organism to wait for the next available reinforcer, emit a number of responses, or some combination of both. Fig. 7 shows typical patterns of behavior on four simple schedules. In an interval schedule, a reinforcer is produced by the first response after the passage of a specified interval of time. In a ratio schedule, a reinforcer is produced after the emission of a specified number of responses. Time or response requirements can be either fixed or variable. Response rate on both ratio and interval schedules increases with reinforcement rate up to a point, and then decreases again at high rates of reinforcement. At low rates of reinforcement or during extinction, behavior on ratio schedules alternates between bouts of rapid responding and pauses, while responses on interval schedules simply occur with lower frequency. Extinction is prolonged after exposure to reinforcement schedules, especially after exposure to long interval or large ratio schedules.
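The definitions of ratio and interval schedules can be sketched as two small classes. This is an illustration under stated assumptions rather than a model of real behavior: the class names, the uniform distributions used for the variable schedules, and the choice to time each interval from the last reinforced response are all hypothetical.

```python
import random


class RatioSchedule:
    """Reinforce the response that completes a required response count.

    variable=False gives a fixed-ratio schedule; variable=True gives a
    variable-ratio schedule whose requirements average mean_ratio.
    """

    def __init__(self, mean_ratio, variable=False, seed=0):
        self.mean_ratio = mean_ratio
        self.variable = variable
        self.rng = random.Random(seed)
        self.count = 0
        self.requirement = self._draw()

    def _draw(self):
        if not self.variable:
            return self.mean_ratio
        # Assumption: requirements are uniform on 1..(2 * mean - 1).
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self, now):
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count < self.requirement:
            return False
        self.count = 0
        self.requirement = self._draw()
        return True


class IntervalSchedule:
    """Reinforce the first response after a required time has elapsed."""

    def __init__(self, mean_interval, variable=False, seed=0):
        self.mean_interval = mean_interval
        self.variable = variable
        self.rng = random.Random(seed)
        self.available_at = self._draw()

    def _draw(self):
        if not self.variable:
            return self.mean_interval
        # Assumption: intervals are uniform on [0, 2 * mean].
        return self.rng.uniform(0, 2 * self.mean_interval)

    def respond(self, now):
        """Register one response at time `now`; return True if reinforced."""
        if now < self.available_at:
            return False
        # Assumption: the next interval is timed from the reinforced response.
        self.available_at = now + self._draw()
        return True


def reinforcers_earned(schedule, response_times):
    """Count reinforcers produced by responses at the given times."""
    return sum(schedule.respond(t) for t in response_times)


slow = [float(t) for t in range(1, 101)]  # one response per time unit
fast = [t / 2 for t in range(1, 201)]     # two responses per time unit
fixed_ratio_slow = reinforcers_earned(RatioSchedule(5), slow)
fixed_ratio_fast = reinforcers_earned(RatioSchedule(5), fast)
fixed_interval_slow = reinforcers_earned(IntervalSchedule(10), slow)
fixed_interval_fast = reinforcers_earned(IntervalSchedule(10), fast)
```

Under these assumptions, doubling the response rate doubles the reinforcers earned on the fixed-ratio schedule but leaves the number earned on the fixed-interval schedule unchanged, because only the passage of time makes the next interval reinforcer available.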

When rate of reinforcement in interval and ratio schedules is equated, organisms respond at higher rates on ratio schedules (although the difference is small at very high rates of reinforcement). Organisms are sensitive to the fact that the rate of reinforcement in ratio schedules increases directly as a function of response rate, but not in interval schedules.

Characteristic pausing or waiting occurs after earning a reinforcer. Waiting is determined by the currently expected schedule and not by the reinforcer itself. Fixed-interval and fixed-ratio schedules produce long wait times, and variable-interval (VI) and variable-ratio (VR) schedules produce short wait times. Waiting on fixed-interval schedules is reliably a fixed proportion of the interval value. In VI and VR schedules, wait times are heavily influenced by the shortest inter-reinforcement intervals or ratios in a schedule. Waiting is obligatory: organisms will wait even when doing so reduces the immediacy and overall rate of reinforcement. In schedules that require a single response, with the reinforcer occurring a fixed time after the response, the optimal strategy is to respond immediately in order to minimize delay, but organisms still wait a time proportional to the fixed time before making the response that leads to reinforcement.

Schedules can be combined in almost limitless ways to study questions dealing with choice, conditioned reinforcement, and other complex behavioral processes. In experiments on choice between two concurrent variable-interval schedules, the relative preference of many organisms, including humans, will closely match the percentage of reinforcement provided by each schedule. With choices between different fixed-interval schedules, the preference for the shorter interval is far greater than predicted by the relative intervals, indicating that the value of a delayed reinforcer decreases according to a decelerating function over time. Conditioned reinforcers have been studied with behavior chains in which one signaled schedule is made a consequence of another. For example, a one-minute fixed-interval schedule signaled by a red stimulus leads to another one-minute fixed interval signaled by a green stimulus, until a terminal reinforcer is obtained. Behavior extinguishes in the early schedules of chains with three or more schedules, to the extent that reinforcement is severely reduced. Possibly conditioned reinforcers must be contiguous with primary reinforcers to effectively maintain behavior; alternatively, obligatory waiting in the early schedules extends the time to the terminal reinforcer, lengthening the time to reinforcement and generating even longer waiting.
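Two of the quantitative claims in this paragraph can be written out as a brief sketch. The first function follows the matching relation as stated in the text. The second uses a hyperbolic form, value = amount / (1 + k × delay), which is only one assumed example of a decelerating function; the text does not specify the form, and the parameter `k` is hypothetical.

```python
def matching_preference(reinforcers_1, reinforcers_2):
    """Predicted share of responding on schedule 1 under strict matching."""
    total = reinforcers_1 + reinforcers_2
    if total <= 0:
        raise ValueError("at least one schedule must deliver reinforcers")
    return reinforcers_1 / total


def delayed_value(amount, delay, k=1.0):
    """Assumed hyperbolic discounting: value falls steeply, then levels off."""
    if delay < 0:
        raise ValueError("delay must be non-negative")
    return amount / (1.0 + k * delay)


# Schedule 1 delivers 60 reinforcers per hour, schedule 2 delivers 20.
share_on_schedule_1 = matching_preference(60, 20)

# Loss of value over the first unit of delay versus the second unit.
first_drop = delayed_value(1.0, 0) - delayed_value(1.0, 1)
second_drop = delayed_value(1.0, 1) - delayed_value(1.0, 2)
```

With these inputs the matching relation predicts that three quarters of responses go to the richer schedule, and the first unit of delay costs more value than the second, which is what a decelerating function means here.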

3.2 Pavlovian Responses

Pavlovian conditioning, based on reflexes that serve prey capture, feeding, defense, escape, reproductive behavior, and care of offspring, has been demonstrated with a vast number of conditioned stimuli. Conditioned responses include fear (e.g., acceleration in heart rate and perspiration), sexual arousal, and immunological responses. Pavlovian conditioning serves the organism by permitting it to anticipate the stimuli that elicit these reflexes, so it follows that the conditioned response should resemble the unconditioned response. (A notable exception occurs with drug US’s: for example, the UR to morphine is euphoria, but the CR to a CS for administration (e.g., a syringe) is illness rather than euphoria.)

The form of the conditioned response can be influenced by both the US and CS. For example, in the rat, a localized-light CS paired with a food US produces rearing on the hind legs, but the same localized light paired with shock will produce muscular tension, while a tone paired with food leads to an increase in activity. A Pavlovian positive contingency produces excitatory conditioning in that the CR becomes more likely, while a Pavlovian negative contingency produces inhibitory conditioning in that the CR becomes less likely (Fig. 4). For example, fear will be elicited by a tone CS that reliably precedes a shock US, but a light that reliably precedes periods of no shock will inhibit fear when paired with a CS for shock. A conditioned inhibitor can also be produced by occasionally pairing a stimulus with an excitatory CS (a compound CS) while omitting the US or when a CS is extinguished.

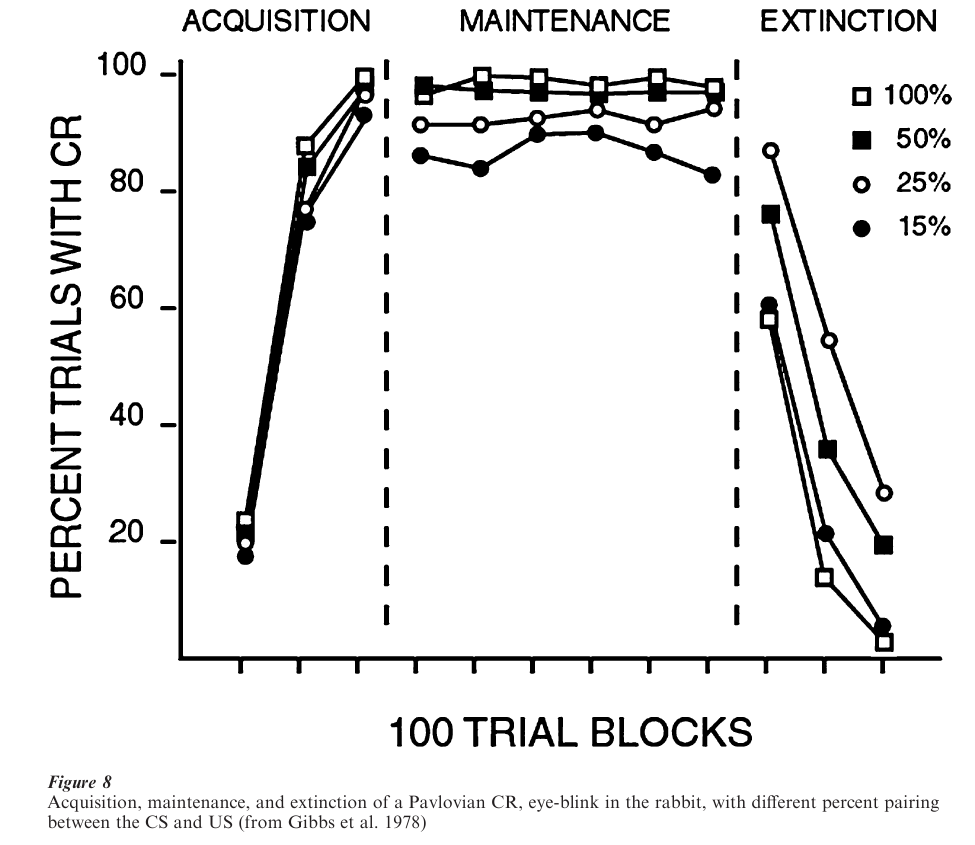

Acquisition of a CR proceeds in a negatively accelerated pattern, illustrated in Fig. 8. Also shown in Fig. 8 is the effect of reducing the probability of a US given a CS (keeping the probability of a US given no CS at zero). Reducing the number of times a CS presentation is followed by a US has the effect of slowing acquisition, reducing the asymptotic number of trials with a CR, and slowing extinction. The acquisition and probability of the CR are also greatly affected by the ratio of CS duration to the inter-US interval, with smaller ratios producing higher CR probabilities.
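A negatively accelerated acquisition curve can be illustrated with a simple linear-operator update, in which each trial closes a fixed fraction of the remaining gap to an asymptote. This model is an assumption introduced for illustration and is not named in the text; the parameters `alpha` (learning rate) and `lam` (asymptote) are hypothetical, and partial reinforcement is represented only by its expected value.

```python
def acquisition_curve(n_trials, p_us=1.0, alpha=0.2, lam=1.0):
    """Return associative strength after each of n_trials CS presentations.

    p_us is the probability that the CS is followed by the US. Using the
    expected update, strength moves toward p_us * lam on every trial.
    """
    strength = 0.0
    curve = []
    for _ in range(n_trials):
        strength += alpha * (p_us * lam - strength)
        curve.append(strength)
    return curve


continuous = acquisition_curve(30)          # US follows every CS
partial = acquisition_curve(30, p_us=0.5)   # US follows half of the CSs

# Trial-by-trial gains shrink as strength approaches the asymptote.
gains = [after - before for before, after in zip([0.0] + continuous, continuous)]
```

The sketch reproduces the shape of acquisition and the lower asymptote under partial reinforcement. It does not capture the slower extinction after partial reinforcement that the paragraph also reports.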

A number of experiments have addressed the question of whether Pavlovian conditioning involves learning associations between the CS and the US, or between the CS and the UR. In experiments in which the CS is paired with a US while the UR is pharmacologically blocked, subjects show a CR to the CS after the block is removed, suggesting CS–US learning. But the matter has not been resolved because the US might still be activating efferent centers in the nervous system.

4. Signal Functions

Organisms can only respond adaptively to contingencies if they provide reliable signals in the form of CS’s and discriminative stimuli. In order to maximize efficiency, an organism must learn a signal after minimal trials, recognize variations, and reject similar but invalid stimuli. Moreover, the organism can’t afford to make too many mistakes or spend too much time learning each variant. The ecological constraints are similar in Pavlovian and operant conditioning, so stimulus functions in both share many features.

4.1 Attention, Blocking, And Preparedness

Organisms do not attend to all stimuli that they can sense when a US or a reinforcer is presented; instead, they are selective. When discriminative stimuli contain multiple dimensions, such as shape and color, organisms may show attention to one dimension and ignore the other. A previously learned CS or discriminative stimulus can also block attention to a stimulus. For example, after pairing a light CS with a food US, further training with a light + tone compound CS will not produce learning of the tone CS. This blocking effect shows that contingency and contiguity are not sufficient for learning about signals; learning will only take place if there are no other reliable signals present.
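Blocking is often formalized with the Rescorla-Wagner model, in which all cues present on a trial share a single prediction error. The text does not name this model, so the sketch below is an assumed illustration; the function name, the cue labels, and the parameter values are hypothetical.

```python
def train(trials, initial=None, alpha=0.3, lam=1.0):
    """Apply a Rescorla-Wagner-style update over a sequence of trials.

    trials:  list of (cues_present, us_present) pairs
    initial: optional dict of starting associative strengths
    Returns a new dict mapping each cue to its associative strength.
    """
    strengths = dict(initial or {})
    for cues, us_present in trials:
        predicted = sum(strengths.get(cue, 0.0) for cue in cues)
        error = (lam if us_present else 0.0) - predicted
        # Every cue present on the trial is updated by the same error.
        for cue in cues:
            strengths[cue] = strengths.get(cue, 0.0) + alpha * error
    return strengths


light_alone = [(("light",), True)] * 50
compound = [(("light", "tone"), True)] * 50

# Blocking group: light is trained first, then the light + tone compound.
blocked = train(compound, initial=train(light_alone))
# Control group: only the compound is trained.
control = train(compound)
```

In the blocking group the light already predicts the food, so the prediction error on compound trials is close to zero and the tone gains almost no strength. In the control group the tone and light share the available strength.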

Attention has a strong genetic component because contingencies associated with a particular niche tend to have predictable signals. Rhesus monkeys will fear a snake CS if it is presented with a fear response US from another monkey, but not a flower CS paired with the same US. In operant behavior, several food-storing birds selectively attend to the spatial locations of food sites and not their non-spatial aspects such as colors. In each case, the animal attends to the most ecologically relevant cue.

4.2 Discrimination And Generalization

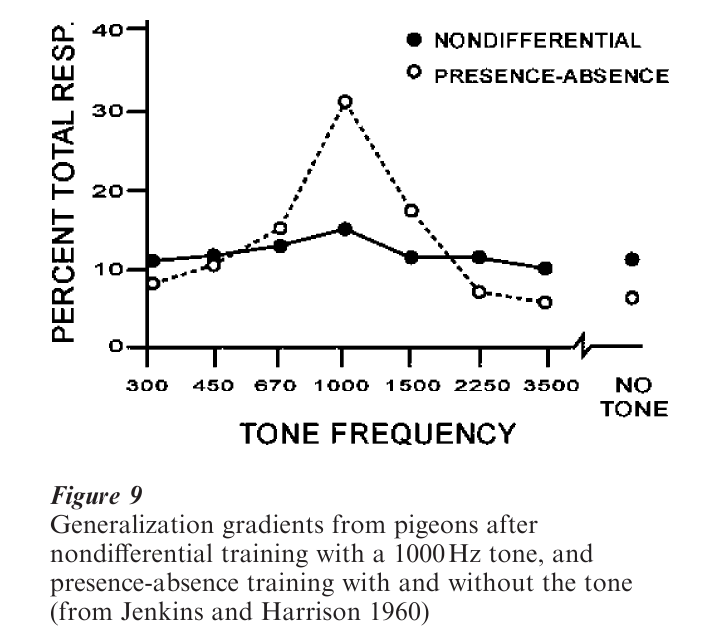

An organism must generalize responding to stimuli that are different from the training stimulus, but not too different. With many stimuli, such as tones and colors, generalization shows a peak around the CS or discriminative stimulus that is called a generalization gradient: the probability of responding decreases as a function of difference from the training stimulus. Fig. 9 shows a typical generalization gradient for operant behavior. Discriminative stimulus control is tighter (peaked gradient) when reinforcement in the presence of the discriminative stimulus was alternated with periods without the stimulus (presence–absence training), in comparison to training without extinction periods (nondifferential training). This finding illustrates that discrimination requires differential training; without differential training, organisms generalize broadly.

Signals in the natural environment are likely to be plants, insects, odors, and so forth. Animals readily learn these natural concepts. Experiments investigating the basis of concept learning in animals find that some concepts such as cars require training with several examples but others, such as an oak leaf, can be learned after a single exemplar.

4.3 Conditional Discrimination And Occasion Setting

Reinforcement can be made contingent on conditional relationships between stimuli. In an identity matching task, a pigeon is reinforced for responding on the basis of similarity: selecting the ‘triangle’ comparison is conditional on the ‘triangle’ sample. Conditional discrimination is the basis of symbolic behavior. For example, when prompted with ‘What color?’ in the presence of a blue square, we say ‘Blue’; and with ‘What shape?’ we say, ‘square.’

Animals and humans can learn conditional discriminations such as ‘same’ and ‘different,’ as well as symbolic matching between arbitrarily related stimuli such as words and their referents. However, only humans can demonstrate certain kinds of emergent conditional discriminations. Once humans learn the symbolic stimulus relation, ‘if stimulus A then B,’ they can respond correctly to a symmetry test, ‘if B then A,’ without further training. The variables that explain symmetry are not yet understood; the ability may be a uniquely human accomplishment, possibly emerging with language. Interestingly, however, both humans and other species show transitivity: ‘if A then B’ and ‘if B then C’; therefore, ‘if A then C.’

In Pavlovian conditioning, a stimulus that signals when a CS will be followed by a US will modulate the CR to the CS. For example, if a tone + light CS is followed by a food US, but the light by itself signals no food, then the light will elicit a food CR only if the tone is also present. The tone in this example is called an occasion setter, and its effects can be shown to generalize, modulating the CR to other CS’s trained with other occasion-setting stimuli and US’s.

5. Applications

The principles of Pavlovian conditioning and operant behavior have been applied to solve numerous practical problems. Pavlovian extinction procedures have proven effective in the treatment of phobias; and clinics have found that conditioned nausea to hospitals after chemotherapy can be prevented by giving patients flavored candy to eat during administration, producing nausea to the flavor and not the hospital. Reinforcement procedures have similarly found many applications. The concept of conditioned reinforcement is behind token economies used in schools and correctional institutions; shaping procedures are being used in programmed instruction with computers; the matching principle has been applied to the reduction of maladaptive behavior in persons with mental retardation without the need for punishment; various principles have been applied to problems of treatment adherence in drug dependency programs; and principles of discrimination learning have been used to develop highly effective language training programs for children with autism. Our understanding of Pavlovian and operant conditioning mechanisms continues to provide simple yet sophisticated approaches to complex problems.
