Sample Neural Circuits Research Paper

Information about the external world and internal actions is represented in the brain by the activity of large networks of heavily interconnected neurons, that is, neural circuits. To be efficient, this representation requires neuronal circuits to perform certain functions which cannot be performed by single neurons. In this research paper, some of the current models of emergent functions of neural circuits are described.

1. Single Neurons And Neural Networks

To some extent, a neural circuit can be viewed as a complex electronic device. Every part of the device (e.g., a transistor) may possess rather complex characteristics, yet only the whole device can perform the function for which it is designed. This distinction is even more striking in the case of neural circuits, where the parts of the device, the single neurons, in many cases exhibit a remarkable degree of uniformity. For example, pyramidal cells of the hippocampus, which carry the representation of space, are similar to each other in terms of their anatomical and physiological properties. It appears therefore that a major factor determining the emergent functional properties of neural circuits is the pattern of connections between the neurons in the network. The relation between connectivity and function is the main problem addressed by the models described below.

A single neuron exchanges information with other neurons by generating a sequence of sharp depolarizations of its membrane, called action potentials, which propagate along the neuron’s axon until they reach the target neurons. Since every action potential has a largely stereotyped shape, it does not contain any specific information about the state of the neuron. Instead, it is believed that all of the information is represented in the neuron’s spike train, that is, the sequence of times at which action potentials are emitted. In most of the models, this is further simplified into a firing rate, that is, the rate at which a neuron emits its action potentials (some of the models, however, consider the timing of every single action potential to be informative; see, e.g., Hopfield 1995). Once the action potential of a neuron arrives at its destination, its effect can be either increased or decreased depolarization of the target neuron’s membrane potential. These two opposing effects are called excitation and inhibition, respectively. In many parts of the brain each target neuron is subject to excitatory and inhibitory effects from thousands of other neurons, and responds by generating its own spike train in a particular time window (Abeles 1991).

The amplitudes of the excitatory and inhibitory influences differ widely and define the strengths of the corresponding connections. It is the distribution of the connection strengths across the neural network which enables it to perform a particular function. Since the strength of the connections is not a fixed quantity but can be modified as a result of development and/or experience, this mechanism also allows for the acquisition of a certain function with experience, and therefore constitutes the neural basis of learning and memory (see, e.g., Implicit Learning and Memory: Psychological and Neural Aspects).

2. Main Network Paradigms

Understanding the emergence of functions from connectivity in neural networks is a subject of active research. This research has resulted in the formulation of numerous models, each characterized by a different degree of generality and biological realism. Since the emphasis is put on the role of connectivity, a highly simplified description of single neurons is adopted in most of the models. Usually, a single neuron is modeled as an input–output element which integrates excitatory and inhibitory influences from other neurons in the network and transforms them directly into its firing rate:

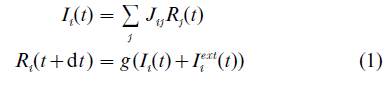

where $R_i$ stands for the rate of the $i$-th neuron in the network and $J_{ij}$ denotes the strength of the connection from neuron $j$ to neuron $i$. The total input which the neuron receives is divided into two components: $I_i$, which is due to the influences coming from the other neurons in the same network, and $I_i^{\mathrm{ext}}$, resulting from the activity in other areas of the brain. Finally, the input is translated into the neuron’s rate at the next time step by a gain function $g(I)$. Typically, the gain function is a continuous, monotonically increasing function of its input, such as a sigmoid or a threshold-linear function. In some of the more realistic models, a spike train is produced as an output. This approach is broadly called connectionism (McClelland et al. 1986).
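In symbols, the definitions above correspond to an update of the following kind. This is a reconstruction from the surrounding text rather than a quotation of Eqn. (1): the explicit time argument $t$ and the discrete-time form are assumptions based on the phrase "at the next time step".

```latex
I_i(t) = \sum_j J_{ij}\, R_j(t), \qquad
R_i(t+1) = g\!\left( I_i(t) + I_i^{\mathrm{ext}}(t) \right)
```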

Just as an individual neuron can be considered as an input–output element transforming inputs into an output rate, so the whole network can be viewed as transforming its external inputs, $I_i^{\mathrm{ext}}$, into the firing rates of the neurons. Specifying the rates of all of the neurons in the network defines the corresponding network state of activity. The additional complexity brought by the set of connection strengths, however, makes the global transformation from external inputs to network states much richer than the one occurring at the single-neuron level. In particular, the network can display dynamic behavior which is to a large degree autonomous and is only partially influenced by external inputs. It is this property which enables new functional behaviors to emerge in neural circuits.
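The single-neuron description above can be turned into a minimal sketch of a network update. The function names, the two-neuron connection matrix, and the synchronous update schedule are illustrative assumptions, not part of the models cited in the text.

```python
import math


def sigmoid(x):
    """Sigmoid gain function: continuous and monotonically increasing."""
    return 1.0 / (1.0 + math.exp(-x))


def threshold_linear(x, threshold=0.0):
    """Threshold-linear gain function: zero below threshold, linear above."""
    return max(0.0, x - threshold)


def network_step(rates, J, external_input, gain=sigmoid):
    """One synchronous update: R_i(t+1) = g(sum_j J[i][j] * R_j(t) + I_ext[i])."""
    n = len(rates)
    new_rates = []
    for i in range(n):
        recurrent_input = sum(J[i][j] * rates[j] for j in range(n))
        new_rates.append(gain(recurrent_input + external_input[i]))
    return new_rates


# Hypothetical two-neuron circuit: neuron 0 excites neuron 1 (J[1][0] > 0),
# and neuron 1 inhibits neuron 0 (J[0][1] < 0).
J = [[0.0, -1.0],
     [2.0, 0.0]]
I_ext = [1.0, 0.0]   # only neuron 0 receives external input
rates = [0.0, 0.0]   # network state: the rates of all neurons

for _ in range(3):
    rates = network_step(rates, J, I_ext, gain=threshold_linear)
```

Even in this tiny example the network state depends on the connection strengths and not only on the external input: neuron 1 becomes active although it receives no external input at all.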

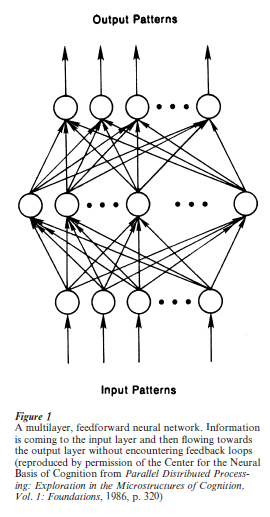

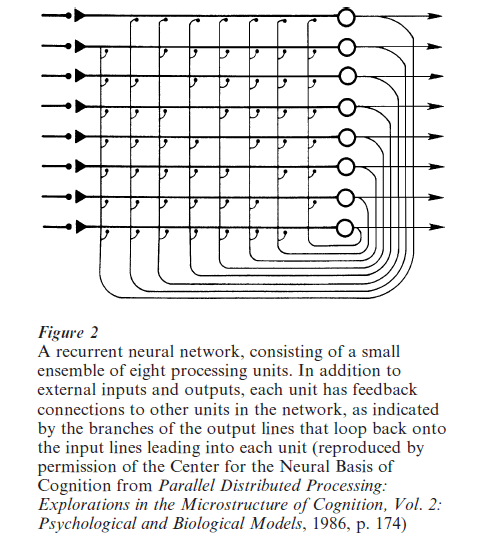

Some of the major network paradigms that were formulated in the framework of connectionist modeling are described below. They are classified according to the architecture of the connections. In feed-forward networks, the neurons can be divided into an ordered sequence of distinct layers, such that all of the connections originating from the neurons of a particular layer only target the layers which are higher up the stream. Importantly, neither feedback connections from upper to lower layers, nor recurrent connections within the layers are allowed. The lowest layer usually contains the neurons which receive the external inputs, while the highest layer is the one where the output neurons are located. The other type of network which will be described is the recurrent network, where information from a single neuron can return to it via connections which form so-called feedback loops. The division of the network into distinct layers is therefore not possible, and the whole network must be considered as one layer in which both input and output neurons are located.

2.1 Feed-Forward Neural Networks

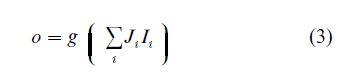

In these networks, information flows in one direction from the input layer to the output layer, without feedback loops. This arrangement of connections does not allow the network to exhibit autonomous dynamics, and the activity of the output neurons is therefore completely determined by the inputs in a finite number of time steps. In other words, a feed-forward network realizes a certain mathematical function of its input.


The precise form of this function depends on the architecture of the network (the number of layers, the number of neurons in each layer, etc.) and the distribution of the strengths of the connections between the layers. The simplest feed-forward network is the famous perceptron, which was also the first neural network to be studied (Rosenblatt 1962). It consists of an input layer and a single output neuron. The mathematical function which it realizes is therefore a gain function applied to a weighted sum of its inputs.
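In the notation of the single-neuron description, the perceptron function can be sketched as follows. The output symbol $R^{\mathrm{out}}$ and the single-index weights $J_j$ are assumed notation; in the classical perceptron the gain function $g$ is a hard threshold.

```latex
R^{\mathrm{out}} = g\!\left( \sum_j J_j\, I_j^{\mathrm{ext}} \right)
```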

It was shown that only a limited family of functions can be realized with the simple perceptron, but adding intermediate layers of neurons expands this family to include practically every mathematical function. Effective learning algorithms for feed-forward networks have been developed, the most widely used being the so-called backpropagation of errors. This has allowed numerous practical applications of feed-forward networks.
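The limitation of the simple perceptron, and the effect of an intermediate layer, can be illustrated with a small sketch. It uses a hard-threshold perceptron with a bias term and the classical perceptron learning rule, both of which are assumptions not spelled out in the text; the two-layer network is wired by hand rather than trained with backpropagation.

```python
def perceptron_output(weights, bias, inputs):
    """Hard-threshold unit: 1 if the weighted sum of inputs exceeds zero, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=50, learning_rate=1.0):
    """Classical perceptron rule: change weights only when the output is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - perceptron_output(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def count_errors(weights, bias, samples):
    """Number of samples on which the trained perceptron gives the wrong output."""
    return sum(perceptron_output(weights, bias, x) != t for x, t in samples)


def two_layer_xor(inputs):
    """Hand-wired network with one intermediate layer (an OR unit and an AND unit)."""
    h_or = perceptron_output((1.0, 1.0), -0.5, inputs)
    h_and = perceptron_output((1.0, 1.0), -1.5, inputs)
    return perceptron_output((1.0, -1.0), -0.5, (h_or, h_and))


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND_SAMPLES)
xor_weights, xor_bias = train_perceptron(XOR_SAMPLES)

and_errors = count_errors(and_weights, and_bias, AND_SAMPLES)  # learnable
xor_errors = count_errors(xor_weights, xor_bias, XOR_SAMPLES)  # not learnable
xor_two_layer = [two_layer_xor(x) for x, _ in XOR_SAMPLES]
```

The single perceptron learns AND but always misclassifies at least one XOR sample, because no single weighted sum separates the XOR classes. The two-layer network computes XOR by combining its intermediate units.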

Another prominent feed-forward network paradigm, which also contains some elements of recurrent processing, is the self-organizing feature map, developed by Kohonen (1984). It attempts to account for the ubiquitous presence of feature maps in the neocortex of the mammalian brain, ranging from the retinotopic map in the visual cortex to the topographic representation of the body in the somatosensory cortex. From the functional perspective, the network allows extraction of low-dimensional features from a set of multidimensional inputs, and maps them onto an array of output neurons arranged in a low-dimensional (typically one- or two-dimensional) sheet in a topographic manner, such that distance relations in the space of the inputs are represented as distance relations between the neurons in the output layer.

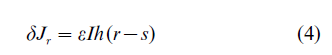

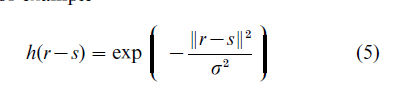

This is achieved by numerous presentations of inputs and a gradual update of the feed-forward connections. At every presentation of the (high-dimensional) input, $I$, the excitation received by each neuron, occupying the site $r$ in the output layer, is given by the scalar product $\sum_i J_{ri} I_i = J_r \cdot I$, where $J_r$ is the vector of the neuron’s connection strengths. The crucial step of the learning algorithm is finding the neuron which receives the maximum excitation for a given input. After this is done (let $s$ denote the site with the maximum excitation), the feed-forward connections are modified in a Hebb-like manner, but only for the output neurons which are located in the neighborhood of $s$. The update is controlled by a function $h(r - s)$, which selects the neighboring sites that participate in the current update.
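A commonly used form of this update moves the connection vectors of the winning site and of its neighbors toward the current input, with a Gaussian neighborhood function. It is given here as an assumption: it follows the standard Kohonen rule rather than a formula stated in the text, and the learning rate $\epsilon$ and the neighborhood width $\sigma$ are hypothetical parameters.

```latex
\Delta J_r = \epsilon\, h(r - s)\, (I - J_r), \qquad
h(r - s) = \exp\!\left( -\frac{|r - s|^2}{2\sigma^2} \right)
```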

This learning rule guarantees that neighboring neurons tend to update their connections in response to similar inputs, and thereby develop the selectivity to similar features. Self-organizing feature maps have been applied successfully to model the appearance of ocular dominance and orientation selectivity maps in the primary visual cortex (Erwin et al. 1995).
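The learning procedure can be sketched for a one-dimensional chain of output neurons. This is a minimal illustration under stated assumptions: the update follows the standard Kohonen rule with a Gaussian neighborhood, and the names `epsilon` and `sigma`, the chain of ten sites, and the training inputs on a quarter circle are all hypothetical choices.

```python
import math
import random


def excitation(weights, inputs):
    """Scalar product J_r . I received by one output neuron."""
    return sum(w * x for w, x in zip(weights, inputs))


def find_winner(J, inputs):
    """Site s of the output neuron receiving the maximum excitation."""
    return max(range(len(J)), key=lambda r: excitation(J[r], inputs))


def neighborhood(r, s, sigma):
    """Gaussian h(r - s): close to 1 near the winner, close to 0 far away."""
    return math.exp(-((r - s) ** 2) / (2.0 * sigma ** 2))


def som_update(J, inputs, epsilon=0.1, sigma=1.0):
    """Move the weights of the winner and its neighbors toward the input."""
    s = find_winner(J, inputs)
    for r in range(len(J)):
        h = neighborhood(r, s, sigma)
        J[r] = [w + epsilon * h * (x - w) for w, x in zip(J[r], inputs)]
    return s


# Hypothetical training run: two-dimensional inputs of unit length, so that
# the scalar product favors the neuron whose weights point in a similar direction.
random.seed(0)
chain = [[random.random(), random.random()] for _ in range(10)]
for _ in range(2000):
    angle = random.uniform(0.0, math.pi / 2.0)
    som_update(chain, [math.cos(angle), math.sin(angle)], epsilon=0.1, sigma=1.5)
```

Because neighboring sites are updated together, they are pulled toward the same inputs, which is the mechanism behind the similar selectivity of neighboring neurons described above.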

2.2 Recurrent Neural Networks

In these network models, the emphasis is put on the functions which emerge from the recurrent connections between the neurons in a single layer. As opposed to feed-forward networks, recurrent networks may have nontrivial autonomous dynamics, since activity can propagate along the feedback loops independently of the external input. The most famous and well-studied model of this type is associative memory. This paradigm goes back to the classical ideas of Hebb on neuronal ensembles and synaptic plasticity (Hebb 1949), and was reinvigorated in the highly influential paper by Hopfield (1982). It is based on the observation that for suitable choices of the connections, the network described by Eqns. (1) can sustain various nontrivial activity states without the presence of external inputs. On an intuitive level, this can come about from the mutual excitation within a group of neurons, resulting in the activity reverberating across this group without ever extinguishing itself. On the mathematical level, this property manifests itself in the presence of many stable stationary solutions to the nonlinear Eqns. (1), with different subgroups of neurons having nonzero rates. The corresponding network states are often called attractors. Every attractor state can be reached autonomously by the network provided that the initial state has a large enough degree of overlap with it, that is, sufficiently many shared neurons with nonzero rates. This process can be identified with an associative recall of stored memories from initial cues containing only partial information. For instance, a telephone number can be recalled after the first several digits are provided. As shown in the original paper by Hopfield, a sufficient condition for the network to have stationary attractors is the symmetry of the connections, that is, the condition that any pair of neurons has reciprocal connections of equal strength.
More importantly, for any ‘random’ choice of putative memory states, $R_i^{\mu}$, one can propose a distribution of connections, constructed as a sum over the set of putative memories, which makes them attractor states of the network. This construction also suggests a way in which connections could be modified during learning. For each new memory, the corresponding state should be imposed on the network by external inputs. Then, for every pair of neurons, the strength of the corresponding connection is modified by adding a product of two terms, each of which depends on the activity level of one of the two neurons. In the end, the distribution of the connection strengths depends on all of the memories, but does not depend on the order in which they were stored in the network. This learning algorithm was first envisioned by Hebb and subsequently gained neurophysiological confirmation. Although some of the features of the Hopfield model, such as the symmetry of connections, are not biologically plausible, subsequent studies showed that they can be relaxed without destroying the performance of the model (Amit 1989, Hertz et al. 1991).
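The storage and recall procedure can be sketched with the binary version of the model, in which every neuron takes the value +1 or -1 and each stored pattern adds the product of the two activities, divided by the number of neurons, to every connection. This is a simplification of the rate description of Eqns. (1), and the two eight-neuron patterns, the absence of self-connections, and the synchronous update schedule are illustrative assumptions.

```python
def hebbian_store(J, pattern):
    """Add one memory: J[i][j] += pattern[i] * pattern[j] / N, no self-connections."""
    n = len(pattern)
    for i in range(n):
        for j in range(n):
            if i != j:
                J[i][j] += pattern[i] * pattern[j] / n


def recall(J, state, max_steps=20):
    """Update all neurons repeatedly until the network state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(max_steps):
        new_state = []
        for i in range(n):
            field = sum(J[i][j] * state[j] for j in range(n))
            if field > 0:
                new_state.append(1)
            elif field < 0:
                new_state.append(-1)
            else:
                new_state.append(state[i])  # zero input: keep the current value
        if new_state == state:
            break
        state = new_state
    return state


# Two hypothetical memories, chosen to be orthogonal to each other.
N = 8
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, 1, -1, -1, 1, 1, -1, -1]

J = [[0.0] * N for _ in range(N)]
for pattern in (pattern_a, pattern_b):
    hebbian_store(J, pattern)

# Partial cue: the first memory with one neuron in the wrong state.
cue = list(pattern_a)
cue[0] = -cue[0]
recalled = recall(J, cue)
```

Starting from the corrupted cue, the network settles into the first stored pattern, which is the associative recall described above. Because each memory simply adds its own term to every connection, storing the patterns in the opposite order produces the same connection strengths.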

More recently, associative memory networks were directly confronted with experimental results on delayed memory tasks in monkeys. In these experiments, the task of the monkey is to compare the sample and the match stimuli which are separated by the delay period of several seconds. In several cortical areas, neurons have been found which exhibited elevated firing rate during the delay period, which was selective for the sample stimulus, but only if this stimulus was seen many times in the past during training. These experiments provided a wealth of quantitative information about the persistent activity patterns in the brain, which were reasonably reproduced in the model (Amit 1995).

Nonstationary states of recurrent networks can also be of functional significance. In particular, by introducing temporal delays in the connections, one can obtain solutions in which the network exhibits a set of transitions between attractors stored in a temporal sequence (Kleinfeld and Sompolinsky 1988). This property can be used, for instance, in storing a sequence of motor commands associated with a particular action. On a more general level, it was shown that recurrent networks can learn a wide variety of time-dependent patterns, both periodic and aperiodic.

Another class of recurrent neural networks was developed in order to model the representation of continuous features rather than discrete sets of memories. In particular, coding of the direction of hand movements in the motor cortex, orientation of stimuli in the visual cortex, and spatial location of the animal and direction of gaze were all proposed to involve networks with continuous sets of attractor states. This property of the network arises from a special structure of connections, in which neurons with a preference for similar features are strongly connected. For example, for neurons in the visual cortex which prefer stimuli oriented at the angles $\theta_i$ ($-90° < \theta_i < 90°$), the distribution of connection strengths was proposed to combine two terms: an excitatory term, which is strongest between neurons with similar preferred angles, and a uniform inhibitory term.
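One widely used form with this structure is sketched below. It is an assumption based on standard ring models of orientation selectivity rather than a formula stated in the text: the positive constants $J_1$ and $J_0$ are hypothetical names, any overall normalization is omitted, and the factor 2 makes the connection strength periodic over the 180° range of orientations.

```latex
J_{ij} = J_1 \cos\!\big( 2(\theta_i - \theta_j) \big) - J_0
```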

This structure of connections combines the effects of synergy between neurons with similar preferences (first, excitatory term) and global competition for activity (second, inhibitory term). As a result, the network possesses a set of marginally stable attractors, such that in every attractor the activity is localized on a group of neurons with similar preferences, the rest of the network being in the quiet state. External input serves to evoke a particular attractor out of the set possessed by the network; for example, an oriented visual stimulus evokes the activity profile centered at the corresponding neuron. The precise shape of the activity profile is determined by the properties of single neurons (responsiveness) and the parameters of the interactions, but is largely independent of variations in the input which evokes it. This property of networks with continuous attractors may account for such peculiar effects as invariance of orientation tuning to the contrast of the stimuli (Sompolinsky and Shapley 1997), persistence of space-related activity in the hippocampus in total darkness (Tsodyks 1999), and stability of gaze direction in the darkness (Seung 1996), which are difficult to explain in the more traditional, feed-forward models (see Figs. 1 and 2).
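The interplay of local excitation and global inhibition can be illustrated with a small simulation of a ring of orientation-selective neurons. This is a sketch under stated assumptions, not the model of the cited papers: the cosine form of the connections, the threshold-linear gain, the weakly tuned input, and all parameter values are hypothetical. The coupling is deliberately kept weak so that the iteration is guaranteed to converge, which means the sketch shows recurrent sharpening of a stimulus-driven profile rather than the marginally stable attractor regime itself.

```python
import math


def preferred_angles(n):
    """Preferred orientations in degrees, evenly spaced inside (-90, 90)."""
    return [-90.0 + 180.0 * (i + 0.5) / n for i in range(n)]


def ring_connections(angles, j_exc=1.0, j_inh=0.8):
    """Excitation between similar preferences minus uniform inhibition."""
    n = len(angles)
    return [[(j_exc * math.cos(2.0 * math.radians(a - b)) - j_inh) / n
             for b in angles] for a in angles]


def stimulus(angles, stim_angle, contrast=1.0, tuning=0.3):
    """Weakly tuned external input; positive for every neuron when tuning < 0.5."""
    return [contrast * (1.0 - tuning
                        + tuning * math.cos(2.0 * math.radians(a - stim_angle)))
            for a in angles]


def relax(J, external, steps=600, dt=0.1):
    """Iterate the rate dynamics with a threshold-linear gain, starting from rest."""
    n = len(external)
    rates = [0.0] * n
    for _ in range(steps):
        total = [sum(J[i][j] * rates[j] for j in range(n)) + external[i]
                 for i in range(n)]
        rates = [r + dt * (-r + max(0.0, x)) for r, x in zip(rates, total)]
    return rates


angles = preferred_angles(36)
ring = ring_connections(angles)
stim_index = 9                      # stimulus at this neuron's preferred angle
rates = relax(ring, stimulus(angles, angles[stim_index]))
peak_index = max(range(len(rates)), key=rates.__getitem__)
```

Although every neuron receives positive external input, the resulting activity is localized: it peaks at the neuron whose preferred angle matches the stimulus, while neurons with orthogonal preferences are silenced by the global inhibition.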

Bibliography:

- Abeles M 1991 Corticonics. Cambridge University Press, New York

- Amit D J 1989 Modeling Brain Function. Cambridge University Press, New York

- Amit D J 1995 The Hebbian paradigm reintegrated: Local reverberations as internal representations. Behavioral and Brain Sciences 18: 617–26

- Erwin E, Obermayer K, Schulten K 1995 Models of orientation and ocular dominance columns in the visual cortex: A critical comparison. Neural Computation 7: 425–68

- Hebb D O 1949 The Organization of Behavior. Wiley, New York

- Hertz J, Krogh A, Palmer R G 1991 Introduction to the Theory of Neural Computation. Addison-Wesley, New York

- Hopfield J J 1982 Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences USA 79: 2554–8

- Hopfield J J 1995 Pattern recognition computation using action potential timing for stimulus representation. Nature 376: 33–6

- Kleinfeld D, Sompolinsky H 1988 Associative neural network model for the generation of temporal patterns. Theory and application to central pattern generators. Biophysical Journal 54: 1039–51

- Kohonen T 1984 Self-organization and Associative Memory. Springer, Heidelberg, Germany

- McClelland J L, Rumelhart D E, PDP Research Group (eds.) 1986 Parallel Distributed Processing: Explorations in the Microstructure of Cognition. MIT Press, Cambridge, MA

- Rosenblatt F 1962 Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms. Spartan, Washington, DC

- Seung H S 1996 How the brain keeps the eyes still. Proceedings of the National Academy of Sciences USA 93: 13339–44

- Sompolinsky H, Shapley R 1997 New perspectives on the mechanisms for orientation selectivity. Current Opinion in Neurobiology 7: 514–22

- Tsodyks M 1999 Attractor neural network models of spatial maps in hippocampus. Hippocampus 9: 481–9