The world is filled with acoustic vibrations, sounds used by animals for communication, predator evasion, and, in the case of humans, also for artistic expression through poetry, theater, and music. Hearing can complement vision and other senses by enabling the transfer of acoustic information from one animal to the next. In some instances, acoustic signals offer distinct advantages over visual, tactile, and chemical signals. Sound can be effectively transmitted in complete darkness, quickly and over long distances. These advantages may explain why hearing is ubiquitous in the animal world, in air and underwater.

The ability to detect and process acoustic signals evolved many times throughout the animal kingdom, from insects and fish to birds and mammals. Even within some animal groups, there is evidence that hearing evolved independently several times. Ears appear not only on opposite sides of the head, but also on a variety of body parts. Out of this diversity, one finds fascinating specializations but also a surprising number of general principles of organization and function. Comparative studies of hearing attempt to bring order to these findings and to deepen our understanding of sound processing and perception.

Research on comparative hearing includes a vast number of behavioral measures of auditory function, as well as elaborate neuroanatomical and neurophysiological studies of the auditory structures and signal processing. To review all common measures of auditory function, anatomy, and physiology in all species studied to date is far beyond the scope of this research paper. Instead, we review selected data from representative species, which allow us to highlight general principles and noteworthy specializations. We begin with a brief introduction to acoustic stimuli, followed by a review of ears and auditory systems in a large sample of species, and we conclude with a comparative presentation of auditory function in behavioral tasks. Due to the breadth of this topic, we have omitted most biophysical observations. For the reader who wishes to follow up on any or all topics covered here in more detail, we recommend Fay, Popper, and colleagues.

Overview of Acoustic Stimuli

Many features of hearing organs are simple consequences of the nature of sound waves. These are fluctuations in pressure propagating away from the source with a certain velocity. Therefore, devices sensitive to pressure, either pressure receivers or pressure gradient receivers, may detect sound. Because movement of particles in a medium is directional, receivers sensitive to this component of sound are inherently directional. Both types of detectors have evolved in the animal kingdom.

Sound behaves in a complicated manner close to a sound source (the near field) because sources are rarely the ideal small pulsating sphere. Farther away, in the far field (beyond about one wavelength from the source), sound behaves more simply, especially if there are no reflections. Sound waves can be characterized by their intensity or sound pressure level, frequency, and wavelength, all of which affect the detection, discrimination, and localization of acoustic signals.
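The one-wavelength rule of thumb for the far field can be made concrete with a short calculation. The sketch below is illustrative only: the sound speeds (343 m/s in air, 1480 m/s in water) are assumed round values that do not come from the text, and the function names are hypothetical.

```python
# Approximate sound speeds in m/s. These are assumed round values,
# not figures taken from the text.
SPEED_OF_SOUND_M_PER_S = {"air": 343.0, "water": 1480.0}


def wavelength_m(frequency_hz: float, medium: str = "air") -> float:
    """Return the wavelength in metres of a pure tone: lambda = c / f."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_SOUND_M_PER_S[medium] / frequency_hz


def in_far_field(distance_m: float, frequency_hz: float, medium: str = "air") -> bool:
    """Apply the rule of thumb that the far field begins about one wavelength away."""
    return distance_m >= wavelength_m(frequency_hz, medium)


if __name__ == "__main__":
    # A 5 kHz cricket song in air versus a 100 Hz tone in water.
    print(wavelength_m(5000.0, "air"))
    print(wavelength_m(100.0, "water"))
```

Because sound travels roughly four times faster in water than in air, a tone of a given frequency has a much longer wavelength underwater, so an aquatic receiver at a given distance is more likely to be in the near field of a low-frequency source.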

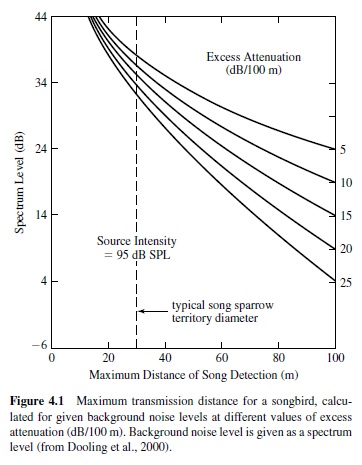

Sound transmission distance is influenced by the characteristics of the acoustic signal and the environment (e.g., Wiley & Richards, 1978). These data, together with psychophysical measures of auditory function, can be used to estimate the communication range of a given species (see Figure 4.1). For a detailed discussion of acoustics and their constraints on hearing in different environments, we refer the reader to comprehensive books by Beranek (1988) and Pierce (1989).

Auditory Periphery

Auditory hair cell bundles must be displaced for sensory transduction to occur. Although the basics of mechanoelectrical transduction are very similar among vertebrates, there are many ways to achieve movement of hair cell cilia because there are different physical constraints on the animals that detect sound in air or water. In water, soft tissues are acoustically transparent. Therefore, sound waves traveling through the body in water cause little direct displacement of hair cell bundles. Fish and amphibians solve this problem through relative motion between the hair cells and a denser overlying structure called an otolith (Lewis & Narins, 1999; Popper & Fay, 1999). In air, tympanic membranes and middle ear bones of terrestrial vertebrates compensate for the impedance mismatch between air and the water-filled inner ear cavities (Fritzsch, Barald, & Lomax, 1998).

Hearing is an evolutionarily ancient sense, as shown by vertebrate fossils that possess an inner ear. Furthermore, such “primitive” vertebrates as lampreys and the coelacanth have inner ears (Popper & Fay, 1999). Modern vertebrates are thought to have evolved from a primitive group of jawless fishes. These early fishes gave rise to two separate evolutionary lines, the cartilaginous fishes (Chondrichthyes) and the bony fishes (Osteichthyes), as well as to the modern jawless fish. Early in bony fish evolution, the crossopterygian fishes are thought to have split off, to give rise eventually to the tetrapods. This lineage gave rise to the amphibians and then the stem reptiles. These early reptiles then diverged, leading to the evolution of two groups: the birds and crocodilians, and the mammals. There have been significant modifications to the ear in all lineages.

Insects

Insect ears appear often in evolution and generally have three major features: (a) thinning of the cuticle over the organ to form a tympanum that is moved by air pressure, (b) formation of an air cavity of tracheal origin, sometimes expanded into a chamber, and (c) innervation of this complex by sensory cells. Sound vibrates the tympanum and transmits motion to the sensory cell. Thus, unlike vertebrate ears, where airborne vibrations are converted into vibrations in fluid by middle ear bones, no such conversion is required in insects. Most insect ears do not have many receptor cells, but tonotopic organization has developed where there are many receptors. For example, crickets have relatively large ears, with 60 to 70 auditory receptor neurons divided into two groups. The proximal group is sensitive to lower frequencies, whereas the larger distal population is tuned over a frequency range from the best frequency of cricket song (5 kHz) to ultrasound. Another strategy has been to develop different ears with different sensitivities: Mantis ears exhibit sensitivity to low frequencies in their mesothoracic ear and sensitivity to high (ultrasonic) frequencies in the metathoracic ear (Yager, 1999).

Fishes

The auditory organs of fishes are the saccule, lagena, and utricle. Each has sensory tissue containing hair cells and support cells overlain by an otolith. The otolith organ most used in hearing varies among species. In the herrings the utricle is specially adapted for receiving acoustic input, but in most fishes the saccule is the primary auditory organ. Most bony fishes have a swim bladder or other gas-filled “bubble” in the abdominal cavity or head. As sound pressure fluctuations occur, the bubble expands and contracts according to the amplitude of motion characteristic of the enclosed gas. The swim bladder thus becomes a monopole sound source. The motions of the swim bladder walls may reach the ears of some species and cause relative motion between the otoliths and underlying hair cells. In this case, the sound pressure amplitude determines hair cell stimulation. In most fishes response of the ear to sound is determined simultaneously by the ear detecting particle motion in its “accelerometer” mode and by the ear detecting sound pressure via the swim bladder response. In some species the swim bladder is specifically linked to the ear via specialized mechanical pathways. The best known such pathway is the Weberian ossicle system, a series of four bones connecting the anterior swim bladder wall to the ears. Fishes with such ossicles are considered to be “hearing specialists” in that their sensitivity and bandwidth of hearing is generally greater than for animals lacking such a system. The herrings and the mormyrids have gas bubbles near the ears in the head and are thus also considered to be hearing specialists (Popper & Fay, 1999).

Frogs

With the movement to land, all the major features of the amniote ear appeared, including the tympanum, middle ear, impedance-matching ossicles inserted in the oval window, a tectorial body overlying the hair cells, and specialized auditory end organs (Lewis & Narins, 1999). The ossicles act as an impedance transformer because they transmit motion of the tympanic membrane to the fluid-filled inner ear. Differential shearing between the membranes and hair cell cilia stimulates the hair cells. The sensory hair cells of the frog (Rana) have been used to identify the cellular basis of transduction, mechanosensitive channels located at the tips of the stereocilia (Hudspeth, Choe, Mehta & Martin, 2000). Hair cells are depolarized by movement of the stereocilia and release neurotransmitter onto their primary afferents.

Frogs have very specialized peripheral auditory systems, with two end organs, the amphibian and basilar papillae. This duplication may increase the frequency range because the basilar papilla is sensitive to higher frequencies than is the amphibian papilla (Lewis & Narins, 1999). The discovery of a papilla structure in the coelacanth similar to the amniotic basilar papilla suggests that this organ arose before the evolution of tetrapods (Fritzsch, 1998). There is debate about the homology of the amphibian papilla with the basilar papilla or cochlea of amniotic vertebrates (see Lewis & Narins, 1999). The amphibian papilla is functionally similar to the amniote papilla, but a lack of structural similarity suggests that these organs arose in parallel, with the common function reflecting a basic auditory role. Paleontological evidence suggests that the amniote tympanic ear may have evolved independently at least five times, in synapsids, lepidosauromorph diapsids, archosauromorph diapsids, probably turtles, and amphibians (Clack, 1997).

In frogs the air spaces of the middle ear open widely to the mouth cavity via large eustachian tubes. This wide pathway of communication between the two ears and the mouth and lungs (and possibly the endolymphatic sac, which is located dorsally on the animal’s neck and upper back) makes possible several potential pathways of sound both to the outer and inner surface of the tympanic membrane. Evidence exists that the ears of some anurans operate both as pressure receivers and as pressure gradient receivers in certain frequency ranges. Because pressure gradients are vector quantities, the ear operating in this mode is inherently directional (Lewis & Narins, 1999).

Reptiles

The reptilian ear has a new feature: a basilar membrane, a thin partition in the fluid partition along which alternating pressures are transmitted (Wever, 1978). Despite the uncertainty surrounding the amphibian ear, and the parallel evolution of the middle ear in amniotes, the evolution of the stereotypical basilar papilla of modern amniotes begins with the stem reptiles (Manley, 2000; M. R. Miller, 1980). The key features of this auditory organ are seen in turtles. The turtle basilar papilla is a flat strip of membrane populated by approximately 1,000 hair cells (Köppl & Manley, 1992). Salient features in papilla evolution include lengthening and curvature of the sensory epithelia, features thought to both enhance sensitivity and extend the audible frequency range (Gleich & Manley, 2000). The avian-crocodilian and mammalian lineages are thought to have diverged from the stem reptiles quite early, and the papillae of these groups are believed to have evolved in parallel. Elongation relative to the turtle papilla is seen in all groups. In addition, lizards display a unique population of freestanding hair cells that are sensitive to higher frequencies. How is frequency tuning achieved? Recordings from turtle hair cells show that a major part of the peripheral tuning mechanism resides in the individual hair cells; that is, they display electric tuning (Fettiplace, Ricci, & Hackney, 2001). Other mechanisms may also apply (Manley, 2000).

Birds

The outer ear of birds includes an external canal and a middle ear similar to those of the amphibians and reptiles in having a single major ossicle, the columella. The efficiency and frequency response of this system is not unlike that of mammals in the frequency range below about 2000 Hz. The columellar middle ear probably should not be considered the major factor limiting the frequency range of hearing because at least one species (the barn owl) has extended its range considerably without abandoning the columella design (Gleich & Manley, 2000). The inner ear of birds includes a cochlea in addition to an associated lagena. A cross section of the bird basilar membrane and papilla shows many rows of hair cells that vary in height across the membrane. There are not two types of hair cells, like there are in mammals, but the tall hair cells closest to the neural edge of the papilla provide the most afferent input to the auditory nerve dendrites, whereas short hair cells furthest from the neural edge receive purely efferent innervation. In general, the height of the hair cell stereocilia varies smoothly from one end of the papilla to the other. Long stereocilia have been associated with low frequency sensitivity, and short with high frequency sensitivity. It is likely that a frequency analysis occurs along the basilar membrane of the bird ear in much the same way that it occurs among mammals (Fuchs, 1992).

Mammals

Mammals have three middle ear bones that work together as a lever system to amplify the force of sound vibrations. The inner end of the lever moves through a shorter distance but exerts a greater force than the outer end. In combination the bones double or triple the force of the vibrations at the eardrum. The muscles of the middle ear also modify the amplification of this lever system and can act to protect the ear from large vibrations. The stapes passes the vibrations to the oval window or opening in the bony case of the cochlea. The oval window is 15 to 20 times smaller than the eardrum, which produces some of the amplification needed to match impedances between sound waves in the air and in the cochlear fluid and set up the traveling wave in the inner ear.
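The two gain stages described above can be combined in a back-of-the-envelope calculation. The sketch below is an idealized illustration, assuming that the lever's force gain and the eardrum-to-oval-window area ratio simply multiply to give a pressure gain and that no energy is lost; real middle ears are frequency dependent and less efficient. The function names are hypothetical.

```python
import math


def pressure_gain(lever_force_ratio: float, area_ratio: float) -> float:
    """Idealized pressure gain: force gain of the ossicular lever multiplied by
    the ratio of eardrum area to oval-window area (pressure = force / area)."""
    return lever_force_ratio * area_ratio


def gain_db(gain: float) -> float:
    """Express a pressure ratio in decibels: 20 * log10(ratio)."""
    return 20.0 * math.log10(gain)


if __name__ == "__main__":
    # Ranges quoted in the text: force doubled or tripled; window 15 to 20 times smaller.
    low = pressure_gain(2.0, 15.0)
    high = pressure_gain(3.0, 20.0)
    print(low, round(gain_db(low), 1))
    print(high, round(gain_db(high), 1))
```

Under these simplifying assumptions, the figures in the text correspond to a pressure gain of roughly 30 to 60 times, or about 30 to 36 dB.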

In mammals, sensory cells are organized on the basilar membrane into one row of inner hair cells (inner because they are closer to the central core of the cochlea) and three to five rows of outer hair cells (Dallos, 1996). Inner hair cells synapse onto Type 1 primary afferents and are innervated by very few efferents. Outer hair cells are sparsely innervated by Type 2 primary afferents and receive more efferent terminals. Type 1 afferents comprise 95% of total afferents, and they convey the frequency, intensity, and phase of the signal to the auditory nerve. Sound frequency is encoded by place on the cochlea; intensity is encoded by the DC component of the receptor potential; and timing is encoded by the AC component. Such a system must act as a low-pass filter, which places limits on phase locking. There are two main theories about the function of outer hair cells. One is that the traveling wave is boosted by a local electromechanical amplification process; that is, the outer hair cells act as a cochlear amplifier. The other theory is that the outer hair cells mechanically affect the movement of the tectorial membrane. If outer hair cells are destroyed, frequency tuning is greatly diminished.

Central Auditory Pathways

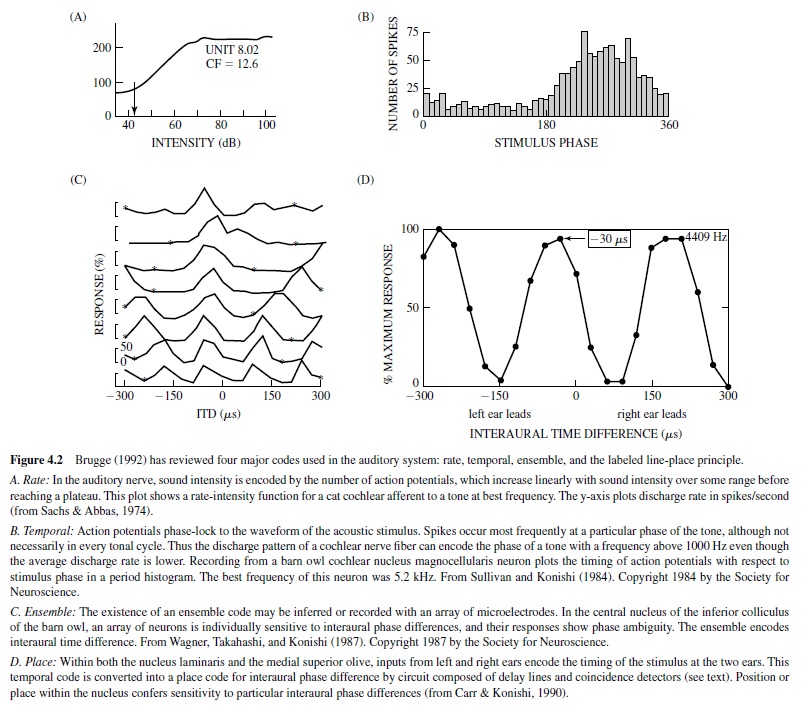

Auditory information is encoded in the activity of both single neurons and arrays of neurons. This activity can be divided into four major codes: rate, temporal, ensemble, and the labeled line-place principle (Brugge, 1992; Figure 4.2). These codes assume the existence of a sensitive receiver or set of neurons whose activity changes in response to the code. None of the codes appear capable of transmitting the entire array of spectral and temporal information (Brugge, 1992). Instead, they appear to operate in various combinations depending on the acoustic environment. Coding strategies also appear to change at different levels of the central auditory pathway, for example, when the phase-locked spikes of the temporal code are converted to the place code output of neurons sensitive to interaural time differences (ITDs). There is no evidence that coding strategies differ among animals.

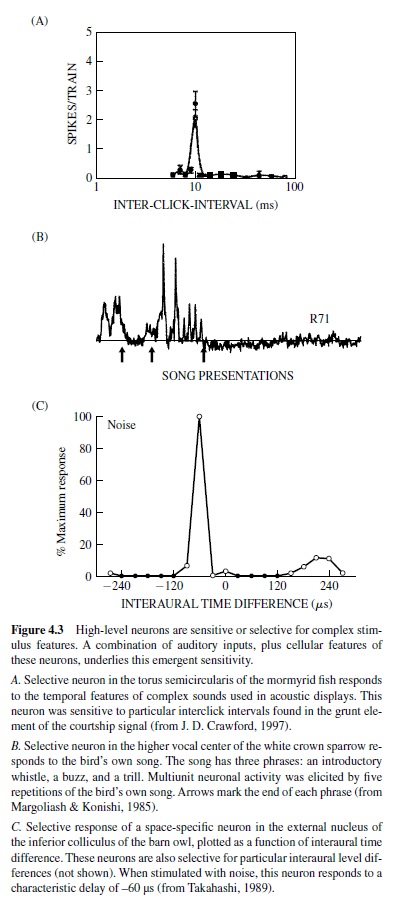

High-level neurons selective for complex stimulus features have been found in every auditory system. These include the song-specific responses found in the song birds, pulse-interval-specific neurons in the midbrain of the mormyrid electric fish, and space-specific neurons in the space-mapped region of the inferior colliculus of the barn owl (Figure 4.3). It is not always clear what combination of inputs and intrinsic properties conveys such specificity.

The basic anatomical organization of the central auditory system does not differ greatly among vertebrates. These connections are reviewed in chapters in The Mammalian Auditory Pathway: Neuroanatomy (Webster, Popper, & Fay, 1993), in Neurobiology of Hearing (Altschuler, Hoffman, Bobbin, & Clopton, 1991), and in The Central Auditory System (Ehret & Romand, 1997). The primary auditory nuclei send a predominantly contralateral projection to the auditory midbrain and in some vertebrates to second-order (olivary) nuclei and lemniscal nuclei. The auditory midbrain generally projects bilaterally to dorsal thalamus and then to hypothalamus and telencephalon. Major differences among central auditory structures appear seldom in evolution. Selective forces driving these changes have been ascribed to the development of new end organs in the auditory periphery and to the increased use of sound (Wilczynski, 1984).

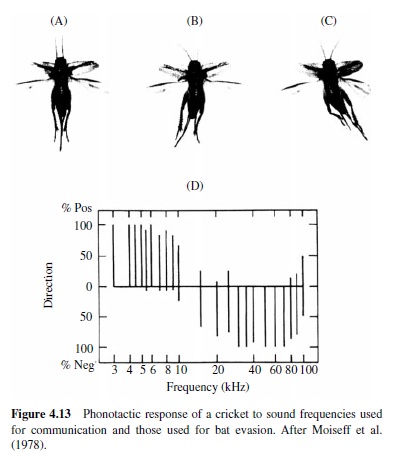

Fay, 1998). The tasks of the insect auditory system are to filter important signals out of the environmental noise including specific frequencies, patterns, and loudness and to determine the location of the sound source. Behavioral studies have shown that crickets can phonotax, or orient toward, sound (as shown later in Figure 3.13). These studies have shown that crickets are sensitive to a wide range of frequencies, with intraspecific signals being most important (Pollack, 1998). They recognize cricket song, particularly pulse period. In the cricket central nervous system, there are neurons that encode the frequency, intensity, direction, and temporal patterns of song. These include multiple pairs of identified interneurons, including the intrasegmental neurons that respond to the temporal pattern of the song (Pollack, 1998).

Fishes

Psychophysical studies have shown that fish hear in the same sense that other vertebrates hear (Fay, 1988). This conclusion is based on behavioral studies of their sensitivity and discriminative acuity for sound. The best sensitivity for hearing specialists is –40 to –50 dB (re 1 dyne per cm2) units, between 200 Hz and 1500 Hz. Fishes without swim bladders or without clear connections between the swim bladder and the ear have best sensitivities between –35 dB and about 10 dB, between 50 Hz and 500 Hz. Sound pressure thresholds for fish that do not use the swim bladder in hearing are inadequate descriptors of sensitivity. The sensitivity of these animals is thus dependent on sound source distance and is better described in terms of acoustic particle motion. Fish ears are also inherently directional (Popper & Fay, 1999).

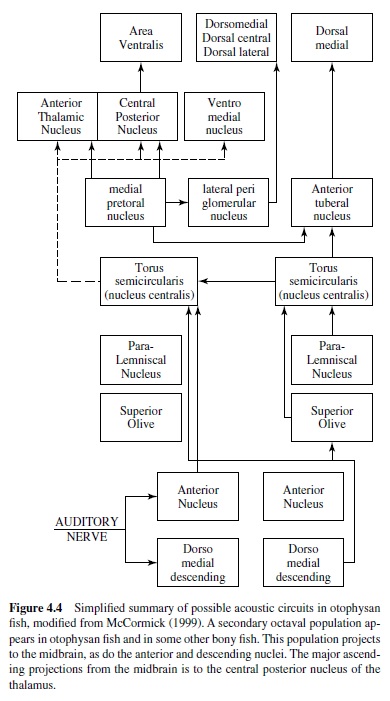

In all vertebrates the auditory nerve enters the brain and divides into two (ascending and descending) branches. In bony fish the ancestral pattern is for auditory and vestibular inputs to project to the anterior, magnocellular, descending and posterior nuclei of the ventral octaval column. Within fish that are auditory specialists, new more-dorsal auditory areas arise from the ventral column (McCormick, 1999). Auditory projections to the descending and anterior octaval nuclei have appeared independently many times in hearing specialists. Both the anterior and descending nuclei project to the auditory area of the central nucleus of the midbrain torus. This area is located medial to the lateral line area. In hearing specialists, secondary octaval and paralemniscal nuclei appear in the hindbrain. The secondary octaval nuclei receive

Insects

Insects hear to obtain information about their environment, so moths and mantises hear the echolocating sounds of bats, whereas crickets localize their mates (see Hoy, Popper, & input from the descending nucleus and project to the central nucleus. Many toral neurons phase-lock to the auditory stimulus, and some exhibit sharp frequency tuning, although the majority of toral units are more broadly tuned (Feng & Schellart, 1999). Some fish use sound for communication, and there are neurons in the central nucleus that are sensitive to the grunts, moans, and howls produced by vocalizing mormyrids (J. D. Crawford, 1997; see Figure 4.3, panel A). The central nucleus has major ascending projections to the dorsal thalamus (central posterior and sometimes anterior). It also projects to the ventromedial nucleus of the ventral thalamus, the posterior tuberculum, and the hypothalamus (McCormick, 1999). The central nucleus and hypothalamus are reciprocally interconnected, which may be related to the role of sound in reproductive and aggressive behavior in some fish. The telencephalon in bony fish is divided into dorsal and ventral areas, with the dorsal area proposed to be homologous to the pallium of other vertebrates, and the ventral area to the subpallium. Two dorsal areas have been shown to respond to sound (within dorsal medial pallium (DM) and dorsal central pallium (DC); see Figure 4.4), but little is know about auditory processing rostral to the midbrain.

Frogs (Anurans)

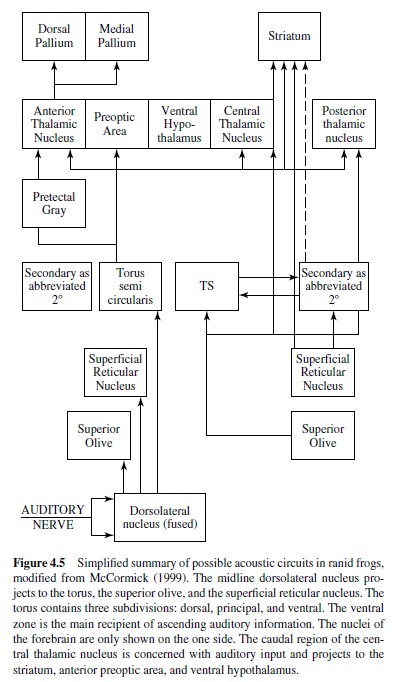

Psychophysical hearing data exist only for the frogs. Many frogs vocalize during mating and other social interactions, and they are able to detect, discriminate, and localize species-specific vocalizations. Behavioral studies have exploited the natural tendency of frogs to orient to sounds broadcast in a more or less natural setting (Zelick, Mann, & Popper, 1999). In frogs afferents project to the specialized dorsal medullary nucleus, and ventrally and laterally to the vestibular column. The dorsal nucleus is tonotopically organized with high-frequency responses from the basilar papilla medial and lower best-frequency responses from the amphibian papilla mapped laterally (McCormick, 1999), as well as typical V-shaped tuning curves (Feng & Schellart, 1999). A major transformation in the signal representation takes place in the dorsal nucleus, with primary like, onset, pauser, and chopper discharge patterns recorded (Feng & Schellart, 1999). These four discharge patterns may correspond to different processing streams or neural codes. The dorsal nucleus projects both directly and indirectly to the auditory midbrain torus, with projections to the superior olive and superficial reticular nucleus (Figure 4.5). The superior olive receives bilateral input from the dorsal nucleus, and many neurons there respond to a wide range of amplitude-modulated stimuli. The ventral zone of the torus receives most of the ascending inputs. It is tonotopically organized; its neurons are often selective to amplitude-modulated stimuli; and more neurons respond to complex sounds than in the medulla (Feng & Schellart, 1999). The torus projects to the central and posterior nuclei of the thalamus and to the striatum. Recordings from the posterior nucleus show sensitivity to the frequency combination present in the frog advertisement calls, and many neurons in central thalamus are broadly tuned and sensitive to specific temporal features of the call (Feng & Schellart, 1999). 
The central thalamus projects to the striatum, the anterior preoptic area, and the ventral hypothalamus. These connections may mediate control of reproductive and social behavior in frogs (Wilczynski et al., 1993). The anterior thalamic nucleus supplies ascending information to the medial pallium, although little is known about pallial auditory processing.

Reptiles

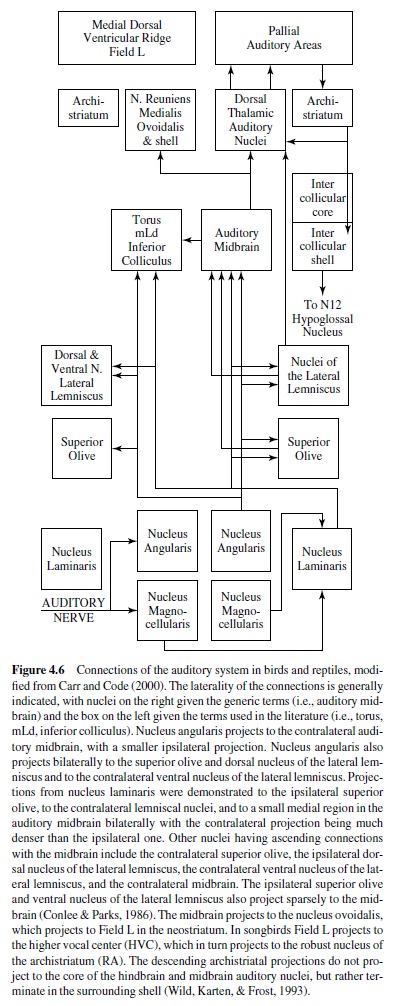

The auditory central nervous system is organized in a common plan in both birds and reptiles, presumably due to the conserved nature of the auditory sense and their close phylogenetic relationship (Carr & Code, 2000; Figure 4.6). The auditory nerve projects to two cochlear nuclei, the nucleus magnocellularis and the nucleus angularis, and sometimes to the second-order nucleus laminaris. The nucleus magnocellularis projects to the nucleus laminaris that in turn projects to the superior olive, to the lemniscal nuclei, and to the central nucleus of the auditory midbrain (torus semicircularis, nucleus mesencephalicus lateralis dorsalis, inferior colliculus). The nucleus angularis projects to the superior olive, to the lemniscal nuclei, and to the central nucleus of the auditory midbrain. The parallel ascending projections of angularis and laminaris may or may not overlap with one another, and probably do overlap in the primitive condition. Hindbrain auditory connections are generally bilateral, although contralateral projections predominate. The lemniscal nuclei project to midbrain, thalamic, and forebrain targets. The central nucleus of the auditory midbrain projects bilaterally to its dorsal thalamic target (nucleus medialis or reuniens in reptiles, nucleus ovoidalis in birds). The auditory thalamus projects to the auditory region of the forebrain (medial dorsal ventricular ridge in reptiles, Field L in birds). Field L projects to other forebrain nuclei that may be involved in the control of song and other vocalizations. Descending projections from the archistriatum to the intercollicular area (and directly to the hypoglossal nucleus in some) appear to mediate vocalization.

The organization of the central auditory pathways in the turtles is considered to be close to the ancestral plan, whereas the brainstem auditory nuclei of lizards and snakes differ somewhat from other reptiles and birds (Gleich & Manley, 2000). This may be because lizards usually have two types of hair cell, tectorial and freestanding. Tectorial hair cells resemble those found in birds and mammals. Auditory nerve fibers that innervate them encode low center frequencies (100–800 Hz) and have sharp asymmetric tuning curves. Fibers from freestanding hair cells have high center frequencies (900–4000 Hz), high spontaneous rates, and broad symmetric tuning curves. Freestanding hair cells may be a uniquely derived feature of lizards that enables this group to respond to higher frequencies. Auditory nerve fibers from tectorial hair cells project to the nucleus magnocellularis and the lateral nucleus angularis. Neurons that contact freestanding hair cells project primarily to the nucleus angularis medialis. There have been very few physiological investigations of the cochlear nuclei in reptiles, although the auditory periphery has been studied extensively (Carr & Code, 2000).

Birds

Birds use sound for communication and hear higher frequencies than turtles, snakes, and lizards (Dooling, Lohr, & Dent, 2000; Klump, 2000). Most birds hear up to 5 kHz to 6 kHz, and the barn owl has exceptional high-frequency hearing, with characteristic frequencies of 9 kHz to 10 kHz in the auditory nerve (Konishi, 1973). Some land birds such as pigeons, chickens, and guinea fowl are also sensitive to infrasound, below 20 Hz (Carr, 1992). Infrasound signals may travel over great distances, and pigeons may use them for orientation.

Cochlear Nuclei Encode Parallel Ascending Streams of Auditory Information

The auditory nerve projects to nucleus magnocellularis and nucleus angularis in the pattern described for the bird and reptile morphotype (as discussed earlier; see Figure 4.6). In the owl, nucleus magnocellularis is the origin of a neural pathway that encodes timing information, while a parallel pathway for encoding sound level and other aspects of the auditory stream originates with nucleus angularis (Takahashi, 1989). Auditory responses include primary-like, onset, chopper, and complex Type IV responses (Köppl, Carr, & Soares, 2001). Recordings in the chicken cochlear nuclei have found a similar but less clear segregation of function (Warchol & Dallos, 1990). The similarities between the owl and the chicken suggest that the functional separation of time and level coding is a common feature of the avian auditory system. The auditory system uses phase-locked spikes to encode the timing of the stimulus (Figure 4.2, panel B). In addition to precise temporal coding, behavioral acuity is also assumed to depend on the activity of neural ensembles (Figure 4.2, panel C). Phase locking underlies accurate detection of temporal information, including ITDs (Klump, 2000) and gap detection (Dooling, Lohr, & Dent, 2000). Neural substrates for phase locking include the specialized end-bulb terminal in the nucleus magnocellularis, termed an end-bulb of Held. This large synapse conveys the phase-locked discharge of the auditory nerve fibers to its postsynaptic targets in the nucleus magnocellularis (Trussell, 1997, 1999). AMPA-type (α-amino-3-hydroxy-5-methyl-4-isoxazolepropionic acid) glutamate receptors contribute to the rapid response of the postsynaptic cell by virtue of their rapid desensitization kinetics (Parks, 2000).
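One common way to quantify how tightly spikes are phase-locked to a tone is vector strength, which treats each spike as a unit vector at its stimulus phase and measures the length of the mean vector. This metric is not named in the text; the sketch below is offered only as an illustration of what "phase-locked" means numerically, and the function name is hypothetical.

```python
import cmath
import math
from typing import Sequence


def vector_strength(spike_times_s: Sequence[float], frequency_hz: float) -> float:
    """Return the vector strength of spikes relative to a tone.

    Each spike is mapped to a unit phasor at its stimulus phase. The result is
    1.0 when every spike falls at the same phase (perfect phase locking) and
    near 0.0 when spikes are spread evenly over the cycle.
    """
    if not spike_times_s:
        raise ValueError("need at least one spike")
    phasors = [cmath.exp(2j * math.pi * frequency_hz * t) for t in spike_times_s]
    return abs(sum(phasors)) / len(phasors)


if __name__ == "__main__":
    tone_hz = 500.0
    locked = [k / tone_hz for k in range(20)]          # one spike per cycle, same phase
    spread = [k / (4 * tone_hz) for k in range(20)]    # spikes at four evenly spaced phases
    print(vector_strength(locked, tone_hz))
    print(vector_strength(spread, tone_hz))
```

A measure of this kind makes it possible to compare the temporal precision of, for example, auditory nerve fibers and their postsynaptic targets at the same stimulus frequency.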

Detection of Interaural Time Difference in Nucleus Laminaris

Nucleus magnocellularis projects to the nucleus laminaris (Rubel & Parks, 1988; Carr & Konishi, 1990). The projections from the nucleus magnocellularis to the nucleus laminaris resemble the Jeffress model for encoding ITDs (see Joris, Smith, & Yin, 1998). The Jeffress model has two elements: delay lines and coincidence detectors. A Jeffress circuit is an array of coincidence detectors, each element of which has a different relative delay between its ipsilateral and contralateral excitatory inputs. Thus, ITD is encoded into the position (a place code) of the coincidence detector whose delay lines best cancel out the acoustic ITD (for reviews, see Joris et al., 1998; Konishi, 2000). Neurons of the nucleus laminaris phase-lock to both monaural and binaural stimuli but respond maximally when phase-locked spikes from each side arrive simultaneously, that is, when the difference in the conduction delays compensates for the ITD (Carr & Konishi, 1990). The cochlear nuclei also receive descending GABAergic inputs from the superior olive that may function as gain control elements or a negative feedback to protect nucleus laminaris neurons from losing their sensitivity to ITDs at high sound intensities (Peña, Viete, Albeck, & Konishi, 1996).
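The two elements of the Jeffress model, delay lines and coincidence detectors, can be illustrated with a toy simulation. The sketch below is a deliberately simplified illustration and not a model of nucleus laminaris: spikes are perfectly phase-locked, times are integer microseconds, each detector is described by a single net internal delay added to the left-ear input, and all names and parameter values are hypothetical.

```python
from typing import Dict, Iterable, List, Sequence


def phase_locked_spikes(period_us: int, n_cycles: int, offset_us: int = 0) -> List[int]:
    """One spike per stimulus cycle, shifted by offset_us (an idealized spike train)."""
    return [offset_us + k * period_us for k in range(n_cycles)]


def coincidence_count(left_us: Sequence[int], right_us: Sequence[int],
                      internal_delay_us: int, window_us: int = 10) -> int:
    """Count left-ear spikes that, after the detector's internal delay, arrive
    within window_us of some right-ear spike."""
    count = 0
    for spike in left_us:
        arrival = spike + internal_delay_us
        if any(abs(arrival - r) <= window_us for r in right_us):
            count += 1
    return count


def jeffress_array(left_us: Sequence[int], right_us: Sequence[int],
                   delays_us: Iterable[int], window_us: int = 10) -> Dict[int, int]:
    """Response of an array of coincidence detectors, keyed by internal delay."""
    return {d: coincidence_count(left_us, right_us, d, window_us) for d in delays_us}


def decode_itd(responses: Dict[int, int]) -> int:
    """Place code: the ITD estimate is the internal delay of the most active detector."""
    return max(responses, key=responses.get)


if __name__ == "__main__":
    # 1 kHz tone (period 1000 us); the sound reaches the right ear 100 us after the left.
    left = phase_locked_spikes(1000, 50)
    right = phase_locked_spikes(1000, 50, offset_us=100)
    responses = jeffress_array(left, right, range(-300, 301, 50))
    print(decode_itd(responses))
```

The internal delays are kept within half a stimulus period on purpose. With periodic, phase-locked inputs, a detector whose delay differs from the true ITD by a whole period would respond equally well, so a wider array would be ambiguous for a pure tone.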

Efferent Control

Efferent innervation of the ear characterizes all vertebrates (Roberts & Meredith, 1992). Cochlear efferent neurons near the superior olive innervate the avian basilar papilla (Code, 1997). Differences in the organization of the avian cochlear efferent and the mammalian olivocochlear systems suggest that there may be significant differences in how these two systems modulate incoming auditory information. Abneural short hair cells in birds have only efferent endings, and these efferents appear to act to inhibit responses of the auditory nerve and raise auditory thresholds.

Lemniscal Nuclei

The lemniscal nuclei are ventral to the auditory midbrain. There are two identified lemniscal nuclei in reptiles (dorsal and ventral) and three in birds (dorsal, intermediate, and ventral). These names are the same as those of the lemniscal nuclei in mammals, but the nuclei should not be considered homologous. The dorsal nucleus (LLDp) mediates detection of interaural level differences (ILDs) in the barn owl (Carr & Code, 2000). Interaural level differences are produced by the shadowing effect of the head when a sound source originates from off the midline (Klump, 2000). Some owls experience larger than predicted differences because their external ears are also oriented asymmetrically in the vertical plane. Because of this asymmetry, ILDs vary more with the elevation of the sound source than with azimuth. This asymmetry allows owls to use ILDs to localize sounds in elevation, and they use ITDs to determine the azimuthal location of a sound. The level pathway begins with the cochlear nucleus angularis, which responds to changing sound levels over about a 30-dB range (Carr & Code, 2000). Each nucleus angularis projects to contralateral LLDp. The cells of LLDp are excited by stimulation of the contralateral ear and inhibited by stimulation of the ipsilateral ear. Mapping of ILDs begins in LLDp, with neurons organized according to their preferred ILD. LLDp neurons do not encode elevation unambiguously and may be described as sensitive to ILD, but not selective because they are not immune to changes in sound level. The encoding of elevation improves in the auditory midbrain.

Midbrain and Emergence of Relevant Stimulus Features

The auditory midbrain receives ascending input and projects to the thalamus. It is surrounded rostrally and laterally by an intercollicular area that receives descending input from the forebrain archistriatum (Puelles, Robles, Martinez-de-la-Torre, & Martinez, 1994). The auditory midbrain mediates auditory processing, whereas the intercollicular area appears to mediate vocalization and other auditory-motor behaviors. The auditory midbrain is divided into an external nucleus and a central nucleus. The nucleus angularis, LLDp, and nucleus laminaris project to regions of the central nucleus (Conlee & Parks, 1986; Takahashi, 1989). Interaural time difference and ILD signals are combined, and the combinations are conveyed to the external nucleus, which contains a map of auditory space (Knudsen, 1980; Konishi, 1986). Studies of the owl auditory midbrain have shown that most neurons are binaural, excited by inputs from the contralateral ear and inhibited by the ipsilateral ear, although bilateral excitation and contralateral excitation are also present. Many neurons are sensitive to changes in interaural level and time difference. The tonotopic organization is consistent with the tonotopy observed in lizards and crocodiles, and low best frequencies are dorsal (Carr & Code, 2000).

Space-specific responses in the barn owl appear to be created through the gradual emergence of relevant stimulus responses in the progression across the auditory midbrain. Information about both interaural time and level differences is conveyed to the external nucleus, and each space-specific neuron receives inputs from a population of neurons tuned to different frequencies (Takahashi, 1989). The nonlinear interactions of these different frequency channels act to remove phase ambiguity in the response to ITDs. The representation of auditory space is ordered, with most of the external nucleus devoted to the contralateral hemifield (Knudsen, 1980). The external nucleus projects topographically to the optic tectum, which contains maps of visual and auditory space that are in register. Activity in the tectum directs the rapid head movements made by the owl in response to auditory and visual stimuli (Knudsen, du Lac, & Esterly, 1987).
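Why convergence across frequency channels removes phase ambiguity can be seen in a small numerical sketch. It assumes each narrowband channel responds as a raised cosine of ITD, so a single channel peaks at the true ITD and at every whole period away from it. The channels are combined here by a plain average, which is a deliberate simplification of the nonlinear interactions described above; the frequencies, ITD grid, and function names are hypothetical.

```python
import math

def channel_response(itd_us, best_itd_us, freq_hz):
    """Raised-cosine ITD tuning of one narrowband channel (0 to 1)."""
    mismatch_s = (itd_us - best_itd_us) * 1e-6
    return 0.5 * (1.0 + math.cos(2 * math.pi * freq_hz * mismatch_s))

def peaks(itds_us, responses, tol=1e-9):
    """Return every ITD whose response ties the maximum (within tol)."""
    top = max(responses)
    return [itd for itd, r in zip(itds_us, responses) if top - r < tol]

TRUE_ITD_US = 50
ITDS_US = list(range(-300, 301, 10))        # tested ITDs, in microseconds
FREQS_HZ = [3000.0, 4000.0, 5000.0, 7000.0]  # assumed channel frequencies

# A single 5-kHz channel: period 200 microseconds, so several equal peaks.
single = [channel_response(itd, TRUE_ITD_US, 5000.0) for itd in ITDS_US]

# Average across channels: only the true ITD is a peak in every channel.
combined = [
    sum(channel_response(itd, TRUE_ITD_US, f) for f in FREQS_HZ) / len(FREQS_HZ)
    for itd in ITDS_US
]

print(peaks(ITDS_US, single))    # ambiguous: several ITDs tie
print(peaks(ITDS_US, combined))  # unambiguous: one ITD remains
```

The side peaks of each channel fall at different ITDs because the periods differ, so they fail to line up when channels are pooled, while the peak at the true ITD is shared by all of them.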

Thalamus and Forebrain

The central nucleus projects to both the external nucleus and the nucleus ovoidalis of the thalamus. Nucleus ovoidalis in turn projects ipsilaterally to Field L. Nucleus ovoidalis has been homologized to the mammalian medial geniculate nucleus (MGv; Karten & Shimizu, 1989). Nucleus ovoidalis is tonotopically organized, with high best frequencies located dorsally and low best frequencies ventrally (Proctor & Konishi, 1997). In the barn owl all divisions of the central nucleus project to ovoidalis, and the physiological responses in ovoidalis reflect this diverse array of inputs. Most neurons had responses to ITD or ILD, at stimulus frequencies similar to those found in the midbrain. In contrast to the mapping found in the midbrain, however, no systematic representation of sound localization cues was found in ovoidalis (Proctor & Konishi, 1997). Nevertheless, sound localization and gaze control are mediated in parallel in the midbrain and forebrain of the barn owl (Carr & Code, 2000).

Field L is the principal target of ascending input from ovoidalis. It is divided into three parallel layers, L1, L2, and L3, with L2 further divided into L2a and L2b. Auditory units in L2 generally have narrow tuning curves with inhibitory sidebands, which might be expected from their direct input from dorsal thalamus, whereas the cells of L1 and L3 exhibit more complex responses in the guinea fowl (Scheich, Langer, & Bonke, 1979). The general avian pattern is that Field L projects to the adjacent hyperstriatum and to other nuclei of the caudal neostriatum. Auditory neostriatal targets of Field L (direct and indirect) include dorsal neostriatum in the pigeon, the higher vocal center (HVC) in songbirds, and ventrolateral neostriatum in budgerigars. These neostriatal nuclei project to the auditory areas of the archistriatum (the intermediate archistriatum, ventromedial part, or AIVM, and the robust nucleus of the archistriatum, or RA), which project back down to the auditory thalamus and midbrain (Carr & Code, 2000).

Song System Is Composed of Two Forebrain Pathways

Many animals make elaborate communication sounds, but few of them learn these sounds. The exceptions are humans and the many thousands of songbird species, as well as parrots and hummingbirds, that acquire their vocal repertoire by learning (Doupe & Kuhl, 1999). Both humans and songbirds learn their vocal motor behavior early in life, with a strong dependence on hearing, both of the adults that they will imitate and of themselves as they practice.

The song system is composed of an anterior and a posterior pathway. The posterior forebrain or motor pathway is composed of a circuit from HVC to the RA and then to the motor nuclei that control the syrinx and respiration (Brainard & Doupe, 2000; Konishi, 1985; Nottebohm, 1980). The posterior pathway is required throughout life for song production. The anterior forebrain pathway is needed during song learning, but not for normal adult song production, and is made up of a projection from HVC to area X to DLM (dorsolateral part of the medial thalamus) to LMAN (lateral magnocellular nucleus of the anterior neostriatum) to RA. The posterior pathway is the presumed site where the motor program underlying the bird’s unique song is stored, whereas the anterior pathway contains neurons that respond to song stimuli, consistent with the idea that this pathway is a possible site of template storage and song evaluation (Margoliash, 1997; Brenowitz, Margoliash, & Nordeen, 1997). The anterior pathway projects to the posterior pathway and is well positioned to provide a guiding influence on the developing motor program. It is also homologous to cortical basal-ganglia circuits in other species (Bottjer & Johnson, 1997).

Mammals

Mammals hear high frequencies and use sound for communication. Humans hear up to 20 kHz, while microchiropteran bats have evolved high-frequency hearing for use in sonar, with characteristic frequencies of 50 kHz to 120 kHz. Some large mammals (elephants) are also sensitive to infrasound, which they use for communication (K. B. Payne, Langbauer, & Thomas, 1986).

Auditory Nerve

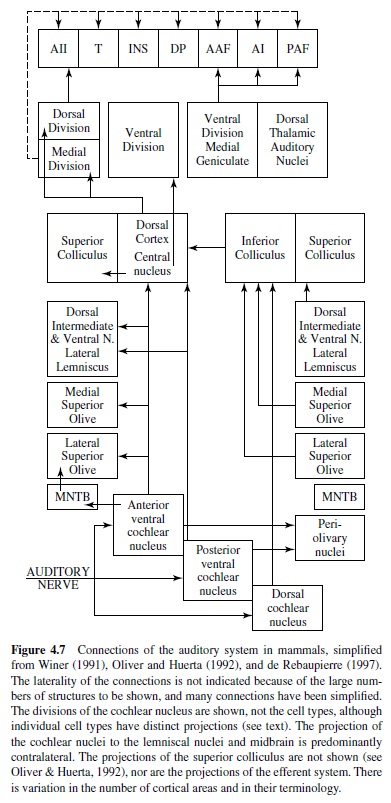

There are two types of auditory nerve afferents in mammals, Type 1 and Type 2. Type 1 afferents receive sharply tuned inputs from inner hair cells and send thick myelinated axons into the brain, where they divide into two. The ascending branch goes to the anterior region of the ventral cochlear nucleus and the descending branch to the posterior region of the ventral cochlear nucleus and to the new dorsal cochlear nucleus. Type 2 afferents are assumed to be unique to mammals, are innervated by outer hair cells, and have thin, unmyelinated axons. They project to granule cell caps of ventral cochlear nucleus (VCN) and dorsal cochlear nucleus (DCN) and are involved in the efferent feedback to the cochlea (Ryugo, 1993). See Figure 4.7.

Tonotopy is preserved in the projections of the auditory nerve. In mammals, the ventral part of each cochlear nucleus receives low center frequency (CF) (apical) input, and dorsal areas receive high CF input. These tonotopic projections are not point to point because each point on the basilar membrane projects to an isofrequency plane across the extent of the cochlear nucleus. Thus the cochlear place representation is expanded into a second dimension in the brain, unlike the visual and somatosensory systems, which are point to point. These tonotopic sheets are preserved all the way to cortex, although it is not clear what features are processed in these isofrequency slabs. Divergent and convergent connections within isofrequency planes may be observed at all levels. The auditory nerve forms different types of terminals onto different cell types in the cochlear nucleus (Ryugo, 1993). End bulbs of Held terminals are formed on bushy cells (as discussed later), whereas more varicose or bouton-like terminals are formed on other cell types in the cochlear nuclei. The auditory nerve appears to use glutamate as a transmitter, often with the postsynaptic cell expressing “fast” AMPA-type glutamate receptors that can mediate precise temporal coding (Oertel, 1999; Parks, 2000; Trussell, 1999).

The Cochlear Nucleus Produces Ascending Parallel Projections

There are four major cell types in the ventral cochlear nucleus (Rhode & Greenberg, 1992; Rouiller, 1997; Young, 1998). First, bushy cells respond in a primary- or auditory-nerve-like fashion to the auditory stimulus. Second, octopus cells respond to onsets or stimulus transients; and third, two classes of multipolar neurons respond principally with “chopper” firing patterns. Bushy cells receive end-bulb inputs from the auditory nerve and exhibit accurate temporal coding. There are two forms of bushy cells, spherical and globular. Spherical cells dominate the anterior ventral cochlear nucleus, respond to lower best frequencies, and project to the medial superior olive, which is sensitive to ITDs. Globular bushy cells by comparison sometimes chop or exhibit onset responses to the stimulus, respond to higher frequencies, and project to the lateral superior olive and the medial nucleus of the trapezoid body. These projections may mediate detection of ILDs. Octopus cells in the posterior ventral cochlear nucleus are multipolar, with thick dendrites that extend across the nerve root (Oertel, Bal, Gardner, Smith, & Joris, 2000). This morphology enables them to integrate auditory nerve inputs across a range of frequencies. Octopus cells encode the time structure of stimuli with great precision and exhibit onset responses to tonal stimuli (Oertel et al., 2000). Onsets play an important role in theories of speech perception and segregation and grouping of sound sources (Bregman, 1990). Two classes of multipolar neurons respond to tones principally with “chopper” firing patterns (Doucet & Ryugo, 1997).

The dorsal cochlear nucleus appears for the first time in mammals, perhaps associated with the development of high-frequency hearing and motile external ears. It is composed of a cerebellum-like circuit in the superficial layers, with projection cells below that receive auditory nerve inputs (Berrebi & Mugnaini, 1991; Young, 1998). Dorsal cochlear nucleus cells exhibit a wide variety of response types, with one theory of function relating to echo suppression. The granule cells in the superficial layers receive ascending somatosensory input that may convey information about head and ear position. The deep portion of the dorsal cochlear nucleus contains fusiform and stellate cell types. Fusiform cells exhibit complex (Type IV) frequency tuning curves, with small areas of excitation at best frequency and at sides. This response is well suited to detecting the notches in sound level created by the pinnae that provide cues for locating sound in elevation (May, 2000).

Binaural Interactions and Feedback to the Cochlea Originate in Periolivary and Olivocochlear Nuclei

The superior olivary complex consists of the lateral and medial superior olivary nuclei and a large number of smaller cell groups known as the periolivary nuclei, which are sources of both ascending and descending projections (Helfert & Aschoff, 1997). All receive input from the cochlear nuclei.

Their functions are largely unknown, except for efferent control of the cochlea and encoding sound level (Warr, 1992). The medial nucleus of the trapezoid body (MNTB) projects to the lateral superior olive, ventral nucleus of the lateral lemniscus, medial superior olive, and other periolivary nuclei. Responses of MNTB cells are similar to those of their primary excitatory input, the globular bushy cell, which connects to the MNTB via an end-bulb synapse. The MNTB cell output forms an important inhibitory input to a number of ipsilateral auditory brain stem nuclei, including the lateral superior olive. MNTB neurons are characterized by voltage-dependent potassium conductances that shape the transfer of auditory information across the bushy cell to MNTB cell synapse and allow high-frequency auditory information to be passed accurately across the MNTB relay synapse (Trussell, 1999).

Two populations of olivary neurons project to the cochlea: lateral and medial (Warr, 1992). Thin olivocochlear fibers arise from the lateral olivocochlear group located ipsilaterally in the lateral superior olive. Thick olivocochlear fibers arise from the medial olivocochlear group located bilaterally in the periolivary nuclei. Although they project primarily to the cochlea, olivocochlear neurons also give off branches to a variety of nuclei in the brainstem, and to inferior colliculus, thus involving auditory and nonauditory nuclei in the olivocochlear reflex system. Olivocochlear neurons can be activated by sound, whereas activation of the medial olivocochlear bundle results in suppression of spontaneous and tone-evoked activity in the auditory nerve.

Olivary Nuclei and Interaural Interactions

The olivary nuclei regulate the binaural convergence of acoustic information and mediate spatial hearing. Neural computations of sound location take place at this first site of binaural convergence. The lateral superior olive encodes ILD, whereas the medial superior olive encodes time differences. Thus an important transformation takes place here. Information conveyed by temporal and rate codes is transformed in the olivary nuclei into labeled line-place principle codes for location.

The lateral superior olive principal cells receive excitatory inputs from ipsilateral globular bushy cells, as well as inhibitory glycinergic inputs onto their cell bodies and proximal dendrites, relayed from the contralateral ear via the MNTB. The MNTB input acts to reverse the sign of bushy cell input from excitatory to inhibitory to make an EI response, that is, one excited (E) by the ipsilateral ear and inhibited (I) by the contralateral ear. Traditionally, the lateral superior olive has been assigned the role of extracting azimuthal angle information of high-frequency sound from ILD. Some sensitivity to time differences has also been observed. Almost all lateral superior olive responses have monotonic rate-level functions, typically with sigmoidal ILD sensitivity functions. In general, as the strength of the contralateral input increases with increasing loudness in the contralateral ear, the maximum rate decreases. Thus the lateral superior olive rate signals a range of ILDs (Kuwada, Batra, & Fitzpatrick, 1997).
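The sigmoidal ILD sensitivity of an EI cell can be illustrated with a logistic function of the level difference between the ears. This is a minimal sketch under stated assumptions: the logistic form, the maximum rate, the midpoint, and the slope are hypothetical illustration values, not measurements from the lateral superior olive.

```python
import math

def lso_rate(ipsi_db, contra_db, max_rate=100.0, midpoint_db=0.0, slope_db=5.0):
    """Firing rate (spikes/s) of a model EI cell as a function of ILD.

    ILD is taken as ipsilateral minus contralateral level in dB. Ipsilateral
    sound excites the cell and contralateral sound inhibits it, so the rate
    rises sigmoidally as the ILD favors the ipsilateral ear.
    """
    ild_db = ipsi_db - contra_db
    return max_rate / (1.0 + math.exp(-(ild_db - midpoint_db) / slope_db))

# Ipsilateral level fixed at 60 dB while the contralateral level increases.
for contra in (40, 50, 60, 70, 80):
    print(contra, round(lso_rate(60, contra), 1))
```

With the ipsilateral level held constant, raising the contralateral level lowers the rate monotonically, so the rate of a single model cell signals a range of ILDs.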

Sensitivity to ITDs originates in the medial superior olive. The organization of the medial superior olive circuit appears to conform to the requirements of the Jeffress model for transforming ITDs into a place code (Joris et al., 1998). The Jeffress model is made up of delay lines and coincidence detectors. Each coincidence detector in the array has a different relative delay between its ipsilateral and contralateral excitatory inputs. Interaural time difference is encoded into the position or place in the array whose delay lines best cancel out the ITD. Neurons of the medial superior olive act as coincidence detectors. They phase-lock to both monaural and binaural stimuli and respond maximally when phase-locked spikes from each side arrive simultaneously, that is, when the difference in the conduction delays compensates for the ITD (Joris et al., 1998). The overall result of this scheme is the creation of an array of cells tuned to specific ITDs and arranged according to their best azimuth. The azimuth of a sound source is coded by the location of maximal activity in the array (Joris et al., 1998).

Auditory Midbrain: Inferior Colliculus and the Emergence of Biologically Important Parameters

The inferior colliculus is the midbrain target of ascending auditory information. It has two major divisions, the central nucleus and dorsal cortex, and both divisions are tonotopically organized. The inputs from brainstem auditory nuclei are either distributed across or superimposed on maps to form what are believed to be locally segregated functional zones for processing different aspects of the auditory stimulus (Ehret, 1975; Oliver & Huerta, 1992). The central nucleus receives both direct monaural input and indirect binaural input. Physiological studies show both binaural and monaural responses (Ehret, 1975).

Casseday and Covey (1996) proposed that tuning processes in the inferior colliculus are related to the biological importance of sounds. Their ideas are summarized here. There is a change in timing properties at the inferior colliculus, from rapid input to slowed output, and they propose that this transformation is related to the timing of specific behaviors. The framework proposed by Casseday and Covey is useful because at least some neurons in the inferior colliculus are tuned to behaviorally relevant stimuli that trigger species-specific behavior, and the processing of these sign stimuli triggers action patterns for hunting, escape, or vocal communication. Evidence for the theory comes from the convergence of parallel auditory pathways at the inferior colliculus, the interaction of the inferior colliculus with motor systems, tuning of auditory midbrain neurons to biologically important sounds, the slow pace of neural processing at the inferior colliculus, and the slow pace of motor output.

Thalamus

Three major features characterize the auditory forebrain (de Rebaupierre, 1997; Winer, 1991). First, there is a primary, lemniscal pathway from the cochlear nuclei to primary auditory cortex (A1) with a systematic representation of tonotopy, binaural signals, and level. Second, a parallel nonprimary pathway arises in midbrain tegmentum, dorsal medial geniculate body, and nonprimary auditory cortex, in which broad tuning curves and nontopical representation predominate. Third, an even more broadly distributed set of connections and affiliations links auditory forebrain with cortical and subcortical components of the limbic forebrain and associated autonomic areas, as well as elements of the motor system that organize behavioral responses to biologically significant sounds (Winer, 1991).

The primary target of the inferior colliculus in dorsal thalamus is the medial geniculate. This nucleus has three subdivisions: medial, ventral, and dorsal. The ventral division receives major ascending input from the central nucleus of the inferior colliculus and contains sharply tuned cells like those of the inferior colliculus. The ventral division is tonotopically organized although the organization is not simple (there is a concentric component with low frequencies in the center; Imig & Morel, 1988). The cells of the dorsal and medial divisions are fairly unresponsive to tones or noise and respond with long latencies, consistent with major projection back from perirhinal cortex. The functional role of the dorsal and medial divisions is not clear, except to note that nonmonotonic (i.e., selective) responses are common there. In the mustached bat (Pteronotus), both medial and dorsal divisions contain fine delay-tuned neurons (Olsen & Suga, 1991; Suga, 1988). Recent studies on the bat’s auditory system indicate that the corticofugal system mediates a highly focused positive feedback to physiologically “matched” subcortical neurons, and widespread lateral inhibition to physiologically “unmatched” subcortical neurons, to adjust and improve information processing (Suga, Gao, Zhang, Ma, & Olsen, 2000). Suga proposed that the processing of complex sounds by combination-sensitive neurons is heavily dependent on the corticofugal system.

Auditory Cortex

The greatest difference between mammals and other vertebrates is the evolution of the cortex in place of the nuclear organization of the forebrain (Karten & Shimizu, 1989). Whether or not this new structure has facilitated the creation of new auditory areas, new areas are a feature of the mammalian auditory specialists. Whereas primitive mammals like tenrecs have few auditory areas (Krubitzer, Kunzle, & Kaas, 1997), there are at least seven tonotopic maps in the cat and the mustached bat. In the cat these areas include A1; secondary auditory cortex (A2); the anterior auditory field; posterior, ventral, and ventral posterior areas as well as insular; Te; and other anterior ectosylvian fields with uncertain tonotopy (de Rebaupierre, 1997). A1 and A2 share physiological features of alternating bands of EE and EI neurons that are mapped orthogonal to the tonotopic axis. In primary auditory cortex responses tend to be more transient than auditory nerve responses, and they show inhibition away from their best frequency. Most responses are binaural and similar to responses from brainstem. These binaural responses are generated by short-latency excitatory input from the contralateral ear, and ipsilateral input that might be E, I, or mixed, with the best frequency matched to the input from the contralateral ear.

In the mustached bat there are at least seven cortical areas, many of which are related to processing echolocation signal components. A1 systematically represents frequency with an enlarged representation of the Doppler shift compensation region (pulse frequency range), mapping not just frequency, but amplitude as well. There are several maps of echo delay, for delays that represent near, midrange, and far targets. There is also a map of the contralateral azimuth and a second Doppler shift region. Suga (1988) used these data to construct a parallel-hierarchical scheme for signal processing. Because the different constant-frequency and frequency-modulated components differ in frequency, they are processed in parallel channels in the auditory system by virtue of their tonotopy. In the cortex, however, combination-sensitive neurons may be created by comparing across frequency channels (Suga, 1988).

Auditory Function and Behavior

Absolute Auditory Thresholds

A fundamental behavioral measure of hearing sensitivity is the audiogram, a plot of detection thresholds for pure tones across the audible spectrum, which provides an estimate of the frequency range and limits of an animal’s hearing. These parameters are influenced by the features of the peripheral auditory system (Wever, 1949), and in mammals these features include the size and impedance-matching characteristics of the middle ear system (Dallos, 1973; Geisler & Hubbard, 1975; Guinan & Peake, 1967; Møller, 1983; Nedzelnitsky, 1980; Rosowski, 1994), the length and stiffness of the basilar membrane (Békésy, 1960; Echteler, Fay, & Popper, 1994; Manley, 1972), the size of the helicotrema (a small opening at the cochlear apex; Dallos, 1970), the density of hair cells (Burda & Voldrich, 1980; Ehret & Frankenreiter, 1977), and the density of hair cell innervation (Guild, Crowe, Bunch, & Polvogt, 1931) along the basilar membrane. In other animals, features of the auditory periphery also play a role in defining the limits and range of hearing in birds (Gleich & Manley, 2000), fish (Popper & Fay, 1999), anurans (Capranica & Moffat, 1983; Lewis, Baird, Leverenz, & Koyama, 1982), and insects (Yager, 1999).

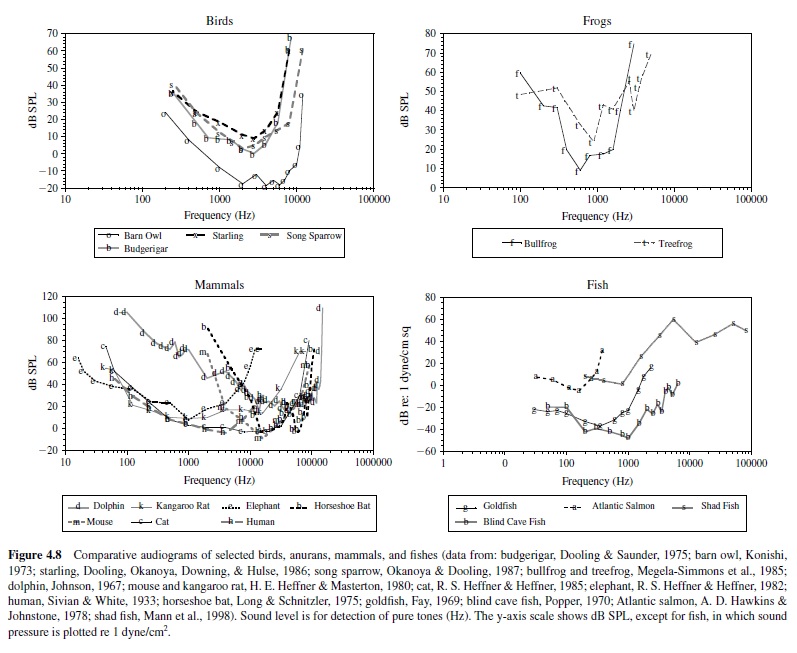

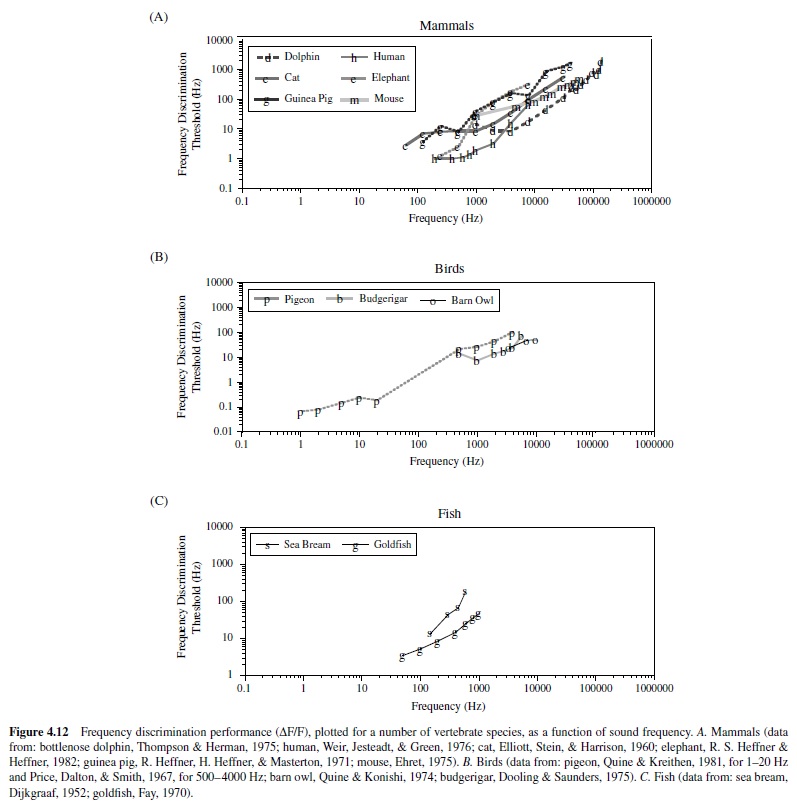

For most vertebrates, the audiogram is a smooth U-shaped function; thresholds are high at the lower and upper frequency boundaries compared to intermediate frequencies where thresholds are lowest (see, e.g., Masterton, Heffner, & Ravizza, 1969). Mammals differ greatly in the octave range over which they can hear, from as little as 3.5 octaves in the mouse and horseshoe bat to over 8 octaves in the dolphin, raccoon, cat, and kangaroo rat. The smaller octave range of hearing in the mouse and bat nonetheless covers a large frequency bandwidth, as these animals hear ultrasound, in which a single octave (frequency doubling) spans a minimum of 40 kHz. Humans show greatest sensitivity between 1 kHz and 4 kHz and hear over a range of about seven octaves (Sivian & White, 1933; see Figure 4.8).
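The distinction between octave range and frequency bandwidth follows from the definition of an octave as a doubling of frequency, so the number of octaves between two frequencies is the base-2 logarithm of their ratio. The short sketch below works through that arithmetic; the function names are hypothetical, and the 40 kHz to 80 kHz band is an illustrative example rather than a measured hearing range.

```python
import math

def octave_span(f_low_hz, f_high_hz):
    """Number of octaves (frequency doublings) between two frequencies."""
    if f_low_hz <= 0 or f_high_hz <= 0:
        raise ValueError("frequencies must be positive")
    return math.log2(f_high_hz / f_low_hz)

def bandwidth_hz(f_low_hz, f_high_hz):
    """Width of the band in hertz."""
    return f_high_hz - f_low_hz

# One ultrasonic octave starting at 40 kHz spans 40 kHz of bandwidth.
print(octave_span(40_000, 80_000), bandwidth_hz(40_000, 80_000))

# The two octaves from 1 kHz to 4 kHz span only 3 kHz of bandwidth.
print(octave_span(1_000, 4_000), bandwidth_hz(1_000, 4_000))
```

Because octaves are ratios, the same octave count covers far more hertz at high frequencies than at low ones.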

Some animals show enhanced sensitivity nested within their range of hearing. For instance, the audiogram of the echolocating horseshoe bat is highly irregular in shape (Long & Schnitzler, 1975). Between 10 kHz and 40 kHz, a plot of threshold change with frequency resembles the standard U-shaped function of most vertebrates, but the range of this animal’s hearing extends far above 40 kHz. Threshold declines gradually at higher frequencies between 40 kHz and 70 kHz before rising rapidly at approximately 81 kHz. The audiogram then shows a very sharp peak in sensitivity at about 83 kHz; the auditory threshold at neighboring lower and upper bounding frequencies (81 kHz and 90 kHz) is elevated by about 30 dB. This bat emits echolocation signals adjusted to return at 83 kHz and has evolved a highly specialized auditory system to detect this biologically important sound frequency. The basilar membrane of the horseshoe bat shows considerable expansion of its frequency map in the region that responds to frequencies around 83 kHz, and this magnification is preserved in the tonotopic organization of the ascending auditory pathway. Thus, the unusual shape of this animal’s audiogram reflects an adaptation to facilitate the reception of species-specific acoustic signals (Neuweiler, Bruns, & Schuller, 1980).

Adaptations in the auditory periphery also support specializations for low-frequency hearing. Examples are the kangaroo rat, mole rat, and Mongolian gerbil, small mammals that have evolved enlarged external ears and middle ear cavities that serve to collect and amplify low-frequency sounds (A. Ryan, 1976; H. E. Heffner & Masterton, 1980; Ravicz, Rosowski, & Voight, 1992). In fact, these organs take up roughly two thirds of the cross section of the Mongolian gerbil’s head. These animals rely on low-frequency hearing to receive warning signals from conspecifics that must carry over long distances (Ravicz et al., 1992). Elephants also hear very low frequencies (65 dB SPL at 16 Hz; R. S. Heffner & Heffner, 1982), which is presumably important to long-distance communication through infrasound (K. B. Payne et al., 1986).

In vertebrate animals whose hearing sensitivity spans a narrow frequency range, a communication receiver may appear to dominate the auditory system. The frequency range of maximum sensitivity in birds is about 1 kHz to 5 kHz, with absolute hearing sensitivity approaching 0 dB SPL (Dooling, 1980; Dooling et al., 2000). There appears to be a general correspondence between a bird’s peak auditory sensitivity and the average power spectrum of its species-specific song (e.g., canary, budgerigar, field sparrow, red-winged blackbird; Dooling, Mulligan, & Miller, 1971; Dooling & Saunders, 1975; Heinz, Sinnott, & Sachs, 1977; Konishi, 1970), suggesting the relative importance of a communication receiver in the avian auditory system. Nocturnal predators (hawks and owls) generally have lower thresholds than songbirds and nonsongbirds, and they use acoustic signals in part to detect and localize prey. Hearing sensitivity in birds falls off dramatically at 8 kHz to 12 kHz, depending on the species.

Behavioral measures of hearing in anurans (frogs and toads) also suggest that a communication receiver dominates the auditory system of these animals, but most data come from experiments that have relied on behavioral responses that the animals normally make in the context of vocal communication. One such technique, evoked calling, exploits the observation that male frogs will vocalize in response to recordings of natural or synthetic conspecific mating calls, while recordings of other species’ calls fail to elicit vocalizations. In the bullfrog the sound pressure level of a species-specific call must be approximately 60 dB SPL to evoke calling (Megela, 1984). Another technique commonly used to measure hearing in frogs is selective phonotaxis, which exploits the observation that a gravid female will approach a speaker that broadcasts either natural or synthetic conspecific mating calls in preference to one that broadcasts other acoustic stimuli. The female green tree frog exhibits selective phonotaxis to pure tone stimuli at frequencies corresponding to the two major spectral peaks of the mating call, 900 Hz and 3000 Hz (Gerhardt, 1974). The minimum sound pressure level that elicits selective phonotaxis from the female green tree frog is approximately 55 dB SPL for a 900-Hz pure tone and 90 dB SPL for a 3000-Hz pure tone (Gerhardt, 1976). With a synthetic mating call (900- and 3000-Hz tones presented together), the phonotaxis threshold is 48 dB SPL (Gerhardt, 1981).

Using a neutral psychophysical technique that does not require behavior in the context of acoustic communication, Megela-Simmons, Moss, and Daniel (1985) measured hearing sensitivity in the bullfrog and green tree frog at frequencies within and outside those used by these animals for species-specific communication. The bullfrog’s audiogram, like that of many other vertebrates, is a U-shaped function, ranging between about 300 Hz and 3000 Hz, with highest sensitivity between 600 Hz and 1000 Hz, where this species’ mating call contains peak spectral energy. By contrast, the green tree frog’s audiogram is a W-shaped function, with highest hearing sensitivity at 900 Hz and 3000 Hz, frequencies where spectral energy in the species-specific mating call is greatest. The differences between the audiograms of the bullfrog and the green tree frog can be attributed to a larger separation of frequency tuning between the two hearing organs in the green tree frog’s auditory periphery. In both species the amphibian papillae respond to frequencies up to about 1200 Hz, but the basilar papilla of the green tree frog resonates to approximately 3000 Hz, higher than the bullfrog’s basilar papilla, which resonates to approximately 1800 Hz (Lewis, Baird, et al., 1982).

The frequency range of hearing is generally largest in mammals, followed by birds, frogs, fish, and insects (e.g., see the goldfish audiogram plotted in Figure 4.8). However, there are some noteworthy exceptions to this trend. One example is the American shad, a fish species that shares its habitat with the echolocating dolphin. The shad can hear sounds over a frequency range from 100 Hz to an astonishing 180 kHz. While this fish’s threshold is higher in the ultrasonic range than in the audible range, this species can detect 100-kHz signals at about 140 dB re 1 µPa (Mann, Lu, Hastings, & Popper, 1998). Although a variety of fish species is subject to predation by dolphins, the shad has apparently evolved ultrasonic hearing to detect the sonar signals of its predator.
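Thresholds in this section are stated in decibels relative to a reference pressure, and the reference matters when comparing values. By convention, dB SPL in air uses 20 µPa, whereas underwater acoustics uses 1 µPa. The sketch below shows the arithmetic for converting a level to a pressure and for restating a level against a different reference; the function names are hypothetical, and the 140 dB input is used only as a worked example.

```python
import math

AIR_REF_PA = 20e-6    # conventional reference for dB SPL in air (20 micropascals)
WATER_REF_PA = 1e-6   # conventional underwater reference (1 micropascal)

def level_to_pressure(level_db, ref_pa):
    """Convert a sound pressure level in dB to an RMS pressure in pascals."""
    return ref_pa * 10 ** (level_db / 20.0)

def rereference(level_db, old_ref_pa, new_ref_pa):
    """Express the same pressure as a level relative to a different reference."""
    return level_db - 20.0 * math.log10(new_ref_pa / old_ref_pa)

# 140 dB re 1 micropascal corresponds to a pressure of about 10 Pa.
print(level_to_pressure(140.0, WATER_REF_PA))

# The same pressure restated relative to 20 micropascals (about 26 dB lower).
print(round(rereference(140.0, WATER_REF_PA, AIR_REF_PA), 2))
```

Changing the reference only restates the same pressure. It does not by itself make underwater and airborne thresholds comparable, because the two media also differ in acoustic impedance.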

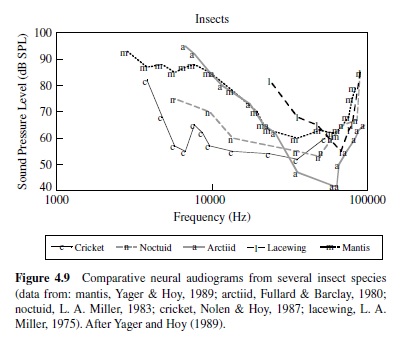

The importance of audition for the evasion of predators is well illustrated by insects that have evolved hearing for the evasion of echolocating bats. The hearing range and sensitivity in insects are often inferred from responses of auditory neurons, and many hear ultrasonic frequencies, which are produced by echolocating bats as they hunt insect prey (see Figure 4.9). Examples of insects that hear ultrasound include the praying mantis (a single ear located on the midline of the ventral thorax; Yager & Hoy, 1986), green lacewings (ears on the wings; L. A. Miller, 1970, 1984), noctuid moths (ears on the dorsal thorax; Roeder & Treat, 1957), hawk moths (ear built into mouthparts; Roeder, Treat, & Vandeberg, 1970), Hedyloidea butterflies (ears at the base of the forewings; Yack & Fullard, 1999), crickets (prothoracic tibia; Moiseff, Pollack, & Hoy, 1978; Oldfield, Kleindienst, & Huber, 1986), and tiger beetles (ears on the abdomen; Spangler, 1988; Yager, Cook, Pearson, & Spangler, 2000; Yager & Spangler, 1995). Generally, insect auditory thresholds in the ultrasonic range are high, at or above 50 dB SPL, and the frequency range of hearing is typically one to two octaves (Yager, 1999).

Examples also exist for insect sound detection in the human audio range, and often (but not exclusively), low-frequency hearing supports species-specific acoustic communication. Crickets and bush crickets have ears on the proximal tibiae of the prothoracic legs, and the low-frequency range of a large set of auditory receptors corresponds with the spectral content of their species-specific communication calls, generally between 2 kHz and 6 kHz (Imaizumi & Pollack, 1999; Michelsen, 1992; Pollack, 1998).

Masked Auditory Thresholds

When an acoustic signal coincides with interfering background noise, its detection may be partially or completely impaired. The process by which one sound interferes with the detection of another is called masking. Several stimulus parameters influence the extent to which masking occurs, including the relation among the temporal structure, amplitude, and frequency composition of the signal and the masker (e.g., Jeffress, 1970; Scharf, 1970). Predictably, the more similar the temporal and spectral characteristics of the masker are to those of the signal, the more effectively it interferes with the detection of the signal (e.g., Jesteadt, Bacon, & Lehman, 1982; Small, 1959; Vogten, 1974, 1978; Wegel & Lane, 1924). And when the sound pressure level of the masker increases, so does the detection threshold of the signal (e.g., Egan & Hake, 1950; Greenwood, 1961a; J. Hawkins & Stevens, 1950; B. C. J. Moore, 1978; Vogten, 1978; Zwicker & Henning, 1984).

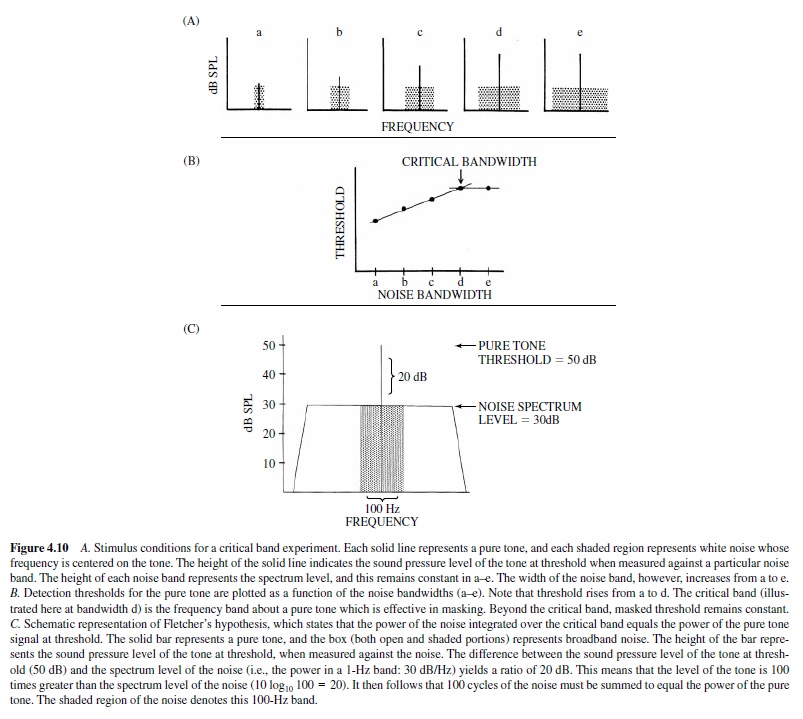

If a masking stimulus is broadband white noise, only a portion of the noise band actually contributes to the masking of a pure tone stimulus. This was originally demonstrated by Fletcher (1940), who measured detection thresholds in humans for pure tones against white noise of varying bandwidths. In this experiment, noise bands were geometrically centered at the frequency of a test tone. The spectrum level of the noise (i.e., the power of the noise in a 1-Hz band) remained constant, but as the bandwidth varied, so did its total power. Because the total power of white noise is proportional to its bandwidth, it is perhaps not surprising that the threshold for detecting the pure tone increased as the noise band widened. The interesting observation was, however, that the detection threshold for the pure tone increased as the noise band increased only up to a critical value, beyond which the threshold remained constant. Fletcher termed this value the critical band, the frequency region about a pure tone that is effective in masking that tone. This effect is illustrated in Figure 4.10, panel A.

Figure 4.10 presents a schematic representation of the stimulus conditions in a critical band experiment. The solid bar in each graph (a–e) represents a pure tone of a fixed frequency, and the shaded area represents white noise, centered at the frequency of the tone. The spectrum level of the noise in each graph is the same; however, the bandwidth increases from a to e. Accordingly, the total power of the noise also increases from a to e. The height of each bar indicates the level of the pure tone at threshold, when measured against the noise. From a to d the height of the bar increases, indicating that a higher amplitude tone is required for detection as the noise band widens. However, in e the height of the bar is the same as that in d, even though the bandwidth of the noise has again increased. Below (B) the amplitude of the pure tone at threshold is plotted for each of the five noise bandwidths. This figure summarizes the data presented earlier, showing that threshold increases up to bandwidth d and thereafter remains constant. The breakpoint in the function at bandwidth d represents the critical band.
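The plateau described above can be reproduced with a short numerical sketch. It assumes an idealized power-summation model in which only the noise falling inside the critical band contributes to masking. The function names, the five bandwidths standing in for conditions a to e, the 30-dB spectrum level, and the 100-Hz critical band are illustrative assumptions, not values taken from Fletcher's experiment.

```python
import math


def noise_total_level_db(spectrum_level_db, bandwidth_hz):
    """Total level of flat-spectrum noise: spectrum level plus 10*log10(bandwidth)."""
    return spectrum_level_db + 10.0 * math.log10(bandwidth_hz)


def masked_threshold_db(spectrum_level_db, bandwidth_hz, critical_band_hz):
    """Tone threshold under an idealized power-summation model (an assumption).

    Only noise inside the critical band masks the tone, so the threshold
    tracks the noise power up to the critical band and is constant beyond it.
    """
    effective_bandwidth_hz = min(bandwidth_hz, critical_band_hz)
    return noise_total_level_db(spectrum_level_db, effective_bandwidth_hz)


if __name__ == "__main__":
    spectrum_level_db = 30.0   # hypothetical noise spectrum level
    critical_band_hz = 100.0   # hypothetical critical band
    # Hypothetical bandwidths standing in for conditions a to e.
    for label, bw in zip("abcde", [10.0, 25.0, 50.0, 100.0, 200.0]):
        total = noise_total_level_db(spectrum_level_db, bw)
        threshold = masked_threshold_db(spectrum_level_db, bw, critical_band_hz)
        print(f"{label}: bandwidth={bw:6.1f} Hz  "
              f"noise total={total:5.1f} dB  threshold={threshold:5.1f} dB")
```

In this sketch the total noise level keeps rising from d to e, while the modeled threshold does not, which is the breakpoint that defines the critical band.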

The importance of the results of critical band experiments rests on the implication that the ear sums the noise power or energy over a limited frequency region. A large critical band indicates that the noise must be summed over a wide frequency band in order to mask the signal and therefore indicates relatively poor frequency resolution of the auditory system. By contrast, a small critical band indicates relatively high frequency resolution.

Fletcher (1940) included in the concept of the critical band a hypothesis proposing that the power of the noise integrated over the critical band equals the power of the pure tone signal at threshold. This implies that a critical band can be determined indirectly by measuring the detection threshold for a pure tone against broadband masking noise, rather than directly by measuring the threshold against a variety of noise bandwidths. If one knows the level of the tone at threshold and the spectrum level of the noise, the ratio of the two provides the necessary information to determine the critical bandwidth based on Fletcher’s assumptions. The level of the tone and the spectrum level of the noise are expressed in logarithmic units (dB); therefore, the ratio of the two is simply dB tone – dB noise spectrum level. Given this ratio, one can then calculate the frequency band over which the noise must be integrated to equal the power of the pure tone. Figure 4.10, panel C, illustrates this analysis.

In Figure 4.10, panel C, the solid line represents a pure tone, and the boxed-in area (both open and shaded portions) represents broadband white noise. The height of the bar denotes the amplitude of the pure tone at threshold (50 dB SPL) when measured against the background noise (spectrum level 30 dB SPL/Hz), and the difference between the two is 20 dB. This difference of 20 dB, in linear units, equals a power ratio of 100 (10 log10 100 = 20 dB). That is, the power of the pure tone is 100 times greater than the power in a 1-Hz band of the noise; therefore, 100 such 1-Hz bands must be added together to equal the power of the tone. The shaded portion of the noise represents the 100-Hz frequency region about the pure tone that contributes to the masking. If Fletcher’s assumptions were correct, this value (100 Hz) should equal the critical band, as measured directly; in accordance with this logic, the ratio of the pure tone at threshold to the spectrum level of the broadband noise has been termed the critical ratio (Zwicker, Flottorp, & Stevens, 1957).
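The arithmetic of the critical ratio can be written out as a minimal sketch. It follows Fletcher's equal-power assumption as described in the text; the function names are illustrative, and the input values are the 50 dB SPL tone and 30 dB spectrum level of the worked example.

```python
def critical_ratio_db(tone_threshold_db, noise_spectrum_level_db):
    """Critical ratio: masked tone threshold minus noise spectrum level, in dB."""
    return tone_threshold_db - noise_spectrum_level_db


def bandwidth_from_critical_ratio_hz(cr_db):
    """Bandwidth (Hz) over which the noise power equals the tone power.

    Assumes Fletcher's equal-power hypothesis: a critical ratio of cr_db
    corresponds to 10 ** (cr_db / 10) bands of noise, each 1 Hz wide.
    """
    return 10.0 ** (cr_db / 10.0)


if __name__ == "__main__":
    cr = critical_ratio_db(50.0, 30.0)           # values from the worked example
    bw = bandwidth_from_critical_ratio_hz(cr)
    print(f"critical ratio = {cr:.0f} dB, estimated bandwidth = {bw:.0f} Hz")
```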

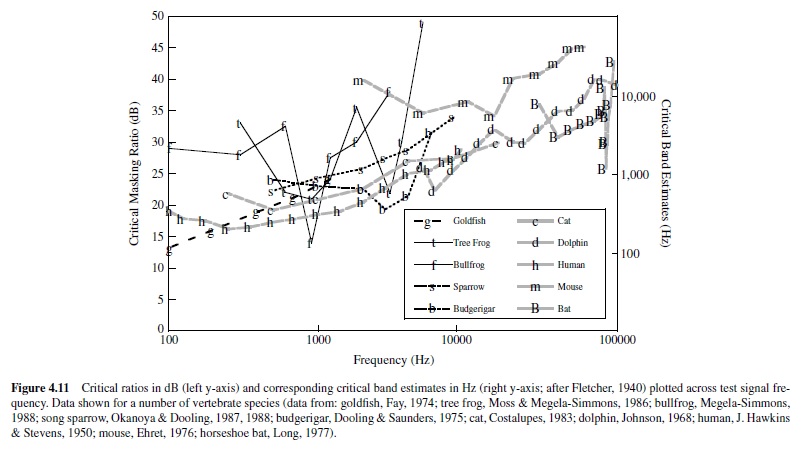

Fletcher’s assumptions have been tested, and it is now well established that critical bands (measured directly) are in fact approximately 2.5 times larger than estimates made from critical ratios (Saunders, Denny, & Bock, 1978; Zwicker et al., 1957). This outcome indicates that Fletcher’s assumptions were not entirely correct; however, the two measures do follow almost parallel patterns of change with signal frequency. Figure 4.11 illustrates this relation, summarizing data collected from several vertebrate species, including humans. The critical ratios have been transformed to estimates of critical bands, following Fletcher’s assumption that the power of the pure tone at threshold equals the power integrated over the critical band of noise. For most species tested, both critical bands and critical ratios increase systematically as a function of signal frequency, and the proportionality between the critical band and the critical ratio exists across a wide range of frequencies. In fact, had Fletcher assumed that the critical band contained 2.5 times the power of the masked tone at threshold (rather than equal power), the two functions would overlap for human listeners at frequencies above 300 Hz.
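The factor of 2.5 can also be expressed in decibels, which makes the offset between the two functions easier to see. The sketch below uses the 2.5 figure reported in the text. The function names and the 20-dB input are assumptions chosen for illustration.

```python
import math

# Ratio of directly measured critical bands to critical-ratio estimates,
# as reported in the text (approximately 2.5).
BAND_TO_RATIO_FACTOR = 2.5


def factor_in_db(factor):
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(factor)


def corrected_critical_band_hz(cr_db, factor=BAND_TO_RATIO_FACTOR):
    """Critical band estimate (Hz) from a critical ratio, scaled by the factor."""
    return factor * 10.0 ** (cr_db / 10.0)


if __name__ == "__main__":
    offset_db = factor_in_db(BAND_TO_RATIO_FACTOR)
    print(f"2.5x in power corresponds to about {offset_db:.1f} dB")
    # Hypothetical critical ratio of 20 dB.
    print(f"corrected band for a 20-dB critical ratio: "
          f"{corrected_critical_band_hz(20.0):.0f} Hz")
```

Read this way, a tone at masked threshold sits roughly 4 dB below the noise power contained in the directly measured critical band, rather than at equal power.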

There are other empirically determined parallels between critical bands and critical ratios. Results of both critical band and critical ratio experiments show that the threshold for detecting a pure tone signal varies with the spectrum level of the masking noise. As the power of the noise increases, there is a proportionate increase in detection threshold (e.g., J. Hawkins & Stevens, 1950; Zwicker et al., 1957). Moreover, experimental findings also indicate that estimates of both the critical band and the critical ratio are invariant with the level of the masking stimulus, except at high noise spectrum levels (exceeding 60–70 dB; Greenwood, 1961a; J. Hawkins & Stevens, 1950).

Prior to Fletcher’s study of the critical band, research on the peripheral auditory system revealed the existence of a frequency map along the cochlear partition (Guild et al., 1931; Steinberg, 1937). High frequencies are coded at the base of the basilar membrane, and lower frequencies are coded progressively toward the apex. This place coding arises from changes in the stiffness of the basilar membrane from base to apex. At the base, where the membrane is stiffest, high frequencies produce maximal displacement; and toward the apex, where relative stiffness decreases, lower frequencies produce maximal displacement (Békésy, 1960).

Fletcher approached his work on the critical band with the assumption that this measure would permit psychophysical estimates of the frequency coordinates of the basilar membrane. Indeed, he found that the function relating stimulus frequency to position along the basilar membrane paralleled the function relating stimulus frequency to the width of the critical band. Both the range of frequencies encoded by a fixed distance along the basilar membrane and the size of the critical band increase as an exponential function of sound frequency (Fletcher, 1940; Greenwood, 1961b; Liberman, 1982). This observation led to the hypothesis that a critical band represents a constant distance along the basilar membrane over which the neural response is integrated (Fletcher, 1940; Zwicker et al., 1957).

Following the early psychophysical studies of critical ratios and critical bands in humans, auditory masking research began on other vertebrate species. These experiments have permitted a comparative approach to the study of frequency selectivity in the auditory system. Remarkably, in a variety of vertebrates (e.g., cat: Watson, 1963; Costalupes, 1983; Pickles, 1975; mouse: Ehret, 1975; chinchilla: J. D. Miller, 1964; rat: Gourevitch, 1965), measures of critical bands and critical ratios show similar frequency-dependent trends, and this pattern resembles that observed in humans—that is, increasing systematically with signal frequency (3 dB/octave). This general pattern has led to the suggestion that frequency selectivity in the auditory systems of vertebrates depends on a common mechanism, the mechanical response of the cochlea (Greenwood, 1961b).
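A slope of 3 dB/octave has a simple interpretation: because 10 log10 2 is about 3 dB, the bandwidth implied by the critical ratio roughly doubles each time signal frequency doubles. The sketch below illustrates this. The reference point (20 dB at 1000 Hz) and the function names are hypothetical and do not represent data from any of the species cited.

```python
import math


def critical_ratio_at(freq_hz, ref_freq_hz, ref_cr_db, slope_db_per_octave=3.0):
    """Critical ratio (dB) at freq_hz for a constant slope in dB per octave."""
    octaves = math.log2(freq_hz / ref_freq_hz)
    return ref_cr_db + slope_db_per_octave * octaves


def bandwidth_ratio_per_octave(slope_db_per_octave=3.0):
    """Factor by which the implied bandwidth grows over one octave."""
    return 10.0 ** (slope_db_per_octave / 10.0)


if __name__ == "__main__":
    # Hypothetical reference: a critical ratio of 20 dB at 1000 Hz.
    for f in [500.0, 1000.0, 2000.0, 4000.0]:
        cr = critical_ratio_at(f, 1000.0, 20.0)
        print(f"{f:6.0f} Hz: critical ratio = {cr:.1f} dB")
    print(f"bandwidth growth per octave: x{bandwidth_ratio_per_octave():.3f}")
```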

Direct measures of frequency selectivity in single VIIIth nerve fibers differ from those obtained psychophysically, indicating that critical ratios and critical bands are not simple correlates of the tuning curves of primary fibers (Pickles & Comis, 1976). This finding does not rule out the possibility that neural integration along the cochlear partition lays the foundation for frequency selectivity, although it does suggest that other processes, such as the distribution and temporal pattern of neural discharge in the central auditory system, may be involved in frequency discrimination.

Although critical bands and critical ratios increase systematically with signal frequency in most vertebrates, there are noteworthy exceptions. The parakeet shows a U-shaped function; critical ratios are lowest at an intermediate frequency of this animal’s hearing range, and this frequency region corresponds to the dominant frequency components of its vocalizations. Also in this frequency region, the parakeet’s absolute detection thresholds are lowest (Dooling & Saunders, 1975). A second example can be found in the echolocating horseshoe bat, which shows a sharp decline in critical ratio (i.e., a marked increase in frequency resolution) at 83 kHz, relative to neighboring frequencies (Long, 1977). This specialization for frequency resolution at 83 kHz parallels that observed for absolute sensitivity described earlier (Neuweiler et al., 1980).

In the parakeet and the horseshoe bat, the spectral regions of greatest frequency selectivity and absolute sensitivity coincide; however, it is important to emphasize that these two measures of auditory function are not typically related. The shapes of the audiogram and the critical ratio function differ markedly in most animals; at frequencies where absolute sensitivity is relatively high, frequency selectivity is not necessarily also high. Nonetheless, measures of hearing in the parakeet and horseshoe bat suggest that auditory specializations (possibly, e.g., the mechanical response of the cochlea, hair cell density and innervation patterns, tonotopic representation in the central auditory system, etc.) do occur to facilitate discrimination of biologically significant signals from noise.

The shape of the green tree frog’s critical ratio function departs from that of most vertebrates. This animal shows a W-shaped critical ratio function, with lowest critical ratios at 900 Hz and 3000 Hz, corresponding to the dominant spectral peaks of its mating call. The smallest critical ratios obtained in the green tree frog are approximately 22 dB, indicating good resolving power of this animal’s ear at biologically salient frequencies, 900 Hz and 3000 Hz. These data compare closely with estimates from other vertebrates at 900 Hz and 3000 Hz and suggest that the ear of the anuran, despite its distinct morphology, can filter frequency as well as that of other vertebrates, including those that possess basilar membranes (Moss & Simmons, 1986).