Vision For Action

Sensory input received by the visual receptors from an object in space produces a complex pattern of excitation, from which the object properties relevant to different functions have to be extracted. This selection is the basis of a specific mechanism called visuomotor transformation: the shaping of the motor effectors into configurations precisely adapted to capturing, manipulating, using, and transforming visual objects. In primates, the remarkable evolution of the hand into a grasping apparatus represents a decisive acquisition for skilled interaction with objects and for tool use. This research paper focuses on the anatomical and functional analysis of the neural mechanisms involved in visuomotor transformation.

Beginning in the 1970s, anatomical descriptions of the cortical visual pathways in the monkey led to the formulation of the ‘two visual systems’ model (Ungerleider and Mishkin 1982). According to this model, the primary visual cortex in the occipital lobe projects along two main streams, which reach the posterior parietal lobe (the dorsal pathway) and the inferotemporal cortex (the ventral pathway), respectively. Although the two pathways are densely interconnected, they are now viewed as relatively independent functional entities.

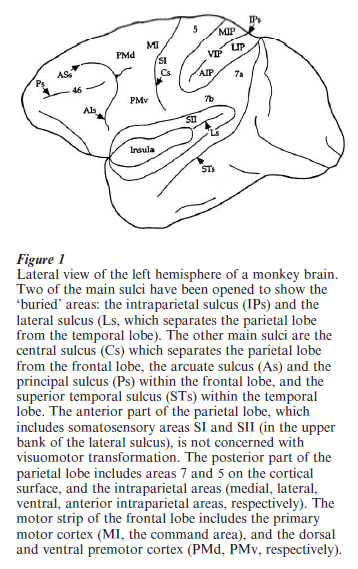

The dorsal pathway, which is the focus of this research paper, was once considered a ‘space channel’: its experimental destruction in the monkey produced spatial disorientation. Later, a more complete description of the anatomical organization of the posterior parietal cortex, outlined in Fig. 1, led to a new conception of its functional role. First, a number of specialized areas, many of them located in the intraparietal sulcus (e.g., LIP, MIP, AIP), were added to the classical cytoarchitectonic areas (e.g., areas 7 and 5). Second, these newly described areas were found to be connected with motor regions of the frontal cortex. These connections appear to be selectively organized: parietal area MIP is preferentially connected with the upper part of the premotor cortex (PMd in Fig. 1), whereas AIP is preferentially connected with its lower part (PMv). Both PMd and PMv project to the motor cortex itself, where the commands to the relevant muscles are generated. Thus, the dorsal pathway, through which visual information is transferred from the primary visual cortex to the premotor and motor cortex, is better conceived as a pathway specialized for visuomotor transformation.

1. A Mechanism For Reaching Objects In Visual Extrapersonal Space

Reaching for a visual object requires that the object-oriented movement be computed in a set of coordinates whose origin lies on the agent’s body (egocentric coordinates). This constraint stems from the fact that, for the arm to be directed at the proper location, object position in space must be reconstructed by adding signals about eye, head, and body positions to the retinal signal. Determining object position in space from its retinal position alone would be inappropriate, because a given location in space corresponds to different retinal positions depending on the positions of the eye, head, and body (Jeannerod 1988). The following paragraphs describe some of the mechanisms, identified in recent experiments in monkeys, that could account for the transformation of retinal (eye-centered) coordinates into egocentric (body-centered) coordinates. These mechanisms are located primarily in the intraparietal sulcus.
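The coordinate chain described above can be sketched as follows. This is a deliberately simplified illustration, not a model taken from the cited work: it assumes that directions can be expressed as horizontal and vertical angles in degrees and that the retinal, eye-in-head, and head-on-body offsets simply add, which ignores the fact that the eye, head, and shoulder rotate about different centers. The names `Direction` and `target_in_body_frame` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Direction:
    """A direction expressed as horizontal and vertical angles, in degrees."""
    azimuth: float
    elevation: float

    def __add__(self, other: "Direction") -> "Direction":
        return Direction(self.azimuth + other.azimuth,
                         self.elevation + other.elevation)


def target_in_body_frame(retinal: Direction,
                         eye_in_head: Direction,
                         head_on_body: Direction) -> Direction:
    """Chain retina -> head -> body by summing angular offsets (simplifying assumption)."""
    return retinal + eye_in_head + head_on_body


straight_ahead = Direction(0.0, 0.0)

# Gaze straight ahead: the target falls 10 deg to the right of the fovea.
seen_a = target_in_body_frame(Direction(10.0, 0.0), straight_ahead, straight_ahead)

# Eyes turned 15 deg to the right: the same target now falls 5 deg to the left of the fovea.
seen_b = target_in_body_frame(Direction(-5.0, 0.0), Direction(15.0, 0.0), straight_ahead)

print(seen_a == seen_b)  # True: different retinal positions, same body-centered location
```

Reading the retinal position alone (10 versus -5 degrees) would send the arm to two different places; adding the eye (and head) position recovers a single egocentric location.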

An influential hypothesis proposes that the basic information about where to reach for an object is the motor command sent to the eyes to move in its direction (the eye position signal). Neurons in area 7a have been shown to encode visual stimuli in relation to the position of the eye in the orbit: they fire preferentially when the object stimulates a given location on the retina (the neuron’s receptive field) and the gaze is fixated in a particular direction (Andersen and Mountcastle 1983). Such neurons could provide a representation of visual space that would subsequently be relayed to the premotor cortex for guiding the arm reach. This mechanism appears compatible with the organization of visuomotor behavior: the gaze systematically moves first to the location of the target, and the arm movement follows within about 150 ms. A closer look, however, shows that the motor command to the arm muscles precedes arm displacement by about the same amount, so that the motor commands to the eye and to the arm are in fact more or less synchronous (Biguer et al. 1982). The arm command therefore cannot simply wait for an eye position signal produced by the completed eye movement. Duhamel and his colleagues found the solution to this enigma. In area LIP they discovered neurons that fire in response to a briefly presented stimulus, not within their receptive field, but within the region of space where their receptive field will fall after an eye movement is made. This effect begins some 80 ms before the eye movement starts (Duhamel et al. 1992): the receptive fields are thus shifted in advance of the eye movement. This remapping of the representation of space in the parietal cortex accounts for the perceptual stability of the visual scene during eye movements. In addition, the existence of this mechanism demonstrates that signals related to the intended eye position are available to the motor cortex. In area VIP, neuron discharges reveal a different form of remapping: the receptive fields remain in the same spatial position irrespective of eye position in the orbit. In this case, the receptive fields appear to be anchored, not to the eye, but to the head, i.e., they move across the retina when the eyes move (Duhamel et al. 1997).
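The three kinds of neuron described above can be contrasted in a toy one-dimensional model, with horizontal angles in degrees. Everything here is an illustrative assumption rather than a fit to the recordings: the Gaussian tuning curves and their widths, the multiplicative eye-position term for the area 7a-like unit (a form often called a gain field), and all function names are hypothetical. The LIP-like unit is also simplified: during remapping it responds only at the future field and ignores any residual response at the current field.

```python
import math
from typing import Optional


def gaussian(x: float, center: float, width: float) -> float:
    """Bell-shaped tuning curve that peaks at 1.0 when x == center."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def area7a_like(stim_head: float, eye: float,
                rf_retinal: float = 10.0, preferred_eye: float = 0.0) -> float:
    """Eye-centered receptive field whose response is scaled by eye position in the orbit."""
    retinal = stim_head - eye  # where the stimulus lands on the retina
    return gaussian(retinal, rf_retinal, 5.0) * gaussian(eye, preferred_eye, 20.0)


def lip_like(stim_head: float, eye: float,
             planned_saccade: Optional[float] = None, rf_retinal: float = 10.0) -> float:
    """Eye-centered receptive field that is remapped before a planned saccade."""
    if planned_saccade is not None:
        # Predictive remapping: respond where the field will lie after the saccade,
        # i.e. use the intended rather than the current eye position.
        eye = eye + planned_saccade
    return gaussian(stim_head - eye, rf_retinal, 5.0)


def vip_like(stim_head: float, eye: float, rf_head: float = 10.0) -> float:
    """Head-centered receptive field: eye position is accepted but ignored."""
    return gaussian(stim_head, rf_head, 5.0)


# Same retinal stimulation (10 deg), two eye positions: the 7a-like response changes.
print(area7a_like(10.0, eye=0.0), area7a_like(30.0, eye=20.0))
# Before a planned 20 deg saccade, the LIP-like unit responds to a stimulus at 30 deg.
print(lip_like(30.0, eye=0.0), lip_like(30.0, eye=0.0, planned_saccade=20.0))
# The VIP-like unit responds identically whatever the eye position.
print(vip_like(10.0, eye=0.0), vip_like(10.0, eye=20.0))
```

In this sketch only the VIP-like unit keeps its field fixed in head coordinates, which is what makes its field slide across the retina when the eyes move.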

Eye and head position signals, however, would not be sufficient by themselves to generate a directional signal for guiding the arm. Besides the position of the stimulus on the retina and the position of the eye in the orbit, the position of the arm with respect to the body must also be available to the arm motor command. This function is achieved by neurons with receptive fields in two sensory modalities. Neurons in area MIP, for example, respond both to a localized visual stimulus and to a cutaneous stimulation applied to one of the animal’s hands. These bimodal neurons are strongly activated when the monkey reaches with that hand to a visual target within their receptive field (see Andersen et al. 1997, Colby 1998).

Neurons located in motor areas outside the parietal cortex also show bimodal responses. Graziano and his colleagues found bimodal neurons in the ventral premotor cortex: these neurons, which also encode the direction of arm movements, have tactile receptive fields and visual receptive fields that extend from the tactile area into adjacent space. For neurons with a tactile receptive field on the arm, the visual receptive field moves when the arm is displaced (Graziano et al. 1994). These arm-anchored visual receptive fields encode stimulus location in body (arm) coordinates and may thus provide a solution for the directional coding of reaching. How this premotor mechanism relates to parietal mechanisms, and what role the connections between parietal areas and the premotor cortex play, remain unclear. It is likely that, instead of a single directional signal used for all purposes, multiple transformations and multiple spatial maps are generated, each adapted to a specific purpose: guiding the eye, guiding the arm, stabilizing the visual world, etc.
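An arm-anchored visual receptive field of the kind reported by Graziano et al. (1994) can be sketched by defining the field relative to the arm rather than to the retina. The 2-D workspace coordinates in centimeters, the 5 cm offset of the visual field from the tactile field, the Gaussian fall-off, and the name `arm_centered_response` are hypothetical choices made for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) position in the horizontal workspace, in cm


def arm_centered_response(stimulus: Point, arm: Point,
                          rf_offset: Point = (0.0, 5.0),
                          width_cm: float = 5.0) -> float:
    """Visual response of a hypothetical bimodal neuron whose visual field
    extends from a tactile field on the arm into the adjacent space."""
    # The visual receptive field is centered at a fixed offset from the arm,
    # so it is carried along whenever the arm moves.
    dx = stimulus[0] - (arm[0] + rf_offset[0])
    dy = stimulus[1] - (arm[1] + rf_offset[1])
    return math.exp(-(dx * dx + dy * dy) / (2.0 * width_cm ** 2))


# A stimulus just beyond the arm drives the neuron wherever the arm is...
print(arm_centered_response((0.0, 5.0), arm=(0.0, 0.0)))    # 1.0
print(arm_centered_response((20.0, 5.0), arm=(20.0, 0.0)))  # 1.0
# ...whereas the same spatial location is ignored once the arm has moved away.
print(arm_centered_response((0.0, 5.0), arm=(20.0, 0.0)) < 0.01)  # True
```

Because the field is expressed relative to the arm, its output already specifies where a stimulus lies with respect to the limb that must move, which is the property that makes such cells candidates for the directional coding of reaching.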

Data from single-cell recordings are supported by human studies, which clearly point to the parietal lobe as the site of visuomotor transformation for reaching. Although human data are less direct than monkey data, they provide deeper insight into the function, because human subjects can perform tasks without first having to be trained on them. Functions such as visually guided reaching can thus be examined in more naturalistic situations. Patients with focal posterior parietal lesions in one hemisphere show marked alterations of reaching movements, usually limited to the hand contralateral to the lesion: kinematics are altered, and large reaching errors are observed. This misreaching is more pronounced when the visual target is presented away from the fixation point, and it increases with the distance between target and fixation point (e.g., Milner et al. 1999). These clinical findings are corroborated by brain imaging studies (based on changes in local cerebral blood flow) in normal subjects pointing at visual targets. The activated area, in the parietal lobe contralateral to the pointing hand, corresponds closely to the location of the lesions that produce misreaching (Faillenot et al. 1997). Finally, the involvement of the posterior parietal cortex in visuomotor control is confirmed by transcranial magnetic stimulation (TMS). This technique delivers, via magnetic induction, very brief single pulses to a given cortical zone. When TMS was applied to the posterior parietal cortex shortly after the onset of a reaching movement directed at a visual target, it prevented the online corrections needed to reach the target. TMS affected only the arm contralateral to the stimulated cortex, which excludes the possibility that the stimulation perturbed visual processing of target location: only the adaptation of the motor command to the visual position of the target was perturbed (Desmurget et al. 1999).

2. The Case Of Hand And Finger Movements

Grasping is often the ultimate goal of reaching. For this reason, the two functions can hardly be dissociated. Grasping, however, relies on a rather different system from reaching: it involves distal segments, multiple degrees of freedom, and heavy reliance on tactile function. It consists of an ensemble of visuomotor transformations that preshape the hand while it is transported to the object location. Preshaping deals with object features that are not relevant to reaching, such as object size, shape, or orientation (Jeannerod et al. 1995).

Indeed, anatomical data suggest that the cortical pathway that connects posterior parietal and premotor finger areas and controls grasping is separate from the pathway controlling reaching. Neurons related to hand movements were identified by Sakata and his colleagues (1995) in a small zone within the anterior part of the intraparietal sulcus, corresponding to an area (AIP) closely connected with the ventral part of the premotor cortex (PMv in Fig. 1). AIP neurons were recorded in monkeys trained to manipulate various types of objects, each of which elicited a different motor configuration of the hand; most of these neurons were selective for grasping objects of a given shape. Task-related neurons were classified as ‘motor dominant’ or ‘visual dominant’ according to whether their discharge was related more to the action of grasping or to the inspection of the object. Most importantly, neuron activity was not influenced by changing the position of the object in space, which suggests that these neurons were related to distal hand and finger movements (for which spatial position is irrelevant) rather than to proximal (reaching) movements of the arm (Sakata et al. 1995). Finally, a distinct population of neurons sensitive to the 3-D orientation of the longitudinal axis of visual stimuli was found in the caudal part of the intraparietal sulcus. It is likely that these neurons process the 3-D characteristics of the object and send the output of this processing to AIP.
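The key observation, that AIP activity depends on the shape of the object but not on its position, can be contrasted with a reach-related unit in a toy sketch. The shape labels, response values, and the cosine tuning used for the reach-like unit (a common textbook model of directional tuning, not something reported in the studies cited here) are assumptions for illustration only, as are the function names.

```python
import math


def aip_like(shape: str, direction_deg: float, preferred_shape: str = "ring") -> float:
    """Hypothetical grasp-related unit: selective for object shape, blind to object position."""
    # direction_deg is accepted but deliberately ignored: distal hand shaping
    # does not depend on where the object is.
    return 1.0 if shape == preferred_shape else 0.1


def reach_like(shape: str, direction_deg: float, preferred_direction_deg: float = 0.0) -> float:
    """Hypothetical reach-related unit: cosine-tuned to direction, blind to shape."""
    return max(0.0, math.cos(math.radians(direction_deg - preferred_direction_deg)))


for shape, direction in [("ring", 0.0), ("ring", 60.0), ("cube", 0.0)]:
    print(shape, direction, aip_like(shape, direction), round(reach_like(shape, direction), 2))
```

Moving the object changes only the reach-like response, and changing its shape changes only the AIP-like response, which mirrors the dissociation suggested by the recordings.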

Transient inactivation of the AIP region, by locally injecting a GABA agonist (muscimol), produces a subtle change in the performance of visually guided movements during grasping. Lack of preshaping and grasping errors are observed in tasks requiring a precision grip. In addition, there is a clear-cut dissociation of the muscimol effects on grasping, which is altered, and reaching, which is preserved. These results (Gallese et al. 1994) support the view that these parietal neurons play a specific role in the visual guidance of grasping.

In humans, clinical observations also stress the role of the posterior parietal cortex in controlling the visuomotor action of grasping. During prehension, patients with parietal lesions show little or no preshaping with their contralateral hand; they tend to open their finger grip too wide with respect to object size and to close it only when their fingers come into contact with the object; the opposition axis (the axis passing through the two opposing fingertips and the center of gravity of the object) is improperly oriented, which results in misplacement of the fingertips on the object surface (Jeannerod et al. 1994). In normal subjects, the brain imaging study mentioned above (Faillenot et al. 1997) revealed an area specifically involved in grasping, at the ventral extremity of the intraparietal sulcus and including part of the somatosensory area S II. This cortical zone likely contains the human homologue of monkey area AIP.

An intriguing question remains: in which system of coordinates are finger movements coded during grasping? The main aspect of grasping, grip formation, has been described using parameters such as grip size, orientation of the opposition axis, and number of fingers involved, which, arguably, should not depend on the object’s position in space. The same holds for another aspect of grasping, the encoding of grasp and lift forces: these parameters depend on visual cues about object weight, for which object location is irrelevant. There are limits to this reasoning, however. Reaching and grasping cannot be considered in isolation, as they are two aspects of the same action, reaching to grasp an object. The need for coordination between them becomes apparent when the orientation of the opposition axis is measured during grasping. To achieve a stable grasp, the position of the fingertips on the object surface should be determined only by object shape and 3-D characteristics. Paulignan et al. (1997), however, using upright cylinders as targets (i.e., objects affording many possible positions for the fingertips), showed that the orientation of the opposition axis changed as a function of cylinder position in the workspace. This result indicates that the action of grasping must encode not only finger movements but also movements of the proximal joints: because of the biomechanical limitations of the arm, the configuration of the upper limb cannot be the same when grasping the same object at two different locations.
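One way to quantify the orientation of the opposition axis is to compute the angle of the line joining the two fingertip contact points in the horizontal plane. The functions below are a generic sketch under that assumption, not the measurement procedure of Paulignan et al. (1997); coordinates in centimeters, the contact points, and the function names are hypothetical. Because an axis has no direction, angles are folded into the range [0, 180) degrees.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # fingertip contact point in the horizontal plane, in cm


def opposition_axis_deg(thumb: Point, index: Point) -> float:
    """Orientation of the thumb-index opposition axis, in degrees within [0, 180)."""
    angle = math.degrees(math.atan2(index[1] - thumb[1], index[0] - thumb[0]))
    return angle % 180.0  # swapping the two fingers must give the same axis


def axis_difference_deg(a: float, b: float) -> float:
    """Smallest angle between two axes (never more than 90 degrees)."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


# Hypothetical contact points, relative to the cylinder's center, for two placements
# of the same upright cylinder in the workspace.
placement_a = opposition_axis_deg(thumb=(-1.0, -1.0), index=(1.0, 1.0))
placement_b = opposition_axis_deg(thumb=(0.0, -1.5), index=(0.0, 1.5))
print(placement_a, placement_b, axis_difference_deg(placement_a, placement_b))
```

For a cylinder, any axis through its center gives a stable grasp, so a systematic change in this angle with object location points to constraints coming from the arm rather than from the object.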

Even though reaching and grasping are not functionally independent (despite the segregation of their anatomical pathways), they process different types of information and operate at different rates. Experiments using visual ‘perturbations’ synchronized with movement onset clearly demonstrate this difference (Paulignan et al. 1991a, 1991b). If, for example, the target object jumps (by means of an optical device) by 10 degrees to the right as the subject starts reaching toward it, a corrective movement is produced so that the new target is reached accurately: the first kinematic evidence of correction appears about 100 ms after the perturbation. If, in another experiment, the object stays at the same location but changes size (e.g., becomes larger) as the subject starts to move, it takes at least 300 ms for the finger grip to adjust to the new object size. This sharp contrast between the timing of the two types of correction reflects the functional differences between the two components of the action. Whereas fast execution of reaching has an obvious adaptive value (e.g., for localizing and catching moving targets), this is not the case for grasping, whose function is to prepare fine manipulation of the object once it has been caught.

3. Conclusion

Posterior parietal cortex thus appears to be a critical zone for organizing object-oriented action, whether movements are executed by the proximal or the distal channel. The specialization of the dorsal visual pathway for visuomotor transformation complements that of other brain regions, located mostly within the ventral pathway and specialized for object identification and recognition. This suggests a dual mode of processing for object properties such as spatial location, orientation, shape, or size. Perception requires that object properties be processed within an allocentric frame of reference, that is, one that is independent of the perceiver and preserves the relationships between objects within the visual array. Action, by contrast, as shown in this research paper, requires that information be processed within an egocentric frame of reference, in which everything in the visual array that is not the target of the movement is ignored.
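The contrast between the two frames of reference can be made concrete with a small sketch. It assumes a 2-D scene, a perceiver who only translates (no rotation), and hypothetical object names and coordinates in centimeters; the point is only that egocentric coordinates change when the perceiver moves, whereas allocentric relations between objects do not.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) in cm


def egocentric(obj: Point, perceiver: Point) -> Point:
    """Object position relative to the perceiver's body (frame used for action)."""
    return (obj[0] - perceiver[0], obj[1] - perceiver[1])


def allocentric(obj: Point, landmark: Point) -> Point:
    """Object position relative to another object in the scene (frame used for perception)."""
    return (obj[0] - landmark[0], obj[1] - landmark[1])


scene: Dict[str, Point] = {"cup": (30.0, 40.0), "plate": (10.0, 40.0)}

for perceiver in [(0.0, 0.0), (20.0, -10.0)]:
    print("egocentric:", egocentric(scene["cup"], perceiver),
          "allocentric:", allocentric(scene["cup"], scene["plate"]))
# egocentric: (30.0, 40.0) allocentric: (20.0, 0.0)
# egocentric: (10.0, 50.0) allocentric: (20.0, 0.0)
```

A reach to the cup needs the egocentric vector, which must be recomputed after every change of posture; recognizing that the cup stands to the right of the plate needs only the allocentric relation, which survives the perceiver's movement.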

Bibliography:

- Andersen R A, Mountcastle V B 1983 The influence of the angle of gaze upon the excitability of light-sensitive neurons of the posterior parietal cortex. Journal of Neuroscience 3: 532–48

- Andersen R A, Snyder L H, Bradley D C, Xing J 1997 Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annual Review of Neuroscience 20: 303–30

- Biguer B, Jeannerod M, Prablanc C 1982 The coordination of eye, head and arm movements during reaching at a single visual target. Experimental Brain Research 46: 301–4

- Colby C L 1998 Action-related spatial reference frames in cortex. Neuron 20: 15–24

- Desmurget M, Epstein C M, Turner R S, Prablanc C, Alexander G E, Grafton S T 1999 Role of the posterior parietal cortex in updating reaching movements to a visual target. Nature Neuroscience 2: 563–7

- Duhamel J R, Bremmer F, BenHamed S, Graf W 1997 Spatial invariance of visual receptive fields in parietal cortex neurons. Nature 389: 845–8

- Duhamel J R, Colby C L, Goldberg M E 1992 The updating of the representation of visual space in parietal cortex by intended eye movements. Science 255: 90–2

- Faillenot I, Toni I, Decety J, Gregoire M C, Jeannerod M 1997 Visual pathways for object-oriented action and object recognition. Functional anatomy with PET. Cerebral Cortex 7: 77–85

- Gallese V, Murata A, Kaseda M, Niki N, Sakata H 1994 Deficit of hand preshaping after muscimol injection in monkey parietal cortex. Neuroreport 5: 1525–9

- Graziano M S A, Yap G S, Gross C G 1994 Coding of visual space by premotor neurons. Science 266: 1054–6

- Jeannerod M 1988 The Neural and Behavioural Organization of Goal-Directed Movements. Oxford University Press, Oxford, UK

- Jeannerod M, Arbib M A, Rizzolatti G, Sakata H 1995 Grasping objects: The cortical mechanisms of visuomotor transformation. Trends in Neurosciences 18: 314–20

- Jeannerod M, Decety J, Michel F 1994 Impairment of grasping movements following a bilateral posterior parietal lesion. Neuropsychologia 32: 369–80

- Milner A D, Paulignan Y, Dijkerman H C, Michel F, Jeannerod M 1999 A paradoxical improvement of misreaching in optic ataxia: New evidence for two separate neural systems for visual localization. Proceedings of the Royal Society of London B 266: 2225–9

- Paulignan Y, Frak V G, Toni I, Jeannerod M 1997 Influence of object position and size on human prehension movements. Experimental Brain Research 114: 226–34

- Paulignan Y, Jeannerod M, MacKenzie C, Marteniuk R 1991a Selective perturbation of visual input during prehension movements. II. The effects of changing object size. Experimental Brain Research 87: 407–20

- Paulignan Y, MacKenzie C, Marteniuk R, Jeannerod M 1991b Selective perturbation of visual input during prehension movements. I. The effects of changing object position. Experimental Brain Research 83: 502–12

- Sakata H, Taira M, Murata A, Mine S 1995 Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cerebral Cortex 5: 429–38

- Ungerleider L, Mishkin M 1982 Two cortical visual systems. In: Ingle D J, Goodale M A, Mansfield R J W (eds.) Analysis of Visual Behavior. MIT Press, Cambridge, MA, pp. 549–86