Until recently, most of the scientific understanding of the human capacity for language has come from the study of spoken languages. It has been assumed that the organizational properties of language are connected inseparably with the sounds of speech, and that the fact that language is normally spoken and heard determines the basic principles of grammar, as well as the organization of the brain for language. There is good evidence that structures involved in breathing and chewing have evolved into a versatile and efficient system for the production of sounds in humans. Studies of brain organization indicate that the left cerebral hemisphere is specialized for processing linguistic information in the auditory–vocal mode and that the major language-mediating areas of the brain are connected intimately with the auditory–vocal channel. It has even been argued that hearing and the development of speech are necessary precursors to this cerebral specialization for language. Thus, the link between biology and linguistic behavior has been identified with the particular sensory modality in which language has developed.

Although human evolution has proceeded in conjunction with the thousands of spoken languages the world over, recent research into signed languages has revealed the existence of primary linguistic systems that have developed naturally, independently of spoken languages, in a visual–manual mode. American Sign Language (ASL), for example, a sign language passed down from one generation of deaf people to the next, has all the complexity of spoken languages, and is as capable of expressing science, poetry, wit, historical change, and infinite nuances of meaning as are spoken languages. Importantly, ASL and other signed languages are not derived from the spoken language of the surrounding community: rather, they are autonomous languages with their own grammatical form and meaning. Although it was originally thought that signed languages were universal pantomime, or broken forms of spoken language on the hands, or loose collections of vague gestures, scientists around the world have now found that signed languages spring up wherever there are communities and generations of deaf people (Klima and Bellugi 1988).

One can now specify the ways in which the formal properties of languages are shaped by their modalities of expression, sifting properties peculiar to a particular language mode from more general properties common to all languages. ASL, for example, exhibits formal structuring at the same levels as spoken languages (the internal structure of lexical units and the grammatical scaffolding underlying sentences) as well as the same kinds of organizational principles as spoken languages. Yet the form this grammatical structuring assumes in a visual–manual language is apparently deeply influenced by the modality in which the language is cast (Bellugi 1980).

The existence of signed languages allows us to enquire about the determinants of language organization from a different perspective. What would language be like if its transmission were not based on the vocal tract and the ear? How is language organized when it is based instead on the hands moving in space and the eyes? Do these transmission channel differences result in any deeper differences? It is now clear that there are many different signed languages arising independently of one another and of spoken languages. At the core, spoken and signed languages are essentially the same in terms of organizational principles and rule systems. Nevertheless, on the surface, signed and spoken languages differ markedly. ASL and other signed languages display complex linguistic structure, but unlike spoken languages, convey much of their structure by manipulating spatial relations, making use of spatial contrasts at all linguistic levels (Bellugi et al. 1989).

1. The Structure Of Sign Language

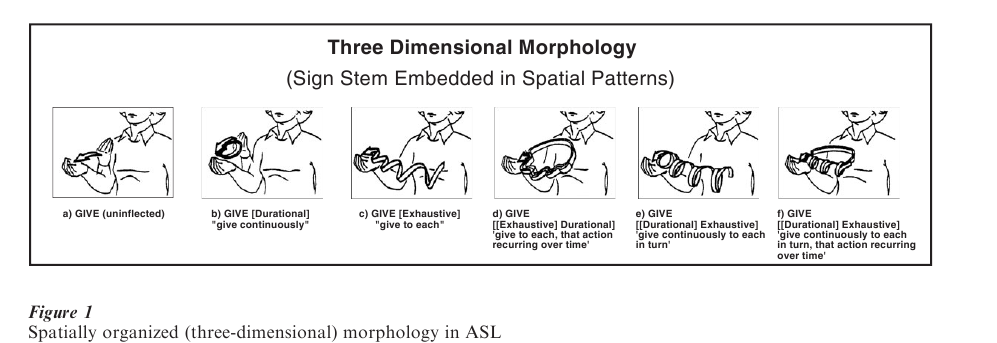

As already noted, the most striking surface difference between signed and spoken languages is the reliance on spatial contrasts, most evident in the grammar of the language. At the lexical level, signs can be separated from one another minimally by manual parameters (handshape, movement, location). The signs for summer, ugly, and dry are just the same in terms of handshape and movement, and differ only in the spatial location of the signs (forehead, nose, or chin). Instead of relying on linear order for grammatical morphology, as in English (act, acting, acted, acts), ASL grammatical processes nest sign stems in spatial patterns of considerable complexity (see Fig. 1), marking grammatical functions such as number, aspect, and person spatially. Grammatically complex forms can be nested spatially, one inside the other, with different orderings producing different hierarchically organized meanings.

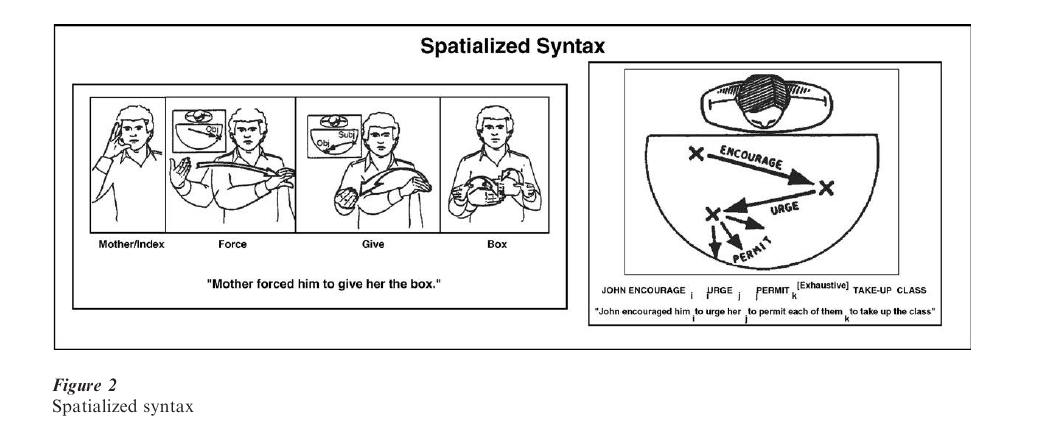

Similarly, the syntactic structure specifying relations of signs to one another in sentences of ASL is also essentially organized spatially. Nominal signs may be associated with abstract points in a plane of signing space, and it is the direction of movement of verb signs between such endpoints that marks grammatical relations. Whereas in English, the sentences ‘the cat bites the dog’ and ‘the dog bites the cat’ are differentiated by the order of the words, in ASL these differences are signaled by the movement of the verb between points associated with the signs for cat and dog in a plane of signing space. Pronominal signs directed toward such previously established points or loci clearly function to refer back to nominals, even with many signs intervening (see Fig. 2). This spatial organization underlying syntax is a unique property of visual-gestural systems (Bellugi et al. 1989).
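The locus-based marking of grammatical relations described above can be illustrated with a toy model. The following Python sketch is only an illustration of the idea, not a linguistic analysis of ASL: the `SigningSpace` class, the locus labels `left` and `right`, the gloss BITE, and the convention that the verb moves from the subject's locus to the object's locus are all assumptions introduced for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SigningSpace:
    """Toy model: nominals are associated with abstract loci in signing space."""
    loci: dict = field(default_factory=dict)  # locus label -> nominal gloss

    def establish(self, nominal: str, locus: str) -> None:
        """Associate a nominal sign with a point (locus) in signing space."""
        self.loci[locus] = nominal

    def interpret_verb(self, verb: str, start: str, end: str) -> tuple:
        """Read grammatical relations off the verb's path between two loci.

        Assumption for this sketch: the verb moves from the subject's
        locus to the object's locus.
        """
        return (self.loci[start], verb, self.loci[end])

    def resolve_pronoun(self, locus: str) -> str:
        """A pronominal sign directed at a locus refers back to its nominal."""
        return self.loci[locus]


space = SigningSpace()
space.establish("CAT", "left")   # hypothetical locus labels
space.establish("DOG", "right")

# Same three signs, opposite direction of verb movement, opposite meaning.
cat_bites_dog = space.interpret_verb("BITE", start="left", end="right")
dog_bites_cat = space.interpret_verb("BITE", start="right", end="left")
```

In this sketch the two sentences contain the same signs and differ only in the direction of the verb's movement, which is the contrast that English expresses through word order.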

2. The Acquisition Of Sign Language By Deaf Children Of Deaf Parents

Findings revealing the special grammatical structuring of a language in a visual mode lead to questions about the acquisition of sign language, its effect on nonlanguage visuospatial cognition, and its representation in the brain. Despite the dramatic surface differences between spoken and signed languages (simultaneity and the nesting of sign stems in complex co-occurring spatial patterns), the acquisition of sign language by deaf children of deaf parents shows a remarkable similarity to that of hearing children learning a spoken language. Deaf children acquire the grammatical processes of ASL at the same rate and through the same processes as hearing children acquire the grammatical processes of English, as if there were a biological timetable underlying language acquisition.

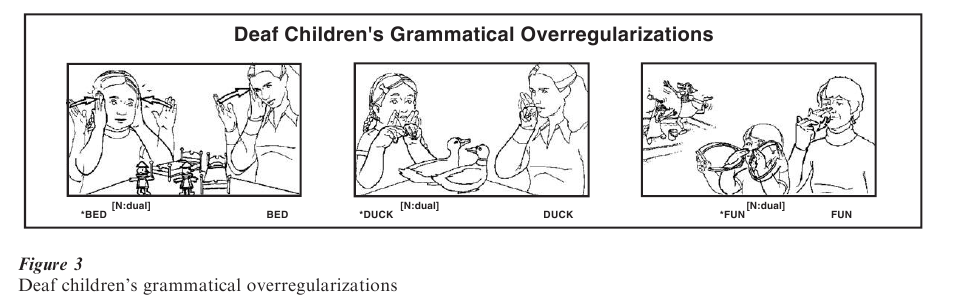

First words and first signs appear at around 12 months; combining two words or two signs, whether the child is deaf or hearing, occurs by about 18–20 months; and the rapid expansion signaling the development of grammar (morphology and syntax) occurs in both spoken and signed languages by about 3 to 3½ years. Just as hearing children show their mastery and discovery of grammatical regularities by producing overregularizations (‘I goed there,’ ‘we helded the rabbit’), deaf children learning sign language show evidence of learning the spatial morphology signaling plural forms and aspectual forms by producing overregularizations in spatial patterns (see Fig. 3).

3. Neural Systems Subserving Visuospatial Languages

The differences between signed and spoken languages provide an especially powerful tool for understanding the neural systems subserving language. Consider the following: In hearing speaking individuals, language processing is mediated generally by the left cerebral hemisphere, whereas visuospatial processing is mediated by the right cerebral hemisphere. But what about a language that is communicated using spatial contrasts rather than temporal contrasts? On the one hand, the fact that sign language has the same kind of complex linguistic structure as spoken languages and the same expressivity might lead one to expect left hemisphere mediation. On the other hand, the spatial medium so central to the linguistic structure of sign language clearly suggests right hemisphere or bilateral mediation. In fact, the answer to this question is dependent on the answer to another, deeper, question concerning the basis of the left hemisphere specialization for language.

Specifically, is the left hemisphere specialized for language processing per se (i.e., is there a brain basis for language as an independent entity)? Or is the left hemisphere’s dominance generalized to process any type of information that is presented in terms of temporal contrasts? If the left hemisphere is indeed specialized for processing language itself, sign language processing should be mediated by the left hemisphere, as is spoken language. If, however, the left hemisphere is specialized for processing fast temporal contrasts in general, one would expect sign language processing to be mediated by the right hemisphere. The study of sign languages in deaf signers permits us to pit the nature of the signal (auditory-temporal vs. visual-spatial) against the type of information (linguistic vs. nonlinguistic) that is encoded in that signal as a means of examining the neurobiological basis of language (Poizner et al. 1990).
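The logic of this test can be laid out explicitly. The short Python sketch below simply encodes the two hypotheses as stated in the paragraph above; the labels `language-specific` and `temporal-processing` and the function name are assumptions chosen for illustration, and the function covers linguistic input only.

```python
def predicted_hemisphere(hypothesis: str, signal: str) -> str:
    """Hemisphere predicted to mediate a language carried by `signal`.

    `hypothesis` and `signal` labels are illustrative names for the two
    accounts and the two signal types contrasted in the text.
    """
    if hypothesis == "language-specific":
        # Left hemisphere is specialized for language itself,
        # whatever the signal.
        return "left"
    if hypothesis == "temporal-processing":
        # Left hemisphere is specialized for fast temporal contrasts,
        # so a spatially encoded language falls to the right hemisphere.
        return "left" if signal == "auditory-temporal" else "right"
    raise ValueError(f"unknown hypothesis: {hypothesis!r}")


hypotheses = ("language-specific", "temporal-processing")
signals = ("auditory-temporal", "visual-spatial")

predictions = {
    (h, s): predicted_hemisphere(h, s) for h in hypotheses for s in signals
}

# Signal types for which the two hypotheses make different predictions.
diverging = [
    s for s in signals
    if predictions[("language-specific", s)]
    != predictions[("temporal-processing", s)]
]
```

Under these assumptions the two accounts agree about spoken language and come apart only for a visual-spatial language, which is why sign language is the critical test case.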

One program of studies (Salk) intensively examines large groups of deaf lifelong signers with focal lesions to the left or the right cerebral hemisphere. Several major areas are investigated, each focusing on a special property of the visual-gestural modality as it bears on brain organization for language. All participants are highly skilled ASL signers who used sign as a primary form of communication throughout their lives. Individuals were examined with an extensive battery of experimental probes, including formal testing of ASL at all structural levels; spatial cognitive probes sensitive to right-hemisphere damage in hearing people; and new methods of brain imaging, including structural and functional magnetic resonance imaging (MRI, fMRI), event-related potentials (ERP), and positron emission tomography (PET). This large pool of well-studied and thoroughly characterized subjects, together with new methods of brain imaging and sensitive tests of signed as well as spoken language, allows for a new perspective on the determinants of brain organization for language (Hickok and Bellugi 2000, Hickok et al. 1996).

3.1 Left Hemisphere Lesions And Sign Language Grammar

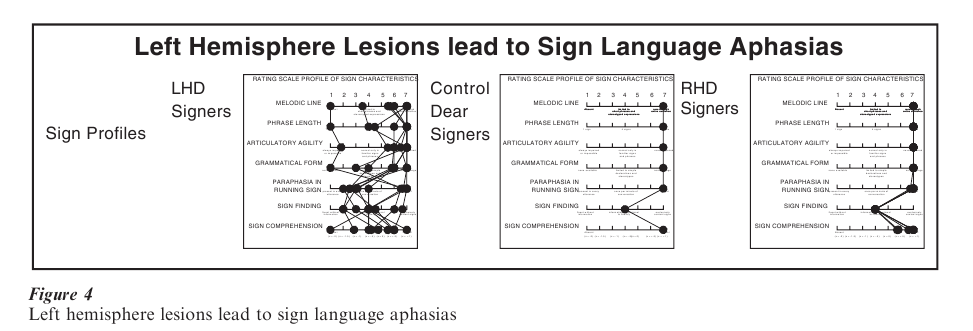

The first major finding is that so far only deaf signers with damage to the left hemisphere show sign language aphasias. Marked impairment in sign language after left hemisphere lesions was found in the majority of the left hemisphere damaged (LHD) signers, but not in any of the right hemisphere damaged (RHD) signers, whose language profiles were much like those of matched controls. Figure 4 presents a comparison of LHD, RHD, and normal control profiles of sign characteristics from the Salk Sign Diagnostic Aphasia Examination, a measure of sign aphasia. The RHD signers showed no impairment at all in any aspect of ASL grammar; their signing was rich, complex, and without deficit, even in the spatial organization underlying sentences of ASL. By contrast, signers with LHD showed markedly contrasting profiles: one was agrammatic after her stroke, producing only nouns and a few verbs with none of the grammatical apparatus of ASL; another made frequent paraphasias at the sign-internal level; and several showed many grammatical paraphasias, including neologisms, particularly in morphology.

Another deaf signer showed deficits in the spatially encoded grammatical operations which link signs in sentences, a remarkable failure in the spatially organized syntax of the language. Still another deaf signer with focal lesions to the left hemisphere revealed a dissociation not found in spoken language: a dissociation between sign and gesture, with a cleavage between capacities for sign language (severely impaired) and manual pantomime (spared). In contrast, none of the RHD signers showed any within-sentence deficits; they were completely unimpaired in sign sentences and not one showed aphasia for sign language (in contrast to their marked nonlanguage spatial deficits, described below) (Hickok and Bellugi 2000, Hickok et al. 1998).

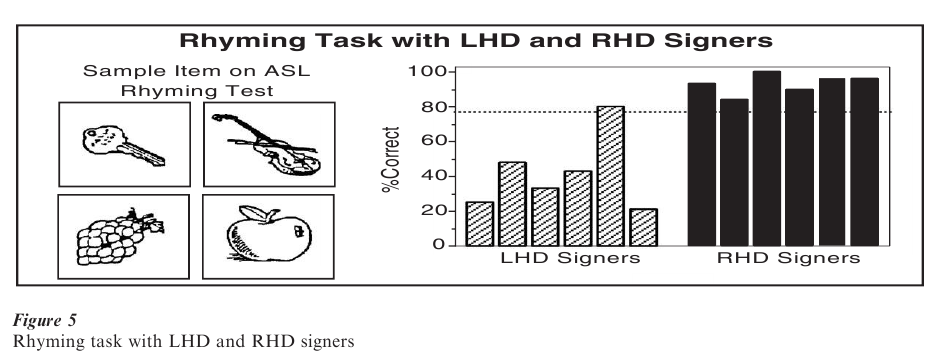

Moreover, there are dramatic differences in performance between left- and right-hemisphere damaged signers in formal experimental probes of sign competence. For example, a test of the equivalent of rhyming in ASL provides a probe of phonological processing. Two signs ‘rhyme’ if they are similar in all but one phonological parametric value such as handshape, location, or movement. To tap this aspect of phonological processing, subjects are presented with an array of pictured objects and asked to pick out the two objects whose signs rhyme (Fig. 5). The ASL signs for key and apple share the same handshape and movement, and differ only in location, and thus are like rhymed pairs. LHD signers are significantly impaired relative to RHD signers and controls on this test, another sign of the marked difference in effects of right- and left-hemisphere lesions on signing. On other tests of ASL processing at different structural levels, there are similar distinctions between left- and right-lesioned signers, with the right-lesioned signers much like the controls, but the signers with left hemisphere lesions significantly impaired in language processing. Moreover, studies have found that there can be differential breakdown of linguistic components of sign language (lexicon and grammar) with different left hemisphere lesions.
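The rhyme criterion used in this probe can be stated as a simple comparison over the three parameters. The Python sketch below is a minimal illustration under stated assumptions: each sign is reduced to a handshape, a location, and a movement value, and the values themselves (`hs1`, `loc1`, `mv1`, and so on) and the two distractor items are placeholders, not phonological transcriptions of real signs. Only the relation between KEY and APPLE (same handshape and movement, different location) comes from the text.

```python
from itertools import combinations
from typing import NamedTuple


class Sign(NamedTuple):
    gloss: str
    handshape: str
    location: str
    movement: str


PARAMETERS = ("handshape", "location", "movement")


def differing_parameters(a: Sign, b: Sign) -> list:
    """Return the names of the parameters on which two signs differ."""
    return [p for p in PARAMETERS if getattr(a, p) != getattr(b, p)]


def rhyming_pairs(signs: list) -> list:
    """Pairs of signs that match on all parameters but exactly one."""
    return [
        (a.gloss, b.gloss)
        for a, b in combinations(signs, 2)
        if len(differing_parameters(a, b)) == 1
    ]


# Placeholder parameter values; the distractors are hypothetical items.
array = [
    Sign("KEY", "hs1", "loc1", "mv1"),
    Sign("APPLE", "hs1", "loc2", "mv1"),
    Sign("DISTRACTOR-A", "hs2", "loc3", "mv2"),
    Sign("DISTRACTOR-B", "hs3", "loc1", "mv3"),
]

pairs = rhyming_pairs(array)
```

With these placeholder values, the only pair that differs on exactly one parameter is KEY and APPLE, mirroring the choice a subject is asked to make from the pictured array.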

3.2 Right Hemisphere Lesions And Nonlanguage Spatial Processing

These results from language testing contrast sharply with results on tests of nonlanguage spatial cognition. RHD signers are significantly more impaired on a wide range of spatial cognitive tasks (block design, drawing, hierarchical processing) than LHD signers, who show little impairment. Drawings of many of the RHD signers (but not those with LHD) show severe spatial distortions, neglect of the left side of space, and lack of perspective. Yet, astonishingly, these severe spatial deficits among RHD signers do not affect their competence in a spatially nested language, ASL. The case of a signer with a right parietal lesion leading to severe left neglect is of special interest: whereas his drawings show characteristic omissions on the left side of space, his signing is impeccable, with signs and spatially organized syntax perfectly maintained.

The finding that sign aphasia follows left hemisphere lesions but not right hemisphere lesions provides a strong case for a modality-independent linguistic basis for the left hemisphere specialization for language. These data suggest that the left hemisphere is predisposed biologically for language, independent of language modality. Thus, hearing and speech are not necessary for the development of hemisphere specialization—sound is not crucial. Furthermore, the finding of a dissociation between competence in a spatial language and competence in nonlinguistic spatial cognition demonstrates that it is the type of information that is encoded in a signal (i.e., linguistic vs. spatial information) rather than the nature of the signal itself (i.e., spatial vs. temporal) that determines the organization of the brain for higher cognitive functions.

4. Language, Modality, And The Brain

Analysis of patterns of breakdown in deaf signers provides new perspectives on the determinants of hemispheric specialization for language. First, the data show that hearing and speech are not necessary for the development of hemispheric specialization: sound is not crucial. Second, it is the left hemisphere that is dominant for sign language. Deaf signers with damage to the left hemisphere show marked sign language deficits but relatively intact capacity for processing nonlanguage visuospatial relations. Signers with damage to the right hemisphere show the reverse pattern. Thus, not only is there left hemisphere specialization for language functioning, there is also complementary specialization for nonlanguage spatial functioning. The fact that grammatical information in sign language is conveyed via spatial manipulation does not alter this complementary specialization.

Furthermore, components of sign language (lexicon and grammar) can be selectively impaired, reflecting differential breakdown of sign language along linguistically relevant lines. These data suggest that the left hemisphere in humans may have an innate predisposition for language, regardless of the modality. Since sign language involves an interplay between visuospatial and linguistic relations, studies of sign language breakdown in deaf signers may, in the long run, bring us closer to the fundamental principles underlying hemispheric specialization.

Bibliography:

- Bellugi U 1980 The structuring of language: Clues from the similarities between signed and spoken language. In: Bellugi U, Studdert-Kennedy M (eds.) Signed and Spoken Language: Biological Constraints on Linguistic Form. Dahlem Konferenzen. Weinheim Deerfield Beach, FL, pp. 115–40

- Bellugi U, Poizner H, Klima E S 1989 Language, modality and the brain. Trends in Neurosciences 10: 380–8

- Emmorey K, Kosslyn S M, Bellugi U 1993 Visual imagery and visual-spatial language: Enhanced imagery abilities in deaf and hearing ASL signers. Cognition 46: 139–81

- Hickok G, Bellugi U 2000 The signs of aphasia. In: Boller F, Grafman J (eds.) Handbook of Neuropsychology, 2nd edn. Elsevier Science Publishers, Amsterdam, The Netherlands

- Hickok G, Bellugi U, Klima E S 1996 The neurobiology of signed language and its implications for the neural organization of language. Nature 381: 699–702

- Hickok G, Bellugi U, Klima E S 1998 The basis of the neural organization for language: Evidence from sign language aphasia. Reviews in the Neurosciences 8: 205–22

- Klima E S, Bellugi U 1988 The Signs of Language. Harvard University Press, Cambridge, MA

- Poizner H, Klima E S, Bellugi U 1990 What the Hands Reveal About the Brain. MIT Press Bradford Books, Cambridge, MA